https://github.com/kuroko1t/gdeep

simple golang deep learning framework

deep-learning deeplearning go golang machine-learning machinelearning

Last synced: 4 months ago

- Host: GitHub

- URL: https://github.com/kuroko1t/gdeep

- Owner: kuroko1t

- License: apache-2.0

- Created: 2018-06-12T15:00:25.000Z (over 7 years ago)

- Default Branch: master

- Last Pushed: 2021-07-05T03:15:59.000Z (over 4 years ago)

- Last Synced: 2025-04-15T19:53:53.937Z (8 months ago)

- Topics: deep-learning, deeplearning, go, golang, machine-learning, machinelearning

- Language: Go

- Homepage:

- Size: 14 MB

- Stars: 10

- Watchers: 2

- Forks: 4

- Open Issues: 0

Metadata Files:

- Readme: README.md

- Funding: .github/FUNDING.yml

- License: LICENSE

Awesome Lists containing this project

- awesome-ai-ml-dl - GDeep - Deep learning library written in golang

README

# gdeep

A deep learning library written in Go. Contributions are welcome! The project is still under development.

# Getting Started

```
git clone https://github.com/kuroko1t/gdeep
cd gdeep
go run example/mlpMnist.go
```

[sample](https://github.com/kuroko1t/gdeep/blob/master/example/mlpMnist.go)

## distributed

* go get github.com/kuroko1t/gmpi

* install Open MPI (version > 3.0)

# Run

* cpu

```
go run example/mlpMnist.go
```

* distributed

```
mpirun -np 2 -H host1:1,host2:1 go run example/mlpMnist_allreduce.go
```
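Data-parallel training of this kind usually has every process compute gradients on its own batch and then average them across processes with an allreduce. The sketch below simulates that averaging step in a single process. It does not use gmpi or MPI at all; `allReduceMean` is a hypothetical helper written for illustration, and whether `mlpMnist_allreduce.go` averages gradients in exactly this way is an assumption.

```go
package main

import "fmt"

// allReduceMean overwrites every worker's gradient slice with the
// element-wise mean over all workers. Hypothetical helper: it only
// simulates in one process what an MPI allreduce would do across ranks.
func allReduceMean(grads [][]float64) {
	if len(grads) == 0 {
		return
	}
	mean := make([]float64, len(grads[0]))
	for _, g := range grads {
		for i, v := range g {
			mean[i] += v
		}
	}
	for i := range mean {
		mean[i] /= float64(len(grads))
	}
	for _, g := range grads {
		copy(g, mean)
	}
}

func main() {
	// Two simulated workers, each holding a gradient for two parameters.
	grads := [][]float64{{1, 3}, {3, 5}}
	allReduceMean(grads)
	fmt.Println(grads) // [[2 4] [2 4]]
}
```

After the call every worker holds the same averaged gradient, so applying the same optimizer step on each worker keeps the model replicas in sync.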

# Document

https://godoc.org/github.com/kuroko1t/gdeep

# implemented networks

Only 2D input is supported so far.

### layer

* Dense

* Dropout
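As a rough illustration of what these two layer types compute in the forward pass, here is a minimal sketch on plain slices. It does not use gdeep or gmat; `denseForward` and `dropoutForward` are hypothetical names, and the inverted-dropout rescaling shown here is a common convention that gdeep may or may not follow.

```go
package main

import (
	"fmt"
	"math/rand"
)

// denseForward computes y = x*W + b for a batch x of shape [n][in],
// weights w of shape [in][out] and a bias b of length out.
func denseForward(x, w [][]float64, b []float64) [][]float64 {
	y := make([][]float64, len(x))
	for i := range x {
		y[i] = make([]float64, len(b))
		for j := range b {
			sum := b[j]
			for k := range w {
				sum += x[i][k] * w[k][j]
			}
			y[i][j] = sum
		}
	}
	return y
}

// dropoutForward zeroes each element with probability rate and rescales
// the kept elements by 1/(1-rate) (inverted dropout, an assumption).
// sample must return uniform values in [0, 1), for example rand.Float64.
func dropoutForward(x [][]float64, rate float64, sample func() float64) [][]float64 {
	keep := 1 - rate
	out := make([][]float64, len(x))
	for i := range x {
		out[i] = make([]float64, len(x[i]))
		for j, v := range x[i] {
			if sample() < keep {
				out[i][j] = v / keep
			}
		}
	}
	return out
}

func main() {
	x := [][]float64{{1, 2}}
	w := [][]float64{{1, 0, -1}, {0.5, 0.5, 0.5}}
	b := []float64{0, 1, 0}
	y := denseForward(x, w, b)
	fmt.Println(y) // [[2 2 0]]
	// The dropout output is random, so no fixed result is shown.
	fmt.Println(dropoutForward(y, 0.2, rand.Float64))
}
```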

### activation

* Relu

* Sigmoid
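For reference, the two activations are the standard element-wise functions below. This is a standalone sketch on scalars, not gdeep's implementation; the function names are illustrative.

```go
package main

import (
	"fmt"
	"math"
)

// relu returns max(0, x).
func relu(x float64) float64 {
	if x > 0 {
		return x
	}
	return 0
}

// sigmoid returns 1 / (1 + e^(-x)), which maps any real x into (0, 1).
func sigmoid(x float64) float64 {
	return 1 / (1 + math.Exp(-x))
}

func main() {
	fmt.Println(relu(-1.5), relu(2)) // 0 2
	fmt.Println(sigmoid(0))          // 0.5
}
```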

### loss

* crossentropy
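The cross-entropy loss is typically paired with a softmax over the network outputs. The following is a minimal sketch for a single sample with a one-hot target. It is independent of gdeep, and the helper names are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// softmax converts raw scores into probabilities. The maximum score is
// subtracted first so that math.Exp cannot overflow.
func softmax(z []float64) []float64 {
	maxV := z[0]
	for _, v := range z[1:] {
		if v > maxV {
			maxV = v
		}
	}
	out := make([]float64, len(z))
	sum := 0.0
	for i, v := range z {
		out[i] = math.Exp(v - maxV)
		sum += out[i]
	}
	for i := range out {
		out[i] /= sum
	}
	return out
}

// crossEntropy returns -sum(t[i] * log(p[i])) for probabilities p and a
// target distribution t. Terms with t[i] == 0 are skipped.
func crossEntropy(p, t []float64) float64 {
	loss := 0.0
	for i := range p {
		if t[i] > 0 {
			loss -= t[i] * math.Log(p[i])
		}
	}
	return loss
}

func main() {
	p := softmax([]float64{0, 0})
	fmt.Println(p)                                       // [0.5 0.5]
	fmt.Printf("%.4f\n", crossEntropy(p, []float64{1, 0})) // 0.6931
}
```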

### optimization

* Sgd

* Momentum
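The two optimizers differ only in how a gradient becomes a parameter update. The sketch below shows the textbook update rules on a flat parameter slice. The function names and the exact momentum formulation (`v = mu*v - lr*grad`, then `w += v`) are assumptions for illustration; gdeep's own `Sgd` and `Momentum` types may be organized differently.

```go
package main

import "fmt"

// sgdStep applies w -= lr * grad in place.
func sgdStep(w, grad []float64, lr float64) {
	for i := range w {
		w[i] -= lr * grad[i]
	}
}

// momentumStep keeps a velocity v per parameter and applies
// v = mu*v - lr*grad followed by w += v, both in place.
func momentumStep(w, v, grad []float64, lr, mu float64) {
	for i := range w {
		v[i] = mu*v[i] - lr*grad[i]
		w[i] += v[i]
	}
}

func main() {
	grad := []float64{0.5}

	w := []float64{1}
	sgdStep(w, grad, 0.1)
	fmt.Printf("sgd: %.3f\n", w[0]) // sgd: 0.950

	w = []float64{1}
	v := []float64{0}
	momentumStep(w, v, grad, 0.1, 0.9)
	momentumStep(w, v, grad, 0.1, 0.9)
	fmt.Printf("momentum: %.3f\n", w[0]) // momentum: 0.855
}
```

With a constant gradient, the second momentum step moves further than the first because the velocity accumulates, which is the intended effect of the method.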

# Sample

* mlp sample

```golang
package main

import (
	"github.com/kuroko1t/GoMNIST"
	"github.com/kuroko1t/gdeep"
	"github.com/kuroko1t/gmat"
)

func main() {
	// Load MNIST; only the training set is used in this sample.
	train, _, _ := GoMNIST.Load("./data")
	trainDataSize := len(train.ImagesFloatNorm)

	batchSize := 128
	inputSize := 784
	hiddenSize := 20
	outputSize := 10
	learningRate := 0.01
	epochNum := 1
	iterationNum := trainDataSize * epochNum / batchSize

	// Build the network: two hidden Dense layers, each followed by
	// Relu and Dropout, then the output layer with softmax loss.
	dropout1 := &gdeep.Dropout{}
	dropout2 := &gdeep.Dropout{}
	layer := []gdeep.LayerInterface{}
	gdeep.LayerAdd(&layer, &gdeep.Dense{}, []int{inputSize, hiddenSize})
	gdeep.LayerAdd(&layer, &gdeep.Relu{})
	gdeep.LayerAdd(&layer, dropout1, 0.2)
	gdeep.LayerAdd(&layer, &gdeep.Dense{}, []int{hiddenSize, hiddenSize})
	gdeep.LayerAdd(&layer, &gdeep.Relu{})
	gdeep.LayerAdd(&layer, dropout2, 0.2)
	gdeep.LayerAdd(&layer, &gdeep.Dense{}, []int{hiddenSize, outputSize})
	gdeep.LayerAdd(&layer, &gdeep.SoftmaxWithLoss{})

	momentum := &gdeep.Momentum{learningRate, 0.9}

	iter := 0
	for i := 0; i < iterationNum; i++ {
		// Wrap around to the first batch when the next slice
		// would run past the end of the training data.
		if (iter+1)*batchSize > trainDataSize {
			iter = 0
		}
		imageBatch := train.ImagesFloatNorm[iter*batchSize : (iter+1)*batchSize]
		labelBatch := train.LabelsOneHot[iter*batchSize : (iter+1)*batchSize]
		x := gmat.Make2DInitArray(imageBatch)
		t := gmat.Make2DInitArray(labelBatch)
		loss := gdeep.Run(layer, momentum, x, t)
		gdeep.AvePrint(loss, "loss")
		iter++
	}
}
```

* distributed

[sample](https://github.com/kuroko1t/gdeep/blob/master/example/mlpMnist_allreduce.go)

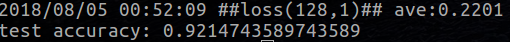

* MNIST test accuracy

# Done

* CPU parallelization of the dot product

* saving and restoring learned parameters

* MLP on GPU
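To give an idea of what saving and restoring learned parameters involves, here is a minimal round-trip sketch using Go's standard `encoding/gob` package. The `Params` struct and the `saveParams` / `loadParams` helpers are hypothetical and written only for this example; the README does not describe gdeep's actual save format or API, so none of this should be read as its interface.

```go
package main

import (
	"bytes"
	"encoding/gob"
	"fmt"
)

// Params is a hypothetical container for one layer's learned values.
type Params struct {
	W [][]float64 // weight matrix
	B []float64   // bias vector
}

// saveParams serializes p with encoding/gob and returns the bytes,
// which could then be written to a file.
func saveParams(p Params) ([]byte, error) {
	var buf bytes.Buffer
	if err := gob.NewEncoder(&buf).Encode(p); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// loadParams decodes bytes produced by saveParams.
func loadParams(data []byte) (Params, error) {
	var p Params
	err := gob.NewDecoder(bytes.NewReader(data)).Decode(&p)
	return p, err
}

func main() {
	p := Params{
		W: [][]float64{{1, 2}, {3, 4}},
		B: []float64{0.5, -0.5},
	}
	data, err := saveParams(p)
	if err != nil {
		panic(err)
	}
	restored, err := loadParams(data)
	if err != nil {
		panic(err)
	}
	fmt.Println(restored.W, restored.B) // [[1 2] [3 4]] [0.5 -0.5]
}
```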

# Todo

* CNN

# License

gdeep is licensed under the Apache License, Version 2.0.