Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/lllyasviel/sketchKeras

an u-net with some algorithm to take sketch from paints


Last synced: 3 months ago


- Host: GitHub

- URL: https://github.com/lllyasviel/sketchKeras

- Owner: lllyasviel

- License: apache-2.0

- Created: 2017-05-04T13:54:46.000Z (almost 8 years ago)

- Default Branch: master

- Last Pushed: 2017-05-04T15:43:17.000Z (almost 8 years ago)

- Last Synced: 2024-08-01T15:13:19.064Z (6 months ago)

- Language: Python

- Size: 1.54 MB

- Stars: 453

- Watchers: 25

- Forks: 75

- Open Issues: 5


Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome - sketchKeras - an u-net with some algorithm to take sketch from paints (Python)

README

# sketchKeras

A U-Net combined with some algorithms to extract the sketch from a painting.

# requirements

* Keras

* OpenCV

* TensorFlow or Theano

* NumPy

# download model

See the releases of this repository.

# performance

Currently there are many edge-detection algorithms and neural networks, but few of them perform well on paintings, especially those from comics or animation. Most existing methods just detect edges and then draw lines along them. What we need instead is a method that converts a painting into a sketch that looks as if a painter had drawn the outline of the picture. This matters when we want to train a neural network to colorize pictures. **(Paper is on the way)**

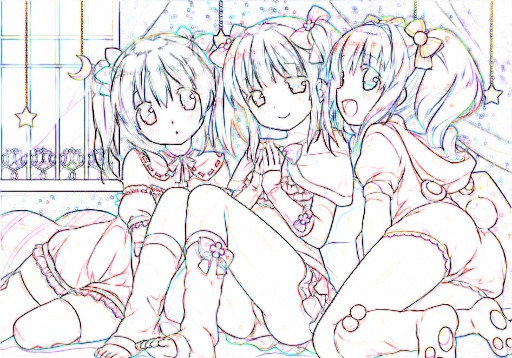

Here is an example of a hand-drawn (artificial) sketch for reference.

Here is a summary of the existing methods for handling this problem.

* use **OpenCV** and implement a high-pass effect to get the edges

* train a neural network (HED edge detection) / (**PaintsChainer's lnet**)

* use **sketchKeras**, which combines an algorithm with neural networks

Take this picture as an example *(the picture is from the internet and I am still looking for the author)*.

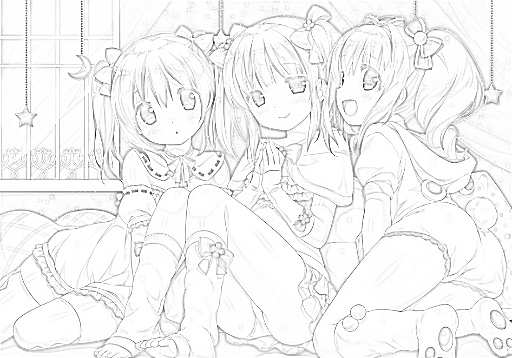

If we use a high-pass algorithm via OpenCV or something similar, we may get this one:

As we can see, the result is far from a hand-drawn sketch. To get a better result, we can tune the parameters and enhance the picture, which gives this one:

The result is still not good. People like to add shading to their drawings with dense lines or dots, and these become noise that disturbs the high-pass algorithm. We could of course tune the parameters or apply denoising to improve a given result, but drawings differ from one another, so it is impossible to handle them all automatically.
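For reference, the high-pass baseline discussed above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the code used to produce the pictures here: the function names `box_blur` and `high_pass_sketch`, the `radius` and `gain` parameters, and the choice of a box blur as the low-pass stage are all hypothetical. An OpenCV version would typically use a Gaussian blur instead, and the exact filter and parameters behind the results shown are not given in this README.

```python
import numpy as np


def box_blur(gray, radius):
    """Low-pass filter: mean over a (2*radius+1)^2 window, edges replicated."""
    k = 2 * radius + 1
    padded = np.pad(gray.astype(np.float64), radius, mode="edge")
    # Integral image with a leading zero row/column: each window sum costs O(1).
    integral = np.pad(padded, ((1, 0), (1, 0)), mode="constant").cumsum(0).cumsum(1)
    h, w = gray.shape
    window_sum = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
                  - integral[k:k + h, :w] + integral[:h, :w])
    return window_sum / (k * k)


def high_pass_sketch(gray, radius=3, gain=1.0):
    """Turn a grayscale uint8 image into a white-background line image.

    `radius` and `gain` are hypothetical tuning knobs (assumptions).
    """
    gray = gray.astype(np.float64)
    # High-pass = image minus its low-pass (blurred) version.
    detail = gray - box_blur(gray, radius)
    # Dark strokes lie below their local mean, so keep only negative detail.
    lines = np.clip(-detail * gain, 0, 255)
    return (255 - lines).astype(np.uint8)


if __name__ == "__main__":
    # Synthetic test picture: white canvas with one black vertical stroke.
    canvas = np.full((32, 32), 255, dtype=np.uint8)
    canvas[:, 16] = 0
    result = high_pass_sketch(canvas)
    print(result[10, 2], result[10, 16])  # flat area vs. stroke
```

Because the filter responds to every pixel that is darker than its neighbourhood, hatching and dotted shading pass through just like outlines, which is the noise problem described above.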

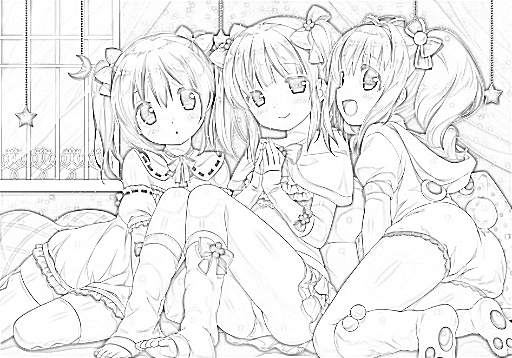

Then let us try the **lnet of PaintsChainer** (similar to HED)

The result from the neural network looks different from those of the algorithm. However, it is still not very good.

The author of PaintsChainer uses a threshold to suppress noise and normalize the lines, like this:

In this picture we can clearly see the noise, especially near the eyes and in the shadow of the hair. Thresholding can filter out some noise, but some useful lines are dropped as well. Last but not least, the lines are too coarse and thick. Here is a thresholded hand-drawn sketch for reference:

As we can see, although the picture is denoised by thresholding, it is still far from a real hand-drawn sketch. So there is still much room for improvement in PaintsChainer.
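The thresholding step mentioned above could look roughly like this. It is a minimal sketch under assumptions: the function name `threshold_lines` and the `cutoff` value of 128 are hypothetical, and the threshold PaintsChainer actually uses is not stated in this README.

```python
import numpy as np


def threshold_lines(line_map, cutoff=128):
    """Binarize a white-background line map.

    Pixels darker than `cutoff` become black (0), everything else white (255).
    `cutoff` is an illustrative value, not PaintsChainer's real setting.
    """
    line_map = np.asarray(line_map)
    return np.where(line_map < cutoff, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    # One row of a line map: background, strong line, light noise,
    # faint but meaningful line, background.
    row = np.array([[255, 40, 200, 140, 255]], dtype=np.uint8)
    print(threshold_lines(row))
```

In this toy row the light noise (200) is removed, but the faint line (140) disappears with it, and the surviving line is forced to pure black. This mirrors the trade-off described above: a single global cutoff cannot tell faint strokes from noise.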

Finally, let us see the result of sketchKeras:

These four results are generated by sketchKeras. You can use your favorite one to generate your own training dataset for colorization networks.

---

## another example

(The picture is from the internet and I am still looking for the author.)

raw picture:

OpenCV and high-pass (all detail and noise remain, so it is not suitable for training a colorization network)

OpenCV and high-pass, enhanced (still not very good; the picture is turning into a detailed grayscale picture rather than a sketch)

PaintsChainer's lnet (all detail and noise remain, especially in the hair)

PaintsChainer's lnet (thresholded) (just look at the hair)

The pictures below are generated by sketchKeras. It can drop some noise and unimportant detail to achieve a better result.

## ability to generate colored highlighted sketches

As you can see, sketchKeras is able to generate colored, highlighted sketches.