https://github.com/lucasxlu/LagouJob

Data Analysis & Mining for lagou.com

- Host: GitHub

- URL: https://github.com/lucasxlu/LagouJob

- Owner: lucasxlu

- License: apache-2.0

- Created: 2016-03-15T01:51:57.000Z

- Default Branch: master

- Last Pushed: 2019-04-19T01:59:03.000Z

- Last Synced: 2024-08-07T22:35:30.125Z

- Topics: data-analysis, data-mining, lagou, machine-learning, nlp, python3, web-crawler

- Language: Python

- Homepage: https://www.zhihu.com/question/36132174/answer/94392659

- Size: 28.1 MB

- Stars: 259

- Watchers: 29

- Forks: 129

- Open Issues: 1

Metadata Files:

- Readme: README.md

- License: LICENSE

README

# Data Analysis of [Lagou Job](http://www.lagou.com/)

## Introduction

This repository holds the code for analyzing job data from [Lagou](http://www.lagou.com/).

The main features are:

1. Crawl job data from [Lagou](http://www.lagou.com/) to get the latest Internet job postings.

2. Collect IP proxies from [XiCiDaiLi](https://www.xicidaili.com/nn/1).

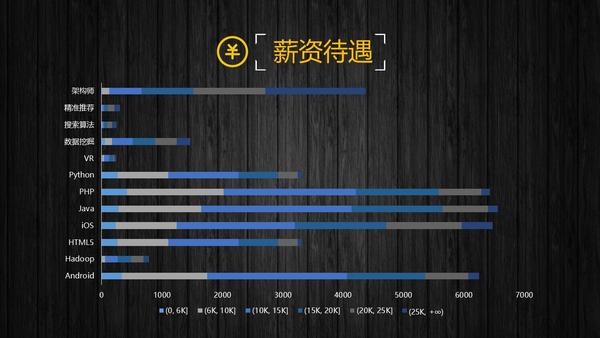

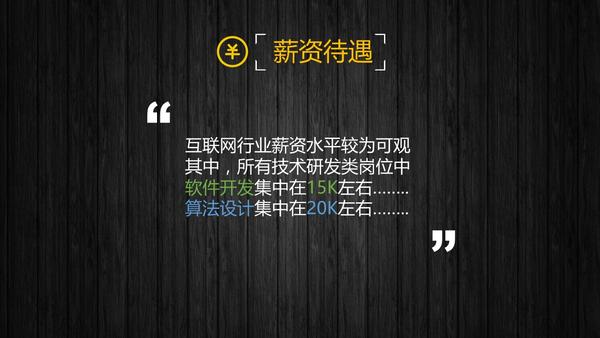

3. Analyze and visualize the data.

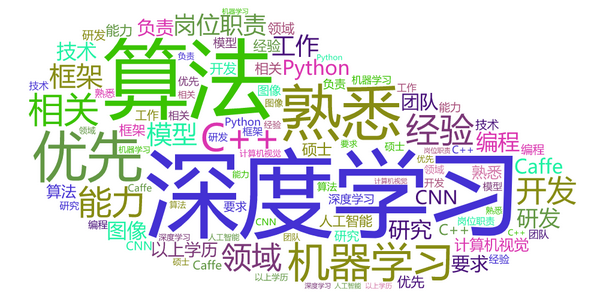

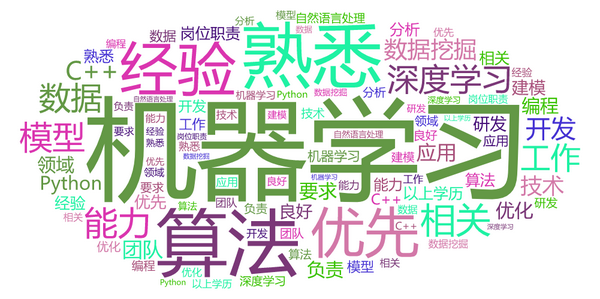

4. Crawl job details and generate a word cloud as the __Job Impression__.

5. Store interviewees' comments in [MongoDB](https://docs.mongodb.com/) as training data for a machine-learning [NLP](http://baike.baidu.com/item/nlp/25220#viewPageContent) task.
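
Item 2 collects IP proxies so the crawler can spread its requests across several addresses. This README doesn't describe the format that `proxy_crawler.py` produces, so the following is a hypothetical sketch, assuming proxies are plain `ip:port` strings. `ProxyPool` is an illustrative name, not part of this repository: it rotates through the proxies round-robin, builds a standard-library `urllib` opener for the next one, and lets you drop proxies that stop working. It does not cover the PhantomJS path.

```python
import urllib.request


class ProxyPool:
    """Round-robin pool of HTTP proxies (hypothetical helper, not from this repo).

    Assumes each proxy is an "ip:port" string, e.g. as scraped by a proxy crawler.
    """

    def __init__(self, proxies):
        # De-duplicate while keeping the original order.
        self._proxies = list(dict.fromkeys(proxies))
        self._cursor = 0

    def __len__(self):
        return len(self._proxies)

    def next_proxy(self):
        """Return the next proxy in round-robin order."""
        if not self._proxies:
            raise RuntimeError("proxy pool is empty")
        proxy = self._proxies[self._cursor % len(self._proxies)]
        self._cursor += 1
        return proxy

    def discard(self, proxy):
        """Drop a proxy that timed out or was blocked."""
        if proxy in self._proxies:
            self._proxies.remove(proxy)

    def build_opener(self):
        """Return (proxy, opener) where the opener routes HTTP(S) via that proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({
            "http": f"http://{proxy}",
            "https": f"http://{proxy}",
        })
        return proxy, urllib.request.build_opener(handler)
```

In a crawl loop you would call `pool.build_opener()`, fetch with `opener.open(url, timeout=10)`, and call `pool.discard(proxy)` when that raises `urllib.error.URLError`, since free proxies often go offline quickly.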

## Prerequisites

1. Install third-party libraries:

```sudo pip3 install -r requirements.txt```

2. (Optional) Install [MongoDB](https://docs.mongodb.com/) and start the MongoDB service:

```sudo service mongod start```

## How to Use

1. Clone this project from [GitHub](https://github.com/lucasxlu/LagouJob.git).

2. Lagou has been upgrading its anti-crawler measures frequently. I suggest running [proxy_crawler.py](./spider/proxy_crawler.py) to collect IP proxies and executing the code with [PhantomJS](http://phantomjs.org/).

3. Run [m_lagou_spider.py](spider/m_lagou_spider.py) to crawl job data; it generates a collection of Excel files in the ```./data``` directory.

4. Run [hot_words_generator.py](analysis/hot_words_generator.py) to segment the text into words; it returns the __TOP-30__ hot words and a word cloud figure.
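
Step 4 boils down to counting word frequencies over the crawled job text and keeping the most common ones. The repository's own segmentation and stopword choices aren't shown in this README, so this is a minimal standard-library sketch of the counting step only. `top_hot_words` is a hypothetical helper, and its default regex tokenizer is a stand-in: Chinese text has no spaces between words, so for real Lagou data you would pass a word segmenter as `tokenize` (for example `jieba.cut`, assuming the jieba package is installed).

```python
import re
from collections import Counter


def _simple_tokenize(text):
    """Placeholder tokenizer: lowercase runs of word characters.

    Adequate for English keywords; for Chinese text swap in a real segmenter.
    """
    return re.findall(r"\w+", text.lower())


def top_hot_words(texts, k=30, stopwords=frozenset(), tokenize=_simple_tokenize):
    """Return the k most frequent words across ``texts`` as (word, count) pairs."""
    counts = Counter()
    for text in texts:
        for word in tokenize(text):
            word = word.strip()
            # Skip stopwords and one-character tokens, which are usually noise.
            if len(word) > 1 and word not in stopwords:
                counts[word] += 1
    return counts.most_common(k)


if __name__ == "__main__":
    docs = ["Python and SQL required", "python, Spark, SQL", "Java or Python"]
    print(top_hot_words(docs, k=2, stopwords={"and", "or"}))
    # → [('python', 3), ('sql', 2)]
```

The `(word, count)` pairs can be turned into a dict and handed to a word cloud renderer; for instance, the `wordcloud` package's `WordCloud.generate_from_frequencies` accepts such a mapping (rendering Chinese words also needs a CJK font passed via `font_path`).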

## Analysis Results

## Report

* For technical details, please refer to my answer at [Zhihu](https://www.zhihu.com/question/36132174/answer/94392659).

* The PDF report can be downloaded from [here](https://lucasxlu.github.io/blog/projects/LagouJob.pdf).

## Change Log

- [V2.0] - 2019.04. Switched to [PhantomJS](http://phantomjs.org/) and added IP proxies.

- [V1.2] - 2017.05. Rewrote the WordCloud visualization module.

- [V1.0] - 2017.04. Moved the spider to the mobile version of Lagou.

- [V0.8] - 2016.05. Finished the Lagou PC web spider.

## LICENSE

[Apache-2.0](./LICENSE)