https://github.com/luyadev/luya-module-crawler

Crawl a website and provide intelligent search results

crawler hacktoberfest intelligent-search luya search yii2

Last synced: about 2 months ago

- Host: GitHub

- URL: https://github.com/luyadev/luya-module-crawler

- Owner: luyadev

- License: mit

- Created: 2015-07-27T09:03:58.000Z (over 10 years ago)

- Default Branch: master

- Last Pushed: 2023-10-31T07:04:52.000Z (about 2 years ago)

- Last Synced: 2025-01-31T09:34:16.406Z (11 months ago)

- Topics: crawler, hacktoberfest, intelligent-search, luya, search, yii2

- Language: PHP

- Homepage: https://luya.io

- Size: 679 KB

- Stars: 7

- Watchers: 4

- Forks: 5

- Open Issues: 0

Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- License: LICENSE.md

README

# Crawler

An easy-to-use full-website crawler that provides search results on your page. The crawler module gathers all information about the pages on the configured domain and stores the index in the database. From there you can run search queries against the index to provide search results. There are also helper methods which provide intelligent search results by splitting the input into multiple search queries (used by default).
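To make the idea of "splitting the input into multiple search queries" more concrete, here is a minimal sketch. It is not the module's actual algorithm: the function name `splitSearchInput` and the `$minLength` parameter are hypothetical and only illustrate how one input string could be turned into several independent search terms.

```php
<?php
// Hypothetical helper, not part of luya-module-crawler.
// Splits a search input on whitespace, lowercases the words (ASCII only),
// drops words shorter than $minLength bytes and removes duplicates.
function splitSearchInput(string $input, int $minLength = 2): array
{
    $words = preg_split('/\s+/', strtolower(trim($input)), -1, PREG_SPLIT_NO_EMPTY);

    $terms = array_filter($words, function (string $word) use ($minLength): bool {
        return strlen($word) >= $minLength;
    });

    // array_unique() keeps the first occurrence, array_values() reindexes the list
    return array_values(array_unique($terms));
}

// Each resulting term could then be used for its own index lookup.
print_r(splitSearchInput('LUYA  crawler a crawler module'));
// ['luya', 'crawler', 'module']
```

Running one query per term and merging the results is one way a search can still return matches when the full input string does not appear verbatim on any page.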

## Installation

Install the module via composer:

```sh
composer require luyadev/luya-module-crawler:^3.0
```

After installing via Composer, add the module to the `modules` section of your configuration file.

```php
'modules' => [
    //...
    'crawler' => [
        'class' => 'luya\crawler\frontend\Module',
        'baseUrl' => 'https://luya.io',
        /*
        'filterRegex' => [
            '#.html#i', // filter all links with `.html`
            '#/agenda#i', // filter all links which contain the word agenda with a leading slash
            '#date\=#i', // filter all links containing `date=`, for example an agenda which would generate infinite links
        ],
        'on beforeProcess' => function() {
            // optionally add or filter data from the BuilderIndex, which will be processed to the Index afterwards
        },
        'on afterIndex' => function() {
            // optionally add or filter data from the freshly built Index
        }
        */
    ],
    'crawleradmin' => 'luya\crawler\admin\Module',
]
```

> `baseUrl` is the domain you want to crawl.
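Before enabling `filterRegex`, it can help to check which links a set of patterns would exclude. The sketch below assumes that a link is skipped as soon as any pattern matches its URL; that matches the comments in the configuration, but the module's internal matching is not shown here. The helper `previewFilter` and the sample URLs are hypothetical.

```php
<?php
// Hypothetical helper, not part of luya-module-crawler.
// Returns the URLs that are NOT matched by any of the given PCRE patterns.
function previewFilter(array $urls, array $filterRegex): array
{
    return array_values(array_filter($urls, function (string $url) use ($filterRegex): bool {
        foreach ($filterRegex as $pattern) {
            if (preg_match($pattern, $url) === 1) {
                return false; // matched a filter, so the link would be skipped
            }
        }
        return true;
    }));
}

// The same patterns as in the configuration example
$filters = ['#.html#i', '#/agenda#i', '#date\=#i'];

// Sample URLs for illustration only
$urls = [
    'https://luya.io/guide',
    'https://luya.io/page.html',
    'https://luya.io/agenda?date=2024-01-01',
    'https://luya.io/news?date=2024-01-01',
];

print_r(previewFilter($urls, $filters));
// ['https://luya.io/guide']
```

Note that the unescaped dot in `#.html#i` matches any character followed by `html`; write `#\.html#i` if only a literal `.html` should match.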

After setting up the module in your config, run the migrations and the import command (to set up permissions):

```sh
./vendor/bin/luya migrate
./vendor/bin/luya import
```

## Running the Crawler

To run the crawler process, use the crawler command `crawl`. You should put this command in a cronjob to make sure your index is up to date:

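> Make sure your page is served as UTF-8 (for example with `<meta charset="utf-8">`) and that the page language is declared (for example with the `lang` attribute on the `<html>` element).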

```sh
./vendor/bin/luya crawler/crawl
```

> To keep the search results current, create a cronjob which crawls the page every night: `cd httpdocs/current && ./vendor/bin/luya crawler/crawl`

### Crawler Arguments

The `crawler/crawl` command accepts the following arguments, for example `crawler/crawl --pdfs=0 --concurrent=5 --linkcheck=0`:

|name|description|default
|----|-----------|-------
|linkcheck|Whether all links should be checked after the crawler has indexed your site|true
|pdfs|Whether PDFs should be indexed by the crawler|true
|concurrent|The number of pages crawled concurrently|15

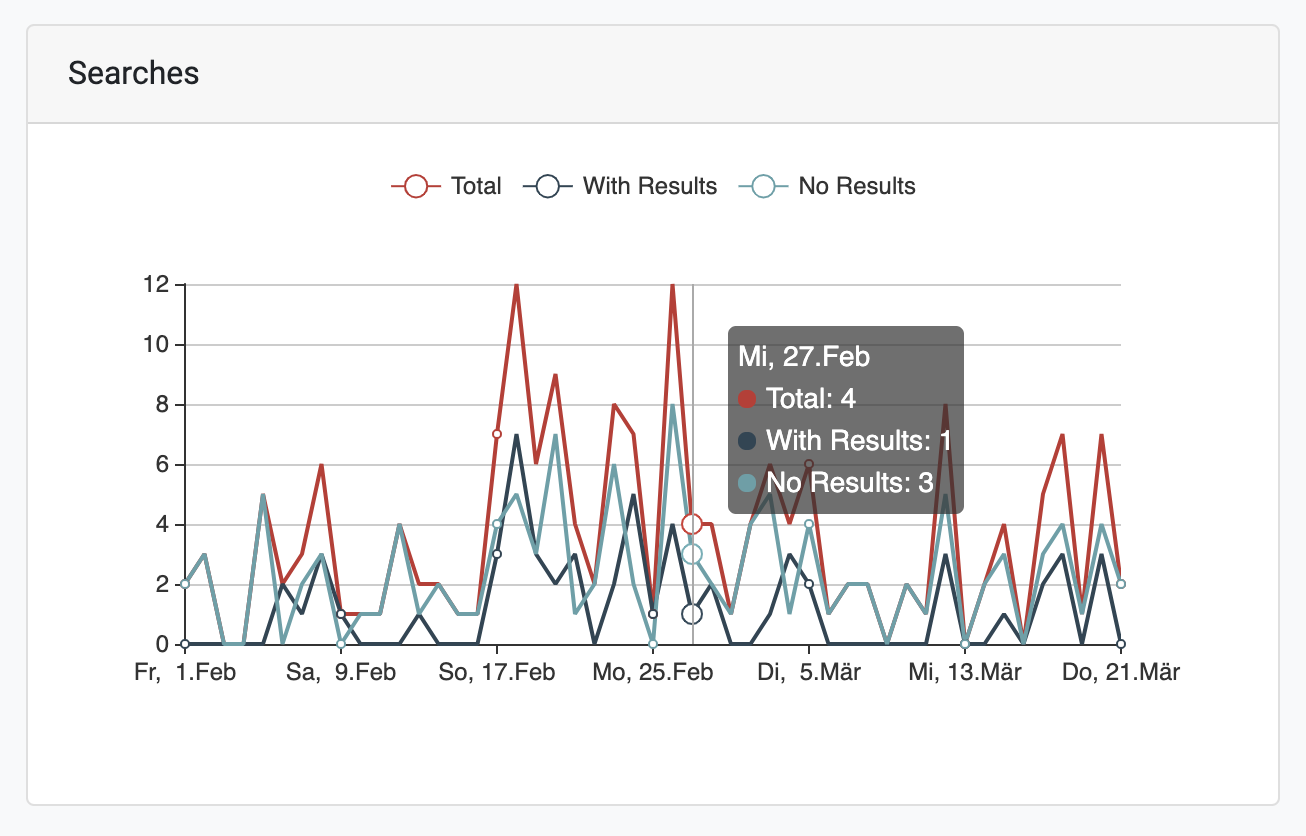

## Stats

You can also collect statistics by setting up a cronjob that runs the following command once a week:

```sh
./vendor/bin/luya crawler/statistic
```

## Create search form

Make a POST request with the parameter `query` to the `crawler/default/index` route and render the view as follows:

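```php
<?php
// The wrapper tags (h2, p, h3, a) are illustrative; use your own markup.
use luya\crawler\widgets\DidYouMeanWidget;
use yii\widgets\LinkPager;
?>
<h2><?= $provider->totalCount; ?> Results</h2>
<?php if ($provider->totalCount == 0): ?>
    <p>No results found for «<?= $query; ?>».</p>
<?php endif; ?>
<?= DidYouMeanWidget::widget(['searchModel' => $searchModel]); ?>
<?php foreach ($provider->models as $item): /* @var $item \luya\crawler\models\Index */ ?>
    <h3><?= $item->title; ?></h3>
    <p><?= $item->preview($query); ?></p>
    <a href="<?= $item->url; ?>"><?= $item->url; ?></a>
<?php endforeach; ?>
<?= LinkPager::widget(['pagination' => $provider->pagination]); ?>
```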
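The view renders the results but not the form that submits `query`. A minimal sketch of such a form follows, assuming the standard Yii 2 `yii\helpers\Html` helper is available in the view and that `$query` holds the current search input. The button label and the choice of a `search` input type are illustrative, not taken from the module.

```php
<?php
use yii\helpers\Html;

/* @var $query string The current search input (assumed to be passed to the view) */
?>
<?= Html::beginForm(['/crawler/default/index'], 'post'); ?>
    <?= Html::input('search', 'query', $query); ?>
    <?= Html::submitButton('Search'); ?>
<?= Html::endForm(); ?>
```

Using `Html::beginForm()` rather than a hand-written `<form>` tag means Yii adds the CSRF token field for POST requests when CSRF validation is enabled.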

### Crawler Settings

You can use crawler tags to trigger certain events or store information:

|tag|example|description
|---|-------|-----------
|CRAWL_IGNORE|`Ignore this`|Excludes a certain part of the content from indexing.
|CRAWL_FULL_IGNORE|` `|Excludes a full page from the index. Keep in mind that links inside the ignored page are still added to the index.
|CRAWL_GROUP|``|Assigns the current page to a group/section, so the crawler knows which section the page belongs to. You can then group your results by the `group` field.
|CRAWL_TITLE|``|Ensures that your customized title is always used as the title of the page.