https://github.com/machine-learning-tokyo/__init__

- Host: GitHub

- URL: https://github.com/machine-learning-tokyo/__init__

- Owner: Machine-Learning-Tokyo

- Created: 2020-11-15T07:38:41.000Z (about 5 years ago)

- Default Branch: main

- Last Pushed: 2022-10-11T00:03:08.000Z (over 3 years ago)

- Last Synced: 2025-04-15T14:58:32.123Z (10 months ago)

- Language: Jupyter Notebook

- Size: 87.1 MB

- Stars: 137

- Watchers: 13

- Forks: 12

- Open Issues: 0

Metadata Files:

- Readme: README.md

# Introduction

MLT \_\_init\_\_ is a monthly event led by [Jayson Cunanan](https://www.linkedin.com/in/jayson-cunanan-phd/) and [J. Miguel Valverde](https://twitter.com/jmlipman) where, as in a traditional journal club, a volunteer first presents a paper and all attendees then discuss it. Our goal is to give participants good **initializations** to effectively study and improve their understanding of Deep Learning. We will try to achieve this by:

* Discussing **fundamental papers**, whose main ideas are implemented in current state-of-the-art models.

* Discussing recent papers.

# Sessions

| Date | Topic | Paper | Presenter | Presentation | Video |
|-------------|---------------------------------|------------------------|------------------|--------------------------|--------|
| 17/Sep/2022 | Inference Optimization | [LLM.int8()](https://arxiv.org/abs/2208.07339) | [Tim Dettmers](https://timdettmers.com/) | [Slides]() | [Video](https://www.youtube.com/watch?v=o94ODz1CAtk) |
| 25/May/2022 | CV: GAN | [SeamlessGAN](https://arxiv.org/abs/2201.05120) | [Carlos Rodríguez-Pardo](https://carlosrodriguezpardo.es/) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_15/MLT%20-%20copia.pdf) | [Video](https://www.youtube.com/watch?v=5vWW84jsRyk) |
| 12/Apr/2022 | Data Pruning | [Deep Learning on a Data Diet](https://arxiv.org/abs/2107.07075) | [Karolina Dziugaite](https://gkdz.org/) | [Slides]() | [Video](https://www.youtube.com/watch?v=KUoEGz-Ztlw) |
| 24/Mar/2022 | Bayesian Learning | [The Bayesian Learning Rule](https://arxiv.org/abs/2107.04562) | [Mohammad Emtiyaz Khan](https://emtiyaz.github.io/index.html) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_14/Mar24_2022_MLT.pdf) | |
| 26/Jan/2022 | NLP: Zero-shot | [T0](https://arxiv.org/abs/2110.08207) | [Victor Sanh](https://twitter.com/SanhEstPasMoi) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_13/Talk%20MLT%20-%20Victor%20-%20Jan%2026%202022.pdf) | [Video](https://www.youtube.com/watch?v=RI9Wo2yGGt8) |
| 19/Dec/2021 | CV: Self-supervised learning | [SimCLR](https://arxiv.org/abs/2002.05709) | [Kartik Sachdev](https://www.linkedin.com/in/kartik-sachdev-7bb581ab/) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/raw/main/session_12/SimCLR.pptx) | [Video](https://www.youtube.com/watch?v=czAWRw5GIq4) |
| 25/Nov/2021 | CV: Image Classification | [ResNet strikes back](https://arxiv.org/abs/2110.00476) | [Ross Wightman](https://github.com/rwightman) | [Code](https://github.com/rwightman/pytorch-image-models) | [Video](https://youtu.be/sNiAX2ZCW34) |
| 17/Oct/2021 | Importance Weighting | [Rethinking Importance Weighting for Deep Learning under Distribution Shift](https://arxiv.org/abs/2006.04662) | [Nan Lu](https://scholar.google.co.jp/citations?user=KQUQlG4AAAAJ&hl=en) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/raw/main/session_10/MLT_1017.pdf), [Code](https://github.com/TongtongFANG/DIW) | [Video](https://www.youtube.com/watch?v=UkbkhKIP_PY) |
| 12/Sep/2021 | Knowledge Distillation | [Self-Distillation as Instance-Specific Label Smoothing](https://arxiv.org/abs/2006.05065) | [Mauricio Orbes](https://www.linkedin.com/in/mauricio-orbes-b13916157/) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_09/SelfDistillation.pdf) | [Video](https://www.youtube.com/watch?v=aeZ7vU9fFdI) |
| 15/Aug/2021 | Model Optimization | [Filter Pruning via Geometric Median](https://arxiv.org/abs/1811.00250) | [J. Miguel Valverde](https://www.twitter.com/jmlipman) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_08/Filter%20Pruning%20via%20Geometric%20Median.pptx) | [Video](https://www.youtube.com/watch?v=k7rVPd_Wvpg) |
| 18/Jul/2021 | CV: Vision Transformers | [An Image is Worth 16x16 Words](https://arxiv.org/abs/2010.11929) | [Joshua Owoyemi](https://toluwajosh.github.io/) | [Slides](session_07/Transformers_for_Image_Recognition_at_Scale_slides.pdf) | [Video](https://www.youtube.com/watch?v=yCEpkEb7mvw) |
| 13/Jun/2021 | NLP: Transformers | [Attention is all you need](https://arxiv.org/abs/1706.03762) | [Charles Melby-Thompson](https://www.linkedin.com/in/charles-melby-thompson/) | [Keynote](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_06/transformers.key) | [Video](https://www.youtube.com/watch?v=F7k8M3xTLzk) |
| 9/May/2021 | NLP: RNN Encoder-Decoder | [RNN Encoder-Decoder](https://arxiv.org/abs/1406.1078) | [Ana Valeria González](https://anavaleriagonzalez.github.io/) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_05/phrase-rep-3.pdf) | [Video](https://www.youtube.com/watch?v=Er8uAQoy6Sk) |
| 18/Apr/2021 | CV: Object Detection | [SSD: Single Shot MultiBox Detector](https://arxiv.org/pdf/1512.02325.pdf) | [Charles Melby-Thompson](https://www.linkedin.com/in/charles-melby-thompson/) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_04/ssd.pdf), [Keynote](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_04/ssd.key) | [Video](https://www.youtube.com/watch?v=F-irLP2k3Dk) |
| 14/Mar/2021 | CV: Attention in Images | [Squeeze and Excitation](https://arxiv.org/abs/1709.01507) | [Alisher Abdulkhaev](https://twitter.com/alisher_ai) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_03/Squeeze-and-Excitation%20Networks.pdf), [PwA](https://github.com/Machine-Learning-Tokyo/papers-with-annotations/blob/master/convolutional-neural-networks/Squeeze-and-Excitation_Networks.pdf) | [Video](https://www.youtube.com/watch?v=1IYYzglEk0o) |
| 14/Feb/2021 | CV: Dilated Convolutions + ASPP | [DeepLabv2](https://arxiv.org/abs/1606.00915) | [J. Miguel Valverde](https://www.twitter.com/jmlipman) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_02/DeepLabv2.pptx), [Notebook](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_02/DeepLabv2.ipynb) | [Video](https://www.youtube.com/watch?v=HTgvG57JFYw) |
| 10/Jan/2021 | CV: Separable Convolutions | [Xception](https://arxiv.org/abs/1610.02357) | [Jayson Cunanan](https://www.linkedin.com/in/jayson-cunanan-phd/) | [Slides](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_01/Xception.pptx), [Notebook](https://github.com/Machine-Learning-Tokyo/__init__/blob/main/session_01/Xception.ipynb) | [Video](https://www.youtube.com/watch?v=GIXDyJnFM5w) |

Sessions will be held via Zoom starting at 5pm (JST) / 10am (CET). Check what time that is in your region [here](https://www.worldtimebuddy.com/japan-tokyo-to-cet).
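
If you prefer to compute your local start time yourself, the following is a minimal sketch using Python's standard `zoneinfo` module (Python 3.9+). The helper name `session_start_in` and the example dates are illustrative assumptions, not part of the event tooling; the only fact taken from above is the 5pm JST start. Note that the Central European equivalent shifts by one hour depending on daylight saving time, so the result can differ between summer and winter sessions.

```python
from datetime import datetime
from zoneinfo import ZoneInfo  # standard library since Python 3.9


def session_start_in(tz_name: str, date: str) -> datetime:
    """Convert the 17:00 JST session start on `date` (YYYY-MM-DD) to `tz_name`.

    `tz_name` is an IANA time zone name such as "Europe/Berlin".
    This helper is hypothetical and only illustrates the conversion.
    """
    year, month, day = map(int, date.split("-"))
    # Sessions start at 5pm Japan Standard Time (Japan has no daylight saving).
    start_jst = datetime(year, month, day, 17, 0, tzinfo=ZoneInfo("Asia/Tokyo"))
    return start_jst.astimezone(ZoneInfo(tz_name))


# Summer session: Central Europe is on daylight saving time (UTC+2).
print(session_start_in("Europe/Berlin", "2021-07-18"))  # 2021-07-18 10:00:00+02:00

# Winter session: Central Europe is on standard time (UTC+1).
print(session_start_in("Europe/Berlin", "2021-01-10"))  # 2021-01-10 09:00:00+01:00
```

Replace `"Europe/Berlin"` with your own IANA zone name (for example `"Asia/Kolkata"` or `"Europe/London"`) to get the start time in your region.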

## 2021 Summary

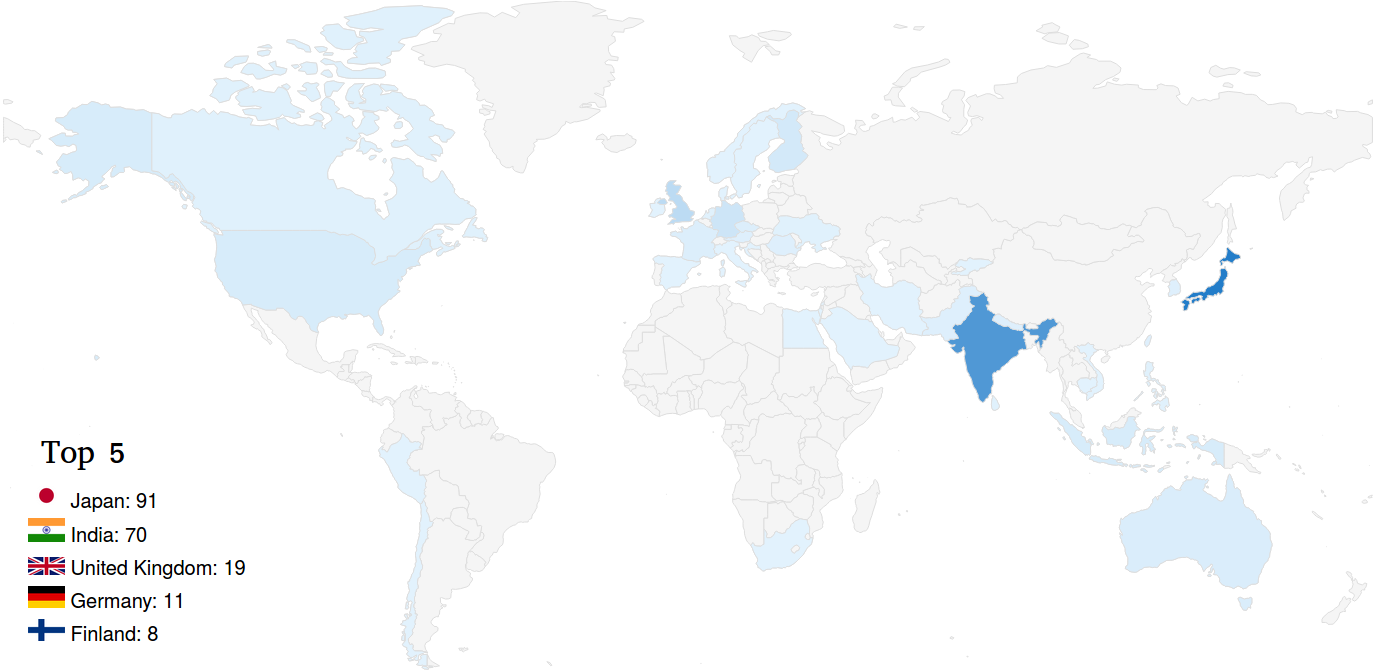

MLT \_\_init\_\_ is worldwide! On average, we had around 30-50 participants in each of our sessions, joining from at least 42 countries. The top 5 countries with the highest number of participants were Japan 🇯🇵, India 🇮🇳, United Kingdom 🇬🇧, Germany 🇩🇪, and Finland 🇫🇮. Thank you for being part of MLT \_\_init\_\_ 🤗

## Format

Introduction (5min) + Paper presentation (25min) + Discussion (30min)

We record the introduction and the presentation but not the discussion, allowing participants to interact while protecting their privacy.

## For participants

We kindly ask participants to read the paper in advance and to join the session with questions and comments. These can highlight interesting or unclear parts of the paper. For instance: what did you like the most about this paper? What did you learn? What did you not understand?

To make the session more interactive, participants can also ask questions during the presentation. We encourage everyone to use their microphone, but please be mindful of background noise. If you cannot use your microphone or prefer to protect your privacy, you are welcome to write in the Zoom chat or Slack channel, and either Jayson or Miguel will read your questions aloud.

## For presenters

Presenters will prepare a PowerPoint/Keynote presentation that will be shared in this repository after the session. The presentation **should last around 25 mins** so that there is enough time for questions and discussion. In line with the goals of MLT \_\_init\_\_, we encourage presenters to incorporate intuitive visualizations, code, Jupyter notebooks, Colab, and any other material. Finally, please keep in mind that the MLT \_\_init\_\_ audience has a very heterogeneous background.

Some ideas for the presentation:

* Background knowledge required to understand the paper.

* Motivation of the paper: what problem are the authors trying to solve?

* Contributions of the paper.

## Code of Conduct

As this event aims to be interactive, please remember to be kind and respectful to each other. Full code of conduct [here](https://mltokyo.ai/about).

## We want your feedback!

Feedback and contact form: https://forms.gle/jJLWyAMjjVKL8KFRA