Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/manjushsh/local-code-completion-configs

- Host: GitHub

- URL: https://github.com/manjushsh/local-code-completion-configs

- Owner: manjushsh

- Created: 2024-04-07T12:48:36.000Z (11 months ago)

- Default Branch: main

- Last Pushed: 2024-09-30T04:56:35.000Z (5 months ago)

- Last Synced: 2024-11-11T06:14:38.743Z (3 months ago)

- Homepage: https://manjushsh.github.io/local-code-completion-configs/

- Size: 6.91 MB

- Stars: 0

- Watchers: 1

- Forks: 1

- Open Issues: 1

Metadata Files:

- Readme: README.md

README

# Configuring Ollama and Continue VS Code Extension for Local Coding Assistant

## 🔗 Links

[GitHub repository](https://github.com/manjushsh/local-code-completion-configs) · [Project page](https://manjushsh.github.io/local-code-completion-configs/)

## Prerequisites

- [Ollama](https://ollama.com/) installed on your system.

You can download the Ollama application for your operating system from the [download page](https://ollama.com/download).

- The AI model used here is Code Llama. **You can use your preferred model.** Code Llama is a model for generating and discussing code, built on top of Llama 2. It supports many popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash. If it is not installed, you can pull it with the following command:

```bash
ollama pull codellama
```

You can also pull StarCoder2 3B for tab autocomplete by running:

```bash
ollama pull starcoder2:3b
```
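After pulling, you can confirm that both models are available locally with `ollama list`. The helper below is a hypothetical sketch (`missing_models` is not part of Ollama). It reads `ollama list` output on stdin and prints any required model that is missing. It assumes the first column of the listing is the model name and that an untagged pull such as `codellama` is listed as `codellama:latest`.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Ollama): print each required model name
# that is missing from `ollama list` output read on stdin.
missing_models() {
  local listing wanted
  listing="$(cat)"
  for wanted in "$@"; do
    # Assumption: an untagged pull such as `codellama` is listed as codellama:latest.
    [[ "$wanted" == *:* ]] || wanted="${wanted}:latest"
    # Skip the header row and compare against the first (NAME) column.
    if ! awk 'NR > 1 { print $1 }' <<<"$listing" | grep -qxF -- "$wanted"; then
      printf '%s\n' "$wanted"
    fi
  done
}
```

For example, `ollama list | missing_models codellama starcoder2:3b` prints nothing when both models are present.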

#### NOTE: Choose models that your hardware can run comfortably. Larger models need more RAM (or GPU memory) and may respond slowly or fail to load on smaller machines.
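As a rough guide, Ollama's README suggests at least 8 GB of RAM for 7B models, 16 GB for 13B models and 32 GB for 33B models. The hypothetical helpers below (`min_ram_gb` and `fits_in_ram` are illustrative names) encode that rule of thumb. Treating 34B models like 33B ones and giving no answer above that are assumptions. Actual requirements also depend on quantization and on whether a GPU is used.

```bash
#!/usr/bin/env bash
# Rule of thumb from Ollama's README: ~8 GB RAM for 7B models, 16 GB for 13B,
# 32 GB for 33B. Hypothetical helpers; sizes are integers in billions of params.
min_ram_gb() {
  local size="$1"
  if (( size <= 7 )); then
    echo 8
  elif (( size <= 13 )); then
    echo 16
  elif (( size <= 34 )); then
    # Assumption: 34B models (e.g. codellama:34b) are treated like 33B ones.
    echo 32
  else
    # No guideline for larger models: signal "unknown" with a non-zero status.
    return 1
  fi
}

# fits_in_ram SIZE_B AVAILABLE_GB: succeeds if the rule of thumb is met.
fits_in_ram() {
  local need
  need="$(min_ram_gb "$1")" || return 1
  (( $2 >= need ))
}
```

For example, `fits_in_ram 7 16 && echo "codellama (7B) should fit"` checks the default Code Llama size against a 16 GB machine.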

## Installing and configuring Continue

You can install Continue from the [Visual Studio Code Marketplace](https://marketplace.visualstudio.com/items?itemName=Continue.continue).

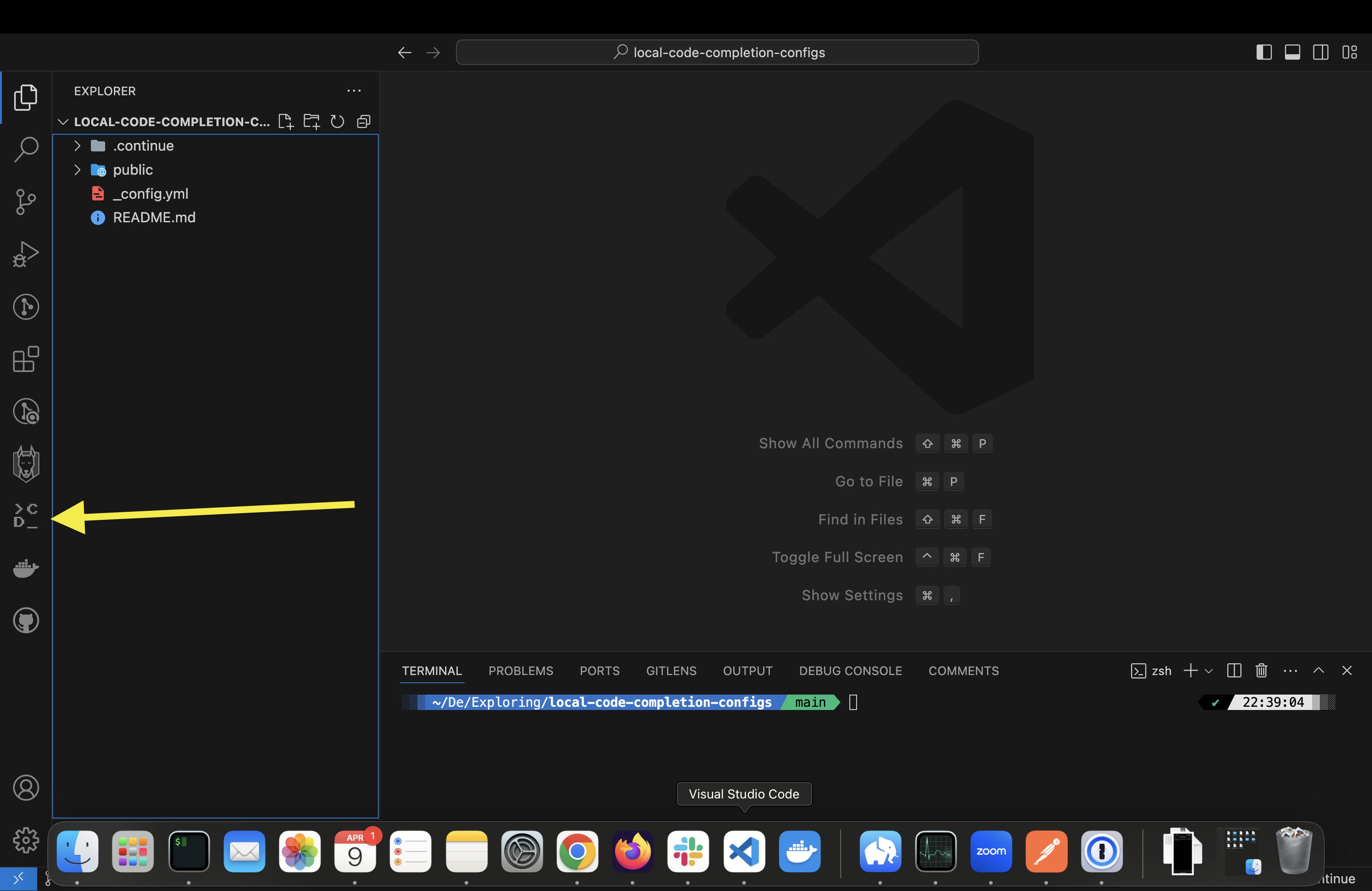

#### After installation, you should see it in sidebar as shown below:

## Configuring Continue to use local model

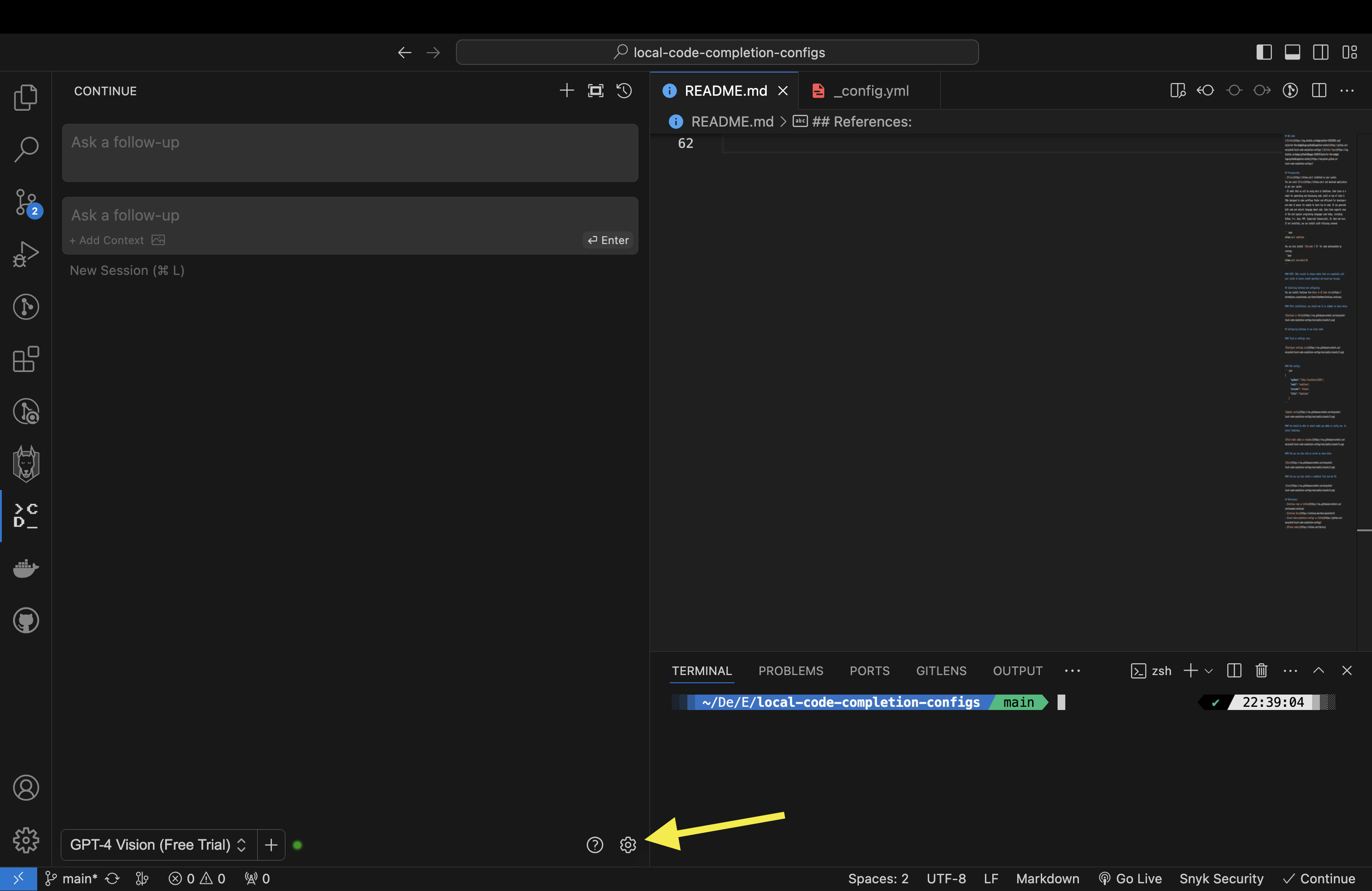

#### Click the settings icon in the Continue panel. This opens `config.json` in your editor.

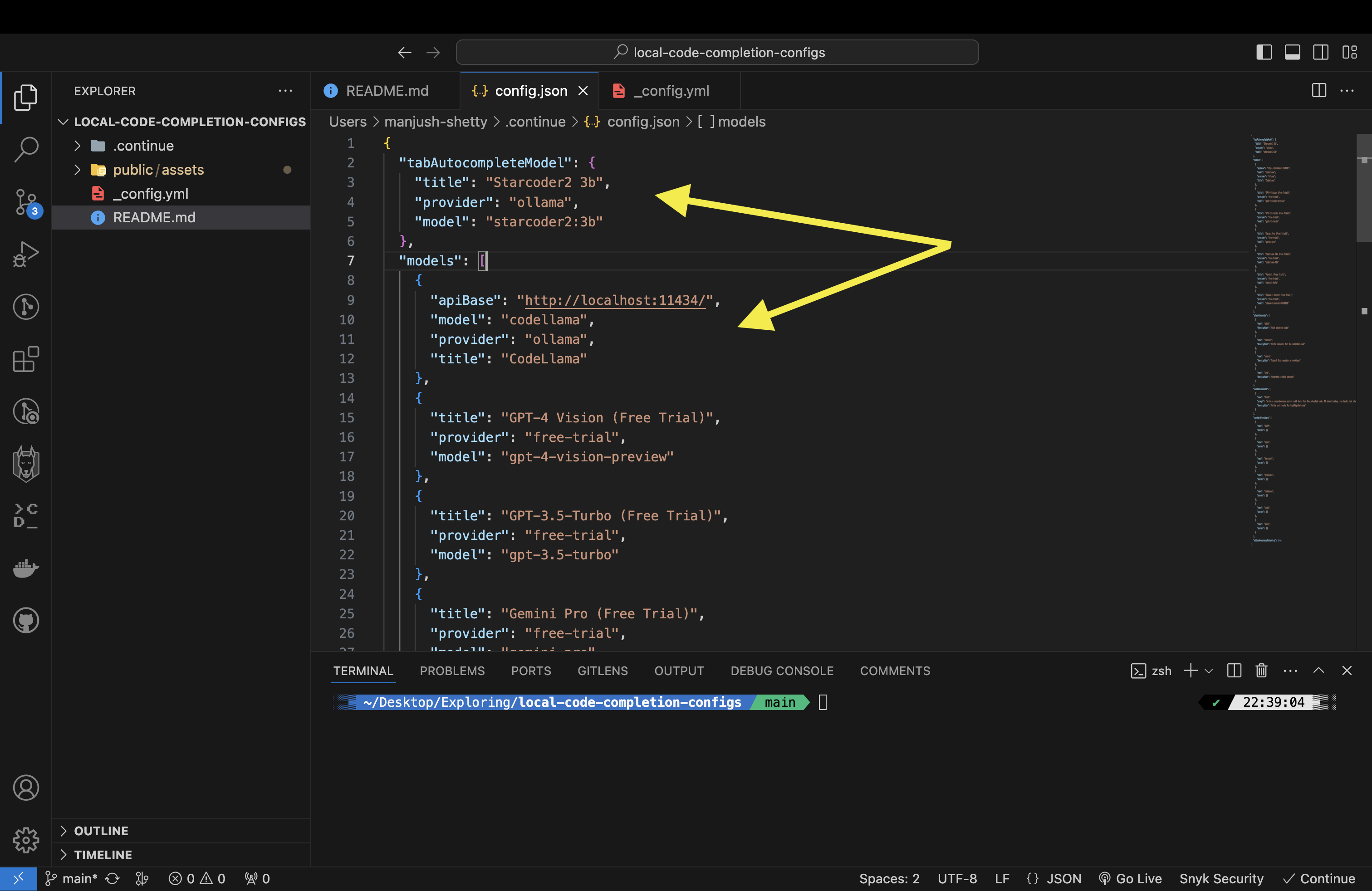

#### Add the following entry to the `models` array in `config.json`:

```json
{
  "apiBase": "http://localhost:11434/",
  "model": "codellama",
  "provider": "ollama",
  "title": "CodeLlama"
}
```

Also add a top-level `tabAutocompleteModel` entry to the config:

```json
"tabAutocompleteModel": {
  "apiBase": "http://localhost:11434/",
  "title": "Starcoder2 3b",
  "provider": "ollama",
  "model": "starcoder2:3b"
}
```
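For reference, the two snippets fit into `config.json` as follows: the chat model goes inside the `models` array, and `tabAutocompleteModel` sits at the top level. The sketch below writes such a minimal file with a heredoc. `write_continue_config` is a hypothetical helper, and its default `apiBase` mirrors the value used above. A real Continue config usually contains other keys, so merging these entries into your existing file by hand is safer than overwriting it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Continue config.json that combines the
# chat model entry (inside "models") with the top-level tabAutocompleteModel.
# WARNING: this overwrites the target file; back up an existing config first.
write_continue_config() {
  local target="$1"
  local api_base="${2:-http://localhost:11434/}"
  cat >"$target" <<EOF
{
  "models": [
    {
      "apiBase": "${api_base}",
      "model": "codellama",
      "provider": "ollama",
      "title": "CodeLlama"
    }
  ],
  "tabAutocompleteModel": {
    "apiBase": "${api_base}",
    "title": "Starcoder2 3b",
    "provider": "ollama",
    "model": "starcoder2:3b"
  }
}
EOF
}
```

For example, `write_continue_config /tmp/continue-config.json` writes a file you can compare against the one opened from the settings icon.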

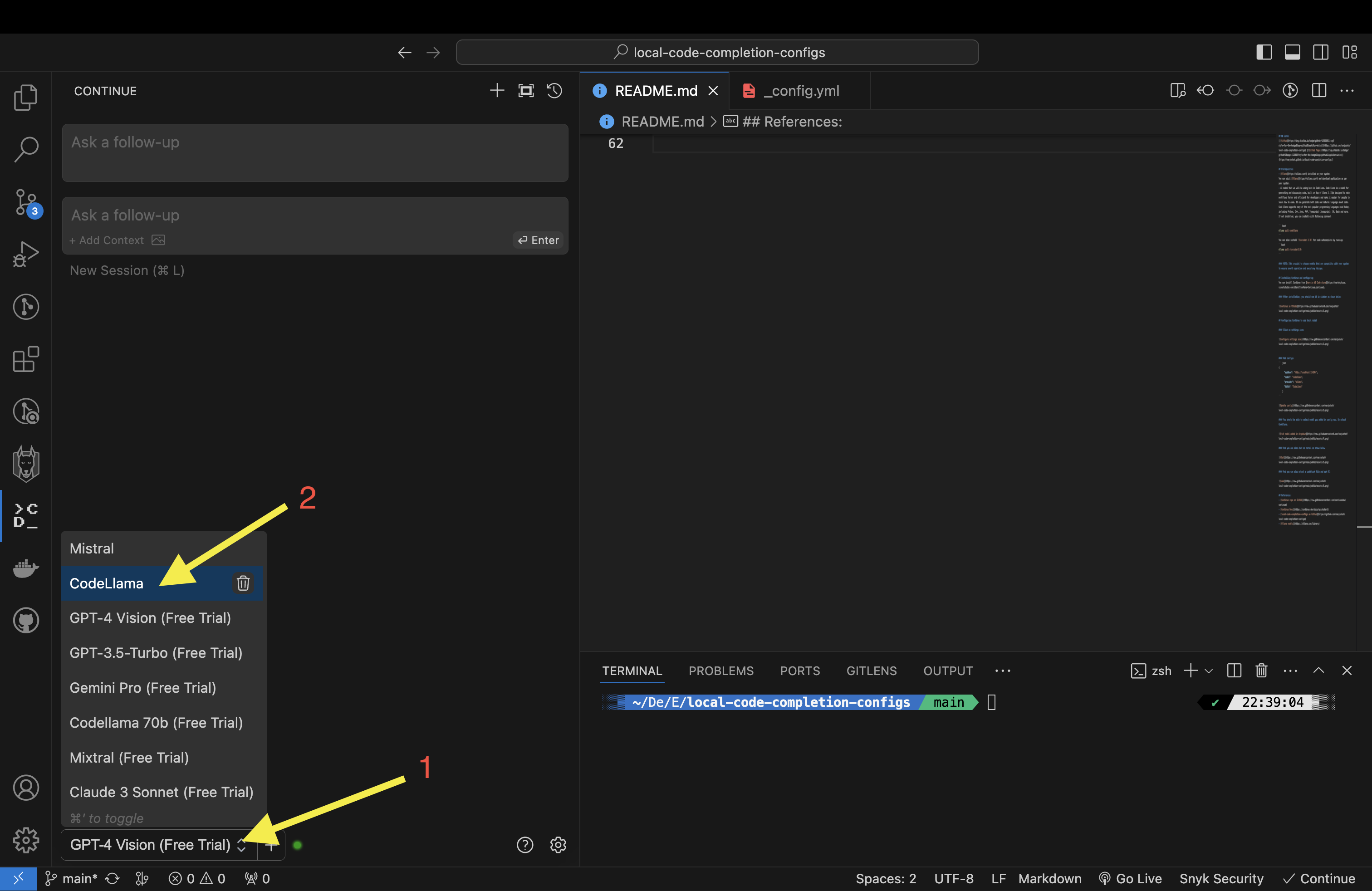

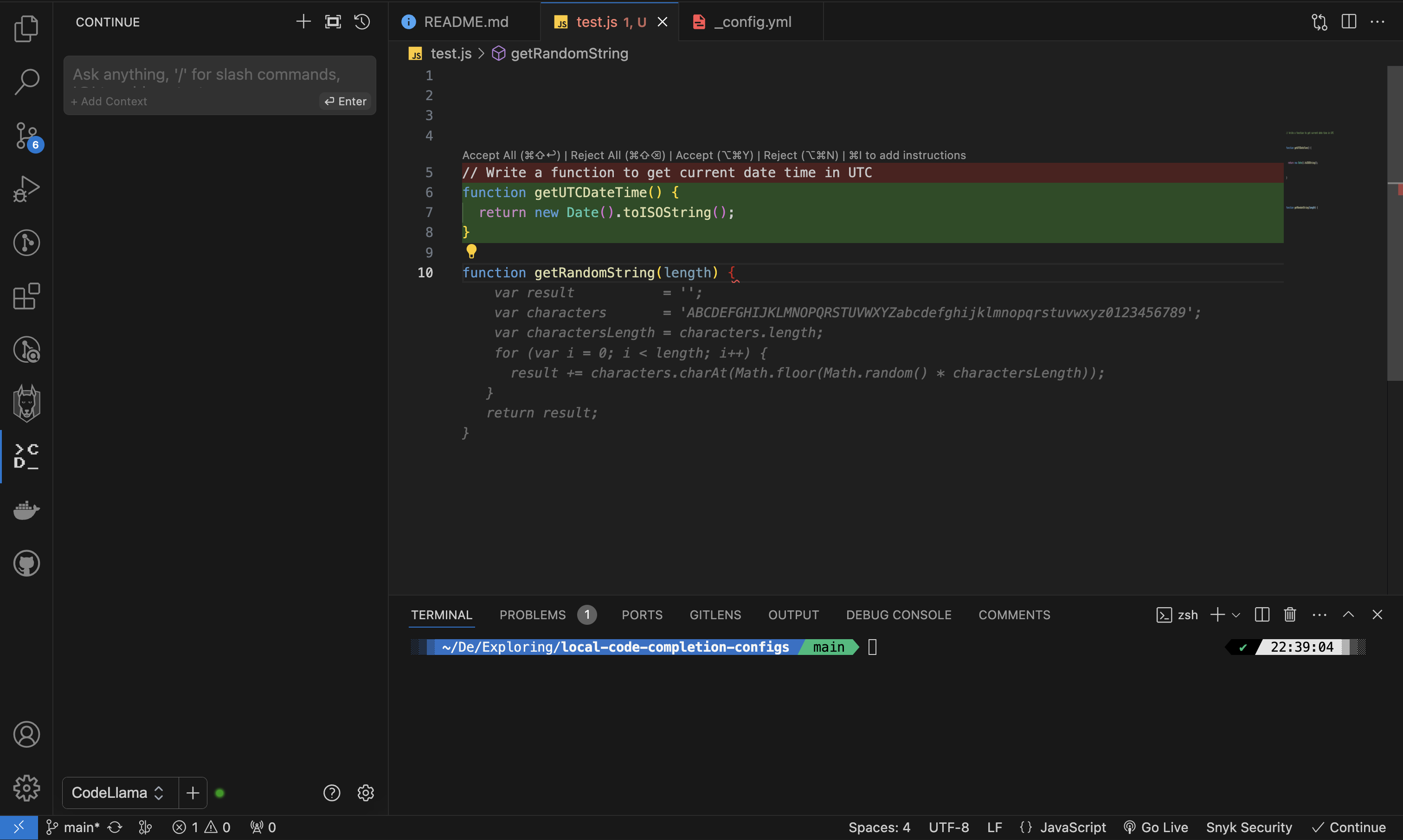

#### Select CodeLlama from the model dropdown; it appears there once you have added it to the config.

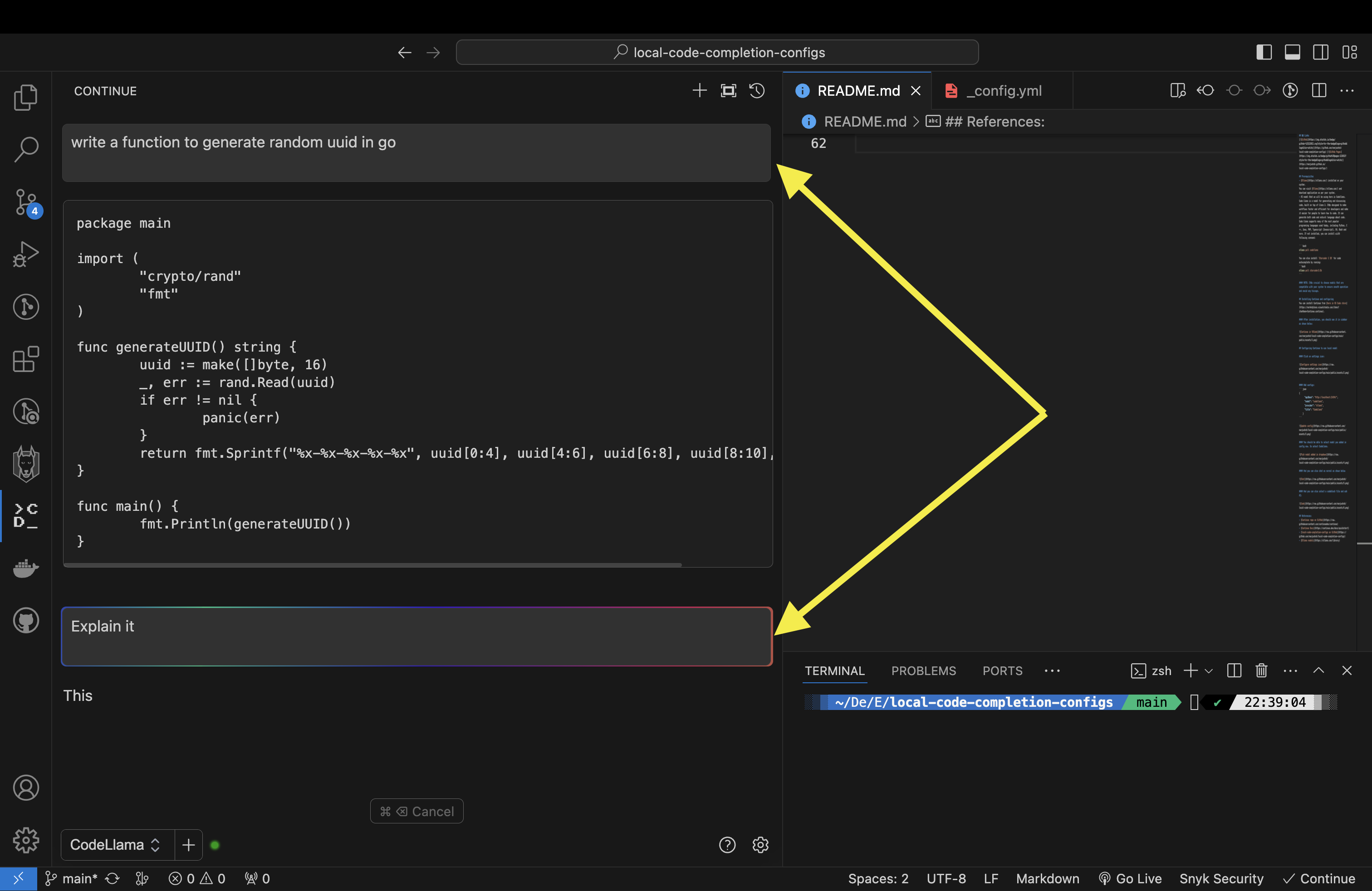

#### Once the model is configured, you can ask it questions in the chat window.

#### You can also select a code block or file and ask the AI about it, similar to GitHub Copilot.
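Independently of the chat window, you can check that the model answers by calling Ollama's HTTP API directly. The sketch below builds a non-streaming request body for the `/api/chat` endpoint. `ollama_chat_body` is a hypothetical helper, and it only escapes backslashes and double quotes, so prompts should stay on a single line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a non-streaming request body for Ollama's
# /api/chat endpoint. Only backslashes and double quotes are escaped,
# so keep the prompt on a single line.
ollama_chat_body() {
  local model="$1" prompt
  # Escape backslashes first, then double quotes, for a valid JSON string.
  prompt="$(printf '%s' "$2" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')"
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}\n' \
    "$model" "$prompt"
}
```

For example, `curl -s http://localhost:11434/api/chat -d "$(ollama_chat_body codellama 'Write a Python function that reverses a string')"` should return a single JSON object whose `message.content` field holds the reply.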

## References

- [Article by Ollama](https://ollama.com/blog/continue-code-assistant)

- [Continue repo on GitHub](https://github.com/continuedev/continue)

- [Continue Docs](https://continue.dev/docs/quickstart)

- [local-code-completion-configs on GitHub](https://github.com/manjushsh/local-code-completion-configs)

- [Ollama models](https://ollama.com/library)