https://github.com/maxhumber/gazpacho

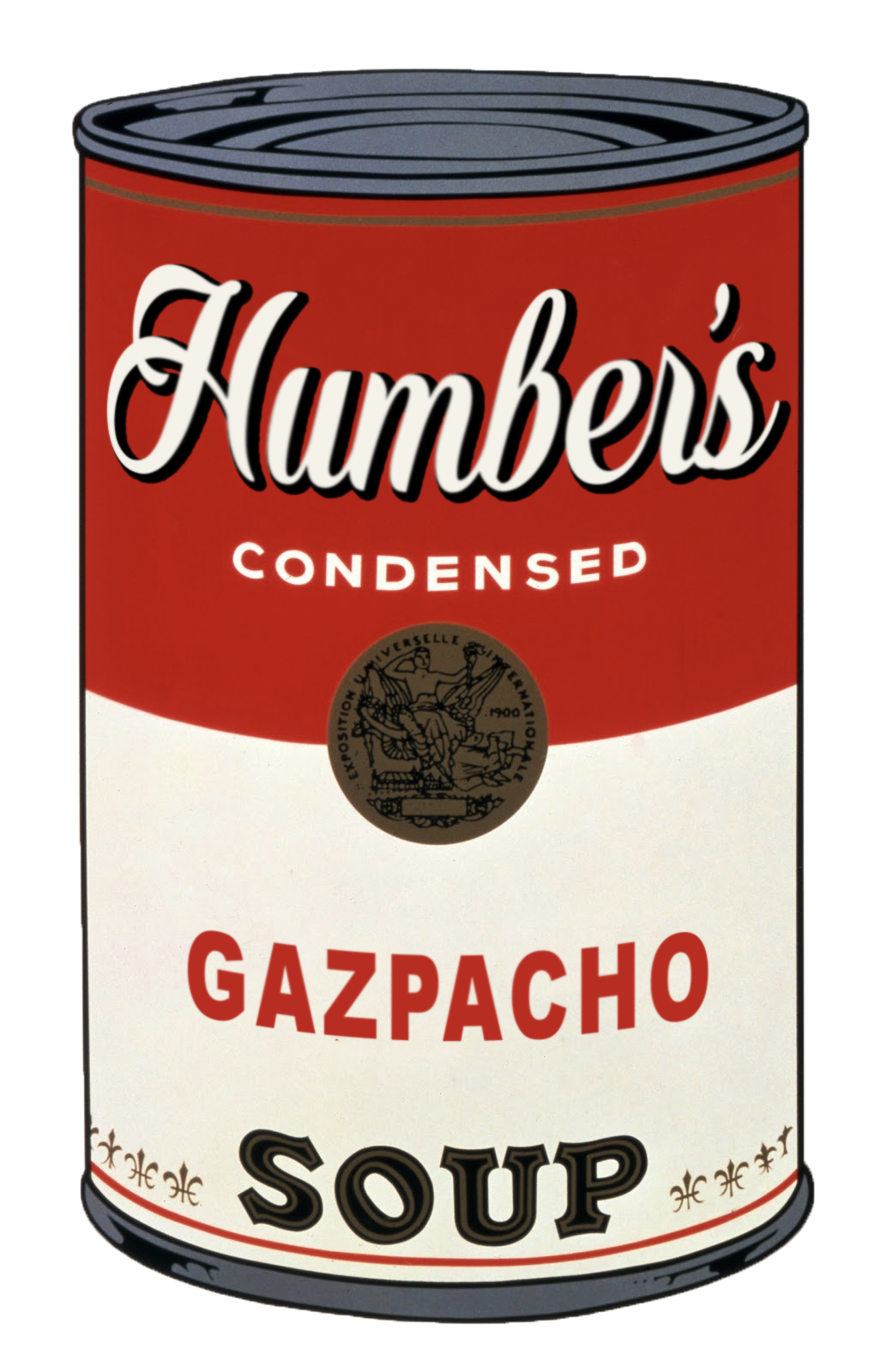

🥫 The simple, fast, and modern web scraping library

gazpacho scraping webscraping

Last synced: 7 months ago

- Host: GitHub

- URL: https://github.com/maxhumber/gazpacho

- Owner: maxhumber

- License: mit

- Created: 2019-09-23T20:21:55.000Z (about 6 years ago)

- Default Branch: master

- Last Pushed: 2023-12-07T03:03:36.000Z (about 2 years ago)

- Last Synced: 2025-05-14T23:17:14.418Z (7 months ago)

- Topics: gazpacho, scraping, webscraping

- Language: Python

- Homepage:

- Size: 12.3 MB

- Stars: 769

- Watchers: 17

- Forks: 55

- Open Issues: 16

Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- Funding: .github/FUNDING.yml

- License: LICENSE

Awesome Lists containing this project

- stars - maxhumber/gazpacho - 🥫 The simple, fast, and modern web scraping library (Python)

- best-of-web-python - GitHub - 31% open · ⏱️ 07.12.2023): (Web Scraping & Crawling)

- awesome-rainmana - maxhumber/gazpacho - 🥫 The simple, fast, and modern web scraping library (Python)

README

## About

gazpacho is a simple, fast, and modern web scraping library. The library is stable and installs with **zero** dependencies.

## Install

Install with `pip` at the command line:

```

pip install -U gazpacho

```

## Quickstart

Give this a try:

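```python
from gazpacho import get, Soup

# Download the page and wrap the raw HTML for parsing
url = 'https://scrape.world/books'
html = get(url)
soup = Soup(html)

# Collect every <div> whose class attribute contains 'book-'
books = soup.find('div', {'class': 'book-'}, partial=True)

def parse(book):
    # Pull the title and the numeric price out of one book element
    name = book.find('h4').text
    price = float(book.find('p').text[1:].split(' ')[0])
    return name, price

[parse(book) for book in books]
```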
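The price expression inside `parse` is compact, so here is a small sketch of what it does on its own. The helper name `parse_price` and the sample strings are hypothetical; the actual price text on the page may be formatted differently.

```python
def parse_price(text):
    # Drop the leading currency symbol, keep everything before the
    # first space, and convert that piece to a float
    return float(text[1:].split(' ')[0])

# Hypothetical price strings, for illustration only
print(parse_price('$12.99 USD'))
print(parse_price('$7'))
```

If a price string has no leading symbol, `text[1:]` would drop the first digit instead, so check the raw text before reusing this pattern.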

## Tutorial

#### Import

Import gazpacho following the convention:

```python

from gazpacho import get, Soup

```

#### get

Use the `get` function to download raw HTML:

```python
url = 'https://scrape.world/soup'
html = get(url)
print(html[:50])
# prints the first 50 characters of the raw HTML
```

#### attrs=

Use the `attrs` argument to isolate tags that contain specific HTML element attributes:

```python

soup.find('div', attrs={'class': 'section-'})

```

#### partial=

Element attributes are partially matched by default. Turn this off by setting `partial` to `False`:

```python

soup.find('div', {'class': 'soup'}, partial=False)

```

#### mode=

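By default (`'auto'`), `.find` returns a single `Soup` object when exactly one element matches and a list of `Soup` objects when several do. Override the `mode` argument {`'auto', 'first', 'all'`} to guarantee return behaviour: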

```python
print(soup.find('span', mode='first'))
# prints the first matching <span> element
len(soup.find('span', mode='all'))
# 8
```

#### dir()

`Soup` objects have `html`, `tag`, `attrs`, and `text` attributes:

```python
h1 = soup.find('h1')
dir(h1)
# ['attrs', 'find', 'get', 'html', 'strip', 'tag', 'text']
```

Use them accordingly:

```python
print(h1.html)
# '<h1 id="firstHeading" class="firstHeading" lang="en">Soup</h1>'
print(h1.tag)
# h1
print(h1.attrs)
# {'id': 'firstHeading', 'class': 'firstHeading', 'lang': 'en'}
print(h1.text)
# Soup
```

## Support

If you use gazpacho, consider adding the [gazpacho](https://github.com/maxhumber/gazpacho) badge to your project README.md:

```markdown

[](https://github.com/maxhumber/gazpacho)

```

## Contribute

For feature requests or bug reports, please use [GitHub Issues](https://github.com/maxhumber/gazpacho/issues).

For PRs, please read the [CONTRIBUTING.md](https://github.com/maxhumber/gazpacho/blob/master/CONTRIBUTING.md) document.