https://github.com/microsoft/Peekaboo

Interactive Video Generation via Masked-Diffusion

- Host: GitHub

- URL: https://github.com/microsoft/Peekaboo

- Owner: microsoft

- License: mit

- Created: 2023-12-14T03:13:15.000Z

- Default Branch: main

- Last Pushed: 2024-02-12T23:02:58.000Z

- Last Synced: 2024-04-08T00:52:06.685Z

- Language: Python

- Size: 12.7 MB

- Stars: 49

- Watchers: 7

- Forks: 2

- Open Issues: 2

Metadata Files:

- Readme: README.md

- License: LICENSE

- Code of conduct: CODE_OF_CONDUCT.md

- Security: SECURITY.md

- Support: SUPPORT.md

Awesome Lists containing this project

- awesome-diffusion-categorized

# Peekaboo: Interactive Video Generation via Masked-Diffusion

### [Project Page](https://jinga-lala.github.io/projects/Peekaboo/) | [Paper](https://arxiv.org/abs/2312.07509) | [Data](eval/README.md) | [HuggingFace Demo](https://huggingface.co/spaces/anshuln/peekaboo-demo)

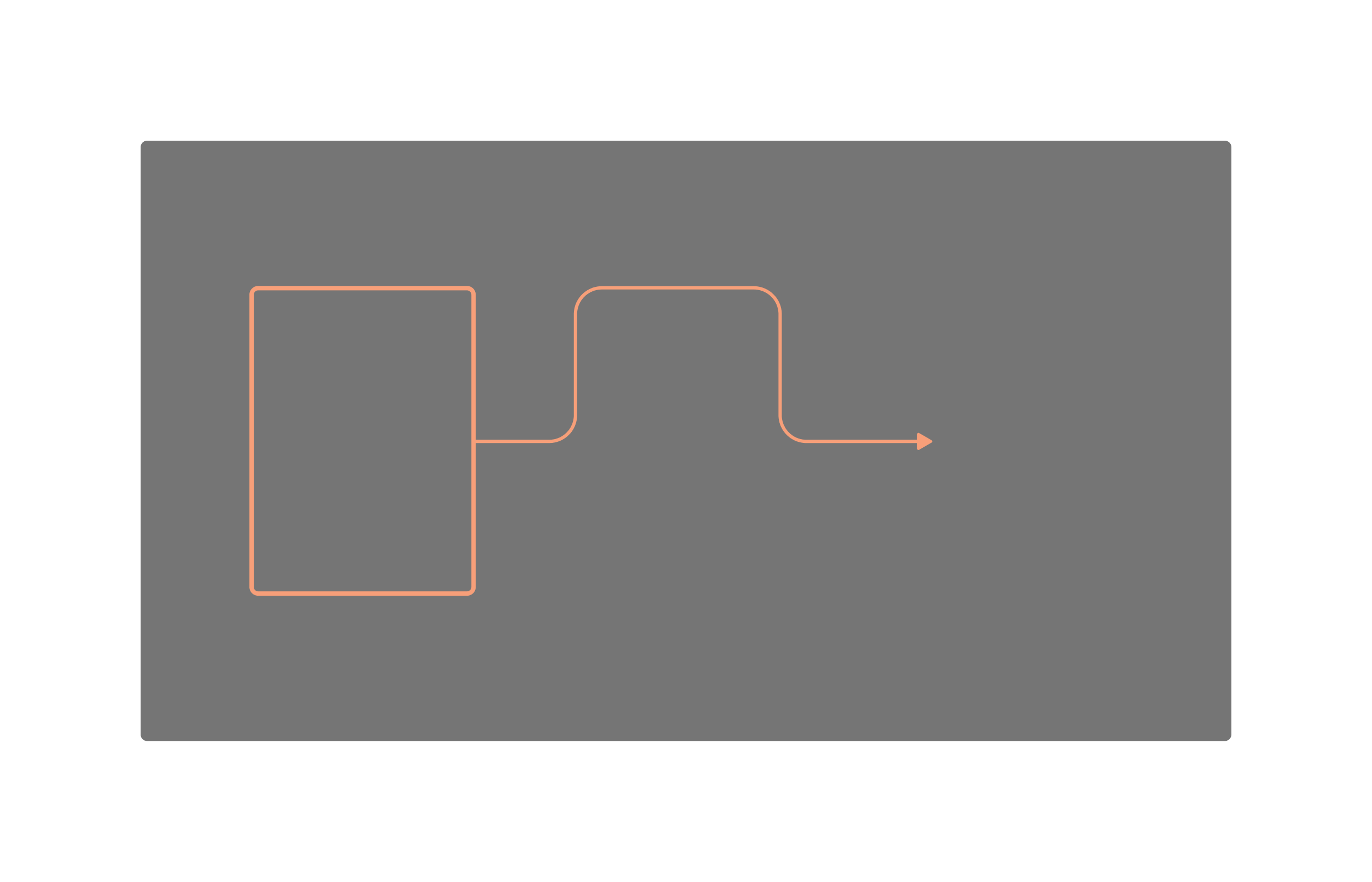

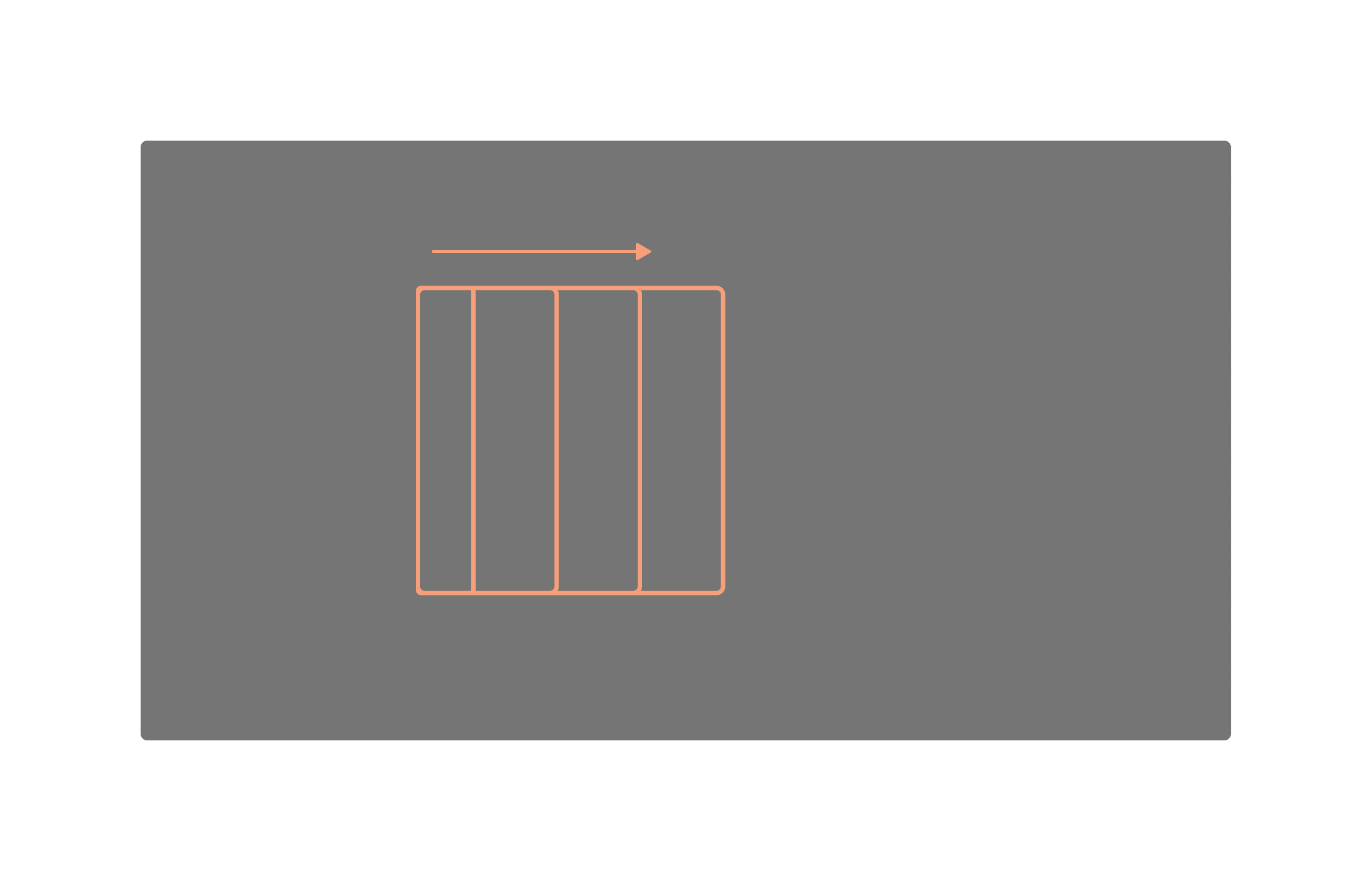

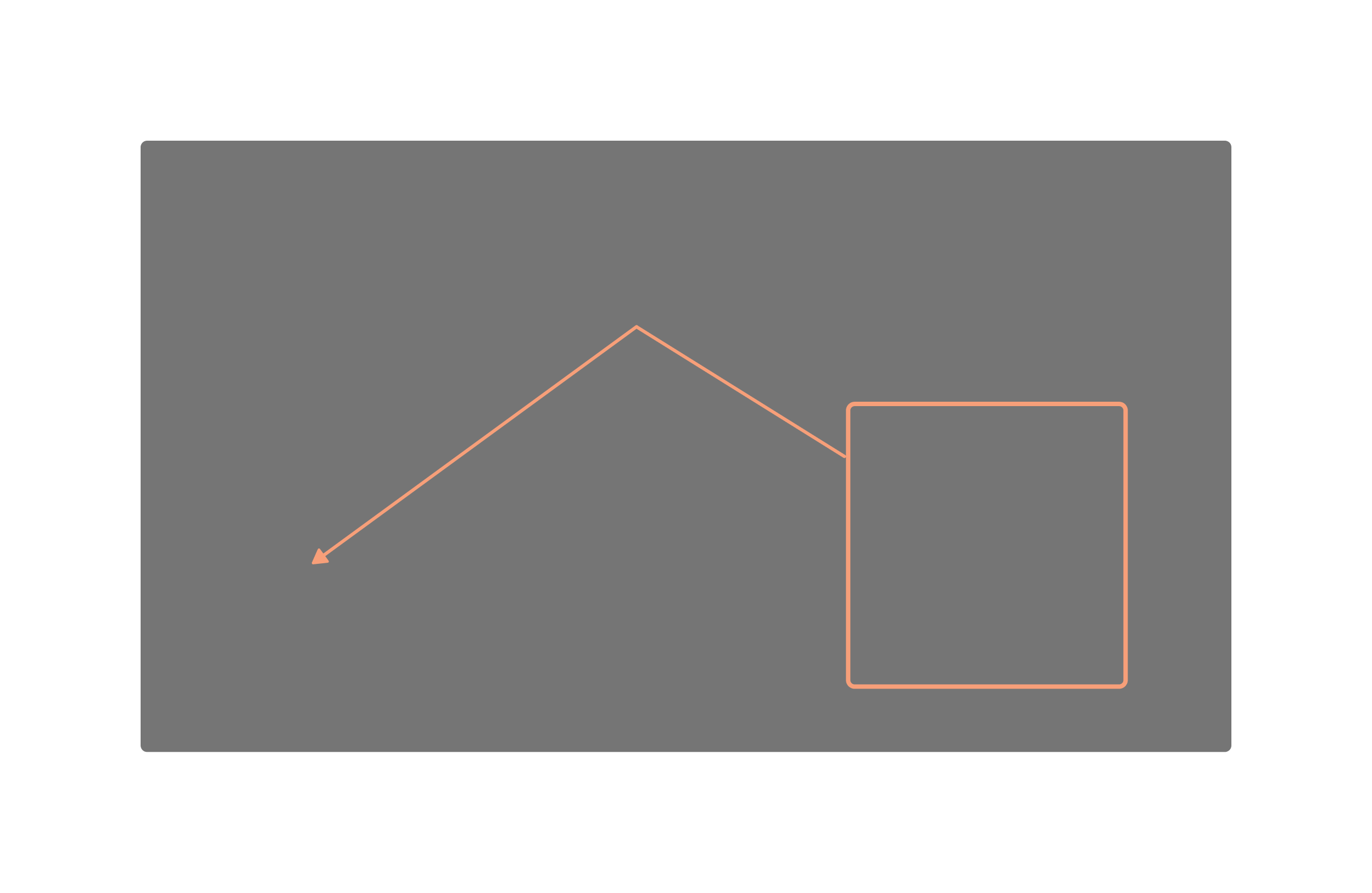

PyTorch implementation of Peekaboo, a training-free and zero-latency interactive video generation pipeline.

[Peekaboo: Interactive Video Generation via Masked-Diffusion](https://jinga-lala.github.io/projects/Peekaboo/)

[Yash Jain](https://jinga-lala.github.io/)<sup>1*</sup>, [Anshul Nasery](https://anshuln.github.io/)<sup>2*</sup>, [Vibhav Vineet](https://vibhav-vineet.github.io/)<sup>3</sup>, [Harkirat Behl](https://harkiratbehl.github.io/)<sup>3</sup>

<sup>1</sup>Microsoft, <sup>2</sup>University of Washington, <sup>3</sup>Microsoft Research

<sup>\*</sup>Denotes equal contribution

|  |  |  |
|:---:|:---:|:---:|
| *A Horse galloping through a meadow* | *A Panda playing Peekaboo* | *An Eagle flying in the sky* |

## Quickstart :rocket:

Follow the instructions below to download and run Peekaboo on your own prompts and bounding-box (bbox) inputs. These instructions need a GPU with ~40GB of VRAM for zeroscope and ~13GB of VRAM for modelscope. If you don't have a GPU, you may need to change the default device configuration from `cuda` to `cpu`; if your GPU has less memory than required, follow the memory-reduction instructions given [here](https://huggingface.co/docs/diffusers/main/en/optimization/memory).
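As a rough illustration of the hardware guidance above, the hypothetical helper below picks a base model from the approximate VRAM figures quoted in this section (~40GB for zeroscope, ~13GB for modelscope) and falls back to CPU otherwise. The function names, treating those figures as hard thresholds, and choosing modelscope for the CPU fallback are assumptions, not part of the repository; how you actually switch the device in the repo's scripts is not covered here.

```python
# Hypothetical helper (not part of the repo): choose a base model and device
# from the approximate VRAM requirements quoted in this README.
from typing import Dict, Optional, Tuple

# Approximate VRAM needed per base model, in GB (figures from the Quickstart).
APPROX_VRAM_GB: Dict[str, float] = {"zeroscope": 40.0, "modelscope": 13.0}


def free_gpu_memory_gb() -> Optional[float]:
    """Return free memory on the current CUDA device in GB, or None if unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    free_bytes, _total_bytes = torch.cuda.mem_get_info()
    return free_bytes / 1024**3


def choose_model_and_device(free_gb: Optional[float]) -> Tuple[str, str]:
    """Prefer the most demanding model that fits; otherwise use modelscope on CPU."""
    if free_gb is not None:
        # Check models from the largest to the smallest VRAM requirement.
        for model, need in sorted(APPROX_VRAM_GB.items(), key=lambda kv: -kv[1]):
            if free_gb >= need:
                return model, "cuda"
    # No GPU, or not enough free memory: CPU works but will be much slower.
    return "modelscope", "cpu"


if __name__ == "__main__":
    model, device = choose_model_and_device(free_gpu_memory_gb())
    print(f"model={model} device={device}")
```

Real memory use also depends on resolution, frame count, and any Diffusers memory optimizations you enable, so treat the thresholds as a starting point.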

### Set up a conda environment:

```
conda env create -f env.yaml
conda activate peekaboo
```

### Generate peekaboo videos through CLI:

```
python src/generate.py --model zeroscope --prompt "A panda eating bamboo in a lush bamboo forest" --fg_object "panda"

# Optionally, you can specify parameters to tune your result:
# python src/generate.py --model zeroscope --frozen_steps 2 --seed 1234 --num_inference_steps 50 --output_path src/demo --prompt "A panda eating bamboo in a lush bamboo forest" --fg_object "panda"
```
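If you want to script several runs (for example, the seed and `frozen_steps` sweeps suggested in the Tips section), a small wrapper can assemble the same command lines. This is a hypothetical sketch: `build_generate_cmd` is not part of the repo, it only passes the flags that appear in the commands above, and it assumes you run it from the repository root inside the `peekaboo` environment.

```python
# Hypothetical wrapper (not part of the repo) that assembles src/generate.py
# command lines using only the flags shown in this README.
import shlex
import sys
from typing import List, Optional


def build_generate_cmd(
    prompt: str,
    fg_object: str,
    model: str = "zeroscope",
    frozen_steps: Optional[int] = None,
    seed: Optional[int] = None,
    num_inference_steps: Optional[int] = None,
    output_path: Optional[str] = None,
) -> List[str]:
    """Return an argv list; options left as None fall back to the script's defaults."""
    cmd = [sys.executable, "src/generate.py", "--model", model]
    optional_flags = {
        "--frozen_steps": frozen_steps,
        "--seed": seed,
        "--num_inference_steps": num_inference_steps,
        "--output_path": output_path,
    }
    for flag, value in optional_flags.items():
        if value is not None:
            cmd += [flag, str(value)]
    cmd += ["--prompt", prompt, "--fg_object", fg_object]
    return cmd


if __name__ == "__main__":
    prompt = "A panda eating bamboo in a lush bamboo forest"
    # Vary only the seed, keeping frozen_steps at the default of 2.
    for seed in (1234, 42, 7):
        cmd = build_generate_cmd(prompt, "panda", frozen_steps=2, seed=seed)
        print(shlex.join(cmd))  # shell-quoted, ready to copy and paste
        # To actually generate, call: subprocess.run(cmd, check=True)
```

Whether repeated runs overwrite each other's outputs depends on how `generate.py` names its files, so you may want to pass a different `--output_path` per run.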

### Or launch your own interactive editing Gradio app:

```
python src/app_modelscope.py
```

_(For advice on how to get the best results by tuning parameters, see the [Tips](#tips) section)._

## Changing the bbox

We recommend using our interactive Gradio demo to experiment with different input bboxes. Alternatively, editing the `bbox_mask` variable in `src/generate.py` achieves similar results.
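The exact format of `bbox_mask` is defined in `src/generate.py` and is not documented here, so the snippet below is only a hypothetical illustration of the kind of input involved: a per-frame binary mask that is 1 inside the bounding box and 0 elsewhere, with the box moving linearly from a start to an end position. The function name, the fractional `(x0, y0, x1, y1)` box convention, and the `(frames, height, width)` layout are assumptions; check `generate.py` for the shape and resolution it actually expects.

```python
# Hypothetical illustration (not the repo's bbox_mask format): build a
# per-frame binary mask for a bounding box that moves linearly over time.
from typing import Tuple

import numpy as np

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) as fractions of width/height


def moving_bbox_mask(num_frames: int, height: int, width: int, start: Box, end: Box) -> np.ndarray:
    """Return a (num_frames, height, width) mask: 1 inside the box, 0 outside.

    The box is linearly interpolated from `start` (first frame) to `end` (last frame).
    """
    mask = np.zeros((num_frames, height, width), dtype=np.float32)
    start_arr = np.asarray(start, dtype=np.float64)
    end_arr = np.asarray(end, dtype=np.float64)
    for f in range(num_frames):
        t = f / (num_frames - 1) if num_frames > 1 else 0.0
        x0, y0, x1, y1 = (1 - t) * start_arr + t * end_arr
        # Convert fractional coordinates to pixel indices on the mask grid.
        c0, c1 = int(round(x0 * width)), int(round(x1 * width))
        r0, r1 = int(round(y0 * height)), int(round(y1 * height))
        mask[f, r0:r1, c0:c1] = 1.0
    return mask


if __name__ == "__main__":
    # A box in the left half that slides to the right half over 16 frames.
    m = moving_bbox_mask(16, 40, 64, start=(0.0, 0.25, 0.5, 0.75), end=(0.5, 0.25, 1.0, 0.75))
    print(m.shape, m[0].sum(), m[-1].sum())
```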

## Datasets and Evaluation

Please follow the [README](eval/README.md) to download the datasets and learn more about Peekaboo's evaluation.

## Tips

If you're not getting the results you want, there may be a few reasons:

1. **Is the video not following the bbox, or is it degrading?** The number of `frozen_steps` might not be right for your input. This number dictates how long the Peekaboo attention-mask modulation acts during the generation process: too few steps will barely affect the output video, and too many will degrade the video quality. The default `frozen_steps` is 2, which isn't necessarily optimal for every (prompt, bbox) pair. Try:

   * Increasing `frozen_steps` if the object does not follow the bbox, or
   * Decreasing `frozen_steps` if the video quality degrades.

2. **Poor video quality?** The base model may not handle the prompt well; that is, it generates a poor-quality video for the given prompt. Try changing the `model` or improving the `prompt`.

3. Try generating results with different random seeds by changing the `seed` parameter and running generation multiple times.

4. Increasing the number of inference steps (`num_inference_steps`) sometimes improves results.
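To give some intuition for what `frozen_steps` controls, here is a simplified NumPy illustration of foreground/background masked attention, under the assumption that the mask is applied only during the first `frozen_steps` denoising steps. It is not the repository's implementation: Peekaboo applies its masks inside the base model's attention layers (see the paper for the exact masks), while this sketch uses a single toy self-attention call, and the function names are hypothetical.

```python
# Simplified illustration (not the repo's code): masked self-attention where
# tokens inside the bbox attend only to each other, gated by a step schedule.
import numpy as np


def masked_attention(q, k, v, q_fg, k_fg, use_mask):
    """Scaled dot-product attention with an optional foreground/background mask.

    q: (Nq, d), k: (Nk, d), v: (Nk, dv); q_fg: (Nq,) and k_fg: (Nk,) boolean arrays
    marking which queries/keys lie inside the bbox. When use_mask is True, each query
    attends only to keys in its own group. Assumes every group has at least one key.
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if use_mask:
        same_group = q_fg[:, None] == k_fg[None, :]
        scores = np.where(same_group, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)  # exp(-inf) == 0, so masked keys get zero weight
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


def mask_schedule(num_inference_steps, frozen_steps):
    """Which denoising steps use the mask (assumed: only the first `frozen_steps`)."""
    return [step < frozen_steps for step in range(num_inference_steps)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tokens = rng.standard_normal((6, 4))  # 6 toy tokens with 4 features each
    inside_bbox = np.array([True, True, False, False, False, False])
    for step, use_mask in enumerate(mask_schedule(num_inference_steps=4, frozen_steps=2)):
        _, w = masked_attention(tokens, tokens, tokens, inside_bbox, inside_bbox, use_mask)
        leak = w[inside_bbox][:, ~inside_bbox].sum()
        print(f"step {step}: mask={use_mask}, fg->bg attention mass={leak:.3f}")
```

In this toy setting, raising `frozen_steps` simply lengthens the phase in which foreground tokens are isolated from the background, which is the knob tip 1 suggests adjusting in either direction.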

## BibTeX

```
@article{jain2023peekaboo,
  title={PEEKABOO: Interactive Video Generation via Masked-Diffusion},
  author={Jain, Yash and Nasery, Anshul and Vineet, Vibhav and Behl, Harkirat},
  journal={arXiv preprint arXiv:2312.07509},
  year={2023}
}
```

## Comments

If you implement Peekaboo in newer text-to-video models, feel free to raise a PR. :smile:

This README is inspired by the [InstructPix2Pix](https://github.com/timothybrooks/instruct-pix2pix) README.