Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/mikewolfd/raprom

- Host: GitHub

- URL: https://github.com/mikewolfd/raprom

- Owner: mikewolfd

- License: mit

- Created: 2018-04-13T14:54:51.000Z (over 6 years ago)

- Default Branch: nvidia-smi

- Last Pushed: 2018-04-14T18:51:01.000Z (over 6 years ago)

- Last Synced: 2024-11-14T18:47:36.971Z (about 2 months ago)

- Size: 1.36 MB

- Stars: 0

- Watchers: 2

- Forks: 0

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

dockprom
========

A monitoring solution for Docker hosts and containers with [Prometheus](https://prometheus.io/), [Grafana](http://grafana.org/), [cAdvisor](https://github.com/google/cadvisor), [NodeExporter](https://github.com/prometheus/node_exporter) and alerting with [AlertManager](https://github.com/prometheus/alertmanager).

***If you're looking for the Docker Swarm version please go to [stefanprodan/swarmprom](https://github.com/stefanprodan/swarmprom)***

## Install

Clone this repository on your Docker host, `cd` into the `dockprom` directory, and run compose up:

```bash
git clone https://github.com/stefanprodan/dockprom
cd dockprom

ADMIN_USER=admin ADMIN_PASSWORD=admin docker-compose up -d
```

Prerequisites:

* Docker Engine >= 1.13

* Docker Compose >= 1.11

Containers:

* Prometheus (metrics database) `http://<host-ip>:9090`

* AlertManager (alerts management) `http://<host-ip>:9093`

* Grafana (visualize metrics) `http://<host-ip>:3000`

* NodeExporter (host metrics collector)

* cAdvisor (containers metrics collector)

* Caddy (reverse proxy and basic auth provider for prometheus and alertmanager)

## Setup Grafana

Navigate to `http://<host-ip>:3000` and log in with user ***admin*** and password ***admin***. You can change the credentials in the compose file or by supplying the `ADMIN_USER` and `ADMIN_PASSWORD` environment variables on compose up.

Grafana is preconfigured with dashboards and Prometheus as the default data source:

* Name: Prometheus

* Type: Prometheus

* Url: http://prometheus:9090

* Access: proxy

***Docker Host Dashboard***

The Docker Host Dashboard shows key metrics for monitoring the resource usage of your server:

* Server uptime, CPU idle percent, number of CPU cores, available memory, swap and storage

* System load average graph, running and blocked by IO processes graph, interrupts graph

* CPU usage graph by mode (guest, idle, iowait, irq, nice, softirq, steal, system, user)

* Memory usage graph by distribution (used, free, buffers, cached)

* IO usage graph (read Bps, write Bps and IO time)

* Network usage graph by device (inbound Bps, outbound Bps)

* Swap usage and activity graphs

For storage, and particularly the Free Storage graph, you have to specify the fstype in the Grafana graph request.

You can find it in `grafana/dashboards/docker_host.json`, at line 480:

`"expr": "sum(node_filesystem_free{fstype=\"btrfs\"})",`

I work on BTRFS, so I need to change `aufs` to `btrfs`.

You can find the right value for your system by running this query in Prometheus at `http://<host-ip>:9090`:

`node_filesystem_free`

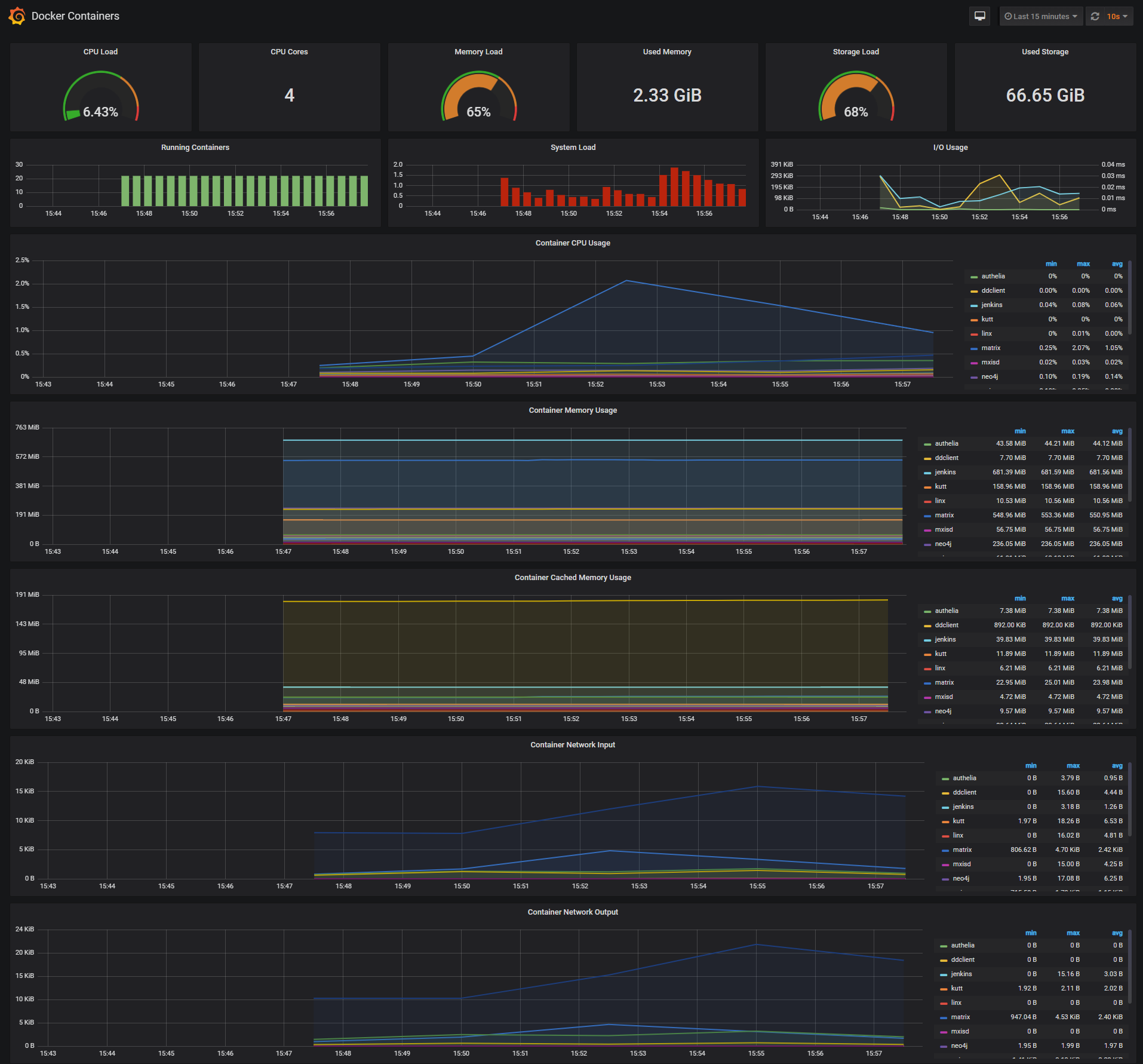

***Docker Containers Dashboard***

The Docker Containers Dashboard shows key metrics for monitoring running containers:

* Total containers CPU load, memory and storage usage

* Running containers graph, system load graph, IO usage graph

* Container CPU usage graph

* Container memory usage graph

* Container cached memory usage graph

* Container network inbound usage graph

* Container network outbound usage graph

Note that this dashboard doesn't show the containers that are part of the monitoring stack.

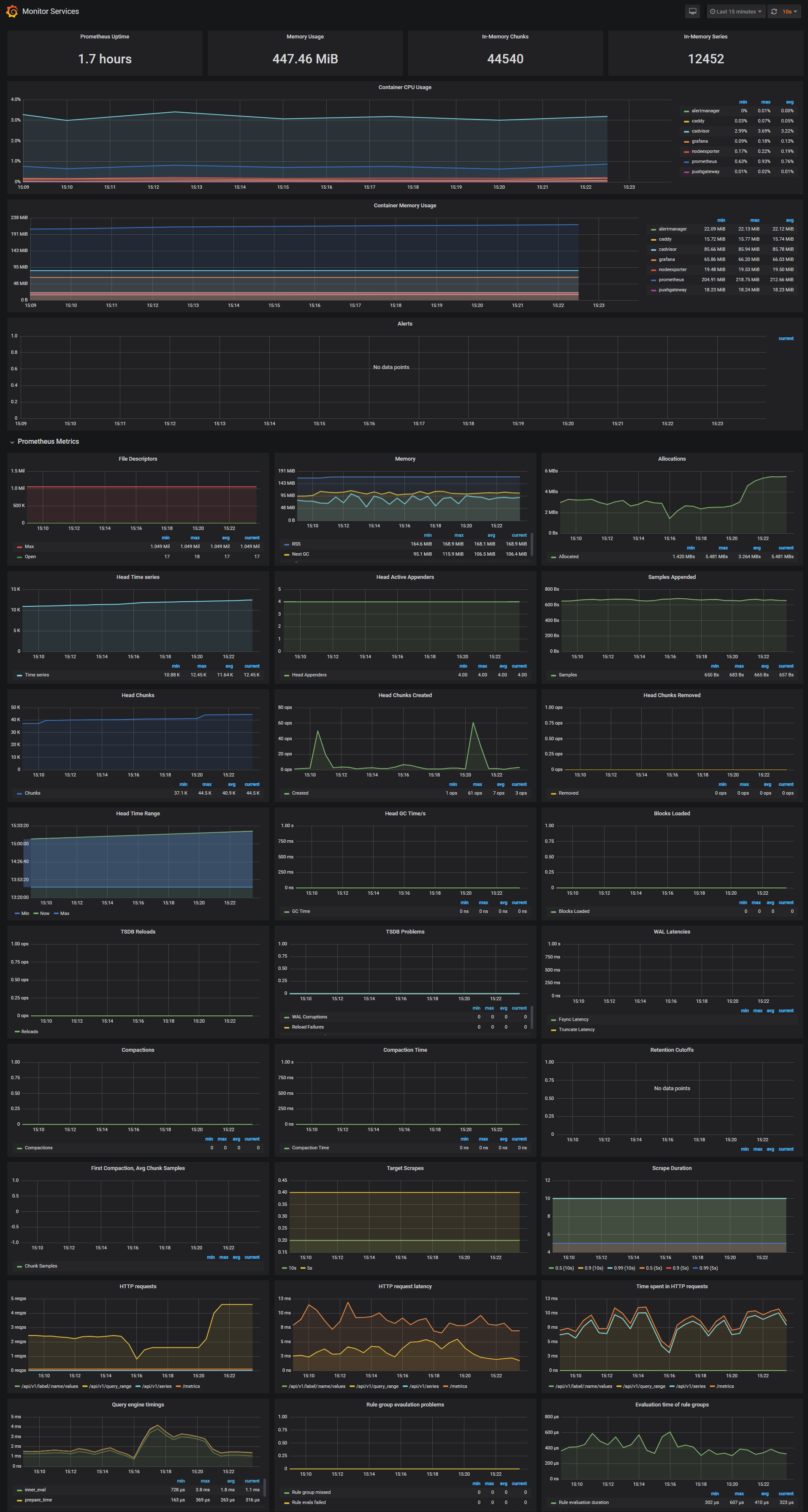

***Monitor Services Dashboard***

The Monitor Services Dashboard shows key metrics for monitoring the containers that make up the monitoring stack:

* Prometheus container uptime, monitoring stack total memory usage, Prometheus local storage memory chunks and series

* Container CPU usage graph

* Container memory usage graph

* Prometheus chunks to persist and persistence urgency graphs

* Prometheus chunks ops and checkpoint duration graphs

* Prometheus samples ingested rate, target scrapes and scrape duration graphs

* Prometheus HTTP requests graph

* Prometheus alerts graph

I've set the Prometheus retention period to 200h and the heap size to 1GB; you can change these values in the compose file.

```yaml
prometheus:
  image: prom/prometheus
  command:
    - '-storage.local.target-heap-size=1073741824'
    - '-storage.local.retention=200h'
```

Make sure you set the heap size to a maximum of 50% of the total physical memory.
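As a rough illustration of the 50% guideline, the sketch below computes a heap-size ceiling in bytes from a memory total given in kB. It assumes a Linux host where `/proc/meminfo` reports `MemTotal` in kB; the function name `heap_ceiling_bytes` is hypothetical and not part of dockprom.

```bash
# Hypothetical helper: return 50% of physical memory, in bytes.
# Argument: total memory in kB (the unit used by /proc/meminfo).
heap_ceiling_bytes() {
  mem_total_kb=$1
  echo $(( mem_total_kb * 1024 / 2 ))
}

# Example with a fixed value: a host with 2 GiB (2097152 kB) of RAM.
heap_ceiling_bytes 2097152   # prints 1073741824

# On a Linux host the real total could be read like this (assumption):
#   heap_ceiling_bytes "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
```

The result is an upper bound for the value passed to `-storage.local.target-heap-size`; a smaller value is fine.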

## Define alerts

I've set up three alert configuration files:

* Monitoring services alerts [targets.rules](https://github.com/stefanprodan/dockprom/blob/master/prometheus/targets.rules)

* Docker Host alerts [host.rules](https://github.com/stefanprodan/dockprom/blob/master/prometheus/host.rules)

* Docker Containers alerts [containers.rules](https://github.com/stefanprodan/dockprom/blob/master/prometheus/containers.rules)

You can modify the alert rules and reload them by making an HTTP POST call to Prometheus:

```
curl -X POST http://admin:admin@<host-ip>:9090/-/reload
```
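If you reload often, the call can be wrapped in a small helper. This is only a sketch: the function names `reload_url` and `reload_prometheus` are hypothetical, the default `admin`/`admin` credentials are the ones from the install step, and `192.0.2.10` is a placeholder address.

```bash
# Hypothetical helper: build the reload URL for a given host.
# Arguments: host, then optional user and password (default admin/admin).
reload_url() {
  host=$1
  user=${2:-admin}
  pass=${3:-admin}
  printf 'http://%s:%s@%s:9090/-/reload\n' "$user" "$pass" "$host"
}

# Hypothetical helper: POST to the reload endpoint.
# -f makes curl exit non-zero on an HTTP error, -sS hides progress but keeps errors.
reload_prometheus() {
  curl -fsS -X POST "$(reload_url "$@")"
}

# Show the URL that would be called (no network access needed):
reload_url 192.0.2.10   # prints http://admin:admin@192.0.2.10:9090/-/reload
```

Calling `reload_prometheus 192.0.2.10` would then issue the same request as the `curl` command above.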

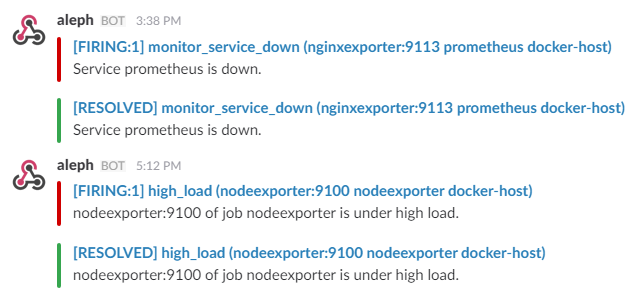

***Monitoring services alerts***

Trigger an alert if any of the monitoring targets (node-exporter and cAdvisor) are down for more than 30 seconds:

```yaml
ALERT monitor_service_down
  IF up == 0
  FOR 30s
  LABELS { severity = "critical" }
  ANNOTATIONS {
      summary = "Monitor service non-operational",
      description = "{{ $labels.instance }} service is down.",
  }
```

***Docker Host alerts***

Trigger an alert if the Docker host CPU is under high load for more than 30 seconds:

```yaml
ALERT high_cpu_load
  IF node_load1 > 1.5
  FOR 30s
  LABELS { severity = "warning" }
  ANNOTATIONS {
      summary = "Server under high load",
      description = "Docker host is under high load, the avg load 1m is at {{ $value}}. Reported by instance {{ $labels.instance }} of job {{ $labels.job }}.",
  }
```

Modify the load threshold based on your CPU cores.
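One possible way to pick a value is to scale the threshold with the number of cores. The sketch below assumes a rule of thumb of `cores * factor`; the default factor of 0.75 and the function name `load_threshold` are my assumptions, not something the rule files prescribe.

```bash
# Hypothetical rule of thumb: threshold = cores * factor (factor defaults to 0.75).
# awk is used because POSIX shell arithmetic is integer-only.
load_threshold() {
  cores=$1
  factor=${2:-0.75}
  awk -v c="$cores" -v f="$factor" 'BEGIN { printf "%.2f\n", c * f }'
}

load_threshold 2      # prints 1.50
load_threshold 8 1    # prints 8.00

# On a Linux host the core count could come from nproc (assumption):
#   load_threshold "$(nproc)"
```

The printed number would replace `1.5` in the `node_load1 > 1.5` expression.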

Trigger an alert if the Docker host memory is almost full:

```yaml
ALERT high_memory_load
  IF (sum(node_memory_MemTotal) - sum(node_memory_MemFree + node_memory_Buffers + node_memory_Cached) ) / sum(node_memory_MemTotal) * 100 > 85
  FOR 30s
  LABELS { severity = "warning" }
  ANNOTATIONS {
      summary = "Server memory is almost full",
      description = "Docker host memory usage is {{ humanize $value}}%. Reported by instance {{ $labels.instance }} of job {{ $labels.job }}.",
  }
```
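To see how the memory expression behaves, the same arithmetic can be reproduced outside Prometheus. This sketch takes total, free, buffers and cached memory in any common unit and returns the used percentage; the function name `mem_used_percent` and the sample numbers are hypothetical.

```bash
# Mirrors the alert expression: (total - (free + buffers + cached)) / total * 100
# Arguments: total, free, buffers, cached (all in the same unit).
mem_used_percent() {
  awk -v t="$1" -v f="$2" -v b="$3" -v c="$4" \
    'BEGIN { printf "%.1f\n", (t - (f + b + c)) / t * 100 }'
}

# 8000 MB total, 500 free, 200 buffers, 300 cached:
mem_used_percent 8000 500 200 300   # prints 87.5
```

With these sample values the result is above 85, so the alert would fire once the condition had held for 30 seconds.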

Trigger an alert if the Docker host storage is almost full:

```yaml
ALERT hight_storage_load
  IF (node_filesystem_size{fstype="aufs"} - node_filesystem_free{fstype="aufs"}) / node_filesystem_size{fstype="aufs"} * 100 > 85
  FOR 30s
  LABELS { severity = "warning" }
  ANNOTATIONS {
      summary = "Server storage is almost full",
      description = "Docker host storage usage is {{ humanize $value}}%. Reported by instance {{ $labels.instance }} of job {{ $labels.job }}.",
  }
```

***Docker Containers alerts***

Trigger an alert if a container is down for more than 30 seconds:

```yaml
ALERT jenkins_down
  IF absent(container_memory_usage_bytes{name="jenkins"})
  FOR 30s
  LABELS { severity = "critical" }
  ANNOTATIONS {
      summary= "Jenkins down",
      description= "Jenkins container is down for more than 30 seconds."
  }
```

Trigger an alert if a container is using more than 10% of total CPU cores for more than 30 seconds:

```yaml
ALERT jenkins_high_cpu
  IF sum(rate(container_cpu_usage_seconds_total{name="jenkins"}[1m])) / count(node_cpu{mode="system"}) * 100 > 10
  FOR 30s
  LABELS { severity = "warning" }
  ANNOTATIONS {
      summary= "Jenkins high CPU usage",
      description= "Jenkins CPU usage is {{ humanize $value}}%."
  }
```

Trigger an alert if a container is using more than 1.2GB of RAM for more than 30 seconds:

```yaml
ALERT jenkins_high_memory
  IF sum(container_memory_usage_bytes{name="jenkins"}) > 1200000000
  FOR 30s
  LABELS { severity = "warning" }
  ANNOTATIONS {
      summary = "Jenkins high memory usage",
      description = "Jenkins memory consumption is at {{ humanize $value}}.",
  }
```

## Setup alerting

The AlertManager service is responsible for handling alerts sent by the Prometheus server.

AlertManager can send notifications via email, Pushover, Slack, HipChat or any other system that exposes a webhook interface.

A complete list of integrations can be found [here](https://prometheus.io/docs/alerting/configuration).

You can view and silence notifications by accessing `http://<host-ip>:9093`.

The notification receivers can be configured in the [alertmanager/config.yml](https://github.com/stefanprodan/dockprom/blob/master/alertmanager/config.yml) file.

To receive alerts via Slack you need to make a custom integration by choosing ***incoming web hooks*** in your Slack team app page.

You can find more details on setting up Slack integration [here](http://www.robustperception.io/using-slack-with-the-alertmanager/).

Copy the Slack Webhook URL into the ***api_url*** field and specify a Slack ***channel***.

```yaml
route:
    receiver: 'slack'

receivers:
    - name: 'slack'
      slack_configs:
          - send_resolved: true
            text: "{{ .CommonAnnotations.description }}"
            username: 'Prometheus'
            channel: '#'
            api_url: 'https://hooks.slack.com/services/'
```
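Before relying on AlertManager, it can help to check that the webhook itself accepts messages. The sketch below posts a plain text message to a Slack incoming webhook with `curl`. The function names `slack_payload` and `send_slack_test` are hypothetical, and the quoting is deliberately naive: it assumes the message contains no double quotes or backslashes.

```bash
# Hypothetical helper: build a minimal JSON payload for a Slack incoming webhook.
# Naive quoting: the message must not contain double quotes or backslashes.
slack_payload() {
  printf '{"text": "%s"}\n' "$1"
}

# Hypothetical helper: send a test message.
# Arguments: webhook URL, message text.
send_slack_test() {
  webhook_url=$1
  curl -fsS -X POST -H 'Content-Type: application/json' \
    --data "$(slack_payload "$2")" "$webhook_url"
}

# Show the payload that would be sent (no network access needed):
slack_payload 'test alert'   # prints {"text": "test alert"}
```

To send a real message, call `send_slack_test` with the same webhook URL you put in the ***api_url*** field.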