https://github.com/monk1337/nanopeft

A simple, neat implementation of different LoRA methods for training/fine-tuning Transformer-based models (e.g., BERT, GPTs). [ Research purpose ]

huggingface llama llm lora low-rank-adaptation mistral peft qlora quantization

Last synced: 7 months ago

- Host: GitHub

- URL: https://github.com/monk1337/nanopeft

- Owner: monk1337

- License: apache-2.0

- Created: 2024-03-07T18:22:25.000Z (over 1 year ago)

- Default Branch: main

- Last Pushed: 2024-04-02T13:23:58.000Z (over 1 year ago)

- Last Synced: 2025-03-07T02:35:29.579Z (7 months ago)

- Topics: huggingface, llama, llm, lora, low-rank-adaptation, mistral, peft, qlora, quantization

- Language: Jupyter Notebook

- Homepage:

- Size: 68.4 KB

- Stars: 6

- Watchers: 1

- Forks: 2

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

README

NanoPeft

A simple, neat implementation of different LoRA methods for training/fine-tuning Transformer-based models (e.g., BERT, GPTs).

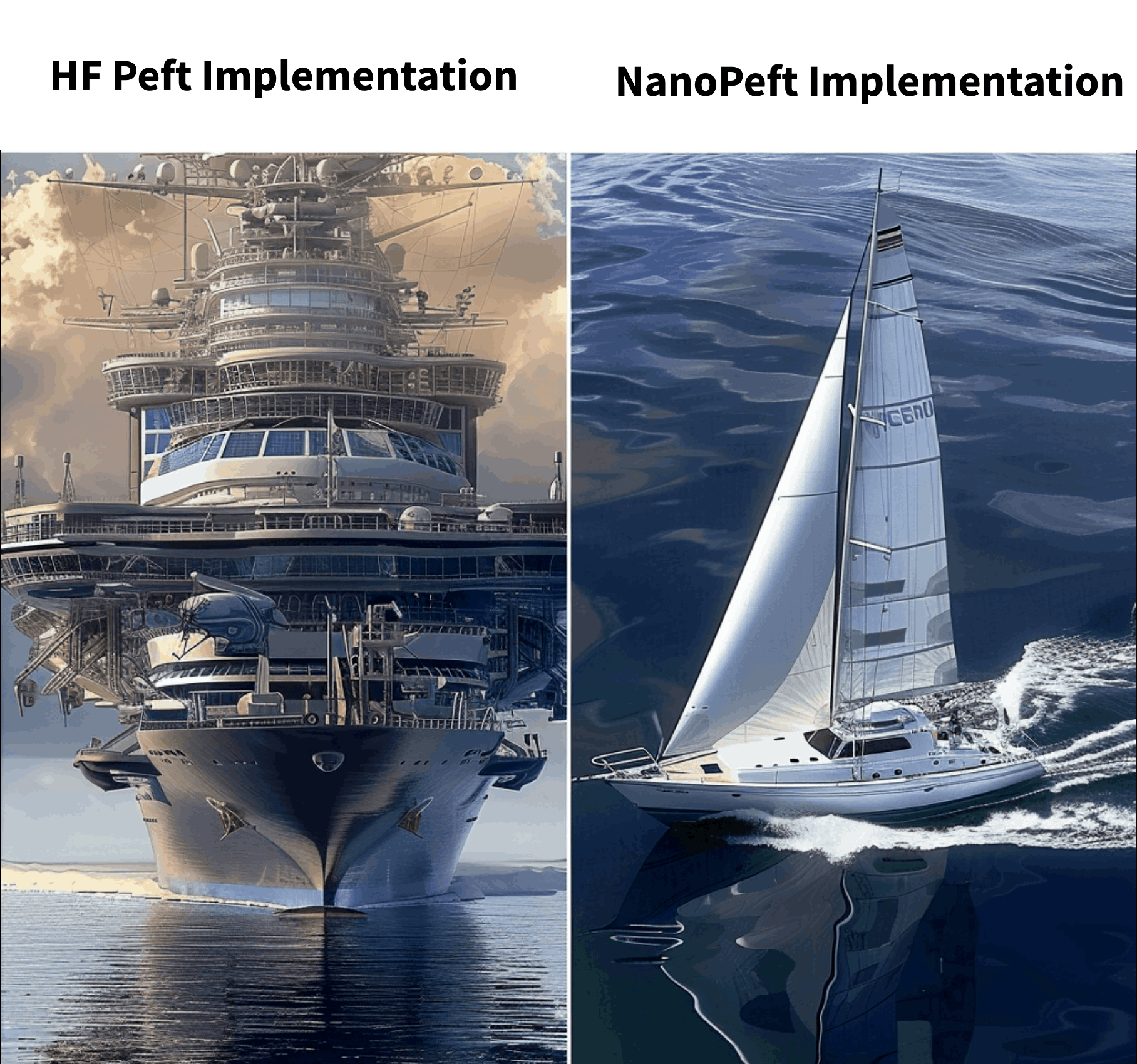

# Why NanoPeft?

- PEFT and LitGit are great libraries. However, hacking the Hugging Face PEFT (Parameter-Efficient Fine-Tuning) or LitGit packages to quickly integrate and benchmark a new LoRA method takes a lot of work.

- The code is kept deliberately simple, so it is easy to hack: add new LoRA methods from papers in the `layers/` directory and fine-tune as needed.

- This is mostly for experimental/research purposes, not for scalable solutions.
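
To give a sense of what a LoRA method boils down to, here is a minimal, dependency-free sketch of the standard LoRA update, where the output is `W x + (alpha / r) * B (A x)` with `W` frozen and only `A` and `B` trained. This is not NanoPeft's actual code or API: the class name `LoRALinearSketch` and its parameters are hypothetical, and a real layer would be written in PyTorch rather than with plain lists.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


class LoRALinearSketch:
    """Hypothetical illustration: frozen weight W plus a low-rank update B @ A."""

    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen pretrained weight, shape (d_out, d_in)
        # A gets a small random init and B starts at zero, so the
        # low-rank update contributes nothing before training.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)                    # W x
        delta = matvec(self.B, matvec(self.A, x))   # B (A x)
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A (r x d_in) and B (d_out x r) would be trained.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinearSketch(W, r=2)
y = layer.forward([1.0, 2.0, 3.0])
```

Because `B` is initialized to zero, `y` equals the output of the frozen layer alone until training updates `A` and `B`. Most LoRA variants change how this update is parameterized, initialized, or scaled.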

## Installation

### With pip

Install NanoPeft directly from GitHub with pip:

```bash
pip3 install git+https://github.com/monk1337/NanoPeft.git
```