https://github.com/neelr/favicon-diffusion

run diffusion models in the browser! webgpu hippo diffusion kernels in ~2k lines of vanilla js


- Host: GitHub

- URL: https://github.com/neelr/favicon-diffusion

- Owner: neelr

- License: mit

- Created: 2025-02-14T03:57:09.000Z (3 months ago)

- Default Branch: main

- Last Pushed: 2025-02-18T23:11:11.000Z (3 months ago)

- Last Synced: 2025-02-19T00:21:24.143Z (3 months ago)

- Language: Jupyter Notebook

- Homepage:

- Size: 6.16 MB

- Stars: 0

- Watchers: 1

- Forks: 0

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

# favicon diffusor: a high-performance browser diffusion transformer

Ever wanted fast diffusion on device? Struggled with compatibility and libraries? Worry no more: favicon diffusor is here! Using WebGPU, it's supported on almost any device (that can run Chrome) and can diffuse hippos anywhere (even as a favicon)!

A quick weekend project where I hacked on a bunch of WebGPU kernels from scratch and tried to optimize them. Building on my [last "from scratch DiT"](https://github.com/neelr/scratche-dit), this starts at the kernel level and rewrites diffusion transformers using WGSL. A subsecond 32-step diffusion inference time allows for an awesome demo of actually _diffusing the favicon of a website in real time_ in ~0.7s with a ~11M parameter model.

https://notebook.neelr.dev/stories/in-browser-favicon-diffusion-scratch-dit-pt-2

## perf

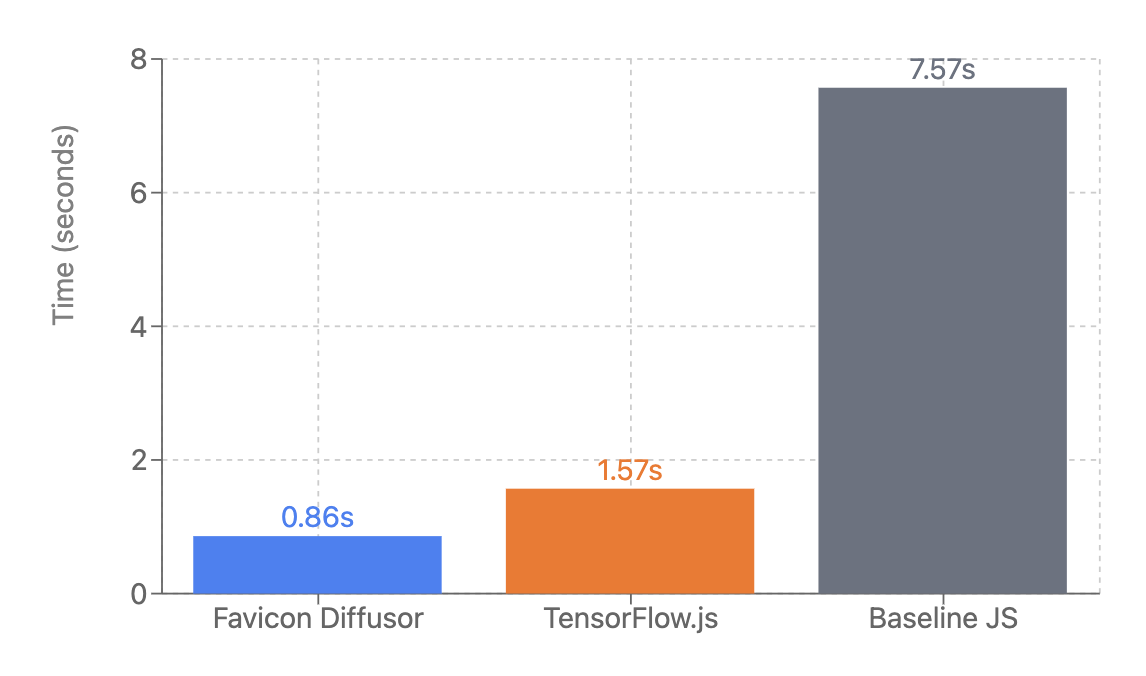

Of course... here are the approximate numbers on an M1 Pro! Currently faster than tf.js and (of course) baseline JS. transformers.js doesn't support custom layer building, so I didn't include it.

| Implementation | Time (s) | vs Baseline | vs TensorFlow.js |
|----------------|-----------|-------------|------------------|
| Favicon Diffusor | 0.86 | 88.6% faster | 45.2% faster |
| TensorFlow.js | 1.57 | 79.3% faster | baseline |
| Baseline JS | 7.57 | baseline | 382% slower |
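
For anyone re-running the benchmark, here is a small sketch of how the percentage columns in the table above can be derived from the raw times. The helper names (`percentVs`, `describe`) are made up for this example and are not part of the repo; the formula is simply the relative change in runtime against whichever row is treated as the reference.

```javascript
// Wall-clock times in seconds, copied from the table above.
const timings = {
  "Favicon Diffusor": 0.86,
  "TensorFlow.js": 1.57,
  "Baseline JS": 7.57,
};

// Relative change in runtime against a reference time, in percent.
// Negative means faster than the reference, positive means slower.
function percentVs(time, reference) {
  return ((time - reference) / reference) * 100;
}

// Formats the relative change the same way the table does.
function describe(time, reference) {
  const pct = percentVs(time, reference);
  if (pct === 0) return "baseline";
  const rounded = Math.abs(pct).toFixed(1);
  return pct < 0 ? `${rounded}% faster` : `${rounded}% slower`;
}

for (const [name, time] of Object.entries(timings)) {
  const vsBaseline = describe(time, timings["Baseline JS"]);
  const vsTf = describe(time, timings["TensorFlow.js"]);
  console.log(`${name}: ${vsBaseline} vs baseline JS, ${vsTf} vs TensorFlow.js`);
}
```

With the numbers above this reproduces the 88.6%, 45.2% and 79.3% figures, and gives roughly 382% slower for baseline JS against TensorFlow.js.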

## tech

The implementation includes several key optimizations:

- Custom WGSL shaders for core operations

- Efficient memory management and tensor operations

- Optimized attention mechanisms

- Streamlined data pipelining
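
To give a feel for what an "optimized attention mechanism" can look like, here is a rough CPU sketch in plain JS of the online-softmax trick that FlashAttention-style kernels are built around. This is not the WGSL kernel from this repo: the function names, the single-query setup, and the `blockSize` parameter are all assumptions made for illustration. The idea is to walk over keys and values in blocks while keeping a running max, a running denominator, and an unnormalized output, so the full score row never needs to be stored.

```javascript
function dot(a, b) {
  let s = 0;
  for (let i = 0; i < a.length; i++) s += a[i] * b[i];
  return s;
}

// Reference: softmax(q . K^T / sqrt(d)) . V for a single query vector.
function attentionNaive(q, K, V) {
  const scale = 1 / Math.sqrt(q.length);
  const scores = K.map((k) => dot(q, k) * scale);
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  const out = new Array(V[0].length).fill(0);
  exps.forEach((e, j) => {
    for (let c = 0; c < out.length; c++) out[c] += (e / sum) * V[j][c];
  });
  return out;
}

// Same result, computed block by block with online softmax.
// blockSize is a hypothetical tile size; a GPU kernel would pick it
// to fit workgroup memory.
function attentionOnline(q, K, V, blockSize = 2) {
  const scale = 1 / Math.sqrt(q.length);
  let runningMax = -Infinity; // largest score seen so far
  let denom = 0;              // sum of exp(score - runningMax) so far
  const acc = new Array(V[0].length).fill(0); // unnormalized output

  for (let start = 0; start < K.length; start += blockSize) {
    const end = Math.min(start + blockSize, K.length);
    const scores = [];
    for (let j = start; j < end; j++) scores.push(dot(q, K[j]) * scale);

    const newMax = Math.max(runningMax, ...scores);
    // Rescale everything accumulated under the old max.
    // On the first block this is exp(-Infinity) = 0, which is harmless
    // because denom and acc are still zero.
    const correction = Math.exp(runningMax - newMax);
    denom *= correction;
    for (let c = 0; c < acc.length; c++) acc[c] *= correction;

    scores.forEach((s, i) => {
      const p = Math.exp(s - newMax);
      denom += p;
      const v = V[start + i];
      for (let c = 0; c < acc.length; c++) acc[c] += p * v[c];
    });
    runningMax = newMax;
  }
  return acc.map((x) => x / denom);
}

// Tiny usage example with made-up numbers.
const q = [0.5, -1.0, 2.0];
const K = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1], [-1, 2, 0.5]];
const V = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]];
console.log(attentionNaive(q, K, V), attentionOnline(q, K, V));
```

Both functions should agree up to floating-point error. The blockwise version is the shape that maps onto a tiled GPU kernel.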

## getting started

- you need a browser with WebGPU support (Chrome Canary or other modern browsers with WebGPU flags enabled)
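
If you're not sure whether your browser qualifies, a quick check along these lines can help. It is a minimal sketch: `hasWebGPU` and `detectWebGPU` are names invented for this example (they are not in `dit.js`), and the only WebGPU calls used are the standard `navigator.gpu` entry point and `requestAdapter()`.

```javascript
// True when the navigator-like object exposes the WebGPU entry point.
// The parameter exists so the check can be exercised outside a browser.
function hasWebGPU(nav = globalThis.navigator) {
  return Boolean(nav && nav.gpu && typeof nav.gpu.requestAdapter === "function");
}

// Resolves to { supported, reason } after also asking for an adapter.
async function detectWebGPU(nav = globalThis.navigator) {
  if (!hasWebGPU(nav)) {
    return { supported: false, reason: "navigator.gpu is not available" };
  }
  // requestAdapter() resolves to null when no suitable adapter is found.
  const adapter = await nav.gpu.requestAdapter();
  if (!adapter) {
    return { supported: false, reason: "no GPU adapter was returned" };
  }
  return { supported: true, reason: "" };
}

detectWebGPU().then((result) => {
  console.log(result.supported ? "WebGPU is ready" : `WebGPU unavailable: ${result.reason}`);
});
```

Paste it into the browser console before loading the demo; if it reports that WebGPU is unavailable, try enabling the WebGPU flags mentioned above.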

### installation

1. clone the repository:

```bash
git clone https://github.com/neelr/favicon-diffusion.git
cd favicon-diffusion
```

2. run a development server:

```bash
npx http-server
```

## development

The project structure includes:

- `dit.py` - PyTorch reference implementation

- `dit.js` - JavaScript implementation

- `shaders/` - WebGPU shader implementations

- `train.py` - Training scripts

- `compile.sh` - Compile the shaders into a single file

- Various utility and testing files

Open to contributions! `dit.js` and `shaders/shaders.js` are the only files you really need for the demo and the rest are just for training and testing. Those two combined are only ~2k lines of code.

## TODO

- [x] implement patchify and unpatchify as shaders

- [x] modularize all shaders into separate files

- [x] create benchmarks against relevant other categories

- [x] add transpose matmul optimization

- [x] implement flashattention from scratch

- [ ] implement multi-head attention

- [ ] try implementing a "next scale prediction" VAR https://arxiv.org/abs/2404.02905

- [ ] port over a full stable diffusion checkpoint

- [ ] add text latents + possibly conditioning?

- [ ] create an easy porting script

## resources

- [WebGPU](https://webgpu.org/)

- [Stable Diffusion for Distillation!](https://github.com/CompVis/stable-diffusion)