Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/oaleeapp/swifteyes

An easy way to access OpenCV library from Swift.

- Host: GitHub

- URL: https://github.com/oaleeapp/swifteyes

- Owner: oaleeapp

- License: other

- Created: 2017-02-03T15:32:27.000Z (almost 8 years ago)

- Default Branch: master

- Last Pushed: 2017-03-24T19:15:38.000Z (over 7 years ago)

- Last Synced: 2024-10-11T16:22:48.321Z (about 1 month ago)

- Topics: ios-swift, opencv, swift

- Language: Objective-C++

- Homepage: https://oaleeapp.github.io/SwiftEyes/

- Size: 787 KB

- Stars: 8

- Watchers: 2

- Forks: 1

- Open Issues: 10

Metadata Files:

- Readme: README.md

- License: LICENSE

README

# SwiftEyes

[CI Status](https://travis-ci.org/oaleeapp/SwiftEyes)

[Version](http://cocoapods.org/pods/SwiftEyes)

[License](http://cocoapods.org/pods/SwiftEyes)

[Platform](http://cocoapods.org/pods/SwiftEyes)

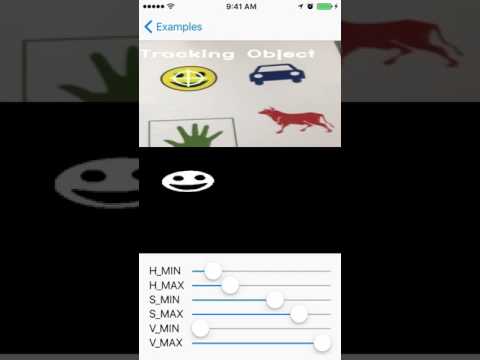

## Example

To run the example project, clone the repo and run `pod install` from the Example directory first.

> If this is the first time you are using [OpenCV-Dynamic](https://github.com/Legoless/OpenCV-Dynamic) as a dependency, the installation may take a while.

## How to Use

Import the framework:

`import SwiftEyes`

The `SEMotionTrackViewController` class in the example project shows the complete setup.

The code below detects moving blobs by comparing each camera frame with the previous one:

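```Swift
// Called for each camera frame. `currentMat`, `previousMat`, `cameraImageView`
// and `displayImageView` are properties of the view controller.
func processImage(_ image: OCVMat!) {
    // Parameters
    let blurSize = 10            // blur kernel size, in pixels
    let sensitivityValue = 20.0  // threshold applied to the blurred difference

    let differenceMat = OCVMat(cgSize: CGSize(width: image.size.width, height: image.size.height), type: image.type, channels: image.channels)

    // Convert from BGRA to RGBA (in place)
    OCVOperation.convertColor(fromSource: image, toDestination: image, with: .typeBGRA2RGBA)

    // Keep a copy of the current frame
    currentMat = image.clone()

    // Convert the current frame from RGBA to grayscale
    OCVOperation.convertColor(fromSource: currentMat, toDestination: currentMat, with: .typeRGBA2GRAY)

    if previousMat != nil {
        // Pixel-wise absolute difference between this frame and the previous one
        OCVOperation.absoluteDifference(onFirstSource: currentMat, andSecondSource: previousMat, toDestination: differenceMat)
        // Smooth the difference to suppress sensor noise
        OCVOperation.blur(onSource: differenceMat, toDestination: differenceMat, with: OCVSize(width: blurSize, height: blurSize))
        // Pixels above the sensitivity become white (255), all others black (0)
        OCVOperation.threshold(onSource: differenceMat, toDestination: differenceMat, withThresh: sensitivityValue, withMaxValue: 255.0, with: .binary)
    }

    // Update the UI on the main thread. processImage must not itself run on
    // the main thread, otherwise DispatchQueue.main.sync deadlocks.
    DispatchQueue.main.sync {
        cameraImageView.image = image.image()
        displayImageView.image = differenceMat.image()
    }

    // Use the previous mat (frame) to compare with the next mat (frame)
    previousMat = currentMat
}
```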
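The OpenCV calls above implement frame differencing: take the absolute difference between two consecutive grayscale frames, blur it, then apply a binary threshold so that only regions that changed noticeably remain white. The following is a minimal pure-Swift sketch of that same pipeline on 8-bit grayscale buffers, meant only to show what each step computes. It is not part of the SwiftEyes API: `GrayFrame`, `MotionDetector` and the helper functions are hypothetical names, and the input is assumed to be already grayscale (as after `.typeRGBA2GRAY`). The blur here averages only in-bounds neighbours at the edges and truncates instead of rounding, so values near the image border can differ from OpenCV's `blur`.

```swift
// Hypothetical pure-Swift sketch of frame-differencing motion detection.
// Frames are 8-bit grayscale images stored row-major in [UInt8].
struct GrayFrame {
    let width: Int
    let height: Int
    var pixels: [UInt8]

    init(width: Int, height: Int, pixels: [UInt8]) {
        precondition(pixels.count == width * height, "pixel count must equal width * height")
        self.width = width
        self.height = height
        self.pixels = pixels
    }
}

/// Per-pixel |a - b|, the same operation as OpenCV's absdiff.
func absoluteDifference(_ a: GrayFrame, _ b: GrayFrame) -> GrayFrame {
    precondition(a.width == b.width && a.height == b.height, "frames must have the same size")
    let diff = zip(a.pixels, b.pixels).map { pair in UInt8(abs(Int(pair.0) - Int(pair.1))) }
    return GrayFrame(width: a.width, height: a.height, pixels: diff)
}

/// Box blur with a size x size kernel anchored at its centre.
/// Edge pixels average only the neighbours that lie inside the image.
func boxBlur(_ frame: GrayFrame, size: Int) -> GrayFrame {
    precondition(size >= 1, "kernel size must be positive")
    let lo = -(size / 2)
    let hi = lo + size - 1
    var out = [UInt8](repeating: 0, count: frame.pixels.count)
    for y in 0..<frame.height {
        for x in 0..<frame.width {
            var sum = 0
            var count = 0
            for dy in lo...hi {
                let yy = y + dy
                guard yy >= 0 && yy < frame.height else { continue }
                for dx in lo...hi {
                    let xx = x + dx
                    guard xx >= 0 && xx < frame.width else { continue }
                    sum += Int(frame.pixels[yy * frame.width + xx])
                    count += 1
                }
            }
            out[y * frame.width + x] = UInt8(sum / count) // integer mean (truncated)
        }
    }
    return GrayFrame(width: frame.width, height: frame.height, pixels: out)
}

/// Binary threshold: maxValue where pixel > thresh, otherwise 0.
func threshold(_ frame: GrayFrame, thresh: Double, maxValue: UInt8) -> GrayFrame {
    let mask = frame.pixels.map { Double($0) > thresh ? maxValue : 0 }
    return GrayFrame(width: frame.width, height: frame.height, pixels: mask)
}

/// Keeps the previous frame between calls, like `previousMat` in the example.
final class MotionDetector {
    let blurSize: Int
    let sensitivity: Double
    private var previous: GrayFrame?

    init(blurSize: Int = 10, sensitivity: Double = 20.0) {
        self.blurSize = blurSize
        self.sensitivity = sensitivity
    }

    /// Returns a binary motion mask, or nil for the very first frame.
    func process(_ current: GrayFrame) -> GrayFrame? {
        guard let last = previous else {
            previous = current
            return nil
        }
        previous = current
        let diff = absoluteDifference(current, last)
        let blurred = boxBlur(diff, size: blurSize)
        return threshold(blurred, thresh: sensitivity, maxValue: 255)
    }
}
```

Feeding two 3x1 frames through `MotionDetector(blurSize: 1)` (where the blur is a no-op) gives a difference of `[0, 90, 5]` and therefore a mask of `[0, 255, 0]`: only the pixel that changed by more than the sensitivity is marked as motion. Larger blur sizes merge nearby changed pixels into blobs and drop isolated noisy pixels.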

## Videos

- [Video 1](https://youtu.be/i3xDONms4u4)
- [Video 2](https://youtu.be/EDMr6cGkV0Y)

## Requirements

If you haven't installed CocoaPods yet, see the [CocoaPods website](https://cocoapods.org/) for installation instructions.

Before running `pod install`, make sure `cmake` is installed as well.

You can install it with [Homebrew](https://brew.sh/):

```shell
brew install cmake
```

## Installation

SwiftEyes is available through [CocoaPods](http://cocoapods.org). To install it, add the following line to your Podfile:

```ruby

pod "SwiftEyes"

```

## Author

Victor Lee, [email protected]

## License

SwiftEyes is available under the BSD license. Please respect the OpenCV license as well.