https://github.com/openai/openai-agents-python

A lightweight, powerful framework for multi-agent workflows


agents ai framework llm openai python

Last synced: 1 day ago


- Host: GitHub

- URL: https://github.com/openai/openai-agents-python

- Owner: openai

- License: mit

- Created: 2025-03-11T03:42:36.000Z (12 months ago)

- Default Branch: main

- Last Pushed: 2025-04-29T16:30:55.000Z (10 months ago)

- Last Synced: 2025-04-29T17:31:39.123Z (10 months ago)

- Topics: agents, ai, framework, llm, openai, python

- Language: Python

- Homepage: https://openai.github.io/openai-agents-python/

- Size: 4.47 MB

- Stars: 9,631

- Watchers: 132

- Forks: 1,268

- Open Issues: 149


Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome-ai-agents - OpenAI Agents (Python) - Lightweight, provider-agnostic agent framework (🚀 Specialized Agents / 🗣️ Programming Language Agents)

- awesome-ChatGPT-repositories - openai-agents-python - A lightweight, powerful framework for multi-agent workflows (NLP)

- Awesome-Vibe-Coding - OpenAI Agents SDK

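- StarryDivineSky - openai/openai-agents-python - agents-python is a lightweight yet powerful framework for multi-agent workflows. It aims to simplify building complex multi-agent systems, letting developers easily create and coordinate interactions among multiple agents. The framework's core strengths are its flexibility and extensibility, which let it adapt to a wide range of application scenarios. With it, developers can define agents' roles, goals, and behaviors, and design the communication protocols between them. The project provides a rich set of tools and examples to help developers get started quickly and build their own multi-agent applications. It supports various kinds of agents, including language-model-based agents and rule-based agents. The framework also offers powerful debugging and monitoring features that help developers diagnose and optimize the performance of agent systems. In short, openai/openai-agents-python gives developers an efficient, easy-to-use platform for building and deploying complex multi-agent systems. (A01_Text Generation_Text Dialogue / Large Language Dialogue Models and Data)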

- awesome-generative-ai-data-scientist - OpenAI Agents - agent workflows. [GitHub](https://github.com/openai/openai-agents-python) (LLM Providers)

- awesome-local-llm - openai-agents-python - a lightweight, powerful framework for multi-agent workflows (Tools / Agent Frameworks)

- AiTreasureBox - openai/openai-agents-python - A lightweight, powerful framework for multi-agent workflows (Repos)

- awesome-hacking-lists - openai/openai-agents-python - A lightweight, powerful framework for multi-agent workflows (Python)

- awesome-data-analysis - openai-agents-python - Official OpenAI framework for building AI agents. (🧠 AI Applications & Platforms / Tools)

- awesome-ai-agents - openai/openai-agents-python - The OpenAI Agents SDK is a lightweight and powerful framework for building, orchestrating, and tracing multi-agent workflows using large language models with configurable instructions, tools, and safety features. (AI Agent Frameworks & SDKs / Multi-Agent Collaboration Systems)

- Awesome-LLMOps - OpenAI Agents SDK - agent workflows. (Orchestration / Agent Framework)

- awesome-production-genai - OpenAI Agents SDK - The OpenAI Agents SDK is a lightweight yet powerful framework for building multi-agent workflows. It is provider-agnostic, supporting the OpenAI Responses and Chat Completions APIs, as well as 100+ other LLMs. (Agent Development)

- awesome - openai/openai-agents-python - A lightweight, powerful framework for multi-agent workflows (Python)

README

# OpenAI Agents SDK

The OpenAI Agents SDK is a lightweight yet powerful framework for building multi-agent workflows. It is provider-agnostic, supporting the OpenAI Responses and Chat Completions APIs, as well as 100+ other LLMs.

> [!NOTE]
> Looking for the JavaScript/TypeScript version? Check out [Agents SDK JS/TS](https://github.com/openai/openai-agents-js).

### Core concepts:

1. [**Agents**](https://openai.github.io/openai-agents-python/agents): LLMs configured with instructions, tools, guardrails, and handoffs

2. [**Handoffs**](https://openai.github.io/openai-agents-python/handoffs/): A specialized tool call used by the Agents SDK for transferring control between agents

3. [**Guardrails**](https://openai.github.io/openai-agents-python/guardrails/): Configurable safety checks for input and output validation

4. [**Sessions**](#sessions): Automatic conversation history management across agent runs

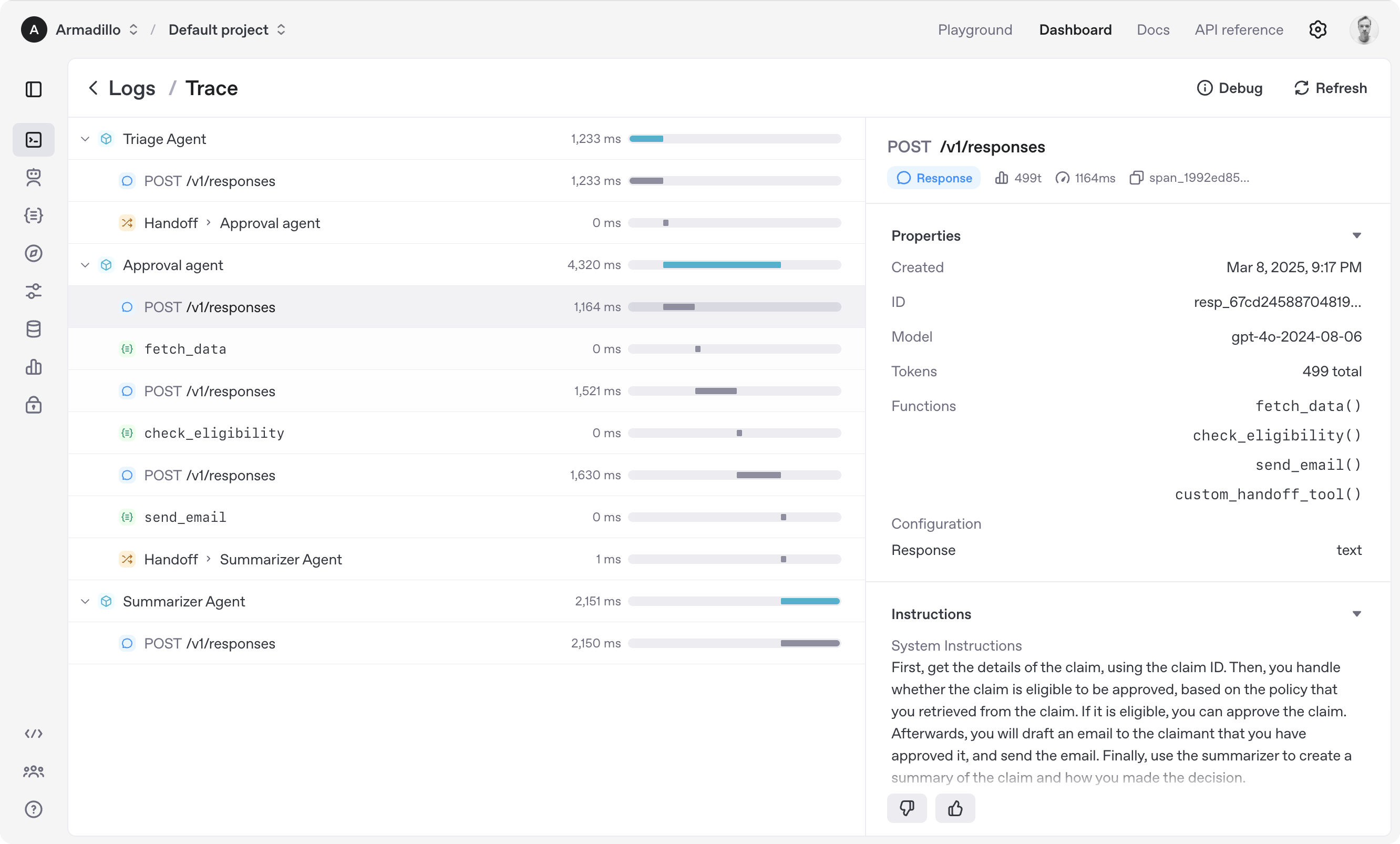

5. [**Tracing**](https://openai.github.io/openai-agents-python/tracing/): Built-in tracking of agent runs, allowing you to view, debug and optimize your workflows
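To make the guardrail idea concrete, here is a toy input guardrail in plain Python: a check runs on the user's input and, if its "tripwire" is triggered, the run stops before the agent answers. This is a sketch under stated assumptions, not the SDK's guardrail API; `GuardrailResult`, `GuardrailTripped`, `homework_guardrail`, and `run_with_guardrails` are hypothetical names (see the Guardrails documentation for the real classes).

```python
from dataclasses import dataclass


@dataclass
class GuardrailResult:
    """Hypothetical guardrail verdict: a triggered tripwire means "stop the run"."""
    tripwire_triggered: bool
    reason: str = ""


class GuardrailTripped(Exception):
    """Hypothetical exception raised when an input guardrail rejects a message."""


def homework_guardrail(user_input: str) -> GuardrailResult:
    # A real guardrail might ask a fast, cheap model; this toy uses a keyword check
    if "solve my homework" in user_input.lower():
        return GuardrailResult(tripwire_triggered=True, reason="homework request")
    return GuardrailResult(tripwire_triggered=False)


def run_with_guardrails(user_input: str, guardrails: list) -> str:
    # Check every input guardrail before the (simulated) agent is allowed to answer
    for check in guardrails:
        result = check(user_input)
        if result.tripwire_triggered:
            raise GuardrailTripped(result.reason)
    return f"Agent answers: {user_input}"


print(run_with_guardrails("What is a haiku?", [homework_guardrail]))
try:
    run_with_guardrails("Please solve my homework", [homework_guardrail])
except GuardrailTripped as exc:
    print(f"Blocked: {exc}")
```

Raising an exception (rather than returning a flag) means a tripped guardrail cannot be silently ignored by the caller.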

Explore the [examples](examples) directory to see the SDK in action, and read our [documentation](https://openai.github.io/openai-agents-python/) for more details.

## Get started

To get started, set up your Python environment (Python 3.10 or newer required), and then install the OpenAI Agents SDK package.

### venv

```bash
python -m venv .venv
source .venv/bin/activate  # On Windows: .venv\Scripts\activate
pip install openai-agents
```

For voice support, install with the optional `voice` group: `pip install 'openai-agents[voice]'`.

For Redis session support, install with the optional `redis` group: `pip install 'openai-agents[redis]'`.

### uv

If you use [uv](https://docs.astral.sh/uv/), installing the package is even simpler:

```bash
uv init
uv add openai-agents
```

For voice support, install with the optional `voice` group: `uv add 'openai-agents[voice]'`.

For Redis session support, install with the optional `redis` group: `uv add 'openai-agents[redis]'`.

## Hello world example

```python
from agents import Agent, Runner

agent = Agent(name="Assistant", instructions="You are a helpful assistant")

result = Runner.run_sync(agent, "Write a haiku about recursion in programming.")
print(result.final_output)

# Code within the code,
# Functions calling themselves,
# Infinite loop's dance.
```

(_If running this, ensure you set the `OPENAI_API_KEY` environment variable_)

(_For Jupyter notebook users, see [hello_world_jupyter.ipynb](examples/basic/hello_world_jupyter.ipynb)_)

## Handoffs example

```python
from agents import Agent, Runner
import asyncio

spanish_agent = Agent(
    name="Spanish agent",
    instructions="You only speak Spanish.",
)

english_agent = Agent(
    name="English agent",
    instructions="You only speak English",
)

triage_agent = Agent(
    name="Triage agent",
    instructions="Handoff to the appropriate agent based on the language of the request.",
    handoffs=[spanish_agent, english_agent],
)


async def main():
    result = await Runner.run(triage_agent, input="Hola, ¿cómo estás?")
    print(result.final_output)
    # ¡Hola! Estoy bien, gracias por preguntar. ¿Y tú, cómo estás?


if __name__ == "__main__":
    asyncio.run(main())
```
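Because a handoff is a specialized tool call, each agent in `handoffs` shows up to the LLM as a tool, and calling that tool switches the active agent. The stdlib sketch below models only that lookup; `FakeAgent`, `handoff_tool_name`, and `resolve_handoff` are hypothetical, and the `transfer_to_<agent_name>` naming is an assumption loosely modeled on the SDK's default, not a guaranteed format.

```python
import re
from dataclasses import dataclass, field


@dataclass
class FakeAgent:
    """Hypothetical stand-in for an agent: only a name and its handoff targets."""
    name: str
    handoffs: list = field(default_factory=list)


def handoff_tool_name(agent: FakeAgent) -> str:
    # Assumed naming convention: "Spanish agent" -> "transfer_to_spanish_agent"
    slug = re.sub(r"[^a-z0-9_]", "", agent.name.lower().replace(" ", "_"))
    return f"transfer_to_{slug}"


def resolve_handoff(current: FakeAgent, tool_name: str) -> FakeAgent:
    """Return the agent that a handoff tool call transfers control to."""
    for target in current.handoffs:
        if handoff_tool_name(target) == tool_name:
            return target
    raise ValueError(f"{current.name} has no handoff named {tool_name!r}")


spanish = FakeAgent("Spanish agent")
english = FakeAgent("English agent")
triage = FakeAgent("Triage agent", handoffs=[spanish, english])

# The handoff tools the triage agent's LLM would be offered
print([handoff_tool_name(a) for a in triage.handoffs])
# If the LLM calls "transfer_to_spanish_agent", the Spanish agent takes over
print(resolve_handoff(triage, "transfer_to_spanish_agent").name)
```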

## Functions example

```python
import asyncio

from agents import Agent, Runner, function_tool


@function_tool
def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny."


agent = Agent(
    name="Hello world",
    instructions="You are a helpful agent.",
    tools=[get_weather],
)


async def main():
    result = await Runner.run(agent, input="What's the weather in Tokyo?")
    print(result.final_output)
    # The weather in Tokyo is sunny.


if __name__ == "__main__":
    asyncio.run(main())
```
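Roughly speaking, `@function_tool` lets the LLM call a plain Python function by describing the function's parameters as a JSON schema, then parsing the model's JSON arguments back into a call. The SDK's own implementation handles much more (docstring parsing, Pydantic types, strict schemas); this stdlib sketch only covers simple `str`/`int`/`float`/`bool` parameters, and `to_tool_schema` is a hypothetical helper applied to an undecorated copy of `get_weather`.

```python
import inspect
import json

# Simplified mapping from Python annotations to JSON schema types
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def to_tool_schema(func) -> dict:
    """Build a minimal tool description from a function's signature (hypothetical helper)."""
    params = inspect.signature(func).parameters
    properties = {name: {"type": _JSON_TYPES[p.annotation]} for name, p in params.items()}
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(city: str) -> str:
    """Get the current weather for a city."""
    return f"The weather in {city} is sunny."


schema = to_tool_schema(get_weather)
print(json.dumps(schema, indent=2))

# When the LLM replies with a tool call, its JSON arguments are decoded and passed in
arguments = json.loads('{"city": "Tokyo"}')
print(get_weather(**arguments))  # The weather in Tokyo is sunny.
```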

## The agent loop

When you call `Runner.run()`, we run a loop until we get a final output.

1. We call the LLM, using the model and settings on the agent, and the message history.

2. The LLM returns a response, which may include tool calls.

3. If the response has a final output (see below for more on this), we return it and end the loop.

4. If the response has a handoff, we set the agent to the new agent and go back to step 1.

5. We process the tool calls (if any) and append the tool response messages. Then we go to step 1.

There is a `max_turns` parameter that you can use to limit the number of times the loop executes.
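The steps above can be sketched as a small loop in plain Python. This is a simplified model, not the SDK's code: `Response`, `ToyAgent`, and the scripted `weather_llm` function are hypothetical stand-ins for a real model call, and the sketch raises `RuntimeError` when `max_turns` is exceeded (the SDK raises its own `MaxTurnsExceeded` exception in that case).

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Response:
    """Hypothetical LLM response: plain text, tool calls, or a handoff."""
    text: Optional[str] = None
    tool_calls: list = field(default_factory=list)  # [(tool_name, kwargs), ...]
    handoff: Optional[str] = None  # key of the agent to hand off to


@dataclass
class ToyAgent:
    """Hypothetical agent whose "LLM" is a plain Python function."""
    name: str
    call_llm: Callable[[list], Response]
    tools: dict = field(default_factory=dict)     # tool name -> Python function
    handoffs: dict = field(default_factory=dict)  # handoff key -> ToyAgent


def run(agent: ToyAgent, user_input: str, max_turns: int = 10) -> str:
    history = [{"role": "user", "content": user_input}]
    for _ in range(max_turns):
        response = agent.call_llm(history)  # 1-2. call the LLM with the history
        if response.handoff is None and not response.tool_calls:
            return response.text or ""  # 3. final output: end the loop
        if response.handoff is not None:
            agent = agent.handoffs[response.handoff]  # 4. switch agents, back to step 1
            continue
        for name, kwargs in response.tool_calls:  # 5. run tools, append their results
            history.append({"role": "tool", "content": agent.tools[name](**kwargs)})
    raise RuntimeError(f"Exceeded max_turns={max_turns}")


def weather_llm(history: list) -> Response:
    # Ask for the tool first; once a tool result is in the history, answer with it
    if history[-1]["role"] == "tool":
        return Response(text=history[-1]["content"])
    return Response(tool_calls=[("get_weather", {"city": "Tokyo"})])


weather_agent = ToyAgent(
    name="Hello world",
    call_llm=weather_llm,
    tools={"get_weather": lambda city: f"The weather in {city} is sunny."},
)
print(run(weather_agent, "What's the weather in Tokyo?"))  # The weather in Tokyo is sunny.
```

A handoff follows the same path: a triage `ToyAgent` whose `call_llm` returns `Response(handoff=...)` makes the loop continue with the target agent and the same history.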

### Final output

Final output is the last thing the agent produces in the loop.

1. If you set an `output_type` on the agent, the final output is the first LLM response of that type. We use [structured outputs](https://platform.openai.com/docs/guides/structured-outputs) for this.

2. If there's no `output_type` (i.e. plain text responses), then the first LLM response without any tool calls or handoffs is considered the final output.

As a result, the mental model for the agent loop is:

1. If the current agent has an `output_type`, the loop runs until the agent produces structured output matching that type.

2. If the current agent does not have an `output_type`, the loop runs until the current agent produces a message without any tool calls/handoffs.
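The two rules can be written as a small predicate. This sketch assumes a hypothetical `CalendarEvent` dataclass as the `output_type` and a hypothetical `final_output` helper; the SDK relies on structured outputs for the typed case, so the field check here is only a stand-in to show when the loop stops.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class CalendarEvent:
    """Hypothetical output_type for an agent that extracts calendar events."""
    name: str
    date: str


def final_output(reply: str, has_tool_calls: bool, output_type: Optional[type] = None):
    """Return the final output, or None if the loop should run another turn."""
    if has_tool_calls:
        return None  # tool calls (or handoffs) mean the loop keeps going
    if output_type is None:
        return reply  # plain text: the first reply without tool calls is final
    data = json.loads(reply)  # structured reply: must match the output_type's fields
    if set(data) != {f.name for f in fields(output_type)}:
        raise ValueError(f"Reply does not match {output_type.__name__}: {data}")
    return output_type(**data)


print(final_output("Hi there!", has_tool_calls=False))  # Hi there!
print(final_output('{"name": "Team sync", "date": "Friday"}', False, CalendarEvent))
# CalendarEvent(name='Team sync', date='Friday')
```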

## Common agent patterns

The Agents SDK is designed to be highly flexible, allowing you to model a wide range of LLM workflows including deterministic flows, iterative loops, and more. See examples in [`examples/agent_patterns`](examples/agent_patterns).

## Tracing

The Agents SDK automatically traces your agent runs, making it easy to track and debug the behavior of your agents. Tracing is extensible by design, supporting custom spans and a wide variety of external destinations, including [Logfire](https://logfire.pydantic.dev/docs/integrations/llms/openai/#openai-agents), [AgentOps](https://docs.agentops.ai/v1/integrations/agentssdk), [Braintrust](https://braintrust.dev/docs/guides/traces/integrations#openai-agents-sdk), [Scorecard](https://docs.scorecard.io/docs/documentation/features/tracing#openai-agents-sdk-integration), [Keywords AI](https://docs.keywordsai.co/integration/development-frameworks/openai-agent), and many more. For more details about how to customize or disable tracing, see [Tracing](http://openai.github.io/openai-agents-python/tracing), which also includes a larger list of [external tracing processors](http://openai.github.io/openai-agents-python/tracing/#external-tracing-processors-list).

## Long running agents & human-in-the-loop

There are several options for long-running agents. Refer to [the documentation](https://openai.github.io/openai-agents-python/running_agents/#long-running-agents-human-in-the-loop) for details.

## Sessions

The Agents SDK provides built-in session memory to automatically maintain conversation history across multiple agent runs, eliminating the need to manually handle `.to_input_list()` between turns.
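Conceptually, a session loads the stored history before each run and saves the new items afterwards, which is the bookkeeping you would otherwise do by hand with `result.to_input_list()`. The stdlib sketch below models that with a hypothetical `InMemoryHistory` class and a fake `run_turn` function standing in for `Runner.run`; it is not the SDK's implementation.

```python
class InMemoryHistory:
    """Hypothetical session: the conversation items for one session ID."""

    def __init__(self, session_id: str):
        self.session_id = session_id
        self.items: list = []


def run_turn(user_text: str, session: InMemoryHistory) -> str:
    # 1. Prepend the stored history to the new user message
    new_item = {"role": "user", "content": user_text}
    model_input = session.items + [new_item]
    # 2. Fake "model": reports how many messages of context it received
    reply = f"I can see {len(model_input)} message(s)."
    # 3. Save both the user message and the reply for the next turn
    session.items += [new_item, {"role": "assistant", "content": reply}]
    return reply


session = InMemoryHistory("conversation_123")
print(run_turn("What city is the Golden Gate Bridge in?", session))  # I can see 1 message(s).
print(run_turn("What state is it in?", session))  # I can see 3 message(s).
```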

### Quick start

```python
import asyncio

from agents import Agent, Runner, SQLiteSession

# Create agent
agent = Agent(
    name="Assistant",
    instructions="Reply very concisely.",
)

# Create a session instance
session = SQLiteSession("conversation_123")


async def main():
    # First turn
    result = await Runner.run(
        agent,
        "What city is the Golden Gate Bridge in?",
        session=session,
    )
    print(result.final_output)  # "San Francisco"

    # Second turn - agent automatically remembers previous context
    result = await Runner.run(
        agent,
        "What state is it in?",
        session=session,
    )
    print(result.final_output)  # "California"

    # Third turn. Sessions also work with the synchronous runner: from code that is
    # not already inside a running event loop, call
    # Runner.run_sync(agent, "What's the population?", session=session) instead.
    result = await Runner.run(
        agent,
        "What's the population?",
        session=session,
    )
    print(result.final_output)  # "Approximately 39 million"


if __name__ == "__main__":
    asyncio.run(main())
```

### Session options

- **No memory** (default): No session memory when the `session` parameter is omitted

- **`session: Session = DatabaseSession(...)`**: Use a Session instance to manage conversation history

```python
import asyncio

from agents import Agent, Runner, SQLiteSession

# SQLite - file-based or in-memory database
session = SQLiteSession("user_123", "conversations.db")

# Redis - for scalable, distributed deployments
# from agents.extensions.memory import RedisSession
# session = RedisSession.from_url("user_123", url="redis://localhost:6379/0")

agent = Agent(name="Assistant")


async def main():
    # Different session IDs maintain separate conversation histories
    result1 = await Runner.run(
        agent,
        "Hello",
        session=session,
    )
    result2 = await Runner.run(
        agent,
        "Hello",
        session=SQLiteSession("user_456", "conversations.db"),
    )
    print(result1.final_output)
    print(result2.final_output)


if __name__ == "__main__":
    asyncio.run(main())
```

### Custom session implementations

You can implement your own session memory by creating a class that follows the `Session` protocol:

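```python
import asyncio
from typing import List

from agents import Agent, Runner
from agents.memory import Session  # the protocol this class follows


class MyCustomSession:
    """Custom session implementation following the Session protocol.

    This version keeps items in a Python list; replace it with calls to your own storage.
    """

    def __init__(self, session_id: str):
        self.session_id = session_id
        # Your initialization here (e.g. open a database connection)
        self._items: List[dict] = []

    async def get_items(self, limit: int | None = None) -> List[dict]:
        # Retrieve conversation history for the session (only the latest `limit` items if given)
        if limit is None:
            return list(self._items)
        return self._items[-limit:] if limit > 0 else []

    async def add_items(self, items: List[dict]) -> None:
        # Store new items for the session
        self._items.extend(items)

    async def pop_item(self) -> dict | None:
        # Remove and return the most recent item from the session
        return self._items.pop() if self._items else None

    async def clear_session(self) -> None:
        # Clear all items for the session
        self._items.clear()


async def main():
    # Use your custom session
    agent = Agent(name="Assistant")
    result = await Runner.run(
        agent,
        "Hello",
        session=MyCustomSession("my_session"),
    )
    print(result.final_output)


if __name__ == "__main__":
    asyncio.run(main())
```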

## Development (only needed if you need to edit the SDK/examples)

0. Ensure you have [`uv`](https://docs.astral.sh/uv/) installed.

```bash
uv --version
```

1. Install dependencies

```bash
make sync
```

2. (After making changes) lint/test

```bash
make check  # run tests, linter and typechecker
```

Or to run them individually:

```bash
make tests  # run tests
make mypy  # run typechecker
make lint  # run linter
make format-check  # run style checker
```

If `make format-check` fails, format the code by running:

```bash
make format
```

## Acknowledgements

We'd like to acknowledge the excellent work of the open-source community, especially:

- [Pydantic](https://docs.pydantic.dev/latest/) (data validation) and [PydanticAI](https://ai.pydantic.dev/) (advanced agent framework)

- [LiteLLM](https://github.com/BerriAI/litellm) (unified interface for 100+ LLMs)

- [MkDocs](https://github.com/squidfunk/mkdocs-material)

- [Griffe](https://github.com/mkdocstrings/griffe)

- [uv](https://github.com/astral-sh/uv) and [ruff](https://github.com/astral-sh/ruff)

We're committed to continuing to build the Agents SDK as an open source framework so others in the community can expand on our approach.