https://github.com/ornl/tx2

Transformer eXplainability and eXploration

- Host: GitHub

- URL: https://github.com/ornl/tx2

- Owner: ORNL

- License: bsd-3-clause

- Created: 2020-12-02T20:36:44.000Z (over 4 years ago)

- Default Branch: main

- Last Pushed: 2024-10-24T13:53:16.000Z (8 months ago)

- Last Synced: 2025-04-04T05:40:33.880Z (2 months ago)

- Language: Python

- Size: 8.35 MB

- Stars: 19

- Watchers: 3

- Forks: 2

- Open Issues: 4

Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- Contributing: CONTRIBUTING.md

- License: LICENSE

# TX2

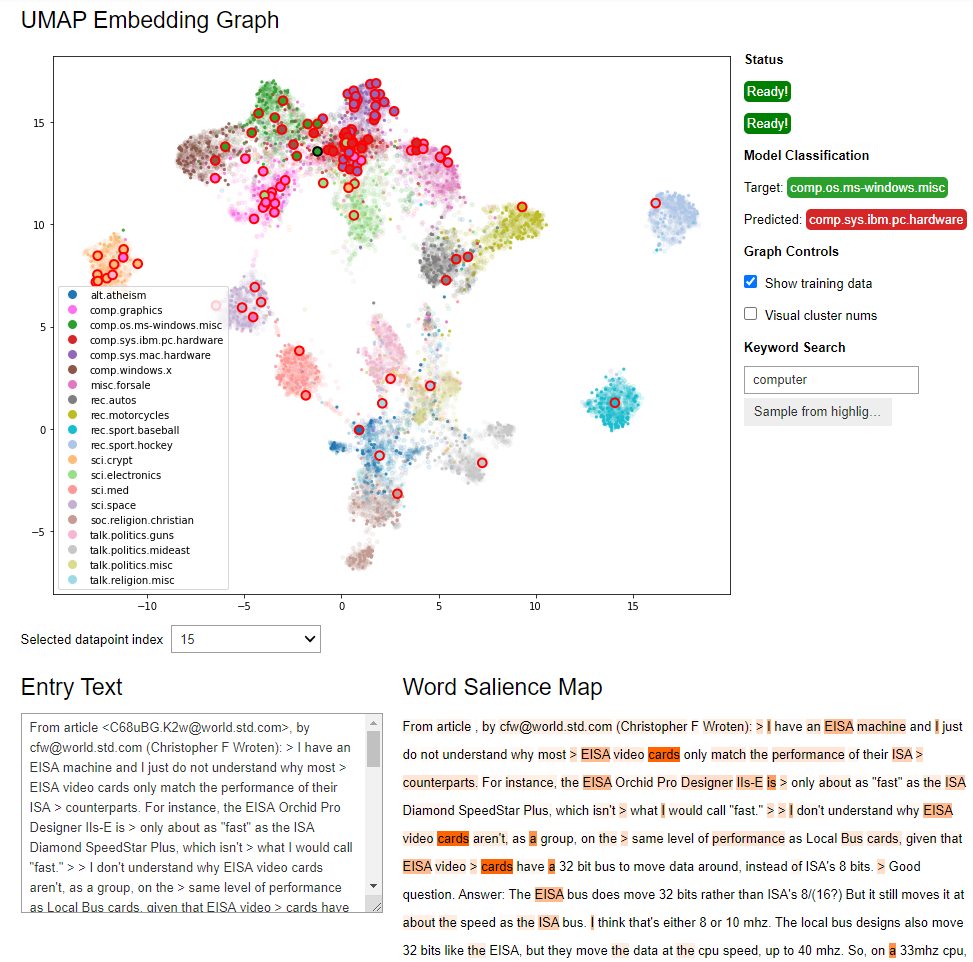

Welcome to TX2! This library is intended to aid in the explorability and explainability of
transformer classification networks, or transformer language models with sequence classification
heads. The basic function of this library is to take a trained transformer and a
test/train dataset and produce an ipywidget dashboard, which can be displayed in
Jupyter Notebook or JupyterLab.

NOTE: Currently this library's implementation is partially PyTorch-dependent, so it will
not work with TensorFlow/Keras models. We hope to address this limitation in the future!

## Installation

You can install this package from PyPI:

```bash
pip install tx2
```

NOTE: Depending on the environment, it may be better to install some of the dependencies separately
before installing tx2 with pip, e.g. in conda:

```bash
conda install pytorch pandas scikit-learn=1.1 numpy=1.22 -c conda-forge
```

## Examples

Example Jupyter notebooks demonstrating and testing the usage of this library can be
found in the [examples folder](https://github.com/ORNL/tx2/tree/master/examples).
Note that these notebooks can take a while to run the first time, especially
if a GPU is not in use.

Packages you'll need to install for the notebooks to work (in addition to the
conda installs above):

```bash
pip install tqdm transformers==4.21 datasets==2.4
```
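Before launching the notebooks, it can help to confirm that the environment actually matches the versions pinned in the conda and pip commands above. The following is a minimal sketch using only the standard library; `PINS`, `matches_pin`, and `check_pins` are illustrative names and not part of TX2. It treats each pin as a version prefix, which mirrors conda's `=` matching and is slightly looser than pip's `==`.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins copied from the install commands in this README.
PINS = {
    "scikit-learn": "1.1",
    "numpy": "1.22",
    "transformers": "4.21",
    "datasets": "2.4",
}


def matches_pin(installed: str, pin: str) -> bool:
    """Return True if `installed` equals `pin` or is a patch release of it."""
    return installed == pin or installed.startswith(pin + ".")


def check_pins(pins: dict) -> dict:
    """Map each package name to 'ok', 'mismatch (<version>)', or 'missing'."""
    report = {}
    for name, pin in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            report[name] = "missing"
            continue
        if matches_pin(installed, pin):
            report[name] = "ok"
        else:
            report[name] = f"mismatch ({installed})"
    return report


if __name__ == "__main__":
    for name, status in check_pins(PINS).items():
        print(f"{name}: {status}")
```

A `mismatch` or `missing` entry does not necessarily mean the notebooks will fail; it only flags a difference from the versions listed here.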

Running through each full notebook will produce the ipywidget dashboard near the
end.

The tests in this repository do not depend on transformers, so raw library
functionality can be tested by running `pytest` in the project root.

## Documentation

The documentation can be viewed at [https://ornl.github.io/tx2/](https://ornl.github.io/tx2/).

The documentation can also be built from scratch with Sphinx as needed.

Install all required dependencies:

```bash
pip install -r requirements.txt
```

Build documentation:

```bash
cd docs
make html
```

The `docs/build/html` folder will now contain an `index.html` file, which is the entry point of the built documentation.
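To view the freshly built pages without hunting for the file, a small helper can locate `index.html` and open it in the default browser. This is a sketch that assumes the build output lands in `docs/build/html` as described above; `docs_index` and `open_docs` are hypothetical helper names, not part of TX2 or Sphinx.

```python
import webbrowser
from pathlib import Path


def docs_index(project_root: str = ".") -> Path:
    """Return the absolute path where the built landing page is expected."""
    return (Path(project_root) / "docs" / "build" / "html" / "index.html").resolve()


def open_docs(project_root: str = ".") -> bool:
    """Open the built docs in the default browser; return False if not built."""
    index = docs_index(project_root)
    if not index.is_file():
        return False
    return webbrowser.open(index.as_uri())


if __name__ == "__main__":
    if not open_docs():
        print("docs/build/html/index.html not found; run `make html` in docs/ first")
```

Run it from the project root after `make html` has finished.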

Two notebooks demonstrating the dashboard and how to use TX2 are included
in the `examples` folder, highlighting the default and custom approaches
as discussed in the Basic Usage page of the documentation.

## Citation

To cite TX2 in a publication, use the DOI for this code: [https://doi.org/10.21105/joss.03652](https://doi.org/10.21105/joss.03652)

BibTeX:

```bibtex
@article{Martindale2021,
  doi = {10.21105/joss.03652},
  url = {https://doi.org/10.21105/joss.03652},
  year = {2021},
  publisher = {The Open Journal},
  volume = {6},
  number = {68},
  pages = {3652},
  author = {Nathan Martindale and Scott L. Stewart},
  title = {TX$^2$: Transformer eXplainability and eXploration},
  journal = {Journal of Open Source Software}
}
```