https://github.com/paulfitz/segmenty

training convnets to segment visual patterns without annotated data

https://github.com/paulfitz/segmenty

computer-vision convolutional-neural-networks keras segmentation

Last synced: 10 days ago

JSON representation

training convnets to segment visual patterns without annotated data

- Host: GitHub

- URL: https://github.com/paulfitz/segmenty

- Owner: paulfitz

- Created: 2017-06-29T06:37:53.000Z (about 8 years ago)

- Default Branch: master

- Last Pushed: 2017-08-11T02:12:09.000Z (almost 8 years ago)

- Last Synced: 2025-04-02T22:51:12.429Z (3 months ago)

- Topics: computer-vision, convolutional-neural-networks, keras, segmentation

- Language: Python

- Homepage: http://paulfitz.github.io/2017/03/26/convnets-without-tears.html

- Size: 17.6 KB

- Stars: 5

- Watchers: 3

- Forks: 1

- Open Issues: 0

-

Metadata Files:

- Readme: README.md

Awesome Lists containing this project

README

Computer vision is in some ways the inverse problem to computer graphics.

Neural nets are pretty good at solving inverse problems, given enough

examples. Computer graphics can generate a lot of examples. If the

examples are realistic enough (a big if), then to solve the computer vision

problem all you need to do is write computer graphics code and throw

a neural network at it.

This is an experiment in recognizing printed patterns in real images

based on training on generated images.

Synthetic training data

-----------------------

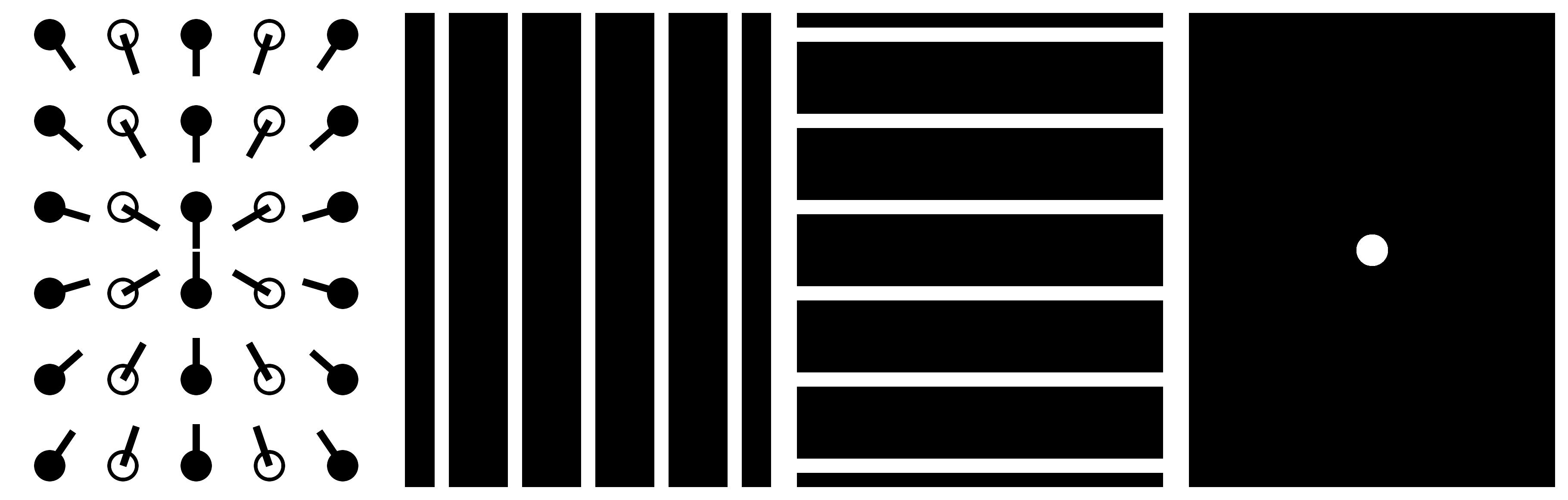

Generated images on left. Masks that we want the network to output

are shown on the right. There are masks for the full pattern, for vertical and

horizontal grid lines, and for the center of the pattern (if visible).

Occasional images without the target pattern are also included.

Here are how the images are generated:

* Grab a large number of random images from anywhere to use as backgrounds.

* Shade the pattern with random gradients.

This is a crude simulation of lighting effects.

* Overlay the pattern on a background, with a random affine transformation.

This is a crude simulation of perspective.

* Draw random blobs here and there across the image.

This is a crude simulation of occlusion.

* Overlay another random image on the result so far, with a random level

of transparency. This is a crude simulation of reflections, shadows,

more lighting effects.

* Distort the image randomly.

This is a crude simulation of camera projection effects.

* Apply a random directional blur to the image.

This is a crude simulation of motion blur.

* Sometimes, just leave the pattern out of all this, and leave the mask

empty, if you want to let the network know the pattern may not

always be present.

* Randomize the overall lighting.

The precise details are hopefully not too important,

the key is to leave nothing reliable except the pattern you want learned.

To classic computer vision eyes, this all looks crazy. There's occlusion!

Sometimes the pattern is only partially in view! Sometimes its edges are

all smeared out! Relax about that. It is not our problem anymore.

The mechanics of training doesn't actually depend much on the actual

pattern to detect and the masks to return (although the quality of the

result may). For this run the pattern and masks were:

Progress on real data

---------------------

Here are some real photos of the target pattern. The network never

gets to see photos like this during training. This animation shows

network output at every 10th epoch across a training run.

How to train

------------

* Install opencv, opencv python wrappers, and imagemagick

* `pip install pixplz[parallel] svgwrite tensorflow-gpu keras Pillow`

* `./fetch_backgrounds.sh`

* `./make_patterns.sh`

* `./make_samples.py validation 200`

* `./make_samples.py training 3000`

* `./train_segmenter.py`

* While that is running, also run `./freshen_samples.sh` in a separate console.

This will replace the training examples periodically to combat overfitting.

If you don't want to do this, use a much, much bigger number than 3000

for the number of training images.

* Run `./test_segmenter.py /tmp/model.thing validation scratch` from time to

time and look at visual quality of results in `scratch`.

* Kill training when results are acceptable.

Summary of model

----------------

* Take in the image, 256x256x3 in this case.

* Apply a series of 2D convolutions and downsampling via max-pooling. This is pretty much what a simple classification network would do. I don’t try anything clever here, and the filter counts are quite random.

* Once we’re down at low resolution, go fully connected for a layer or two, again just like a classification network. At this point we’ve distilled down our qualitative analysis of the image, at coarse spatial resolution. The dense layers can be trivially converted back to convolutional if you want to run all this convolutionally across larger images.

* Now we start upsampling, to get back towards a mask image of the same resolution as our input.

* Each time we upsample we apply some 2D convolutions and blend in data from filters at the same scale during downsampling. This is a neat trick used in some segmentation networks that lets you successively refine a segmentation using a blend of lower resolution context with higher resolution feature maps. (Random example: https://arxiv.org/abs/1703.00551).