https://github.com/raravindds/charllms

Implementing easy to use "Character Level Language Models" 🕺🏽

llms nlp pytorch research-paper

Last synced: 4 months ago

- Host: GitHub

- URL: https://github.com/raravindds/charllms

- Owner: RAravindDS

- License: apache-2.0

- Created: 2023-06-09T08:40:08.000Z (over 2 years ago)

- Default Branch: main

- Last Pushed: 2024-01-15T03:07:51.000Z (about 2 years ago)

- Last Synced: 2024-03-15T08:50:32.571Z (almost 2 years ago)

- Topics: llms, nlp, pytorch, research-paper

- Language: Python

- Homepage: https://pypi.org/project/charLLM/

- Size: 77.1 KB

- Stars: 6

- Watchers: 2

- Forks: 1

- Open Issues: 0

Metadata Files:

- Readme: README.md

README

# **Character-Level Language Models Repo 🕺🏽**

This repository contains multiple character-level language models (charLLM). Each language model is designed to generate text at the character level, providing a granular level of control and flexibility.
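As a rough illustration of what "character level" means here, the sketch below builds a vocabulary in which every distinct character is one token. The toy string and the helper names `encode` and `decode` are assumptions made for this example; they are not part of the charLLM API.

```python
# Illustrative only: a character-level vocabulary built from a toy string.
text = "hello world"

# Every distinct character becomes one token; sorting keeps the ids stable.
chars = sorted(set(text))
stoi = {ch: i for i, ch in enumerate(chars)}   # character -> integer id
itos = {i: ch for ch, i in stoi.items()}       # integer id -> character


def encode(s):
    """Map a string to a list of integer ids, one per character."""
    return [stoi[ch] for ch in s]


def decode(ids):
    """Map a list of integer ids back to a string."""
    return "".join(itos[i] for i in ids)


ids = encode("hello")
print(ids)
print(decode(ids))  # round-trips to "hello"
```

Because the vocabulary is only as large as the character set of the training text, the model's output layer stays small compared with word-level models.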

## 🌟 Available Language Models

- **Character-Level MLP LLM (First MLP LLM)**

- **GPT-2 (in progress)**

## Character-Level MLP

The Character-Level MLP language model is based on the approach described in the paper "[A Neural Probabilistic Language Model](https://www.jmlr.org/papers/volume3/bengio03a/bengio03a.pdf)" by Bengio et al. (2003).

It utilizes a multilayer perceptron architecture to generate text at the character level.
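The architecture can be sketched in PyTorch roughly as follows. This is a minimal illustration of the idea in the paper (embed each context character, concatenate the embeddings, apply a tanh hidden layer, and score the next character), not the code shipped in this repository. The class name `CharMLPSketch` and the vocabulary size of 27 are hypothetical, the default sizes are borrowed from the demo parameters in the Quick Tour, and the paper's optional direct connections from embeddings to the output are left out.

```python
import torch
import torch.nn as nn


class CharMLPSketch(nn.Module):
    """Hypothetical Bengio-style MLP; not the charLLM implementation."""

    def __init__(self, vocab_size, block_size=3, embedding_dimension=50, hidden_layer=100):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dimension)
        self.hidden = nn.Linear(block_size * embedding_dimension, hidden_layer)
        self.output = nn.Linear(hidden_layer, vocab_size)

    def forward(self, context):
        # context: (batch, block_size) tensor of integer character ids
        emb = self.embedding(context)        # (batch, block_size, embedding_dimension)
        flat = emb.flatten(start_dim=1)      # concatenate the context embeddings
        h = torch.tanh(self.hidden(flat))    # tanh hidden layer
        return self.output(h)                # unnormalized scores for the next character


model = CharMLPSketch(vocab_size=27)              # 27 is an assumed vocabulary size
context = torch.randint(0, 27, (4, 3))            # a batch of 4 contexts of 3 characters
logits = model(context)
probs = torch.softmax(logits, dim=-1)             # next-character distribution
next_ids = torch.multinomial(probs, num_samples=1)  # sample one character id per context
print(logits.shape, next_ids.shape)
```

Generating longer text would repeat the last three lines, appending each sampled id to the context and dropping the oldest one.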

## Installation

### With PIP

This repository is tested on Python 3.8+ and PyTorch 2.0.0+.

First, create a **virtual environment** with the version of Python you're going to use and activate it.

Then, you will need to install **PyTorch**.

Once PyTorch has been installed, CharLLMs can be installed using pip as follows:

```zsh
pip install charLLM
```

### With GIT

Alternatively, clone the repository with git to work from source:

```zsh

git clone https://github.com/RAravindDS/Neural-Probabilistic-Language-Model.git

```

### Quick Tour

To use the Character-Level MLP language model, follow these steps:

1. Install the package dependencies.

2. Import the `NPLM` class from the `charLLM` package.

3. Create an instance of the `NPLM` class, passing the path to a text file and a dictionary of model parameters.

4. Train the model on a suitable dataset.

5. Generate text using the trained model.

**Demo for NPLM** (A Neural Probabilistic Language Model)

```python

# Import the class

>>> from charLLM import NPLM # Neural Probabilistic Language Model

>>> text_path = "path-to-text-file.txt"

>>> model_parameters = {

"block_size" :3,

"train_size" :0.8,

'epochs' :10000,

'batch_size' :32,

'hidden_layer' :100,

'embedding_dimension' :50,

'learning_rate' :0.1

}

>>> obj = NPLM(text_path, model_parameters) # Initialize the class

>>> obj.train_model()

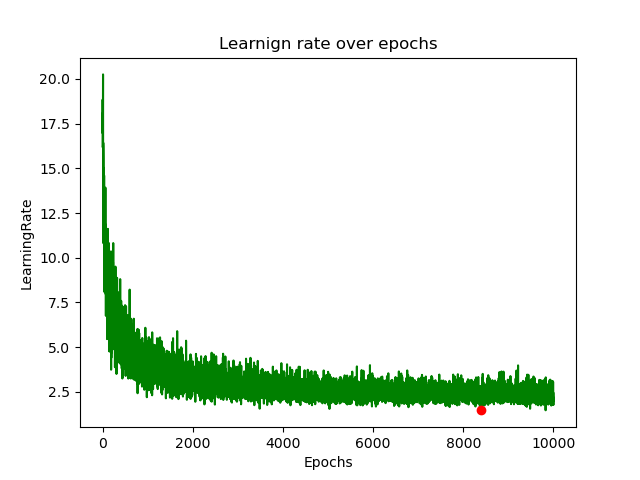

## It outputs the val_loss and image

>>> obj.sampling(words_needed=10) #It samples 10 tokens.

```

**Model Output Graph**

Feel free to explore the repository and experiment with the different language models provided.
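When experimenting with the demo parameters, it may help to see what `block_size` and `train_size` plausibly control. The sketch below assumes that `block_size` is the number of preceding characters used to predict the next one and that `train_size` is the fraction of examples kept for training. The helper `build_pairs` and the `"."` padding character are hypothetical and are not taken from the charLLM source.

```python
def build_pairs(text, block_size=3, pad="."):
    """Return (context, target) pairs; each context holds block_size characters."""
    pairs = []
    context = pad * block_size          # start with an all-padding context
    for ch in text:
        pairs.append((context, ch))     # predict ch from the preceding characters
        context = context[1:] + ch      # slide the window forward by one character
    return pairs


pairs = build_pairs("hello", block_size=3)
for context, target in pairs:
    print(context, "->", target)

# Assumed meaning of train_size=0.8: the first 80% of pairs are used for training.
split = int(0.8 * len(pairs))
train_pairs, val_pairs = pairs[:split], pairs[split:]
print(len(train_pairs), len(val_pairs))
```

A larger `block_size` gives the model more context per prediction, at the cost of a wider input layer.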

## Contributions

Contributions to this repository are welcome. If you have implemented a novel character-level language model or would like to enhance the existing models, please consider contributing to the project. Thank you!

## License

This repository is licensed under the [Apache License 2.0](https://raw.githubusercontent.com/RAravindDS/CharLLMs/main/LICENCE).