https://github.com/reasoning-machines/pal

PaL: Program-Aided Language Models (ICML 2023)


commonsense-reasoning few-shot-learning language-generation language-model large-language-models reasoning


- Host: GitHub

- URL: https://github.com/reasoning-machines/pal

- Owner: reasoning-machines

- License: apache-2.0

- Created: 2022-11-18T14:48:18.000Z (almost 3 years ago)

- Default Branch: main

- Last Pushed: 2023-06-30T12:57:39.000Z (over 2 years ago)

- Last Synced: 2025-04-12T19:17:59.147Z (6 months ago)


- Language: Python

- Homepage: https://reasonwithpal.com

- Size: 2.15 MB

- Stars: 488

- Watchers: 9

- Forks: 60

- Open Issues: 8


Metadata Files:

- Readme: README.md

- License: LICENSE

- Citation: CITATION.cff

Awesome Lists containing this project

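- StarryDivineSky - reasoning-machines/pal - PaL (Program-Aided Language Models) is a project that uses program-aided large language models to solve complex reasoning problems. It handles reasoning problems involving complex arithmetic and procedural tasks by generating reasoning chains that contain both text and code. PaL delegates execution of the code to a program runtime (e.g., a Python interpreter) and is implemented with a few-shot prompting approach. The project provides an interactive implementation, supports the ChatGPT API, includes the GSM-hard dataset, and provides scripts for inference. Users can use PaL through a simple interface class and set the code-execution expression according to the prompt. The project achieves notable results on several reasoning tasks, including mathematical reasoning, date understanding, and colored-object recognition. (A01_Text Generation_Text Dialogue / Large Language Dialogue Models and Data)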

README

# PaL: Program-Aided Language Model

Repo for the paper [PaL: Program-Aided Language Models](https://arxiv.org/pdf/2211.10435.pdf).

In PaL, a large language model (LLM) solves reasoning problems that involve complex arithmetic and procedural tasks by generating reasoning chains of **text and code**. The code is then executed by a program runtime, in our case a Python interpreter, rather than by the LLM itself. In our paper, we implement PaL using a few-shot prompting approach.

This repo provides an interactive implementation of PAL.
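To make the few-shot setup concrete, here is a minimal sketch of what a PaL-style prompt can look like: a worked exemplar whose reasoning is written as a Python function, followed by a slot for the new question. The exemplar text, the `FEW_SHOT_PROMPT` and `build_prompt` names, and the `# solution in Python:` marker are hypothetical illustrations, not the prompts shipped in `pal.prompt`.

```python
# Hypothetical PaL-style few-shot prompt (not the package's actual prompt).
# Each exemplar pairs a question with a program whose return value is the answer.
FEW_SHOT_PROMPT = '''Q: Olivia has $23. She bought five bagels for $3 each. How much money does she have left?

# solution in Python:
def solution():
    """Olivia has $23. She bought five bagels for $3 each. How much money does she have left?"""
    money_initial = 23
    bagels = 5
    bagel_cost = 3
    money_spent = bagels * bagel_cost
    money_left = money_initial - money_spent
    result = money_left
    return result



Q: {question}

# solution in Python:
'''


def build_prompt(question: str) -> str:
    """Append the new question after the exemplar; the LLM continues with code."""
    return FEW_SHOT_PROMPT.format(question=question)


print(build_prompt("How many legs do 3 spiders have?"))
```

In this sketch the exemplars are separated by blank lines, so a stop string such as `'\n\n\n'` would end generation after a single program; that layout is an assumption made for illustration.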

## News 📢

[Mar 2023] We have added support for ChatGPT APIs (e.g., gpt-3.5-turbo). We expect this to provide a smooth transition for PAL following the Codex API shutdown. Check out the beta script `scripts/gsm_chatgpt.py` for math reasoning.

[Jan 2023] We release [GSM-hard](https://github.com/reasoning-machines/pal/blob/main/datasets/gsmhardv2.jsonl), a harder version of GSM8K that we created. It is also available on [Hugging Face 🤗](https://huggingface.co/datasets/reasoning-machines/gsm-hard):

```python
import datasets

gsm_hard = datasets.load_dataset("reasoning-machines/gsm-hard")
```

## Installation

Clone this repo and install with `pip`.

```bash
git clone https://github.com/reasoning-machines/pal
pip install -e ./pal
```

Before running the scripts, set your OpenAI API key:

```bash
export OPENAI_API_KEY='sk-...'
```
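If you call `pal` from your own Python code instead of the provided scripts, a small guard like the following can fail early with a clear message when the key is missing. `require_openai_key` is a hypothetical helper for illustration, not part of the `pal` package.

```python
import os


def require_openai_key() -> str:
    """Return OPENAI_API_KEY from the environment (hypothetical helper).

    Raises RuntimeError if the variable is unset or empty, so a run fails
    before any API call is attempted.
    """
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set; export it before running.")
    return key
```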

## Interactive Usage

The core components of the `pal` package are the Interface classes. Specifically, `ProgramInterface` connects the LLM backend, a Python backend and user prompts.

```python
import pal
from pal.prompt import math_prompts

interface = pal.interface.ProgramInterface(
    model='code-davinci-002',  # Codex model; see News above on the Codex API shutdown
    stop='\n\n\n',  # stop generation str for Codex API
    get_answer_expr='solution()'  # python expression evaluated after generated code to obtain answer
)

question = 'xxxxx'  # placeholder: replace with your math word problem

prompt = math_prompts.MATH_PROMPT.format(question=question)
answer = interface.run(prompt)
```

Here, the `run` method of `interface` generates a program with the OpenAI API, executes the generated snippet, and then evaluates `get_answer_expr` (here `solution()`) to obtain the final answer.

Users should set `get_answer_expr` to match the prompt, e.g. `solution()` when the prompt's exemplars define a `solution` function.
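The execute-then-evaluate step can be sketched in plain Python. This is a minimal illustration under our own assumptions, not the package's implementation: `execute_program` is a hypothetical helper, the generated code is hard-coded in place of an API call, and `exec`/`eval` run without any sandboxing, so only use this pattern on code you trust.

```python
def execute_program(generated_code: str, get_answer_expr: str = "solution()"):
    """Execute generated code, then evaluate get_answer_expr to obtain the answer.

    Hypothetical helper for illustration only. The code runs in a fresh
    namespace and get_answer_expr is evaluated in that same namespace.
    WARNING: exec/eval are not sandboxed; only run code you trust.
    """
    namespace: dict = {}
    exec(generated_code, namespace)          # e.g. defines solution()
    return eval(get_answer_expr, namespace)  # e.g. calls solution()


# Stand-in for a completion an LLM might return; no API call is made here.
generated = '''
def solution():
    """A baker makes 4 trays of 12 cookies and sells 30. How many are left?"""
    cookies_baked = 4 * 12
    cookies_sold = 30
    return cookies_baked - cookies_sold
'''

print(execute_program(generated))  # 18

# If a prompt's exemplars store the answer in a variable instead,
# get_answer_expr is simply that variable's name.
print(execute_program("answer = 2 ** 10", get_answer_expr="answer"))  # 1024
```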

## Inference Loop

We provide simple inference loops in the `scripts/` folder.

```bash
mkdir eval_results
# one script per task: colored_objects_eval.py, gsm_eval.py,
# date_understanding_eval.py, penguin_eval.py
python scripts/gsm_eval.py
```
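Evaluation loops like these need to score each program's output against a gold answer. As a rough sketch of how such a scoring step could work (the `accuracy` function, the `abs_tol` tolerance, and counting failed executions as `None` are our assumptions, not the logic of the scripts above), accuracy with a small numeric tolerance can be computed as:

```python
import math
from typing import Optional, Sequence


def accuracy(predictions: Sequence[Optional[float]], targets: Sequence[float],
             abs_tol: float = 1e-3) -> float:
    """Fraction of predictions within abs_tol of their gold target.

    A prediction of None (e.g. the generated program raised an error)
    counts as wrong. Returns 0.0 for an empty evaluation set.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    correct = sum(
        1 for pred, gold in zip(predictions, targets)
        if pred is not None and math.isclose(pred, gold, abs_tol=abs_tol)
    )
    return correct / len(targets)


print(accuracy([18, 8.0004, None, 7], [18, 8, 3, 6]))  # 0.5
```

A tolerance is used because programs that divide can return floats such as `8.0004` or `7.999999` for integer gold answers.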

We also provide a beta **ChatGPT**-specific script for math reasoning:

```bash
python scripts/gsm_chatgpt.py
```

For bulk inference, we used the generic prompting library [prompt-lib](https://github.com/madaan/prompt-lib) and recommend it for running CoT inference on all tasks used in our work.

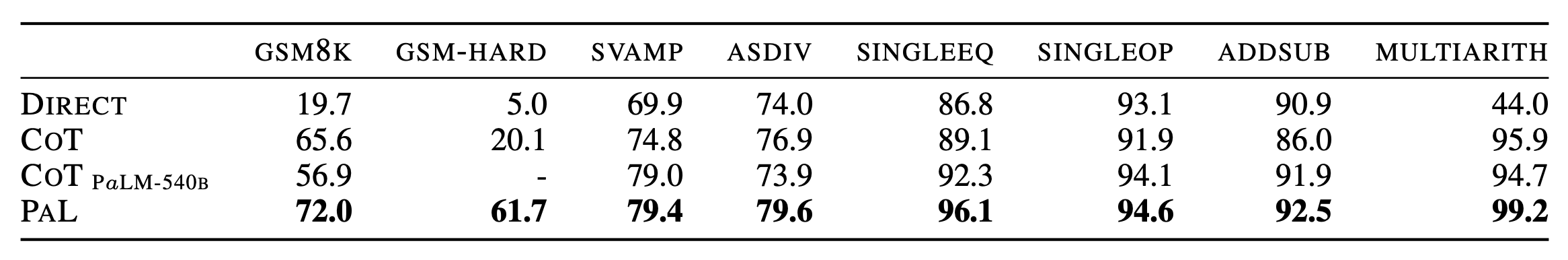

## Results

For complete details of the results, see the [paper](https://arxiv.org/pdf/2211.10435.pdf).

## Citation

```bibtex
@article{gao2022pal,
  title={PAL: Program-aided Language Models},
  author={Gao, Luyu and Madaan, Aman and Zhou, Shuyan and Alon, Uri and Liu, Pengfei and Yang, Yiming and Callan, Jamie and Neubig, Graham},
  journal={arXiv preprint arXiv:2211.10435},
  year={2022}
}
```