https://github.com/redhatinsights/ros-backend

Backend for Resource Optimization Service

hacktoberfest

Last synced: about 2 months ago

- Host: GitHub

- URL: https://github.com/redhatinsights/ros-backend

- Owner: RedHatInsights

- License: apache-2.0

- Created: 2020-10-21T11:01:37.000Z (over 4 years ago)

- Default Branch: main

- Last Pushed: 2025-04-12T06:23:15.000Z (about 2 months ago)

- Last Synced: 2025-04-12T07:26:41.844Z (about 2 months ago)

- Topics: hacktoberfest

- Language: Python

- Homepage:

- Size: 16.8 MB

- Stars: 12

- Watchers: 14

- Forks: 25

- Open Issues: 11

Metadata Files:

- Readme: README.md

- License: LICENSE

README

# ROS-backend

Backend for Resource Optimization Service

The Red Hat Insights resource optimization service enables RHEL customers to assess and monitor their public cloud usage and optimization. The service exposes workload metrics for CPU, memory, and disk usage and compares them to resource limits recommended by the public cloud provider.

Currently ROS only provides suggestions for AWS RHEL instances. To enable ROS, a customer needs to perform a few prerequisite steps on the targeted systems via an Ansible playbook.

Underneath, ROS uses Performance Co-Pilot (PCP) to monitor and report workload metrics.

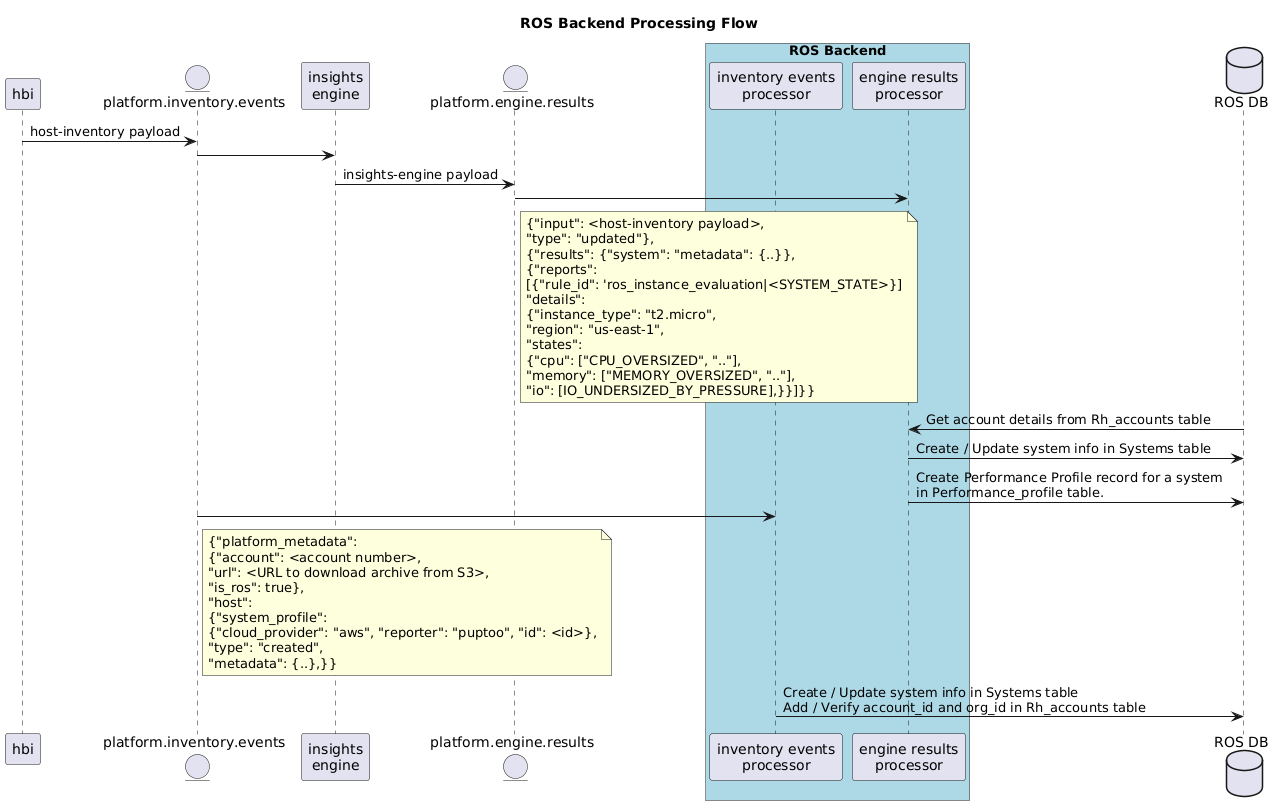

## How it works

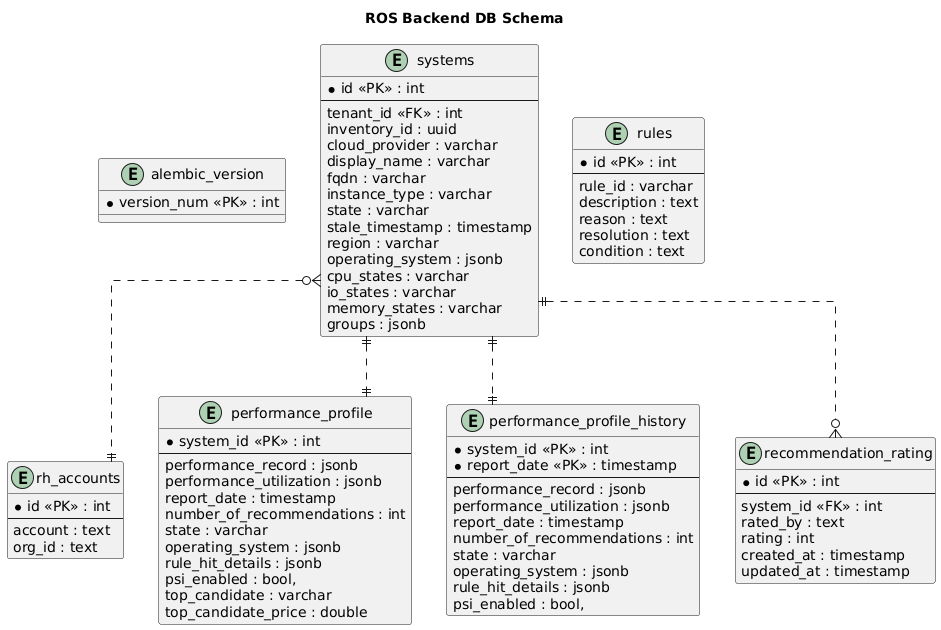

## DB Schema

## Getting Started

This project uses poetry to manage the development and production environments.

Once you have poetry installed, do the following:

The latest version is supported on Python 3.11. Install it, then switch the Poetry environment to 3.11:

```bash
poetry env use python3.11
```

Install the required system packages:

```bash
dnf install tar gzip gcc python3.11-devel libpq-devel
```

Install the project's Python dependencies:

```bash
poetry install
```

Afterwards you can activate the virtual environment by running:

```bash
poetry shell
```

A list of configurable environment variables is available in the `.env.example` file.
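As an illustration of how an env-style file can be consumed, here is a minimal sketch that parses `KEY=VALUE` lines into a dictionary. The variable names in the sample are hypothetical and are not taken from `.env.example`; the application itself may load its configuration differently.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


# Hypothetical sample; the real variable names live in .env.example.
sample = """
# database settings (made-up names)
EXAMPLE_DB_HOST=localhost
EXAMPLE_DB_PORT="5432"
"""
settings = parse_env(sample)
print(settings)
```

To inspect the real file, the same function could be called with the contents of `.env.example`, for example `parse_env(open(".env.example").read())`.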

### Dependencies

The application depends on several parts of the Insights platform. These dependencies are provided by the `docker-compose.yml` file in the *scripts* directory.

To start the dependencies, run the following command:

```bash
cd scripts && docker-compose up insights-inventory-mq db-ros insights-engine
```

## Running the ROS application

### Within docker

To run the full application (with the ROS components inside Docker):

```bash
docker-compose up ros-processor ros-api
```

### On host machine

To run the application properly from the host machine, you first need to modify your `/etc/hosts` file. See the README.md file in the scripts directory for details.

#### Initialize the database

Run the following commands to apply the database migration scripts and seed the database.

```bash
export FLASK_APP=manage.py
flask db upgrade
flask seed
```

#### Running the processor locally

The processor component connects to Kafka and listens on topics for system archive upload and system deletion messages.

```bash
python -m ros.processor.main
```
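The idea of listening for two kinds of messages can be sketched as a small dispatcher that routes each decoded message to a handler. This is only an illustration and not the processor's actual code: the `type` and `host_id` fields, the handler names, and the return values are all hypothetical, and the Kafka consumer loop itself is left out.

```python
import json
from typing import Callable, Optional

Handler = Callable[[dict], str]


def handle_upload(message: dict) -> str:
    # Placeholder: a real handler would process the uploaded archive.
    return f"processed upload for {message['host_id']}"


def handle_delete(message: dict) -> str:
    # Placeholder: a real handler would remove the system's records.
    return f"deleted system {message['host_id']}"


# Hypothetical mapping from message type to handler.
HANDLERS: dict[str, Handler] = {
    "upload": handle_upload,
    "delete": handle_delete,
}


def dispatch(raw: bytes) -> Optional[str]:
    """Decode one JSON message and route it by its (assumed) type field."""
    message = json.loads(raw)
    handler = HANDLERS.get(message.get("type"))
    # Unknown message types are ignored rather than raising.
    return handler(message) if handler else None


print(dispatch(b'{"type": "delete", "host_id": "abc"}'))
```

Keeping the routing in a plain dictionary makes it easy to unit test the handlers without a running Kafka broker.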

#### Running the web api locally

The web API component provides a REST API view of the application database.

```bash
python -m ros.api.main
```

#### Running the Tests

It is possible to run the tests using pytest:

```bash
poetry install
poetry run pytest --cov=ros tests
```

## Running Inventory API with xjoin pipeline

To run the full inventory API with xjoin, run the following commands:

```bash
docker-compose up insights-inventory-web xjoin
make configure-xjoin
```

Note: before running the above commands, make sure the kafka and db-host-inventory containers are up and running.
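One way to check this precondition is to compare the names of running containers against the ones required. The sketch below assumes the names come from `docker ps --format '{{.Names}}'`; the sample output and the `scripts_` prefix are made up for illustration, and substring matching is used because Compose typically decorates service names with a project prefix and an index.

```python
def missing_containers(running_names: list[str], required: list[str]) -> list[str]:
    """Return the required names that match no running container name."""
    # Substring match, because Compose usually adds a project prefix/suffix.
    return [
        name for name in required
        if not any(name in running for running in running_names)
    ]


# Made-up `docker ps --format '{{.Names}}'` output for illustration.
sample_output = "scripts_kafka_1\nscripts_db-host-inventory_1\n"
running = sample_output.split()
required = ["kafka", "db-host-inventory"]

print(missing_containers(running, required))      # nothing missing
print(missing_containers(running[:1], required))  # db-host-inventory missing
```

In practice the `running` list could be filled from `subprocess.run(["docker", "ps", "--format", "{{.Names}}"], capture_output=True, text=True).stdout.split()`.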

## Available API endpoints

The Resource Optimization REST API documentation can be found at `/api/ros`. The raw OpenAPI definition is available [here](https://raw.githubusercontent.com/RedHatInsights/ros-backend/refs/heads/main/ros/openapi/openapi.json).

On a local instance it can be accessed at http://localhost:8000/api/ros/v1/openapi.json.

For a local development setup, remember to send the `x-rh-identity` header, encoded from the same account number and org_id that were used when running the `make insights-upload-data` command.
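A minimal sketch of building such a header value follows. It assumes the header carries a base64-encoded JSON document with an `identity` object; the exact set of fields shown here, and the placeholder account number and org_id, are assumptions for illustration and may need adjusting to match your local setup.

```python
import base64
import json


def build_identity_header(account_number: str, org_id: str) -> str:
    """Return a base64-encoded identity payload for x-rh-identity."""
    # Assumed payload layout; adjust to whatever your local setup expects.
    identity = {
        "identity": {
            "account_number": account_number,
            "org_id": org_id,
            "type": "User",
        }
    }
    raw = json.dumps(identity).encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


# Placeholder values; use the ones passed to `make insights-upload-data`.
header_value = build_identity_header("0000001", "000001")
headers = {"x-rh-identity": header_value}
print(headers)
```

The resulting `headers` dictionary can then be passed to whichever HTTP client you use when calling the local API.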