https://github.com/roshan-r/cherava

Cherava, a web based scraper environment that focuses on simplicity.

- Host: GitHub

- URL: https://github.com/roshan-r/cherava

- Owner: Roshan-R

- Created: 2023-03-03T18:28:41.000Z

- Default Branch: main

- Last Pushed: 2025-03-14T06:45:20.000Z

- Last Synced: 2025-04-12T05:29:00.458Z

- Language: JavaScript

- Homepage: https://cherava.vercel.app

- Size: 210 KB

- Stars: 13

- Watchers: 1

- Forks: 1

- Open Issues: 0

Metadata Files:

- Readme: README.md

README

# Cherava

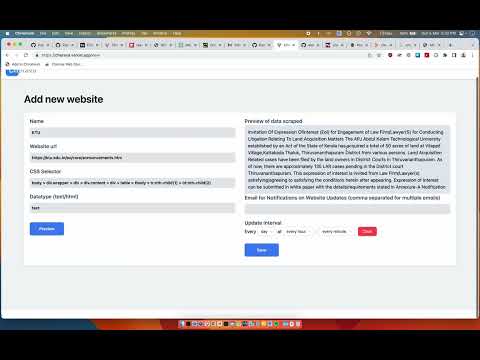

An open source, zero-code web scraping automation tool.

It is meant for building alerts for tasks such as monitoring university notice boards, tracking prices on e-commerce sites, watching for product updates, and more.

## Features that work

- GUI-based scraper task creation with zero code required for any website.

- Users can add workflows, which are saved to a database and tied to the user's browser session.

- Automation of scraping tasks with cron job-like scheduling.

- Email notifications that alert the user when the contents matched by a specific CSS selector change over time.
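
The cron job-like scheduling listed above would need each workflow's check interval written as a cron expression, since the backend schedules tasks with the Node-cron package, which takes cron expressions. The README does not say how intervals are stored, so this is a minimal sketch assuming a whole number of minutes; `intervalToCron` is a hypothetical helper, not part of the project.

```javascript
// Hypothetical helper: turn a check interval (in minutes) into a five-field
// cron expression: minute hour day-of-month month day-of-week.
function intervalToCron(minutes) {
  if (!Number.isInteger(minutes) || minutes < 1) {
    throw new RangeError("interval must be a positive whole number of minutes");
  }
  if (minutes < 60) {
    // "*/N" in the minute field fires at minutes 0, N, 2N, ... of every hour.
    // If N does not divide 60, the last gap before the next hour is shorter.
    return `*/${minutes} * * * *`;
  }
  if (minutes % 60 === 0 && minutes < 1440) {
    // Whole hours below a day: fire at minute 0 of every Nth hour.
    return `0 */${minutes / 60} * * *`;
  }
  if (minutes === 1440) {
    // Once a day at midnight (server time).
    return "0 0 * * *";
  }
  throw new RangeError("intervals of an hour or more must be whole hours, up to 24");
}
```

For example, `intervalToCron(15)` gives `"*/15 * * * *"` and `intervalToCron(120)` gives `"0 */2 * * *"`. With node-cron installed, the result could be passed to `cron.schedule(expression, task)`; which workflow field holds the interval is an assumption left to the reader.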

## Future proposed features

- Use a preview of the website within the UI itself to pick the CSS selector.

- More notification provider options.

- UI-based or scripting features for further processing of the scraped data.

## How it works

- Scraping workflows are identified by the user's browser session. Proper authentication is planned for the future.

- The Cheerio Node.js package runs the scraping tasks and generates a preview for the URL and CSS selector entered in the add-workflow UI. The CSS selector currently has to be copied from the website using the browser's Inspect Element tool.

- When a workflow is created, it is added to the PostgreSQL database hosted on Railway.app, along with the notification recipients' email addresses and the interval for checking the website for updates.

- The node-cron package schedules each scraping workflow.

- On each scheduled run, the workflow compares the current contents of the CSS selector on the website with the contents stored in the database on the first run, when the workflow was added. If they differ, the Nodemailer package sends an email to the recipients.
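
The check-and-notify cycle described in the list above can be sketched in plain JavaScript. This is an illustration under stated assumptions, not the project's code: `checkWorkflow` and the workflow fields `url`, `selector`, `recipients`, and `baseline` are hypothetical, and the scraping and mailing steps are passed in as functions so the logic runs without Cheerio or Nodemailer installed. In a real backend, `fetchSelectorText` would fetch the page and read the selector with Cheerio (for example `cheerio.load(html)` followed by `$(selector).text()`), and `notify` would call Nodemailer's `transporter.sendMail`.

```javascript
// Hypothetical sketch of one scheduled run of a workflow. Dependencies are
// injected so the logic can run without Cheerio or Nodemailer installed:
//   fetchSelectorText(url, selector) -> Promise<string>
//   notify(recipients, message)      -> Promise<void>
async function checkWorkflow(workflow, fetchSelectorText, notify) {
  // Trim surrounding whitespace so edge-only layout differences are ignored.
  const current = (await fetchSelectorText(workflow.url, workflow.selector)).trim();

  // First run: store the baseline contents; there is nothing to compare yet.
  if (workflow.baseline == null) {
    return { workflow: { ...workflow, baseline: current }, changed: false };
  }

  // Later runs: compare against the baseline stored on the first run.
  if (current === workflow.baseline) {
    return { workflow, changed: false };
  }

  await notify(
    workflow.recipients,
    `The contents of "${workflow.selector}" on ${workflow.url} changed:\n${current}`
  );
  // The baseline is left untouched, matching the description above, so every
  // later run alerts again until the page reverts.
  return { workflow, changed: true };
}
```

Because the baseline is only written on the first run, a changed page triggers an email on every later run until it reverts. Saving `current` as the new baseline after notifying would make each change alert only once; which behaviour is preferable is a design choice.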

## Timeline

- Initially, the web scraper and the basic UI for adding a workflow were implemented.

- Next, the workflow UI was connected to the PostgreSQL database.

- Then the Nodemailer notifier module was added.

- After that, the cron job module was added.

- Finally, all of these components were integrated.

## Demo Video

[Watch the demo video on YouTube](https://youtu.be/Eqarz4dFGnU)

## Built by

## To run locally

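Clone the repository and change into it (`git clone https://github.com/roshan-r/cherava && cd cherava`), then install dependencies and start the development server for each part.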

#### Frontend

```
# from the repository root
cd frontend
npm i
npm run dev
```

#### Backend

```
# from the repository root, in a separate terminal
cd backend
npm i
npm run dev
```

#### Env file format for frontend

#### Env file format for backend