https://github.com/s1998/all-but-the-top

- Host: GitHub

- URL: https://github.com/s1998/all-but-the-top

- Owner: s1998

- Created: 2019-09-29T23:44:14.000Z (about 6 years ago)

- Default Branch: master

- Last Pushed: 2019-11-26T15:14:38.000Z (almost 6 years ago)

- Last Synced: 2025-02-01T18:27:53.349Z (8 months ago)

- Topics: abusive-language, davidson, deep-learning, embeddings, f1-acc, hate-speech, iclr, iclr2018, natural-language-processing, word-embeddings

- Language: Python

- Size: 80.1 KB

- Stars: 2

- Watchers: 3

- Forks: 1

- Open Issues: 0

Metadata Files:

- Readme: README.md

# All-but-the-top

Implementation of the paper [All-but-the-top](https://openreview.net/forum?id=HkuGJ3kCb) from ICLR 2018.
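For orientation, the post-processing step described in the paper can be sketched in a few lines of NumPy: subtract the mean vector, then remove each vector's projection onto the top few principal directions. This is a minimal sketch and not the code in this repository; the function name `all_but_the_top` and the parameter `n_components` are chosen here for illustration. The paper suggests a value of roughly `d / 100` for embedding dimension `d`, but treat that as a starting point to tune.

```python
import numpy as np


def all_but_the_top(embeddings, n_components):
    """Illustrative sketch: remove the mean and the top principal directions.

    embeddings: array of shape (vocab_size, dim), one word vector per row.
    n_components: number of dominant directions to remove (hypothetical name).
    """
    # Step 1: subtract the mean vector so the embeddings are centered.
    centered = embeddings - embeddings.mean(axis=0)

    # Step 2: find the principal directions of the centered embeddings.
    # The rows of vt are unit-norm directions sorted by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    top = vt[:n_components]

    # Step 3: subtract each vector's projection onto the top directions.
    return centered - centered @ top.T @ top
```

The returned matrix has the same shape as the input, so it can be used to initialise an embedding layer in place of the original vectors.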

## Instructions to use

To run the experiments, execute `runner.py`.

Libraries used: Keras with the TensorFlow backend.

### Sample plot

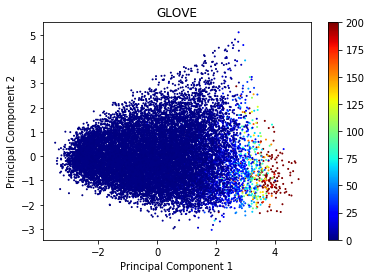

The embeddings projected onto two principal components, colored by word frequency.
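A plot of this kind can be produced roughly as follows. This is a sketch under assumptions, not the plotting code used for the image above: it takes the two highest-variance principal components, and the names `project_to_2d`, `plot_embeddings`, and `frequencies` (one positive count per word) are hypothetical.

```python
import numpy as np


def project_to_2d(embeddings):
    """Project word vectors onto their two highest-variance principal components."""
    centered = embeddings - embeddings.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


def plot_embeddings(embeddings, frequencies):
    """Scatter the 2-D projection, coloring each word by log frequency.

    frequencies is assumed to hold one positive count per row of embeddings.
    """
    import matplotlib.pyplot as plt  # imported here so the projection works without it

    coords = project_to_2d(embeddings)
    fig, ax = plt.subplots()
    points = ax.scatter(coords[:, 0], coords[:, 1],
                        c=np.log10(frequencies), cmap="viridis", s=4)
    fig.colorbar(points, ax=ax, label="log10(word frequency)")
    ax.set_xlabel("PC 1")
    ax.set_ylabel("PC 2")
    return fig
```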

## Results

Results on the Davidson et al. (2017) dataset using two different embeddings, GloVe and Word2Vec. P, R, F1, and Acc denote precision, recall, F1 score, and accuracy.

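| Model | Preprocessed P | Preprocessed R | Preprocessed F1 | Preprocessed Acc | Postprocessed P | Postprocessed R | Postprocessed F1 | Postprocessed Acc |
|---|---|---|---|---|---|---|---|---|
| AvgPool | 0.811 | 0.721 | 0.756 | 0.762 | 0.855 | 0.887 | 0.862 | 0.887 |
| MaxPool | 0.779 | 0.834 | 0.792 | 0.787 | 0.888 | 0.903 | 0.884 | 0.903 |
| CNN | 0.885 | 0.903 | 0.880 | 0.903 | 0.890 | 0.905 | 0.892 | 0.905 |
| GRU | 0.894 | 0.907 | 0.898 | 0.907 | 0.899 | 0.914 | 0.902 | 0.914 |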

Effect of post-processing on GloVe embeddings, evaluated on Davidson et al. (2017).

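| Model | Preprocessed P | Preprocessed R | Preprocessed F1 | Preprocessed Acc | Postprocessed P | Postprocessed R | Postprocessed F1 | Postprocessed Acc |
|---|---|---|---|---|---|---|---|---|
| AvgPool | 0.787 | 0.732 | 0.754 | 0.782 | 0.898 | 0.893 | 0.868 | 0.883 |
| MaxPool | 0.703 | 0.756 | 0.724 | 0.751 | 0.891 | 0.887 | 0.872 | 0.887 |
| CNN | 0.838 | 0.887 | 0.861 | 0.887 | 0.875 | 0.893 | 0.875 | 0.891 |
| GRU | 0.854 | 0.904 | 0.878 | 0.904 | 0.910 | 0.903 | 0.881 | 0.903 |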

Effect of post-processing on Word2Vec embeddings, evaluated on Davidson et al. (2017).