https://github.com/salesforce/multihopkg

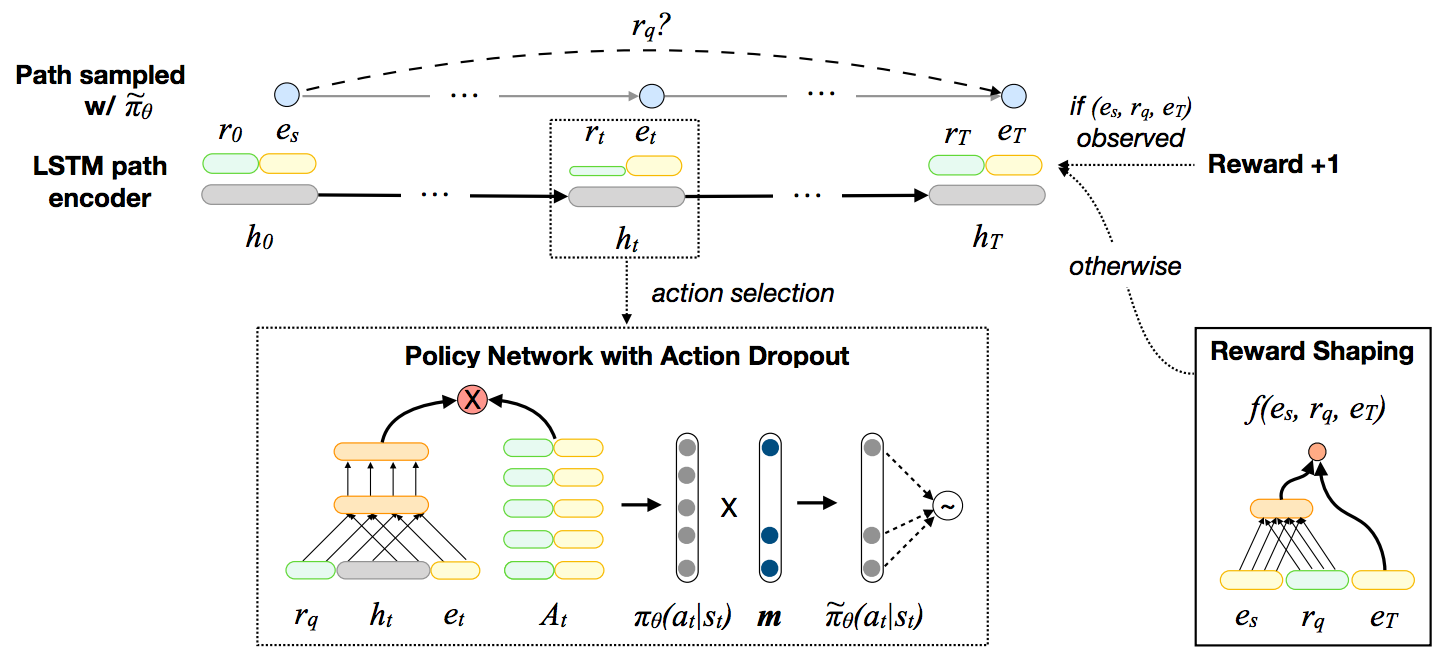

Multi-hop knowledge graph reasoning learned via policy gradient with reward shaping and action dropout

- Host: GitHub

- URL: https://github.com/salesforce/multihopkg

- Owner: salesforce

- License: bsd-3-clause

- Created: 2018-09-26T20:53:30.000Z (over 6 years ago)

- Default Branch: master

- Last Pushed: 2025-03-04T08:16:22.000Z (3 months ago)

- Last Synced: 2025-04-01T15:11:44.979Z (2 months ago)

- Topics: action-dropout, knowledge-graph, multi-hop-reasoning, policy-gradient, pytorch, reinforcement-learning, reward-shaping

- Language: Jupyter Notebook

- Homepage: https://arxiv.org/abs/1808.10568

- Size: 24.2 MB

- Stars: 308

- Watchers: 14

- Forks: 80

- Open Issues: 8

Metadata Files:

- Readme: README.md

- License: LICENSE

- Codeowners: CODEOWNERS

# Multi-Hop Knowledge Graph Reasoning with Reward Shaping

⚠️ REPO NO LONGER MAINTAINED; RESEARCH CODE PROVIDED AS IS

This is the official code release of the following paper:

Xi Victoria Lin, Richard Socher and Caiming Xiong. [Multi-Hop Knowledge Graph Reasoning with Reward Shaping](https://arxiv.org/abs/1808.10568). EMNLP 2018.

## Quick Start

### Environment variables & dependencies

#### Use Docker

Build the Docker image:

```
docker build -< Dockerfile -t multi_hop_kg:v1.0
```

Spin up a Docker container and run experiments inside it:

```
nvidia-docker run -v `pwd`:/workspace/MultiHopKG -it multi_hop_kg:v1.0
```

*The rest of this README assumes that you are working interactively inside a container. If you prefer to run experiments outside a container, please adjust the commands accordingly.*

#### Manually set up

Alternatively, you can install PyTorch (>=0.4.1) manually and use the Makefile to set up the rest of the dependencies:

```
make setup
```

### Process data

First, unpack the data files

```
tar xvzf data-release.tgz
```

and run the following command to preprocess the datasets.

```
./experiment.sh configs/.sh --process_data
```

`` is the name of any dataset folder in the `./data` directory. In our experiments, the five datasets used are: `umls`, `kinship`, `fb15k-237`, `wn18rr` and `nell-995`.

`` is a non-negative integer representing the GPU index.

### Train models

Then the following commands can be used to train the proposed models and baselines in the paper. By default, dev set evaluation results will be printed when training terminates.

1. Train embedding-based models

```
./experiment-emb.sh configs/-.sh --train
```

The following embedding-based models are implemented: `distmult`, `complex` and `conve`.

2. Train RL models (policy gradient)

```
./experiment.sh configs/.sh --train
```

3. Train RL models (policy gradient + reward shaping)

```
./experiment-rs.sh configs/-rs.sh --train
```

* Note: To train the RL models using reward shaping, make sure that 1) you have pre-trained the embedding-based models and 2) the file path pointers to the pre-trained embedding-based models are set correctly ([example configuration file](configs/umls-rs.sh)).
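Reward shaping is why the embedding-based models must be trained first. As we read the paper, the shaped terminal reward is R(s_T) = R_b(s_T) + (1 - R_b(s_T)) f(e_s, r_q, e_T), where R_b is 1 when the agent stops at a known correct answer and 0 otherwise, and f is the pre-trained embedding model's plausibility score for the triple (source entity, query relation, reached entity). The pure-Python sketch below illustrates that formula under stated assumptions: the DistMult-style scorer, the 2-d embeddings, the entity names and the helper functions are all hypothetical and are not taken from this repository.

```python
import math
from typing import Dict, List, Set, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def distmult_score(e_s: Vector, r_q: Vector, e_t: Vector) -> float:
    """Toy DistMult plausibility f in (0, 1): sigmoid of the trilinear product."""
    return sigmoid(sum(a * b * c for a, b, c in zip(e_s, r_q, e_t)))


def shaped_reward(hit: bool, soft_score: float) -> float:
    """R = R_b + (1 - R_b) * f: full reward on a known answer, else the soft score f."""
    r_b = 1.0 if hit else 0.0
    return r_b + (1.0 - r_b) * soft_score


# Hypothetical 2-d embeddings standing in for a pre-trained embedding model.
ENTITY_EMB: Dict[str, Vector] = {"alice": [1.0, 0.5], "bob": [0.8, 1.0], "carol": [-1.0, 0.2]}
RELATION_EMB: Dict[str, Vector] = {"friend_of": [1.0, 1.0]}
# Hypothetical known answers for each (source entity, query relation) pair.
KNOWN_ANSWERS: Dict[Tuple[str, str], Set[str]] = {("alice", "friend_of"): {"bob"}}


def reward_for(source: str, relation: str, reached: str) -> float:
    """Shaped reward for an episode that starts at `source` and stops at `reached`."""
    hit = reached in KNOWN_ANSWERS.get((source, relation), set())
    f = distmult_score(ENTITY_EMB[source], RELATION_EMB[relation], ENTITY_EMB[reached])
    return shaped_reward(hit, f)


print(reward_for("alice", "friend_of", "bob"))    # known answer: full reward 1.0
print(reward_for("alice", "friend_of", "carol"))  # unobserved target: partial credit from f
```

Because the soft term only applies when R_b = 0, a path that ends at a plausible but unobserved answer still receives a non-zero learning signal instead of a flat zero.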

### Evaluate pretrained models

To generate the evaluation results of a pre-trained model, simply change the `--train` flag in the commands above to `--inference`.

For example, the following command performs inference with the RL models (policy gradient + reward shaping) and prints the evaluation results (on both dev and test sets).

```
./experiment-rs.sh configs/-rs.sh --inference
```
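Link-prediction results in this line of work are usually summarized with ranking metrics such as Hits@k and mean reciprocal rank (MRR), computed in the "filtered" setting where other known correct answers are removed before the target is ranked. The sketch below shows how such metrics can be computed from per-candidate scores; the function names, the score dictionary and the optimistic tie handling are assumptions for illustration, not this repository's evaluation code.

```python
from typing import Dict, Sequence, Set


def filtered_rank(scores: Dict[str, float], target: str, other_answers: Set[str]) -> int:
    """1-based rank of `target`, ignoring other known correct answers (filtered setting).

    Only candidates scoring strictly higher count against the target, so ties are
    resolved optimistically here; stricter evaluations break ties differently.
    """
    target_score = scores[target]
    better = sum(
        1
        for entity, score in scores.items()
        if entity != target and entity not in other_answers and score > target_score
    )
    return better + 1


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Fraction of queries whose correct answer is ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1 / rank over all queries."""
    return sum(1.0 / r for r in ranks) / len(ranks)


# Hypothetical scores for one query; "a" is another correct answer and is filtered out.
scores = {"a": 0.9, "b": 0.7, "c": 0.8, "d": 0.1}
rank_b = filtered_rank(scores, target="b", other_answers={"a"})  # only "c" outranks "b"
ranks = [1, rank_b, 4]
print(hits_at_k(ranks, 1), hits_at_k(ranks, 3), mean_reciprocal_rank(ranks))
```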

To print the inference paths generated by beam search during inference, use the `--save_beam_search_paths` flag:

```
./experiment-rs.sh configs/-rs.sh --inference --save_beam_search_paths
```
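The paths saved with `--save_beam_search_paths` come from beam search over the trained policy. As a generic illustration rather than the repository's implementation, the sketch below keeps the `beam_size` most probable partial paths at each hop. It assumes a hypothetical toy policy in which every action has a fixed probability; in the actual model the action distribution is produced by a neural network conditioned on the query and the path history.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy policy: entity -> list of (relation, next_entity, action probability).
Policy = Dict[str, List[Tuple[str, str, float]]]
Path = Tuple[Tuple[str, str], ...]  # sequence of (relation, entity) hops
Beam = Tuple[float, str, Path]      # (cumulative log-probability, current entity, path)


def beam_search(policy: Policy, start: str, num_hops: int, beam_size: int) -> List[Beam]:
    beams: List[Beam] = [(0.0, start, ())]
    for _ in range(num_hops):
        candidates: List[Beam] = []
        for log_p, entity, path in beams:
            # Extend every surviving path by each outgoing action. Paths at a dead end
            # are dropped here; a real agent usually has a self-loop action to stay put.
            for relation, next_entity, prob in policy.get(entity, []):
                new_path = path + ((relation, next_entity),)
                candidates.append((log_p + math.log(prob), next_entity, new_path))
        if not candidates:
            break
        # Keep only the beam_size most probable partial paths.
        candidates.sort(key=lambda beam: beam[0], reverse=True)
        beams = candidates[:beam_size]
    return beams


policy: Policy = {
    "alice": [("friend_of", "bob", 0.6), ("works_with", "carol", 0.4)],
    "bob": [("lives_in", "paris", 0.9), ("friend_of", "carol", 0.1)],
    "carol": [("lives_in", "berlin", 0.7), ("lives_in", "paris", 0.3)],
}

for log_p, entity, path in beam_search(policy, "alice", num_hops=2, beam_size=2):
    hops = " -> ".join(f"{rel}:{ent}" for rel, ent in path)
    print(f"{math.exp(log_p):.2f}  alice -> {hops}")
```

Ranking by cumulative log-probability rather than multiplying raw probabilities avoids numerical underflow on longer paths.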

* Note for the NELL-995 dataset:

On this dataset, we split the original training data into `train.triples` and `dev.triples`; the final model to be tested has to be trained on these two files combined.

1. To obtain the correct test set results, you need to add the `--test` flag to all data pre-processing, training and inference commands.

```
# You may need to adjust the number of training epochs based on dev set performance.
./experiment.sh configs/nell-995.sh --process_data --test
./experiment-emb.sh configs/nell-995-conve.sh --train --test
./experiment-rs.sh configs/nell-995-rs.sh --train --test
./experiment-rs.sh configs/nell-995-rs.sh --inference --test
```

2. Leave out the `--test` flag during development.

### Change the hyperparameters

To change the hyperparameters and other aspects of the experiment setup, start from the [configuration files](configs).

### More on implementation details

We use mini-batch training in our experiments. To reduce the amount of padding (which can cause memory issues and slow down computation for knowledge graphs that contain nodes with large fan-outs), we group the action spaces of different nodes into buckets based on their sizes. A description of the bucket implementation can be found [here](https://github.com/salesforce/MultiHopKG/blob/master/src/rl/graph_search/pn.py#L193) and [here](https://github.com/salesforce/MultiHopKG/blob/master/src/knowledge_graph.py#L164).
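The bucketing idea can be illustrated independently of the linked source files. The pure-Python toy below is a sketch under assumptions, not the repository's code: it groups nodes by `len(actions) // bucket_width` (a hypothetical bucketing rule), pads each node only up to the largest action space in its bucket, and counts the padding slots this costs compared with padding every node to the global maximum. The `PAD` action and all helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Action = Tuple[str, str]              # (relation, target entity)
PAD: Action = ("PAD_REL", "PAD_ENT")  # hypothetical padding action
Buckets = Dict[int, Dict[str, List[Action]]]


def bucket_action_spaces(action_spaces: Dict[str, List[Action]], bucket_width: int) -> Buckets:
    """Group nodes by action-space size, then pad each node only up to its bucket's max."""
    grouped: Buckets = defaultdict(dict)
    for node, actions in action_spaces.items():
        # Nodes whose fan-out falls in the same size range share a bucket.
        grouped[len(actions) // bucket_width][node] = actions
    padded: Buckets = {}
    for key, nodes in grouped.items():
        width = max(len(actions) for actions in nodes.values())
        padded[key] = {
            node: actions + [PAD] * (width - len(actions)) for node, actions in nodes.items()
        }
    return padded


def padding_cost(padded: Buckets) -> int:
    """Total number of padding slots across all buckets."""
    return sum(row.count(PAD) for nodes in padded.values() for row in nodes.values())


# Hypothetical graph: three low-degree nodes and one hub with 100 outgoing edges.
spaces: Dict[str, List[Action]] = {
    "a": [("r", "x")],
    "b": [("r", "x"), ("r", "y")],
    "c": [("r", "x"), ("r", "y"), ("r", "z")],
    "hub": [("r", f"e{i}") for i in range(100)],
}
print(padding_cost(bucket_action_spaces(spaces, bucket_width=1000)))  # one bucket -> 294
print(padding_cost(bucket_action_spaces(spaces, bucket_width=16)))    # hub isolated -> 3
```

In a mini-batch setting, each bucket could then be stored as one dense tensor whose width is that bucket's largest action space, so a single high fan-out node no longer forces every other node to carry about 100 padding slots.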

## Citation

If you find the resources in this repository helpful, please cite:

```
@inproceedings{LinRX2018:MultiHopKG,
  author    = {Xi Victoria Lin and Richard Socher and Caiming Xiong},
  title     = {Multi-Hop Knowledge Graph Reasoning with Reward Shaping},
  booktitle = {Proceedings of the 2018 Conference on Empirical Methods in Natural
               Language Processing, {EMNLP} 2018, Brussels, Belgium, October
               31-November 4, 2018},
  year      = {2018}
}
```