https://github.com/sauljabin/kaskade

kaskade is a kafka text user interface that allows you to interact with kafka and consume topics from your terminal in style!


cli kafka kafka-cli kafka-tools kafka-tui kafka-ui kafka-utils python tui


- Host: GitHub

- URL: https://github.com/sauljabin/kaskade

- Owner: sauljabin

- License: mit

- Created: 2021-09-15T22:09:16.000Z (about 4 years ago)

- Default Branch: main

- Last Pushed: 2023-12-25T16:17:26.000Z (almost 2 years ago)

- Last Synced: 2024-04-26T03:44:05.928Z (over 1 year ago)

- Topics: cli, kafka, kafka-cli, kafka-tools, kafka-tui, kafka-ui, kafka-utils, python, tui

- Language: Python

- Homepage:

- Size: 2.28 MB

- Stars: 91

- Watchers: 6

- Forks: 6

- Open Issues: 10


Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- Funding: .github/FUNDING.yml

- License: LICENSE

Awesome Lists containing this project

- awesome-tuis - kaskade

- awesome-cli-apps-in-a-csv - kaskade - TUI for kafka, which allows you to interact and consume topics from your terminal in style. (System monitoring)

- awesome-cli-apps - kaskade - TUI for kafka, which allows you to interact and consume topics from your terminal in style. (System monitoring)

README

## Kaskade

Kaskade is a text user interface (TUI) for Apache Kafka, built with [Textual](https://github.com/Textualize/textual) by [Textualize](https://www.textualize.io/).

It includes features like:

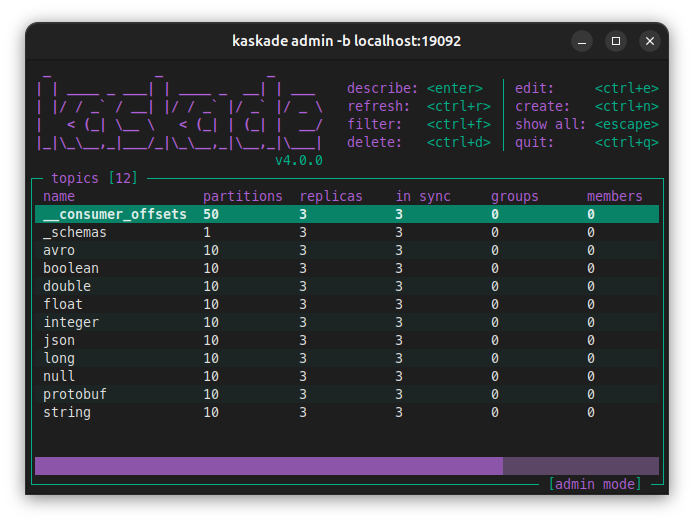

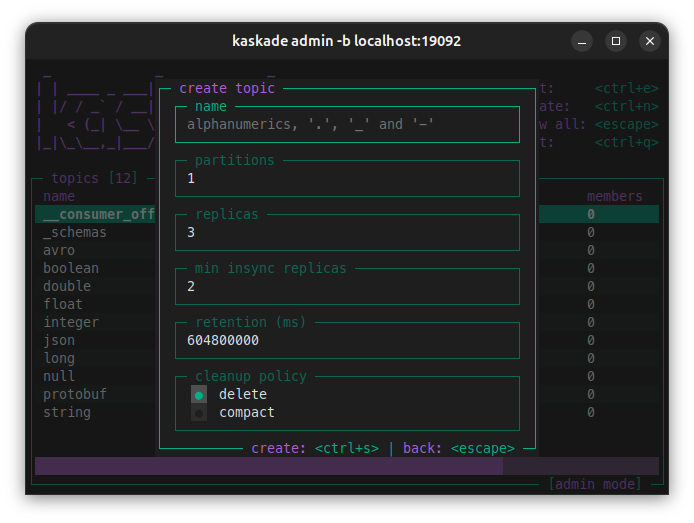

### Admin

- List topics, partitions, groups and group members.

- Topic information like lag, replicas and records count.

- Create, edit and delete topics.

- Filter topics by name.

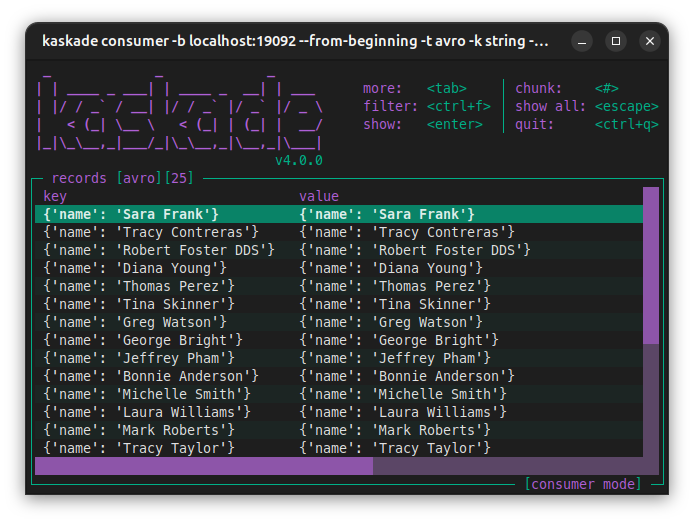

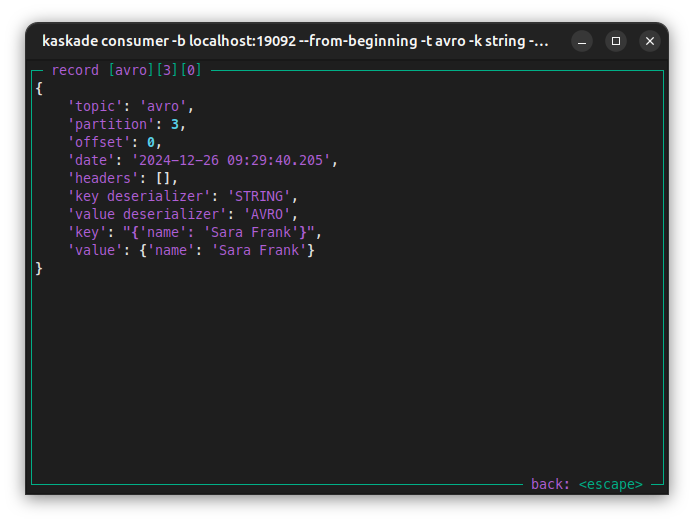

### Consumer

- JSON, string, integer, long, float, boolean and double deserialization.

- Filter by key, value, header and/or partition.

- Schema Registry support for Avro and JSON.

- Protobuf deserialization support without Schema Registry.

- Avro deserialization without Schema Registry.

## Limitations

Kaskade does not include:

- Schema Registry support for Protobuf.

- Runtime auto-refresh.

## Screenshots

## Installation

#### Install it with `brew`:

```bash
brew install kaskade
```

[brew installation](https://brew.sh/).

#### Install it with `pipx`:

```bash
pipx install kaskade
```

[pipx installation](https://pipx.pypa.io/stable/installation/).

## Running kaskade

#### Admin view:

```bash
kaskade admin -b my-kafka:9092
```

#### Consumer view:

```bash
kaskade consumer -b my-kafka:9092 -t my-topic
```

## Configuration examples

#### Multiple bootstrap servers:

```bash
kaskade admin -b my-kafka:9092,my-kafka:9093
```

#### Consume and deserialize:

```bash
kaskade consumer -b my-kafka:9092 -t my-json-topic -k json -v json
```

> Supported deserializers `[bytes, boolean, string, long, integer, double, float, json, avro, protobuf, registry]`

#### Consuming from the beginning:

```bash
kaskade consumer -b my-kafka:9092 -t my-topic --from-beginning
```

#### Schema registry simple connection deserializer:

```bash
kaskade consumer -b my-kafka:9092 -t my-avro-topic \
    -k registry -v registry \
    --registry url=http://my-schema-registry:8081
```

> For more information about Schema Registry configurations go to: [Confluent Schema Registry client](https://docs.confluent.io/platform/current/clients/confluent-kafka-python/html/index.html#schemaregistry-client).

#### Apicurio registry:

```bash
kaskade consumer -b my-kafka:9092 -t my-avro-topic \
    -k registry -v registry \
    --registry url=http://my-apicurio-registry:8081/apis/ccompat/v7
```

> For more about apicurio go to: [Apicurio registry](https://github.com/apicurio/apicurio-registry).

#### SSL encryption example:

```bash
kaskade admin -b my-kafka:9092 -c security.protocol=SSL
```

> For more information about SSL encryption and SSL authentication go to: [Configure librdkafka client](https://github.com/edenhill/librdkafka/wiki/Using-SSL-with-librdkafka#configure-librdkafka-client).

#### Confluent cloud admin and consumer:

```bash
kaskade admin -b ${BOOTSTRAP_SERVERS} \
    -c security.protocol=SASL_SSL \
    -c sasl.mechanism=PLAIN \
    -c sasl.username=${CLUSTER_API_KEY} \
    -c sasl.password=${CLUSTER_API_SECRET}
```

```bash
kaskade consumer -b ${BOOTSTRAP_SERVERS} -t my-avro-topic \
    -k string -v registry \
    -c security.protocol=SASL_SSL \
    -c sasl.mechanism=PLAIN \
    -c sasl.username=${CLUSTER_API_KEY} \
    -c sasl.password=${CLUSTER_API_SECRET} \
    --registry url=${SCHEMA_REGISTRY_URL} \
    --registry basic.auth.user.info=${SR_API_KEY}:${SR_API_SECRET}
```

> More about Confluent Cloud configuration at: [Kafka client quick start for Confluent Cloud](https://docs.confluent.io/cloud/current/client-apps/config-client.html).
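The two commands above read their connection details from shell variables. A minimal sketch of setting them up beforehand is shown here; every value is a made-up placeholder (not a real endpoint or credential), and the check loop is only an illustrative convenience, not something kaskade requires.

```bash
# Placeholder values (assumptions): replace them with your own cluster details.
export BOOTSTRAP_SERVERS="my-cluster.example.com:9092"
export CLUSTER_API_KEY="my-cluster-api-key"
export CLUSTER_API_SECRET="my-cluster-api-secret"
export SCHEMA_REGISTRY_URL="https://my-schema-registry.example.com"
export SR_API_KEY="my-sr-api-key"
export SR_API_SECRET="my-sr-api-secret"

# Warn about any variable that is unset or empty before running kaskade.
for name in BOOTSTRAP_SERVERS CLUSTER_API_KEY CLUSTER_API_SECRET \
    SCHEMA_REGISTRY_URL SR_API_KEY SR_API_SECRET; do
    # Look up the variable whose name is stored in $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
        echo "Missing environment variable: $name" >&2
    fi
done
```

Keeping secrets in environment variables rather than typing them inline also keeps them out of the command text you share or paste elsewhere.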

#### Running with docker:

```bash
docker run --rm -it --network my-network sauljabin/kaskade:latest \
    admin -b my-kafka:9092
```

```bash
docker run --rm -it --network my-network sauljabin/kaskade:latest \
    consumer -b my-kafka:9092 -t my-topic
```

#### Avro consumer:

Consume using the `my-schema.avsc` file:

```bash
kaskade consumer -b my-kafka:9092 --from-beginning \
    -k string -v avro \
    -t my-avro-topic \
    --avro value=my-schema.avsc
```
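If you do not have a schema file at hand, the sketch below writes a small one. The record name, namespace and fields are hypothetical; the file has to match the schema the topic's records were actually written with, so treat this only as an illustration of the `.avsc` (JSON) format.

```bash
# Hypothetical schema: the record name, namespace and fields are placeholders.
cat > my-schema.avsc <<'EOF'
{
  "type": "record",
  "name": "MyRecord",
  "namespace": "com.example",
  "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"}
  ]
}
EOF
```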

#### Protobuf consumer:

Install the `protoc` command:

```bash
brew install protobuf
```

Generate a _Descriptor Set_ file from your `.proto` file:

```bash
protoc --include_imports \
    --descriptor_set_out=my-descriptor.desc \
    --proto_path=${PROTO_PATH} \
    ${PROTO_PATH}/my-proto.proto
```

Consume using the `my-descriptor.desc` file:

```bash
kaskade consumer -b my-kafka:9092 --from-beginning \
    -k string -v protobuf \
    -t my-protobuf-topic \
    --protobuf descriptor=my-descriptor.desc \
    --protobuf value=mypackage.MyMessage
```

> More about protobuf and `FileDescriptorSet` at: [Protocol Buffers documentation](https://protobuf.dev/programming-guides/techniques/#self-description).
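For reference, a `.proto` file that lines up with the commands in this section could look like the sketch below. It assumes that `--protobuf value=` takes the fully qualified message name (package plus message), which is how `mypackage.MyMessage` reads; the field names and the `./proto` directory are hypothetical.

```bash
# Hypothetical location for the .proto sources.
export PROTO_PATH=./proto
mkdir -p "${PROTO_PATH}"

# Package "mypackage" plus message "MyMessage" give the name mypackage.MyMessage.
cat > "${PROTO_PATH}/my-proto.proto" <<'EOF'
syntax = "proto3";

package mypackage;

// Field names and numbers are illustrative only.
message MyMessage {
  string id = 1;
  string name = 2;
}
EOF
```

With this file in place, the `protoc` command from this section would be expected to produce `my-descriptor.desc`.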

## Questions

For Q&A go to [GitHub Discussions](https://github.com/sauljabin/kaskade/discussions/categories/q-a).

## Development

For development instructions see [DEVELOPMENT.md](https://github.com/sauljabin/kaskade/blob/main/DEVELOPMENT.md).