https://github.com/sniklaus/sepconv-slomo

an implementation of Video Frame Interpolation via Adaptive Separable Convolution using PyTorch


cuda cupy deep-learning python pytorch


- Host: GitHub

- URL: https://github.com/sniklaus/sepconv-slomo

- Owner: sniklaus

- Created: 2017-09-10T05:58:15.000Z (over 7 years ago)

- Default Branch: master

- Last Pushed: 2025-01-06T01:01:19.000Z (4 months ago)

- Last Synced: 2025-04-14T18:03:59.149Z (8 days ago)

- Topics: cuda, cupy, deep-learning, python, pytorch

- Language: Python

- Homepage:

- Size: 14.2 MB

- Stars: 1,015

- Watchers: 42

- Forks: 169

- Open Issues: 1


README

This work has now been superseded by: https://github.com/sniklaus/revisiting-sepconv

# sepconv-slomo

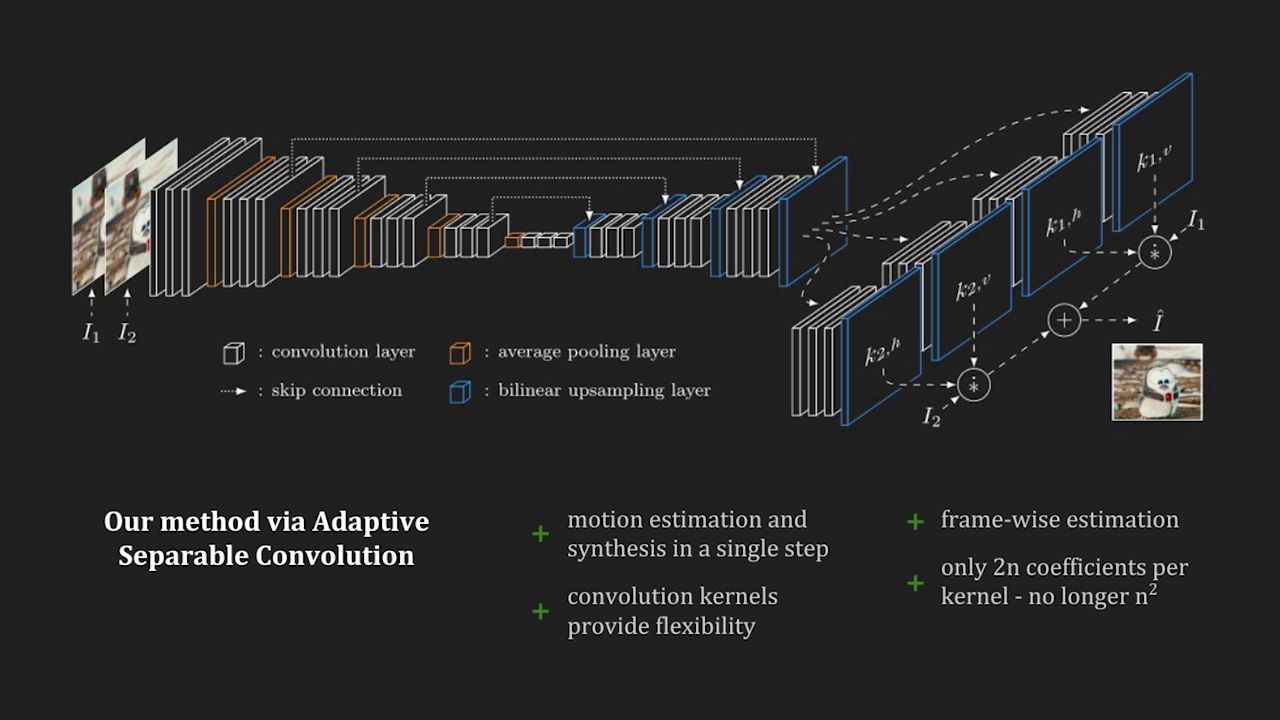

This is a reference implementation of Video Frame Interpolation via Adaptive Separable Convolution [1] using PyTorch. Given two frames, it applies [adaptive convolution](http://sniklaus.com/papers/adaconv) [2] in a separable manner to interpolate the intermediate frame. If you make use of our work, please cite our paper [1].
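The core idea can be sketched in NumPy. Each output pixel is a weighted sum over a K x K neighbourhood in each input frame, and the 2D weights are the outer product of a vertical and a horizontal 1D kernel, so only 2K instead of K² coefficients are needed per pixel and frame. In the paper these kernels are predicted by a convolutional network; here they are simply given as arrays. The function names, the (K, H, W) array layout, and the edge padding are assumptions for illustration, not the repository's CuPy kernel.

```python
import numpy as np


def separable_local_conv(frame, k_vert, k_horz):
    """Filter a single-channel frame with a different separable kernel at every pixel.

    frame:  (H, W) array.
    k_vert: (K, H, W) per-pixel vertical 1D kernels (K odd).
    k_horz: (K, H, W) per-pixel horizontal 1D kernels.
    The effective 2D kernel at (y, x) is np.outer(k_vert[:, y, x], k_horz[:, y, x]).
    """
    K = k_vert.shape[0]
    pad = K // 2
    padded = np.pad(frame, pad, mode="edge")  # edge padding is an assumption of this sketch
    H, W = frame.shape
    out = np.empty((H, W), dtype=np.float64)
    for y in range(H):
        for x in range(W):
            patch = padded[y:y + K, x:x + K]  # K x K window centred on (y, x)
            # kv @ patch @ kh equals sum(outer(kv, kh) * patch) but stores only 2K weights
            out[y, x] = k_vert[:, y, x] @ patch @ k_horz[:, y, x]
    return out


def interpolate_middle(frame1, frame2, kernels1, kernels2):
    """Synthesize the middle frame as the sum of both locally filtered input frames."""
    return separable_local_conv(frame1, *kernels1) + separable_local_conv(frame2, *kernels2)


# Usage: kernels that pick the centre tap with weight 0.5 average the two frames.
H, W, K = 4, 5, 3
delta = np.zeros((K, H, W))
delta[K // 2] = 1.0
f1, f2 = np.random.default_rng(0).random((2, H, W))
mid = interpolate_middle(f1, f2, (0.5 * delta, delta), (0.5 * delta, delta))
```

The double loop keeps the arithmetic visible; a practical implementation would run all pixels in parallel on the GPU, which is why the repository implements this layer in CUDA.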

For a reimplementation of our work, see: https://github.com/martkartasev/sepconv

And for another adaptation, consider: https://github.com/HyeongminLEE/pytorch-sepconv

For softmax splatting, please see: https://github.com/sniklaus/softmax-splatting

## setup

The separable convolution layer is implemented in CUDA using CuPy, which is why CuPy is a required dependency. It can be installed using `pip install cupy` or alternatively using one of the provided [binary packages](https://docs.cupy.dev/en/stable/install.html#installing-cupy) as outlined in the CuPy documentation.

If you plan to process videos, please also install MoviePy using `pip install moviepy`.
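Before running the scripts, it can help to confirm that the packages mentioned above are importable. The following is a minimal sketch using only the standard library; the helper name `missing_dependencies` is hypothetical and not part of this repository.

```python
import importlib.util
from typing import Iterable, List


def missing_dependencies(modules: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found on the current Python path."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


# Usage (moviepy is only needed for video processing):
# print(missing_dependencies(["torch", "cupy", "moviepy"]))
```

Note that finding `cupy` only shows the package is installed; it does not guarantee that its CUDA build matches the installed driver.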

## usage

To run it on your own pair of frames, use the following command. You can select either the `l1` or the `lf` model; please see our paper for more details. In short, the `l1` model should be used for quantitative evaluations and the `lf` model for qualitative comparisons.

```
python run.py --model lf --one ./images/one.png --two ./images/two.png --out ./out.png
```
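To process many frame pairs from a script, the command above can be assembled programmatically. This is a sketch that uses only the flags shown in this README; the helper names and the output filename pattern are hypothetical, and it assumes the working directory is the repository root so that `run.py` resolves.

```python
import subprocess
import sys
from typing import Iterable, List, Tuple

MODELS = ("l1", "lf")  # the two models described in this README


def build_pair_command(model: str, one: str, two: str, out: str) -> List[str]:
    """Assemble the run.py invocation for a single pair of frames."""
    if model not in MODELS:
        raise ValueError(f"model must be one of {MODELS}, got {model!r}")
    return [sys.executable, "run.py", "--model", model,
            "--one", one, "--two", two, "--out", out]


def interpolate_pairs(model: str, pairs: Iterable[Tuple[str, str]],
                      out_pattern: str = "./out-{:03d}.png") -> None:
    """Run run.py once per (one, two) pair, numbering the outputs with out_pattern."""
    for index, (one, two) in enumerate(pairs):
        subprocess.run(build_pair_command(model, one, two, out_pattern.format(index)), check=True)
```

Because each call starts a new process, the network is loaded again for every pair; for large batches, calling the model from within a single Python process would avoid that overhead.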

To run it on a video, use the following command.

```
python run.py --model lf --video ./videos/car-turn.mp4 --out ./out.mp4
```
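Conceptually, doubling a video's frame rate amounts to synthesizing one frame between every pair of consecutive input frames. The sketch below shows only that interleaving step, with `interpolate` standing in for the network; the function name is hypothetical, and whether `run.py` handles the video in exactly this way is an assumption.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def double_frame_rate(frames: Sequence[Frame],
                      interpolate: Callable[[Frame, Frame], Frame]) -> List[Frame]:
    """Insert one interpolated frame between every pair of consecutive frames."""
    if not frames:
        return []
    out = [frames[0]]
    for prev, nxt in zip(frames, frames[1:]):
        out.append(interpolate(prev, nxt))  # synthesized intermediate frame
        out.append(nxt)                     # original frame
    return out
```

For N input frames this yields 2N - 1 output frames, so playing the result at twice the original frame rate keeps the duration roughly unchanged.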

For a quick benchmark using examples from the Middlebury benchmark for optical flow, run `python benchmark.py`. You can use it to easily verify that the provided implementation runs as expected.
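Interpolation quality is commonly measured with the peak signal-to-noise ratio (PSNR) between the interpolated frame and the ground-truth middle frame. This README does not state which metric `benchmark.py` reports, so the following NumPy sketch is an assumption about how such a check could be computed, for images scaled to [0, 1] by default.

```python
import numpy as np


def psnr(estimate, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB; use max_value=255 for 8-bit images."""
    estimate = np.asarray(estimate, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((estimate - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For example, a uniform error of 0.1 gives a mean squared error of 0.01 and therefore 10 * log10(1 / 0.01) = 20 dB.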

## license

The provided implementation is strictly for academic purposes. Should you be interested in using our technology for any commercial use, please feel free to contact us.

## references

```
[1] @inproceedings{Niklaus_ICCV_2017,
    author = {Simon Niklaus and Long Mai and Feng Liu},
    title = {Video Frame Interpolation via Adaptive Separable Convolution},
    booktitle = {IEEE International Conference on Computer Vision},
    year = {2017}
}
```

```
[2] @inproceedings{Niklaus_CVPR_2017,
    author = {Simon Niklaus and Long Mai and Feng Liu},
    title = {Video Frame Interpolation via Adaptive Convolution},
    booktitle = {IEEE Conference on Computer Vision and Pattern Recognition},
    year = {2017}
}
```

## acknowledgment

This work was supported by NSF IIS-1321119. The video above uses materials under a Creative Commons license or with the owner's permission, as detailed at the end of the video.