https://github.com/spatie/http-status-check

CLI tool to crawl a website and check HTTP status codes

- Host: GitHub

- URL: https://github.com/spatie/http-status-check

- Owner: spatie

- License: mit

- Created: 2015-10-22T06:57:28.000Z (over 10 years ago)

- Default Branch: main

- Last Pushed: 2023-12-18T14:24:14.000Z (about 2 years ago)

- Last Synced: 2025-04-14T19:59:37.407Z (10 months ago)

- Topics: curl, health, http, seo, statuscode

- Language: PHP

- Homepage: https://freek.dev/308-building-a-crawler-in-php

- Size: 117 KB

- Stars: 595

- Watchers: 20

- Forks: 89

- Open Issues: 0

Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- License: LICENSE.md

README

# Check the HTTP status code of all links on a website

This repository provides a tool to check the HTTP status code of every link on a given website.

## Support us

We invest a lot of resources into creating [best in class open source packages](https://spatie.be/open-source). You can support us by [buying one of our paid products](https://spatie.be/open-source/support-us).

We highly appreciate you sending us a postcard from your hometown, mentioning which of our package(s) you are using. You'll find our address on [our contact page](https://spatie.be/about-us). We publish all received postcards on [our virtual postcard wall](https://spatie.be/open-source/postcards).

## Installation

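This package can be installed globally via Composer. Make sure Composer's global `vendor/bin` directory is in your `PATH` so that the `http-status-check` command is available: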

```bash
composer global require spatie/http-status-check
```

## Usage

This tool will scan all links on a given website:

```bash
http-status-check scan https://example.com
```

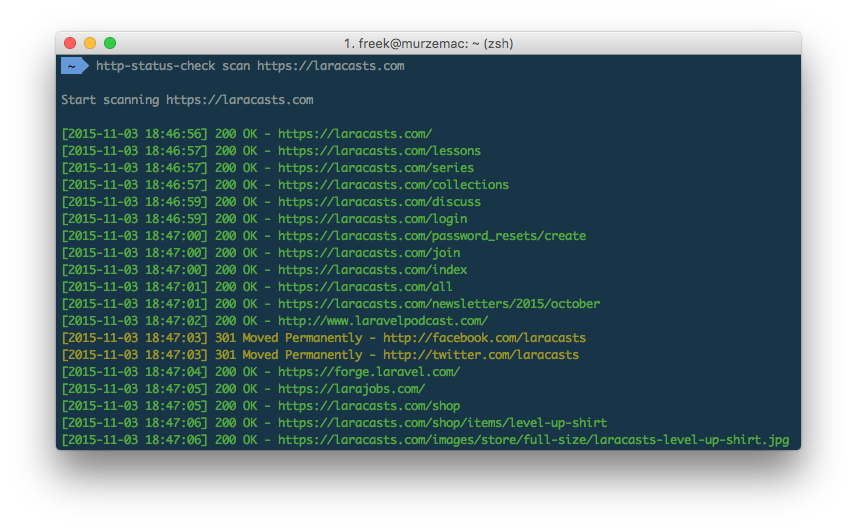

It outputs a line per link found. Here's an example on Laracast website scan:

When the crawling process is finished a summary will be shown.

By default, the crawler uses 10 concurrent connections to speed up crawling. You can change that number by passing a different value to the `--concurrency` option:

```bash
http-status-check scan https://example.com --concurrency=20
```
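
Limiting the number of in-flight requests is the idea behind `--concurrency`. The Python sketch below illustrates that technique with an `asyncio.Semaphore`; it is not the tool's own implementation (the tool is written in PHP), and `fake_fetch` is a hypothetical stand-in that sleeps instead of making a real HTTP request, so the example runs offline.

```python
import asyncio


async def fake_fetch(url: str, state: dict) -> tuple[str, int]:
    """Hypothetical stand-in for an HTTP request: tracks in-flight count and sleeps."""
    state["in_flight"] += 1
    state["peak"] = max(state["peak"], state["in_flight"])
    await asyncio.sleep(0.01)  # simulate network latency
    state["in_flight"] -= 1
    return url, 200


async def crawl(urls: list[str], concurrency: int = 10) -> tuple[list[tuple[str, int]], int]:
    """Fetch all URLs, never running more than `concurrency` requests at once."""
    semaphore = asyncio.Semaphore(concurrency)
    state = {"in_flight": 0, "peak": 0}

    async def bounded_fetch(url: str) -> tuple[str, int]:
        # Waits here while `concurrency` requests are already running.
        async with semaphore:
            return await fake_fetch(url, state)

    results = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return list(results), state["peak"]


if __name__ == "__main__":
    urls = [f"https://example.com/page-{i}" for i in range(50)]
    results, peak = asyncio.run(crawl(urls, concurrency=20))
    print(f"fetched {len(results)} URLs, at most {peak} at a time")
```

A higher limit finishes a large site sooner but puts more simultaneous load on the server being crawled.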

You can also write all URLs that returned a response other than 2xx or 3xx to a file:

```bash
http-status-check scan https://example.com --output=log.txt
```
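
The rule for which URLs end up in the output file fits in a small predicate. This Python sketch is a hypothetical illustration of the rule described above (anything outside the 2xx and 3xx ranges is logged); treating a failed request that produced no status code at all (`None`) as loggable is an assumption of the sketch.

```python
from typing import Optional


def should_log(status: Optional[int]) -> bool:
    """Return True when a crawled URL belongs in the --output file.

    Assumption: a request that failed without any status code
    (represented here as None) counts as a problem and is logged.
    """
    if status is None:
        return True
    return not (200 <= status <= 399)


crawled = {
    "https://example.com/": 200,
    "https://example.com/old-page": 301,
    "https://example.com/missing": 404,
    "https://example.com/broken": 500,
    "https://unreachable.example/": None,
}

# Only the 404, the 500 and the failed request are printed.
for url, status in crawled.items():
    if should_log(status):
        print(f"{status}: {url}")
```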

When the crawler finds a link to an external website, it will crawl that link as well by default. If you don't want the crawler to crawl such external URLs, use the `--dont-crawl-external-links` option:

```bash
http-status-check scan https://example.com --dont-crawl-external-links
```
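
Whether a link counts as external comes down to comparing hosts. The Python sketch below, using only `urllib.parse`, shows one plausible rule: resolve the link against the page it was found on, then compare hostnames. The `is_external` function is hypothetical, and the tool's exact rule (for example, how it treats subdomains) may differ.

```python
from urllib.parse import urljoin, urlparse


def is_external(page_url: str, href: str) -> bool:
    """Return True if `href`, found on `page_url`, points to a different host."""
    absolute = urljoin(page_url, href)  # resolve relative links such as "/about"
    return urlparse(absolute).hostname != urlparse(page_url).hostname


print(is_external("https://example.com/blog/", "/about"))                 # False
print(is_external("https://example.com/blog/", "post-1"))                 # False
print(is_external("https://example.com/", "https://github.com/"))         # True
print(is_external("https://example.com/", "https://blog.example.com/"))   # True: different host
```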

By default, requests time out after 10 seconds. You can change this by passing the number of seconds to the `--timeout` option:

```bash
http-status-check scan https://example.com --timeout=30
```

By default, the crawler respects robots data (such as `robots.txt`). You can ignore it with the `--ignore-robots` option:

```bash
http-status-check scan https://example.com --ignore-robots
```
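
`robots.txt` is one common source of robots data. To illustrate what respecting it means, the Python standard library's `urllib.robotparser` can decide whether a URL may be fetched. This is not how the tool itself is implemented, and the rules below are a made-up example.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content, made up for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/public/page", "https://example.com/private/page"):
    allowed = parser.can_fetch("*", url)
    print(f"{url}: {'crawl' if allowed else 'skip'}")
```

A crawler that respects these rules skips `/private/page`; with `--ignore-robots`, such rules are not applied.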

If your site requires HTTP basic authentication, you can use the `--auth` option:

```bash
http-status-check scan https://example.com --auth=username:password
```
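
HTTP basic authentication (RFC 7617) sends the credentials as a base64-encoded `username:password` pair in the `Authorization` header. The Python sketch below only illustrates that encoding; the `basic_auth_header` helper is hypothetical and says nothing about how the tool builds its requests. Note that base64 is an encoding, not encryption.

```python
import base64


def basic_auth_header(credentials: str) -> str:
    """Build an Authorization header value from a "username:password" string."""
    encoded = base64.b64encode(credentials.encode("utf-8")).decode("ascii")
    return f"Basic {encoded}"


print(basic_auth_header("username:password"))  # Basic dXNlcm5hbWU6cGFzc3dvcmQ=
```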

## Testing

To run the tests, first make sure you have [Node.js](https://nodejs.org/) installed. Then start the included Node.js-based test server in a separate terminal window:

```bash
cd tests/server
npm install
node server.js
```

With the server running, you can start testing:

```bash
vendor/bin/phpunit
```

## Changelog

Please see [CHANGELOG](CHANGELOG.md) for more information on what has changed recently.

## Contributing

Please see [CONTRIBUTING](https://github.com/spatie/.github/blob/main/CONTRIBUTING.md) for details.

## Security

If you've found a bug regarding security, please mail [security@spatie.be](mailto:security@spatie.be) instead of using the issue tracker.

## Credits

- [Freek Van der Herten](https://github.com/freekmurze)

- [Sebastian De Deyne](https://github.com/sebastiandedeyne)

- [All Contributors](../../contributors)

## License

The MIT License (MIT). Please see [License File](LICENSE.md) for more information.