https://github.com/sylphai-inc/adalflow

AdalFlow: The library to build & auto-optimize LLM applications.






- Host: GitHub

- URL: https://github.com/sylphai-inc/adalflow

- Owner: SylphAI-Inc

- License: mit

- Created: 2024-04-19T05:05:13.000Z (over 1 year ago)

- Default Branch: main

- Last Pushed: 2025-03-26T15:22:05.000Z (7 months ago)

- Last Synced: 2025-05-01T22:17:33.132Z (6 months ago)

- Topics: agent, ai, auto-prompting, bm25, chatbot, faiss, framework, generative-ai, information-retrieval, llm, machine-learning, nlp, optimizer, python, question-answering, rag, reranker, retriever, summarization, trainer

- Language: Python

- Homepage: http://adalflow.sylph.ai/

- Size: 101 MB

- Stars: 2,957

- Watchers: 22

- Forks: 256

- Open Issues: 34


Metadata Files:

- Readme: README.md

- License: LICENSE.md


README

⚡ Say Goodbye to Manual Prompting and Vendor Lock-In ⚡

AdalFlow is a PyTorch-like library to build and auto-optimize any LM workflow, from chatbots and RAG to agents.

# Why AdalFlow

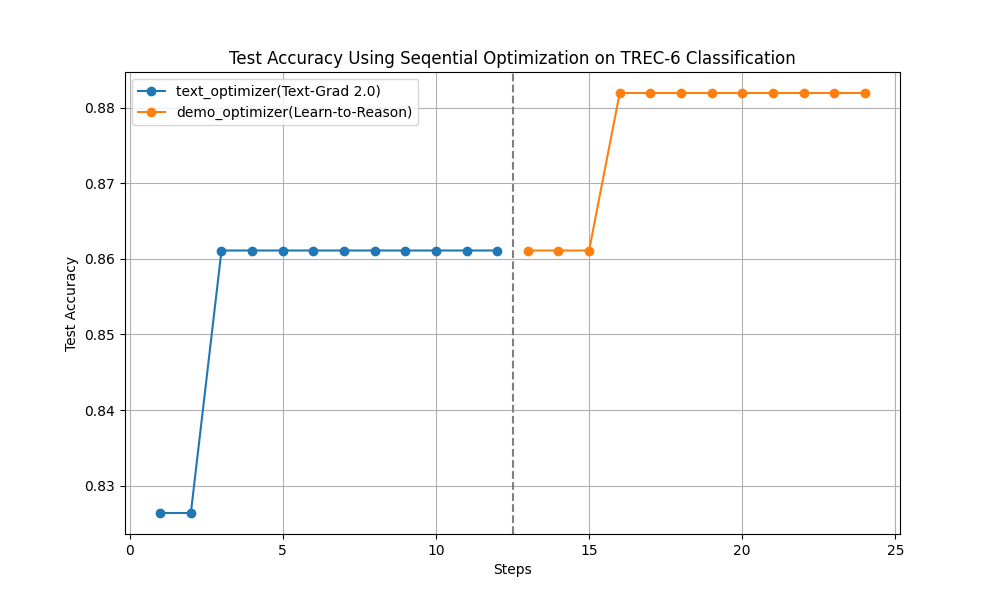

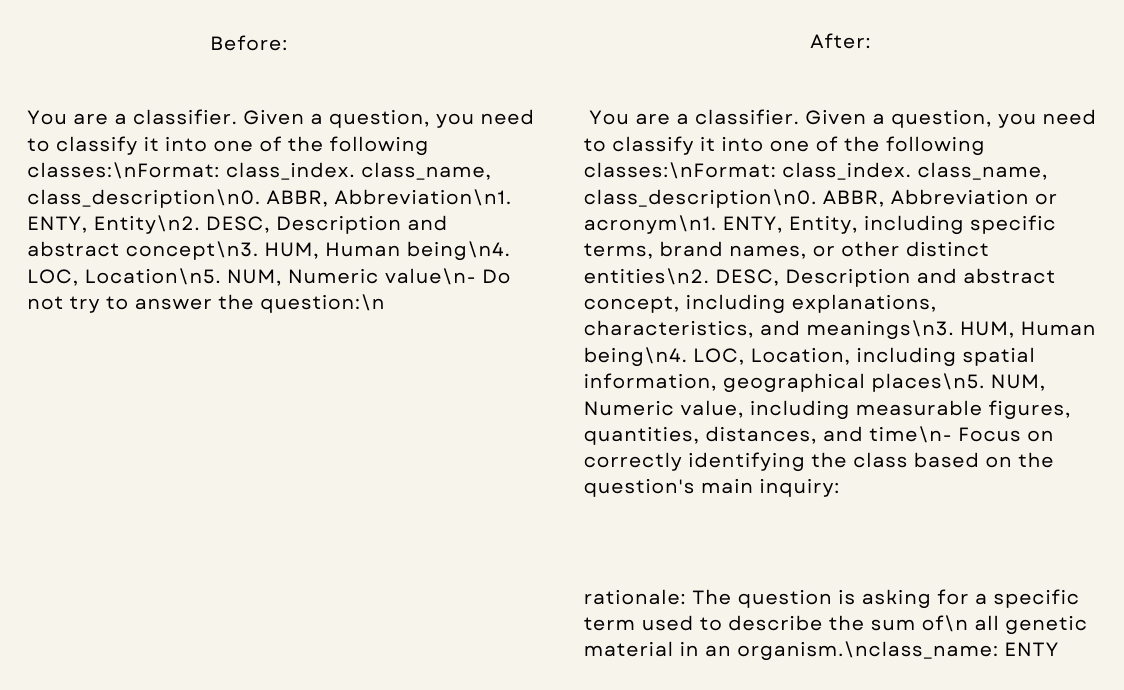

1. **Say goodbye to manual prompting**: AdalFlow provides a unified auto-differentiative framework for both zero-shot and few-shot prompt optimization. Our research, ``LLM-AutoDiff`` and ``Learn-to-Reason Few-shot In Context Learning``, achieves the highest accuracy among all auto-prompt optimization libraries.

2. **Switch your LLM app to any model via a config**: AdalFlow provides `Model-agnostic` building blocks for LLM task pipelines, ranging from RAG and agents to classical NLP tasks.
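To make the "switch models via a config" idea concrete, here is a minimal, library-independent sketch. It does not use AdalFlow's actual API: `ModelConfig`, `CLIENTS`, `run_task`, and the two stand-in clients are hypothetical names invented for illustration. The point is only that the task logic stays the same while the config decides which backend runs it.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ModelConfig:
    """Hypothetical config: which backend to call and with which model name."""
    provider: str
    model: str


def provider_a_client(prompt: str, cfg: ModelConfig) -> str:
    # Stand-in for a real API call; it only echoes the request.
    return f"[{cfg.provider}/{cfg.model}] {prompt}"


def provider_b_client(prompt: str, cfg: ModelConfig) -> str:
    # A second stand-in backend with a different response format.
    return f"<{cfg.model}> {prompt.upper()}"


# Hypothetical registry: maps a provider name in the config to a client function.
CLIENTS: Dict[str, Callable[[str, ModelConfig], str]] = {
    "provider_a": provider_a_client,
    "provider_b": provider_b_client,
}


def run_task(question: str, cfg: ModelConfig) -> str:
    """Build the prompt once; only the config decides which client handles it."""
    if cfg.provider not in CLIENTS:
        raise ValueError(f"Unknown provider: {cfg.provider}")
    prompt = f"Answer concisely: {question}"
    return CLIENTS[cfg.provider](prompt, cfg)


if __name__ == "__main__":
    # Same task code, two different backends, selected purely by config.
    print(run_task("What is RAG?", ModelConfig("provider_a", "small-model")))
    print(run_task("What is RAG?", ModelConfig("provider_b", "large-model")))
```

See the documentation for the model clients and configuration options that AdalFlow actually ships.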

View [Documentation](https://adalflow.sylph.ai)

# Quick Start

Install AdalFlow with pip:

```bash

pip install adalflow

```
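To confirm that the install is visible to the current interpreter, a quick standard-library check is enough. The helper name `installed_version` is ours and is not part of AdalFlow.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str = "adalflow") -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("adalflow is not installed; run `pip install adalflow` first.")
    else:
        print(f"adalflow {version} is installed.")
```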

View [Quickstart](https://colab.research.google.com/drive/1_YnD4HshzPRARvishoU4IA-qQuX9jHrT?usp=sharing): Learn the AdalFlow end-to-end experience in 15 minutes.

# Research

[Jan 2025] [Auto-Differentiating Any LLM Workflow: A Farewell to Manual Prompting](https://arxiv.org/abs/2501.16673)

- LLM Applications as auto-differentiation graphs

- More token-efficient and better-performing than DSPy
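As a rough intuition for treating an LLM application as an auto-differentiation graph, the toy sketch below passes textual feedback backward from the output to the nodes that produced it, in reverse topological order. It is an illustration only, not the algorithm from the paper or AdalFlow's implementation: `Node`, `topological_order`, and `backward` are hypothetical names, and a real system would use an LLM to generate the feedback for each edge and an optimizer to rewrite the trainable prompts.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A piece of text in the workflow graph (a prompt, an input, or an output)."""
    name: str
    text: str
    trainable: bool = False
    parents: List["Node"] = field(default_factory=list)
    feedback: List[str] = field(default_factory=list)


def topological_order(output: Node) -> List[Node]:
    """Return nodes so that every parent appears before its children."""
    order: List[Node] = []
    seen = set()

    def visit(node: Node) -> None:
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    return order


def backward(output: Node, loss_feedback: str) -> None:
    """Propagate textual feedback from the output back to all of its ancestors."""
    output.feedback.append(loss_feedback)
    # Reverse order guarantees a node has collected all of its feedback
    # before it forwards that feedback to its parents.
    for node in reversed(topological_order(output)):
        for parent in node.parents:
            for message in node.feedback:
                parent.feedback.append(f"via {node.name}: {message}")


if __name__ == "__main__":
    prompt = Node("system_prompt", "Answer the question.", trainable=True)
    question = Node("question", "What is 2 + 2?")
    answer = Node("answer", "5", parents=[prompt, question])

    backward(answer, "The answer is wrong; show the arithmetic.")
    for node in topological_order(answer):
        if node.trainable:
            print(node.name, "received:", node.feedback)
```

Only nodes marked `trainable` would be rewritten by an optimizer; the other nodes simply carry feedback through the graph.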

# Collaborations

We work closely with the [**VITA Group** at University of Texas at Austin](https://vita-group.github.io/), under the leadership of [Dr. Atlas Wang](https://www.ece.utexas.edu/people/faculty/atlas-wang), alongside [Dr. Junyuan Hong](https://jyhong.gitlab.io/), who provides valuable support in driving project initiatives.

For collaboration, contact [Li Yin](https://www.linkedin.com/in/li-yin-ai/).

# Documentation

Full AdalFlow documentation is available at [adalflow.sylph.ai](https://adalflow.sylph.ai/).

# AdalFlow: A Tribute to Ada Lovelace

AdalFlow is named in honor of [Ada Lovelace](https://en.wikipedia.org/wiki/Ada_Lovelace), the pioneering female mathematician who first recognized that machines could go beyond mere calculations. As a team led by a female founder, we aim to inspire more women to pursue careers in AI.

# Community & Contributors

AdalFlow is a community-driven project, and we welcome everyone to join us in building the future of LLM applications.

Join our [Discord](https://discord.gg/ezzszrRZvT) community to ask questions, share your projects, and get updates on AdalFlow.

To contribute, please read our [Contributor Guide](https://adalflow.sylph.ai/contributor/index.html).

# Contributors

[View all contributors](https://github.com/SylphAI-Inc/AdalFlow/graphs/contributors)

# Acknowledgements

Many existing works greatly inspired the AdalFlow library. Here is a non-exhaustive list:

- 📚 [PyTorch](https://github.com/pytorch/pytorch/) for the design philosophy and the design patterns of ``Component``, ``Parameter``, and ``Sequential``.

- 📚 [Micrograd](https://github.com/karpathy/micrograd): A tiny autograd engine for our auto-differentiative architecture.

- 📚 [Text-Grad](https://github.com/zou-group/textgrad) for the ``Textual Gradient Descent`` text optimizer.

- 📚 [DSPy](https://github.com/stanfordnlp/dspy) for inspiring the ``__{input/output}__fields`` in our ``DataClass`` and the bootstrap few-shot optimizer.

- 📚 [OPRO](https://github.com/google-deepmind/opro) for adding past text instructions along with their accuracy in the text optimizer.

- 📚 [PyTorch Lightning](https://github.com/Lightning-AI/pytorch-lightning) for the ``AdalComponent`` and ``Trainer``.