https://github.com/tiger-ai-lab/critiquefinetuning

Code for "Critique Fine-Tuning: Learning to Critique is More Effective than Learning to Imitate"

https://github.com/tiger-ai-lab/critiquefinetuning

fine-tuning languagemodel

Last synced: 4 months ago

JSON representation

Code for "Critique Fine-Tuning: Learning to Critique is More Effective than Learning to Imitate"

- Host: GitHub

- URL: https://github.com/tiger-ai-lab/critiquefinetuning

- Owner: TIGER-AI-Lab

- License: mit

- Created: 2025-01-29T14:03:53.000Z (8 months ago)

- Default Branch: main

- Last Pushed: 2025-06-04T22:38:01.000Z (4 months ago)

- Last Synced: 2025-06-05T03:30:02.683Z (4 months ago)

- Topics: fine-tuning, languagemodel

- Language: Python

- Homepage: https://tiger-ai-lab.github.io/CritiqueFineTuning/

- Size: 37.7 MB

- Stars: 151

- Watchers: 2

- Forks: 10

- Open Issues: 0

-

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

README

# CritiqueFineTuning

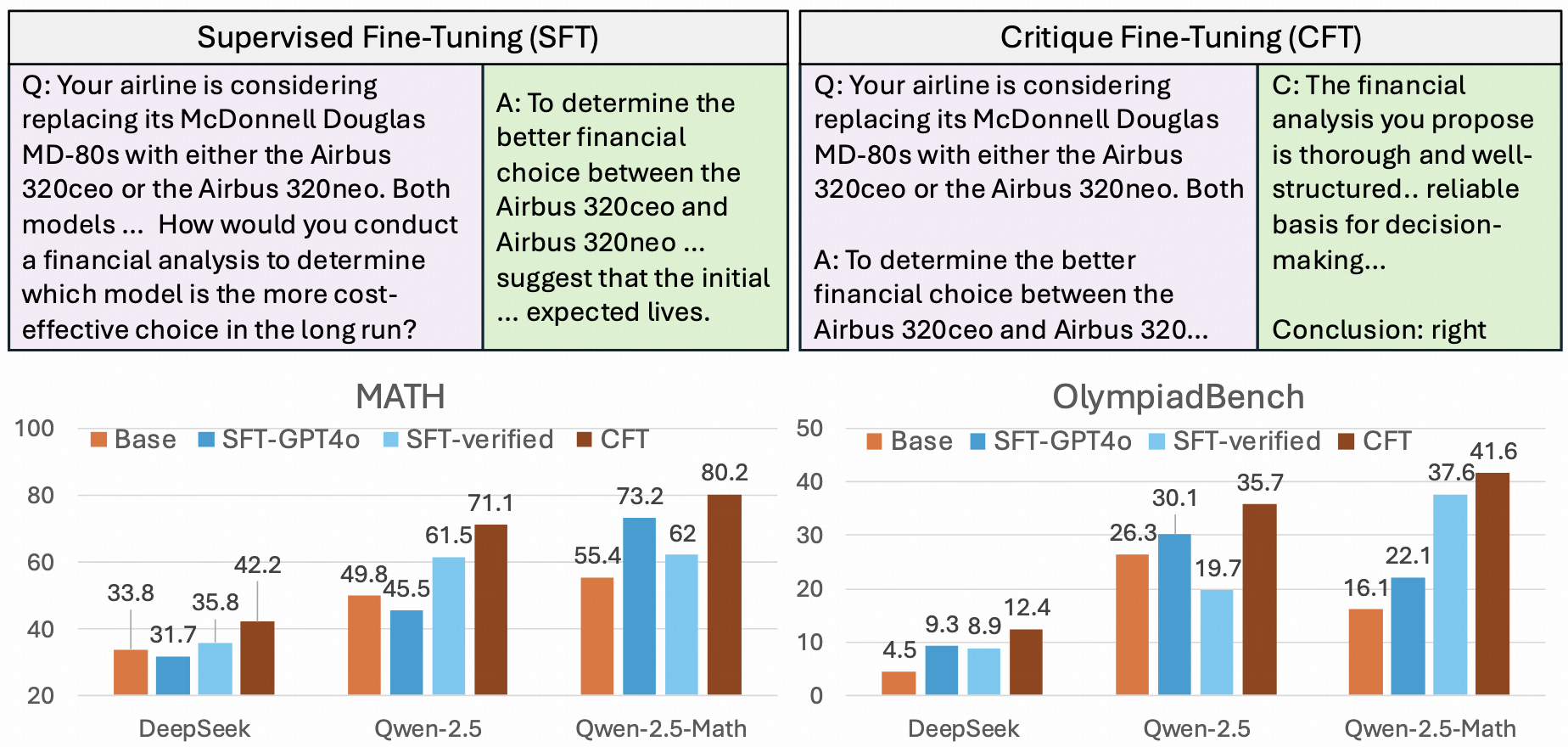

This repo contains the code for [Critique Fine-Tuning: Learning to Critique is More Effective than Learning to Imitate](https://arxiv.org/abs/2501.17703). In this paper, we introduce Critique Fine-Tuning (CFT) - a paradigm shift in LLM training where models learn to critique rather than imitate!

## Highlights

Our fine-tuning method can achieve on par results with RL training!

## News

- **[2025/01/30]** ⚡️ The paper, code, data, and model for CritiqueFineTuning are all available online.

## Getting Started

### Installation

1. First install LLaMA-Factory:

```bash

git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git

cd LLaMA-Factory

pip install -e ".[torch,metrics]"

```

2. Install additional requirements:

pip install -r requirements.txt

### Training Steps

1. First, clone the repository and download the dataset:

```bash

git clone https://github.com/TIGER-AI-Lab/CritiqueFineTuning.git

cd tools/scripts

bash download_data.sh

```

2. Configure model paths in train/scripts/train_qwen2_5-math-7b-cft/qwen2.5-math-7b-cft-webinstruct-50k.yaml

3. Start training:

```bash

cd ../../train/scripts/train_qwen2_5-math-7b-cft

bash train.sh

```

For training the 32B model, follow a similar process but refer to the configuration in train/scripts/train_qwen2_5-32b-instruct-cft/qwen2.5-32b-cft-webinstruct-4k.yaml.

Note: In our paper experiments, we used MATH-500 as the validation set to select the final checkpoint. After training is complete, run the following commands to generate validation scores:

```bash

cd train/Validation

bash start_validate.sh

```

This will create a validation_summary.txt file containing MATH-500 scores for each checkpoint. Select the checkpoint with the highest score as your final model.

## Evaluation

Fill in the model path and evaluation result save path in tools/scripts/evaluate.sh, then run:

```bash

cd tools/scripts

bash evaluate.sh

```

Hardware may have a slight impact on evaluation results based on our testing. To fully reproduce our results, we recommend testing on A6000 GPU with CUDA 12.4 and vllm==0.6.6. For more environment details, please refer to requirements.txt

Note: Our evaluation code is modified from [Qwen2.5-Math](https://github.com/QwenLM/Qwen2.5-Math) and [MAmmoTH](https://github.com/TIGER-AI-Lab/MAmmoTH).

## Construct Critique Data

To create your own critique data, you can use our data generation script:

```bash

cd tools/self_construct_critique_data

bash run.sh

```

Simply modify the model_name parameter in run.sh to specify which model you want to use as the critique teacher. The script will generate critique data following our paper's approach.

## Citation

Cite our paper as

```

@article{wang2025critique,

title={Critique fine-tuning: Learning to critique is more effective than learning to imitate},

author={Wang, Yubo and Yue, Xiang and Chen, Wenhu},

journal={arXiv preprint arXiv:2501.17703},

year={2025}

}

```