https://github.com/titu1994/Keras-ResNeXt

Implementation of ResNeXt models from the paper Aggregated Residual Transformations for Deep Neural Networks in Keras 2.0+.


- Host: GitHub

- URL: https://github.com/titu1994/Keras-ResNeXt

- Owner: titu1994

- License: mit

- Created: 2017-06-09T16:25:11.000Z (over 8 years ago)

- Default Branch: master

- Last Pushed: 2021-04-17T10:05:32.000Z (over 4 years ago)

- Last Synced: 2025-03-24T08:05:38.300Z (8 months ago)

- Language: Python

- Size: 630 KB

- Stars: 224

- Watchers: 8

- Forks: 79

- Open Issues: 8

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome-image-classification - unofficial-keras : https://github.com/titu1994/Keras-ResNeXt

README

# Keras ResNeXt

Implementation of ResNeXt models from the paper [Aggregated Residual Transformations for Deep Neural Networks](https://arxiv.org/pdf/1611.05431.pdf) in Keras 2.0+.

Contains code for building the general ResNeXt model, `ResNext` (optimized for datasets similar to CIFAR), and `ResNextImageNet` (optimized for the ImageNet dataset).

# Salient Features

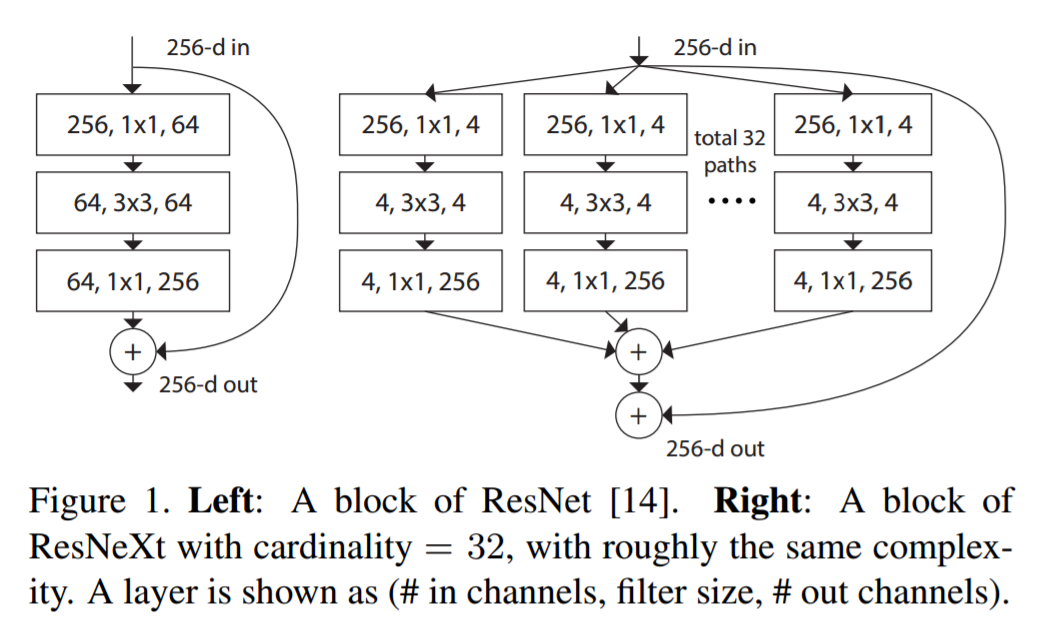

ResNeXt updates the ResNet block with an expanded, multi-branch block architecture whose number of parallel transformation paths is set by the `cardinality` parameter. It is visualised in the diagram from the paper.
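In the paper's notation, a ResNeXt block aggregates $C$ transformations $\mathcal{T}_i$ that share the same bottleneck topology (1×1, 3×3, 1×1 convolutions), where $C$ is the cardinality (the `cardinality` parameter here), and adds the identity shortcut:

$$
\mathcal{F}(\mathbf{x}) = \sum_{i=1}^{C} \mathcal{T}_i(\mathbf{x}),
\qquad
\mathbf{y} = \mathbf{x} + \sum_{i=1}^{C} \mathcal{T}_i(\mathbf{x})
$$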

---

However, since grouped convolutions are not directly available in Keras, an equivalent variant is used in this repository (see block 2).
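The equivalence can be checked on a toy example. The following is a minimal pure-Python sketch (not code from this repository; the helpers `matvec`, `grouped_conv_split` and `block_diagonal` are hypothetical) showing that splitting the channels into `cardinality` groups, convolving each group separately and concatenating the results gives the same output as one convolution whose weights are block-diagonal, i.e. a grouped convolution. For brevity it assumes 1×1 kernels at a single pixel; the channel grouping works the same way for the 3×3 convolutions inside a ResNeXt block.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = output channels, columns = input channels


def matvec(w: Matrix, x: Vector) -> Vector:
    """A 1x1 convolution at one pixel: out[o] = sum_i w[o][i] * x[i]."""
    return [sum(w_oi * x_i for w_oi, x_i in zip(row, x)) for row in w]


def grouped_conv_split(x: Vector, group_weights: List[Matrix]) -> Vector:
    """Split the input channels into equal groups (one per weight matrix),
    convolve each group with its own weights, then concatenate the outputs."""
    cardinality = len(group_weights)
    if len(x) % cardinality != 0:
        raise ValueError("input channels must divide evenly into groups")
    size = len(x) // cardinality
    out: Vector = []
    for g, w in enumerate(group_weights):
        out.extend(matvec(w, x[g * size:(g + 1) * size]))
    return out


def block_diagonal(group_weights: List[Matrix]) -> Matrix:
    """The equivalent single convolution: each group's weights sit on the
    diagonal and every cross-group weight is zero."""
    n_in = sum(len(w[0]) for w in group_weights)
    full: Matrix = []
    col = 0
    for w in group_weights:
        for row in w:
            full.append([0.0] * col + list(row) + [0.0] * (n_in - col - len(row)))
        col += len(w[0])
    return full


x = [1.0, 2.0, 3.0, 4.0]               # 4 input channels at one pixel
groups = [[[1.0, 0.5], [2.0, -1.0]],   # group 1: channels 0-1 -> 2 outputs
          [[0.0, 1.0], [3.0, 1.0]]]    # group 2: channels 2-3 -> 2 outputs
print(grouped_conv_split(x, groups))      # [2.0, 0.0, 4.0, 13.0]
print(matvec(block_diagonal(groups), x))  # [2.0, 0.0, 4.0, 13.0]
```

The zero blocks in `block_diagonal` are what distinguish a grouped convolution from an ordinary one: no output channel sees input channels from another group.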

# Usage

For the general ResNeXt model (intended for datasets other than ImageNet):

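```python
from resnext import ResNext

# Illustrative values for 32x32 RGB inputs (e.g. CIFAR-10); not tuned.
image_shape = (32, 32, 3)  # (rows, cols, channels) for 'channels_last'
depth = 29
cardinality = 8
width = 64
weight_decay = 5e-4

model = ResNext(image_shape, depth, cardinality, width, weight_decay)
```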
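The `depth` argument counts layers. In the paper's CIFAR networks, a single 3×3 stem convolution is followed by 3 stages of bottleneck blocks (3 convolution layers each) and a final fully-connected classifier, so 3 blocks per stage gives 29 layers. The hypothetical helper `blocks_per_stage` below is a sketch that inverts this count; it is not part of this repository, and whether `ResNext` validates `depth` exactly this way is an assumption.

```python
def blocks_per_stage(depth: int, stages: int = 3, layers_per_block: int = 3) -> int:
    """Bottleneck blocks per stage for a CIFAR-style ResNeXt of the given depth.

    Assumes the paper's CIFAR layout: one stem conv layer, `stages` stages of
    identical bottleneck blocks (1x1, grouped 3x3, 1x1 convs), and one final
    fully-connected layer, so depth = stages * layers_per_block * N + 2.
    """
    body = depth - 2  # drop the stem conv and the classifier layer
    layers_per_n = stages * layers_per_block  # layers added per extra block in every stage
    if body <= 0 or body % layers_per_n != 0:
        raise ValueError(f"depth must be {layers_per_n}N + 2, got {depth}")
    return body // layers_per_n


print(blocks_per_stage(29))  # 3, the ResNeXt-29 layout from the paper
```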

For the ResNeXt model optimized for ImageNet:

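```python
from keras import backend as K
from resnext import ResNextImageNet

# Order the input shape according to the backend's image data format
image_shape = (112, 112, 3) if K.image_data_format() == 'channels_last' else (3, 112, 112)

model = ResNextImageNet(image_shape)
```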

Note: `ResNextImageNet` also accepts `depth`, `cardinality`, `width` and `weight_decay`, just like the general model; their defaults are set according to the paper.