https://github.com/titu1994/deep-columnar-convolutional-neural-network

Deep Columnar Convolutional Neural Network architecture, which is based on Multi Columnar DNN (Ciresan 2012).

- Host: GitHub

- URL: https://github.com/titu1994/deep-columnar-convolutional-neural-network

- Owner: titu1994

- Created: 2016-06-23T07:47:58.000Z (over 9 years ago)

- Default Branch: master

- Last Pushed: 2016-08-27T04:19:16.000Z (about 9 years ago)

- Last Synced: 2025-03-25T05:34:11.253Z (7 months ago)

- Language: Python

- Homepage: http://www.ijcaonline.org/archives/volume145/number12/25331-2016910772

- Size: 60.3 MB

- Stars: 24

- Watchers: 3

- Forks: 8

- Open Issues: 2

Metadata Files:

- Readme: README.md

# Deep Columnar Convolutional Neural Network

DCCNN is a convolutional neural network architecture inspired by the Multi Column Deep Neural Network of Ciresan (2012).

Using improvements from recent papers, such as Batch Normalization, Leaky ReLU, Inception bottleneck blocks and Convolutional Subsampling, the network uses very few parameters to achieve near state-of-the-art performance on datasets such as MNIST, CIFAR 10/100 and SVHN.

Although it does not improve on the state of the art, it shows that smaller architectures with far fewer parameters can rival the performance of large ensemble networks.
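As a rough illustration of why bottleneck blocks reduce the parameter count, the sketch below compares a direct 3x3 convolution with a 1x1 bottleneck followed by a 3x3 convolution. The channel sizes (256, 64, 256) and the helper `conv_params` are hypothetical and chosen only for this example; they are not taken from the DCCNN models.

```python
def conv_params(in_channels, out_channels, kernel_size, bias=True):
    """Number of learnable parameters in a 2D convolution layer."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


# Hypothetical channel sizes, used only for illustration (not from the paper).
in_ch, mid_ch, out_ch = 256, 64, 256

# A single 3x3 convolution applied directly to all 256 input channels.
direct = conv_params(in_ch, out_ch, 3)

# A 1x1 "bottleneck" convolution that first reduces 256 channels to 64,
# followed by the 3x3 convolution on the reduced representation.
bottleneck = conv_params(in_ch, mid_ch, 1) + conv_params(mid_ch, out_ch, 3)

print("direct 3x3:      ", direct)
print("1x1 then 3x3:    ", bottleneck)
print("reduction factor:", round(direct / bottleneck, 2))
```

With these assumed sizes the bottleneck variant needs roughly 3.6 times fewer parameters; the actual savings depend on the channel counts used in each model.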

Paper : "Deep Columnar Convolutional Neural Network"

# Architectures

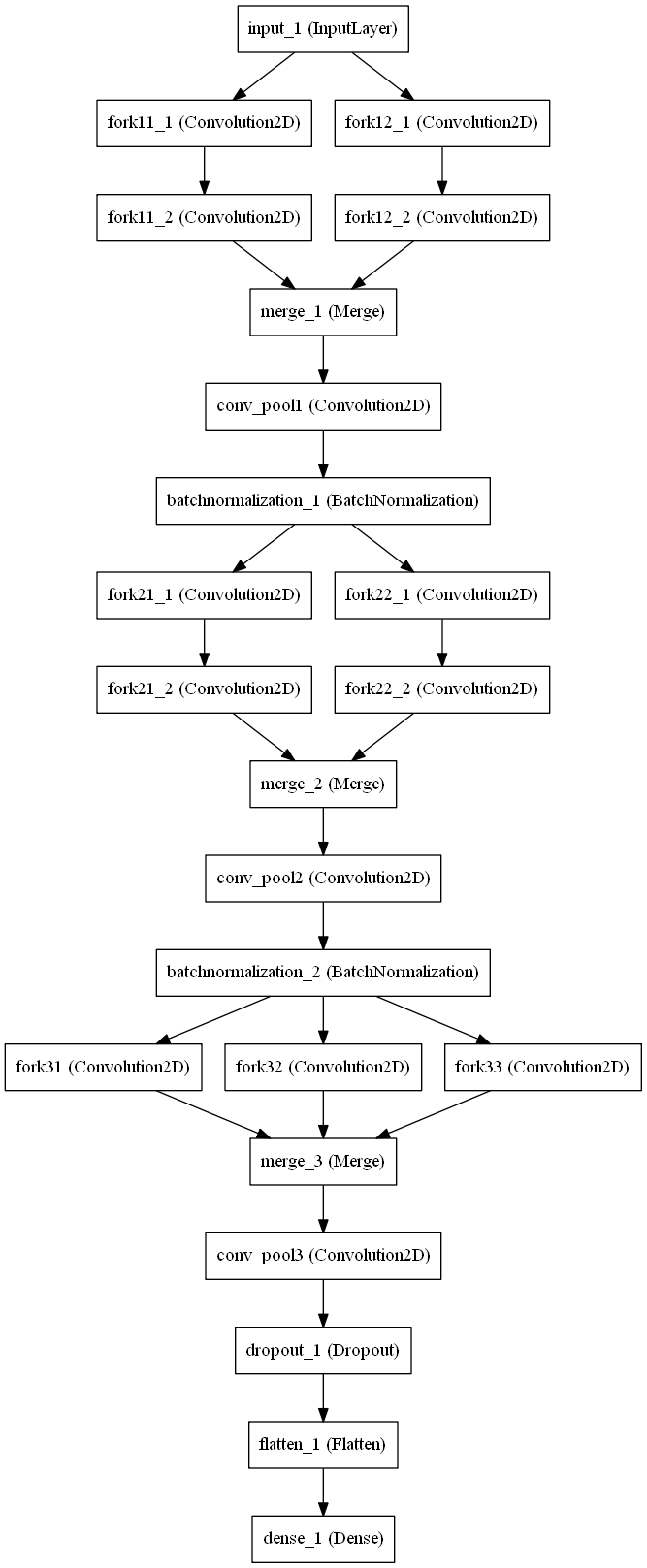

## DCCNN MNIST

This architecture is simple enough for the MNIST dataset, which contains small grayscale images. It achieves an error rate of 0.23% after 500 epochs.

The weights for this model are available in the weights folder.

## DCCNN SVHN

This architecture is similar to the MNIST architecture, but is trained on the SVHN dataset of over 600,000 color images. It achieves an error rate of 1.92%. The larger DCCNN CIFAR 100 architecture could not be used for this model because of insufficient GPU memory.

Note that, although the two architectures are similar, pooling here is performed with Convolutional Subsampling rather than Max Pooling.
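To make the difference between the two downsampling methods concrete, the following minimal sketch compares a 2x2 max pooling layer with a stride-2 convolution on a 32x32 input (the SVHN image size). The 3x3 kernel, padding of 1 and 64 channels are assumptions made for illustration; the repository may use different settings.

```python
def output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling window (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(in_channels, out_channels, kernel, bias=True):
    """Number of learnable parameters in a 2D convolution layer."""
    return kernel * kernel * in_channels * out_channels + (out_channels if bias else 0)


size = 32      # SVHN images are 32x32 pixels
channels = 64  # hypothetical number of feature maps

# 2x2 max pooling with stride 2: halves the resolution, has no weights.
pooled = output_size(size, kernel=2, stride=2)
pool_params = 0

# Convolutional subsampling: a 3x3 convolution with stride 2 and padding 1
# (assumed settings) also halves the resolution, but its filters are learned.
strided = output_size(size, kernel=3, stride=2, padding=1)
strided_params = conv_params(channels, channels, kernel=3)

print(f"max pooling:   {size}x{size} -> {pooled}x{pooled}, {pool_params} parameters")
print(f"strided conv:  {size}x{size} -> {strided}x{strided}, {strided_params} parameters")
```

Both layers reduce the feature maps to the same size; the strided convolution trades a fixed maximum operation for a learned downsampling filter, at the cost of extra parameters.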

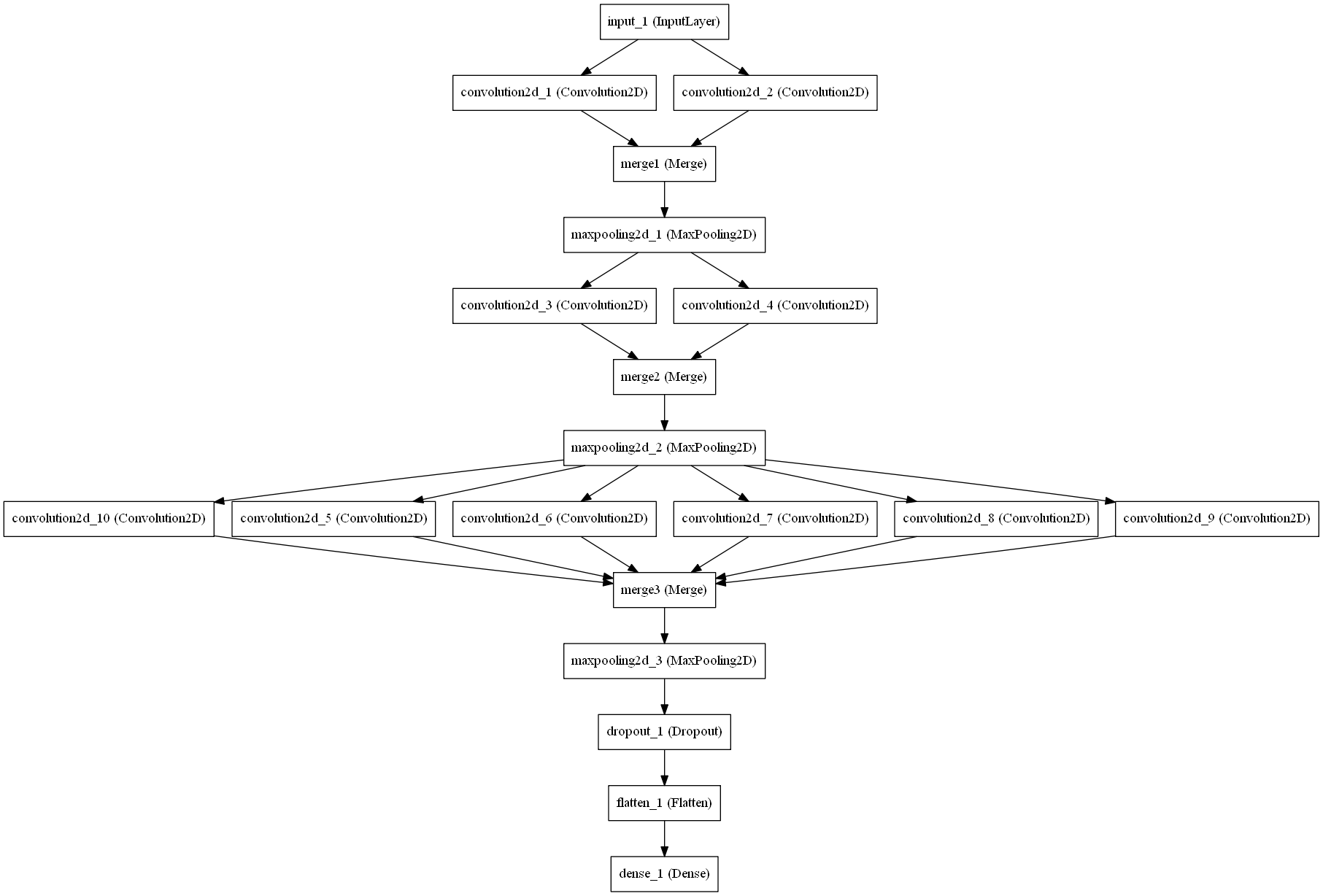

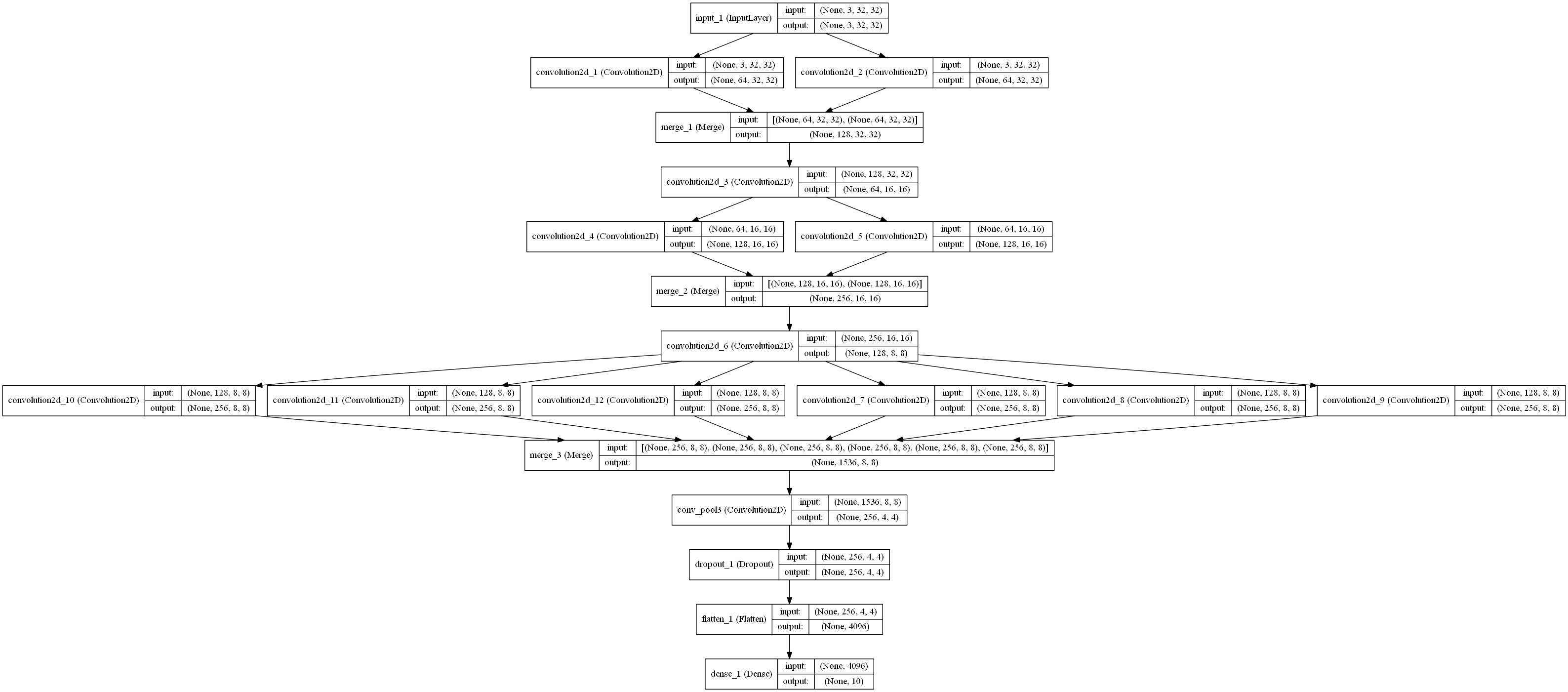

## DCCNN CIFAR 10

This architecture differs from the two above and is used for the CIFAR 10 dataset. It achieves a low error rate of 6.90%.

This is higher than the state-of-the-art 3.47%, but that result comes from an extremely deep 18-layer network.

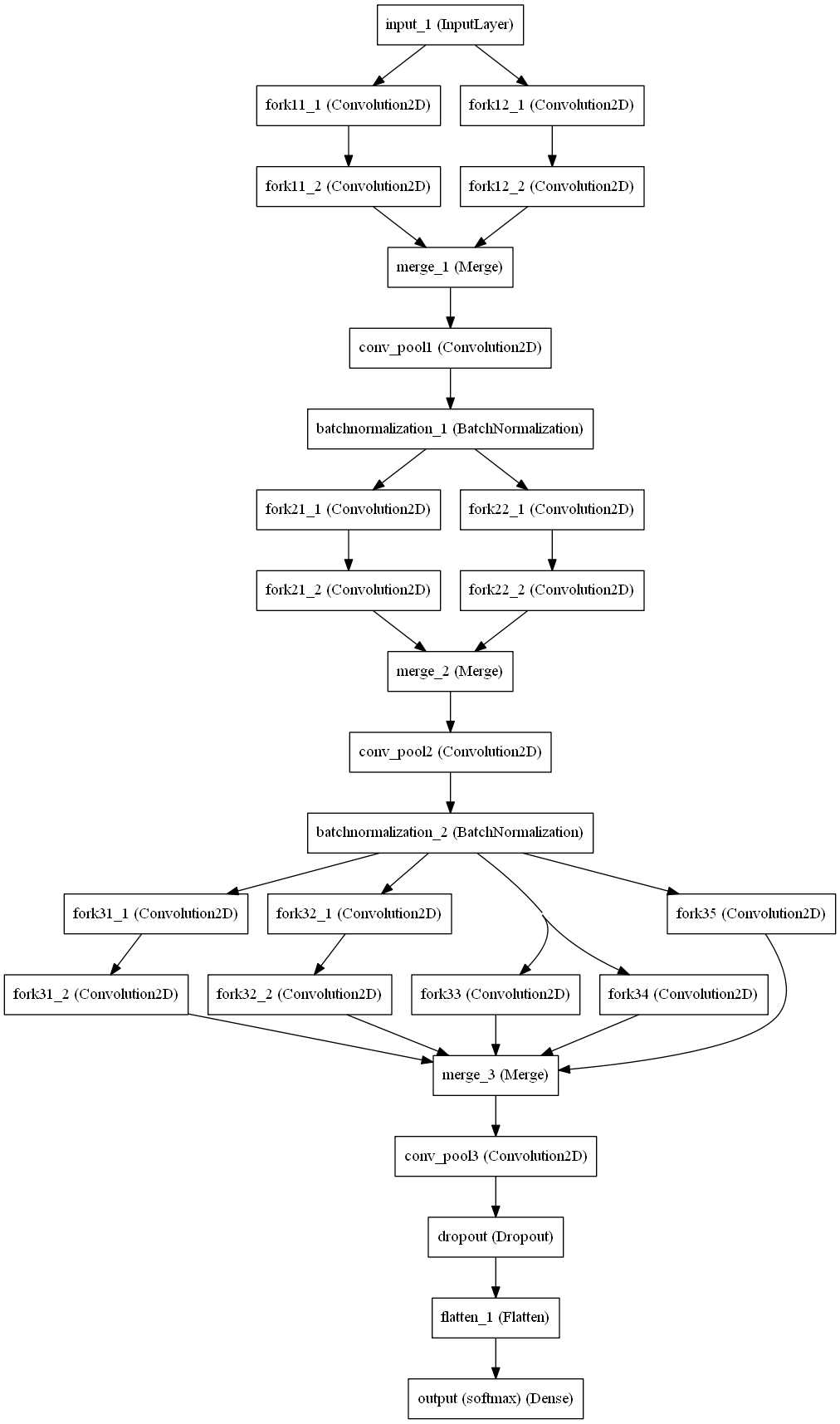

## DCCNN CIFAR 100

This architecture is similar to the one above, but has more forks at the last level. It achieves a low error rate of 28.63%.

This is higher than the state-of-the-art 24.28%, which comes from a network that has 50 million parameters and requires over 160,000 epochs.
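For a sense of scale, the error rates reported in this README can be converted into approximate counts of misclassified test images. This sketch assumes the results were measured on the standard test splits (10,000 images for MNIST, CIFAR 10 and CIFAR 100; 26,032 for SVHN), which the README does not state explicitly.

```python
# Error rates (in percent) as reported in this README.
error_rates = {"MNIST": 0.23, "SVHN": 1.92, "CIFAR 10": 6.90, "CIFAR 100": 28.63}

# Standard test-split sizes; that these splits were used is an assumption.
test_sizes = {"MNIST": 10000, "SVHN": 26032, "CIFAR 10": 10000, "CIFAR 100": 10000}


def misclassified(error_percent, n_images):
    """Approximate number of misclassified images for a given error rate."""
    return round(error_percent / 100 * n_images)


errors = {name: misclassified(rate, test_sizes[name]) for name, rate in error_rates.items()}

for name, count in errors.items():
    print(f"{name}: about {count} of {test_sizes[name]} test images misclassified")
```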