https://github.com/titu1994/keras-squeeze-excite-network

Implementation of Squeeze and Excitation Networks in Keras

- Host: GitHub

- URL: https://github.com/titu1994/keras-squeeze-excite-network

- Owner: titu1994

- License: mit

- Created: 2017-08-27T05:05:14.000Z (about 8 years ago)

- Default Branch: master

- Last Pushed: 2020-03-10T10:48:19.000Z (over 5 years ago)

- Last Synced: 2025-03-30T02:06:00.542Z (7 months ago)

- Topics: deep-learning, keras, squeeze-excite

- Language: Python

- Size: 479 KB

- Stars: 401

- Watchers: 14

- Forks: 119

- Open Issues: 7

Metadata Files:

- Readme: README.md

- License: LICENSE

# Squeeze and Excitation Networks in Keras

Implementation of [Squeeze and Excitation Networks](https://arxiv.org/pdf/1709.01507.pdf) in Keras 2.0.3+.

## Models

Currently supported models:

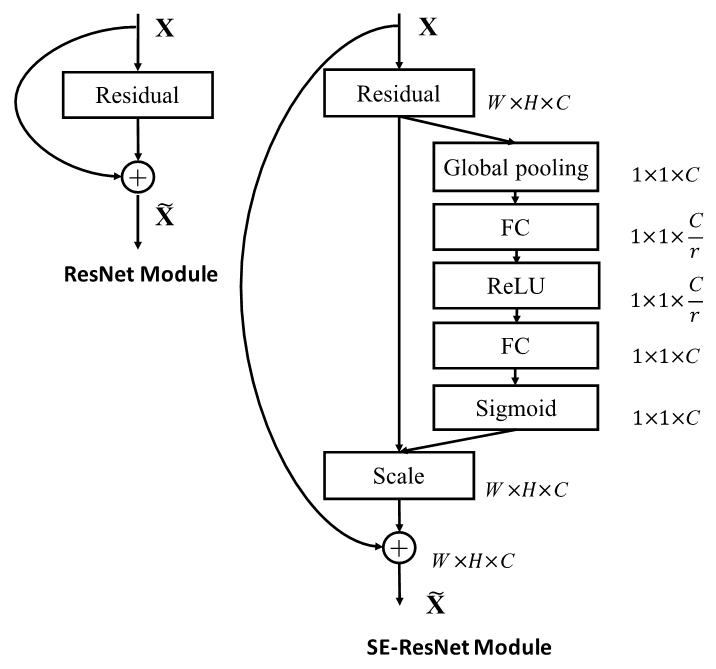

- SE-ResNet. Custom SE-ResNets can be built using the `SEResNet` model builder, and prebuilt SE-ResNet models such as `SEResNet50`, `SEResNet101` and `SEResNet154` can be built directly.

- SE-InceptionV3

- SE-Inception-ResNet-v2

- SE-ResNeXt

Additional models (not from the paper; not verified to improve performance):

- SE-MobileNets

- SE-DenseNet - Custom SE-DenseNets can be built using the `SEDenseNet` model builder, whereas the prebuilt models `SEDenseNetImageNet121`, `SEDenseNetImageNet169`, `SEDenseNetImageNet161`, `SEDenseNetImageNet201` and `SEDenseNetImageNet264` build SE-DenseNets in the ImageNet configuration. To use SE-DenseNet in CIFAR mode, use the `SEDenseNet` model builder.

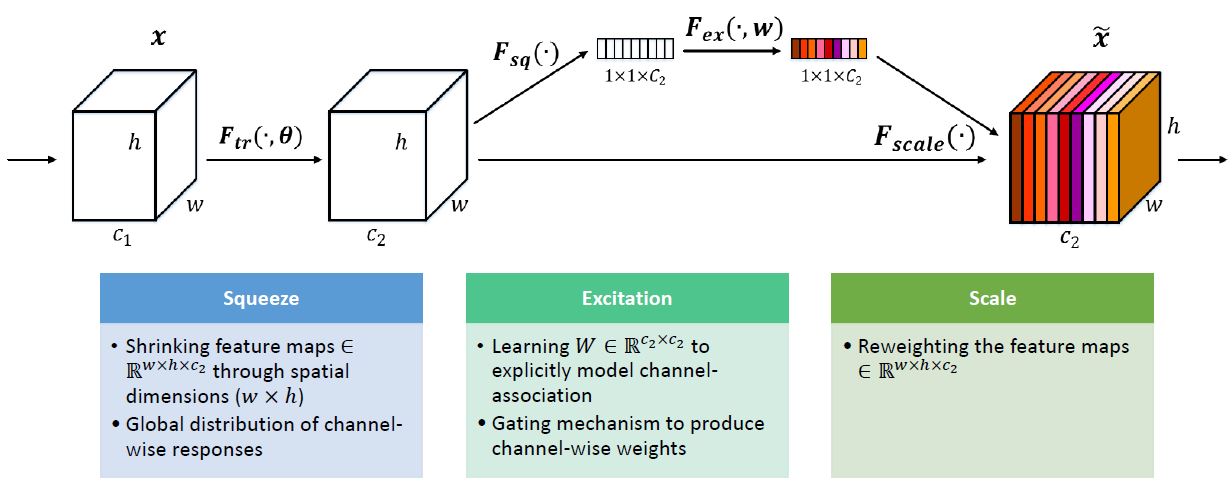

## Squeeze and Excitation block

The block is simple to implement in Keras. It is composed of a GlobalAveragePooling2D layer, two Dense layers and an element-wise multiplication.

Keras infers the intermediate shapes automatically, so the block only needs the input tensor and the reduction ratio. It can be imported from `se.py`:
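For reference, the three stages (squeeze, excitation, scale) correspond to the following equations, written in the paper's notation: $\mathbf{U}$ is the input feature map with $C$ channels of spatial size $H \times W$, $r$ is the reduction ratio (`ratio` in the code), $\delta$ is the ReLU and $\sigma$ the sigmoid. Biases are left out, consistent with `use_bias=False` in the Dense layers below.

$$
z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j)
$$

$$
\mathbf{s} = \sigma\left(\mathbf{W}_2 \, \delta\left(\mathbf{W}_1 \mathbf{z}\right)\right), \qquad \mathbf{W}_1 \in \mathbb{R}^{\frac{C}{r} \times C}, \quad \mathbf{W}_2 \in \mathbb{R}^{C \times \frac{C}{r}}
$$

$$
\tilde{\mathbf{x}}_c = s_c \cdot \mathbf{u}_c
$$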

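```python
from tensorflow.keras.layers import GlobalAveragePooling2D, Reshape, Dense, Permute, multiply
import tensorflow.keras.backend as K


def squeeze_excite_block(tensor, ratio=16):
    """Apply a Squeeze-and-Excitation block to a 4D feature map.

    Args:
        tensor: input feature map (channels_last or channels_first).
        ratio: reduction ratio of the bottleneck Dense layer.

    Returns:
        A tensor with the same shape as `tensor`, each channel rescaled.
    """
    init = tensor
    channel_axis = 1 if K.image_data_format() == "channels_first" else -1
    # Static number of input channels
    filters = init.shape[channel_axis]
    se_shape = (1, 1, filters)

    # Squeeze: global spatial average, one value per channel
    se = GlobalAveragePooling2D()(init)
    se = Reshape(se_shape)(se)

    # Excitation: bottleneck MLP producing per-channel gates in (0, 1)
    se = Dense(filters // ratio, activation='relu', kernel_initializer='he_normal', use_bias=False)(se)
    se = Dense(filters, activation='sigmoid', kernel_initializer='he_normal', use_bias=False)(se)

    # channels_first inputs need the gates as (filters, 1, 1) to broadcast
    if K.image_data_format() == 'channels_first':
        se = Permute((3, 1, 2))(se)

    # Scale: reweight each channel of the input
    x = multiply([init, se])
    return x
```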
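As a cross-check of the arithmetic outside Keras, here is a minimal NumPy sketch of the same forward pass. It assumes a single channels_last feature map without a batch axis, bias-free weights as in the Keras block, and random weights scaled roughly like `he_normal` (a plain Gaussian here, not the truncated normal Keras uses). The helper names `se_forward` and `se_param_count` are hypothetical and not part of `se.py`.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_forward(u, w1, w2):
    """Hypothetical SE forward pass on a channels_last map.

    u:  (H, W, C) input feature map
    w1: (C, C // r) weights of the bottleneck Dense layer (no bias)
    w2: (C // r, C) weights of the expanding Dense layer (no bias)
    """
    z = u.mean(axis=(0, 1))          # squeeze: global average pool -> (C,)
    s = sigmoid(relu(z @ w1) @ w2)   # excitation: per-channel gates in (0, 1)
    return u * s                     # scale: (C,) broadcasts over H and W


def se_param_count(filters, ratio=16):
    # Two bias-free Dense layers: C * (C // r) + (C // r) * C weights
    return 2 * filters * (filters // ratio)


rng = np.random.default_rng(0)
H, W, C, r = 8, 8, 64, 16
u = rng.standard_normal((H, W, C))
# Gaussian init with std sqrt(2 / fan_in), approximating he_normal
w1 = rng.standard_normal((C, C // r)) * np.sqrt(2.0 / C)
w2 = rng.standard_normal((C // r, C)) * np.sqrt(2.0 / (C // r))

out = se_forward(u, w1, w2)
print(out.shape)            # (8, 8, 64)
print(se_param_count(256))  # 8192
```

For a channels_first map of shape (C, H, W), the gate vector would have to be reshaped to (C, 1, 1) before multiplying, which is the role of `Permute((3, 1, 2))` in the Keras version. The parameter count also shows why the block is cheap: with `ratio=16`, a 256-channel block adds 2 × 256 × 16 = 8,192 weights.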

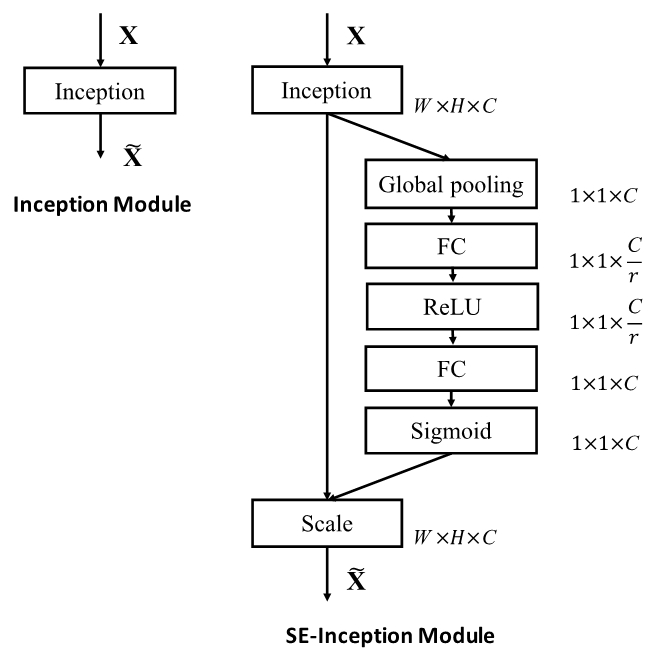

## Addition of Squeeze and Excitation blocks to Inception and ResNet blocks