https://github.com/v-sekai/godot-whisper

A GDExtension addon for the Godot Engine that enables real-time audio transcription, supports OpenCL on most platforms and Metal on Apple devices, and runs on a separate thread.


- Host: GitHub

- URL: https://github.com/v-sekai/godot-whisper

- Owner: V-Sekai

- License: MIT

- Created: 2023-07-29T20:30:47.000Z (almost 2 years ago)

- Default Branch: main

- Last Pushed: 2024-08-15T10:21:22.000Z (9 months ago)

- Last Synced: 2025-02-06T13:16:14.480Z (3 months ago)

- Topics: godot

- Language: Metal

- Homepage:

- Size: 144 MB

- Stars: 76

- Watchers: 4

- Forks: 7

- Open Issues: 8


Metadata Files:

- Readme: README.md

- License: LICENSE

- Authors: AUTHORS.md


README

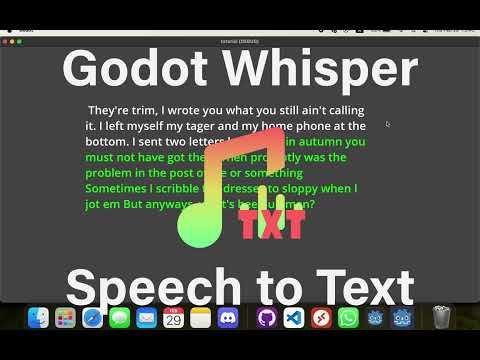

# Godot Whisper


## Features

- Real-time audio transcription.

- Transcription of recorded audio.

- Runs on a separate thread.

- Metal acceleration on Apple devices.

- OpenCL acceleration on other platforms.

## How to install

Download a GitHub release and copy its `addons` folder into the samples folder, then restart the Godot editor.

## Requirements

- SCons (if you want to build locally).

- A language model, which can be downloaded in the Godot editor.

## AudioStreamToText

`AudioStreamToText`: use this node in the editor to test transcription. Add a WAV audio source and click the `start_transcribe` button.

With the tiny.en model, transcription typically takes about 0.3 s. This node only transcribes; it does not resample.

NOTE: This node currently supports only some .WAV files. The transcribe function takes a `PackedFloat32Array` buffer as input, and the only supported formats are `AudioStreamWAV.FORMAT_8_BITS` and `AudioStreamWAV.FORMAT_16_BITS`. Other formats will not work, and you will have to write a custom decoder for the .WAV file. Godot can decode audio at runtime; see how the `CaptureStreamToText` node handles it.
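The conversion the note refers to, from raw PCM bytes to the float samples a `PackedFloat32Array` would hold, can be sketched in Python. This is a minimal illustration, not the addon's code: the function names are hypothetical, the data is assumed to be little-endian mono or interleaved stereo, and 8-bit samples are assumed to be signed, which is how we understand Godot stores `AudioStreamWAV.FORMAT_8_BITS` data. Raw 8-bit sample bytes inside a .WAV file are unsigned, so subtract 128 from each one first if you read them from the file directly.

```python
import struct


def pcm8_to_float(data: bytes) -> list[float]:
    """Signed 8-bit PCM (assumed layout) to floats in [-1.0, 1.0)."""
    # 'b' unpacks signed chars in the range -128..127.
    samples = struct.unpack(f"<{len(data)}b", data)
    return [s / 128.0 for s in samples]


def pcm16_to_float(data: bytes) -> list[float]:
    """Signed 16-bit little-endian PCM to floats in [-1.0, 1.0)."""
    count = len(data) // 2  # ignore a trailing odd byte, if any
    samples = struct.unpack(f"<{count}h", data[: count * 2])
    return [s / 32768.0 for s in samples]


def downmix_stereo(frames: list[float]) -> list[float]:
    """Average interleaved L/R pairs into mono, since Whisper expects one channel."""
    return [(frames[i] + frames[i + 1]) / 2.0 for i in range(0, len(frames) - 1, 2)]


if __name__ == "__main__":
    raw = struct.pack("<4h", 0, 16384, -16384, -32768)
    mono = downmix_stereo(pcm16_to_float(raw))
    print(mono)
```

Dividing by 128 or 32768 maps the full integer range onto [-1.0, 1.0), the float range Whisper-style models are normally fed.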

## CaptureStreamToText

This node also resamples the audio: if the mix rate is not exactly 16000 Hz, it converts the audio to 16000 Hz. It then calls the transcribe function every `transcribe_interval`.
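The resampling step can be pictured with a simple linear-interpolation resampler. This is a minimal Python sketch under our own assumptions (mono float input, hypothetical function name `resample_linear`); the addon's actual resampler may use a different method.

```python
def resample_linear(samples: list[float], src_rate: int, dst_rate: int = 16000) -> list[float]:
    """Resample mono float samples from src_rate to dst_rate by linear interpolation."""
    if src_rate == dst_rate or not samples:
        # Rates already match (e.g. mix rate set to 16000): nothing to do.
        return list(samples)
    out_len = int(len(samples) * dst_rate / src_rate)
    step = src_rate / dst_rate  # input samples advanced per output sample
    last = len(samples) - 1
    out = []
    for i in range(out_len):
        pos = i * step
        left = int(pos)
        right = min(left + 1, last)  # clamp at the end of the buffer
        frac = pos - left
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out


if __name__ == "__main__":
    one_second_at_44k = [0.0] * 44100
    print(len(resample_linear(one_second_at_44k, 44100)))
```

Linear interpolation is cheap but applies no low-pass filter before downsampling, so some aliasing is possible; a windowed-sinc resampler is the usual higher-quality alternative.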

## Initial Prompt

For Chinese, if you want to choose between Traditional and Simplified characters, provide an initial prompt written in the one you want; the model should then keep using it. See [Whisper Discussion #277](https://github.com/openai/whisper/discussions/277).

Also, if you have problems with punctuation, you can give it an initial prompt with punctuation. See [Whisper Discussion #194](https://github.com/openai/whisper/discussions/194).

## Language Model

Go to any `StreamToText` node, select a language model to download, and click Download. You may have to alt-tab out of the editor or restart it for the asset to appear. Then set the `language_model` property.

## Global settings

Go to Project -> Project Settings -> General -> Audio -> Input (enable Advanced Settings).

You will find several settings there.

Also, because microphone transcription requires audio at a 16000 Hz sampling rate, you can set the audio driver mix rate (`audio/driver/mix_rate`) to 16000. The resampler then has no work to do, saving a valuable 50 to 100 ms for longer audio, at the cost of audio quality.

## Video Tutorial

[Godot Whisper video tutorial on YouTube](https://www.youtube.com/watch?v=fAgjNkfBOKs&t=10s)

## How to build

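```shell
# Build a release template of the extension with single-precision floats.
# arch=universal builds a macOS universal binary; use the matching arch on other platforms.
scons target=template_release generate_bindings=no arch=universal precision=single

# Replace the sample project's addon with the freshly built one.
rm -rf samples/godot_whisper/addons
cp -rf bin/addons samples/godot_whisper/addons
```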

## Contributors ✨

Thanks goes to these wonderful people ([emoji key](https://allcontributors.org/docs/en/emoji-key)):

- Dragos Daian 💻

- K. S. Ernest (iFire) Lee 💻

This project follows the [all-contributors](https://github.com/all-contributors/all-contributors) specification. Contributions of any kind welcome!