
https://github.com/vanessaaleung/support-ticket-nlp

Support Ticket Classification and Key Phrases Extraction


- Host: GitHub

- URL: https://github.com/vanessaaleung/support-ticket-nlp

- Owner: vanessaaleung

- Created: 2020-05-20T02:04:34.000Z (over 4 years ago)

- Default Branch: master

- Last Pushed: 2020-06-24T01:14:43.000Z (over 4 years ago)

- Last Synced: 2024-11-10T22:14:14.254Z (3 months ago)

- Topics: keras, machine-learning, python, text-classification, ticket-classification

- Language: Jupyter Notebook

- Homepage:

- Size: 4.94 MB

- Stars: 4

- Watchers: 1

- Forks: 1

- Open Issues: 0


Metadata Files:

- Readme: README.md

- Support: Support_Ticket_NLP_Case.ipynb


# Support Ticket NLP

Support Ticket Classification and Key Phrases Extraction

- Identify the main issues in the ticket description

- Extract the key phrases in the ticket description

----------------

## Data

The Support Ticket Classification dataset.

----------------

## Tasks

- Topic Modeling with an LDA model

  - Preprocessing

    - Split the text into tokens

    - Remove stopwords and punctuation

    - Lemmatization

  - Compute coherence values to find the optimal number of topics

  - Build the LDA model

  - Use pyLDAvis to visualize the topics

- Key Phrases Extraction with pytextrank (combining spaCy and networkx)

  - Construct a graph, sentence by sentence, based on the spaCy part-of-speech tags

  - Use matplotlib to visualize the lemma graph

  - Use PageRank, a variant of eigenvector centrality, to calculate a rank for each node in the lemma graph. The eigenvector centrality $x_v$ of vertex $v$ is

    $$x_v = \frac{1}{\lambda} \sum_{t \in M(v)} x_t = \frac{1}{\lambda} \sum_{t \in G} a_{v,t} x_t$$

    - $a_{v,t}=1$ if vertex $v$ is linked to vertex $t$, and $a_{v,t}=0$ otherwise

    - $M(v)$ is the set of neighbors of $v$, $G$ is the graph, and $\lambda$ is a constant

  - Collect the top-ranked phrases from the lemma graph based on the noun chunks

  - Find a minimum span for each phrase based on combinations of lemmas

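Sample output of the top-ranked phrases:

| Phrase | Count | Rank |
|---|---|---|
| permission | 1 | 0.17555037929471423 |
| requisitions | 1 | 0.1742458175386728 |
| recruiter | 1 | 0.1416381454134179 |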
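The key phrase extraction steps above (tokenize, drop stopwords and punctuation, link co-occurring words into a graph, rank the nodes with PageRank) can be sketched in plain Python to show how the ranking works. This is a minimal illustration, not pytextrank's implementation: it assumes a tiny hypothetical stopword list, skips lemmatization, part-of-speech filtering, and noun-chunk assembly, links only adjacent words (`window=2`), and uses a hand-written PageRank with a damping factor of 0.85 (the conventional default). The ticket sentences are made up.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately tiny stopword list (a real pipeline would use spaCy's).
STOPWORDS = {"a", "an", "the", "to", "for", "of", "and", "in", "on", "is",
             "i", "me", "my", "please", "can", "not", "this", "with"}


def preprocess(text):
    """Lowercase, keep alphabetic tokens (dropping punctuation), remove stopwords."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOPWORDS]


def build_graph(sentences, window=2):
    """Undirected word graph: link each token to the next `window - 1` tokens."""
    graph = defaultdict(set)
    for sentence in sentences:
        tokens = preprocess(sentence)
        for i, u in enumerate(tokens):
            for v in tokens[i + 1:i + window]:
                if u != v:
                    graph[u].add(v)
                    graph[v].add(u)
    return graph


def pagerank(graph, damping=0.85, iterations=50):
    """Power iteration; assumes every node has at least one neighbor."""
    nodes = list(graph)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        # Each neighbor t passes on its rank split evenly over its degree.
        rank = {
            v: (1 - damping) / n
            + damping * sum(rank[t] / len(graph[t]) for t in graph[v])
            for v in nodes
        }
    return rank


# Made-up tickets for illustration only.
tickets = [
    "Please grant me permission to view the requisitions.",
    "The recruiter cannot see requisitions in the portal.",
]
scores = pagerank(build_graph(tickets))
for word, score in sorted(scores.items(), key=lambda kv: -kv[1])[:3]:
    print(word, round(score, 4))
```

Because every node in this graph has at least one neighbor, the scores remain a probability distribution that sums to 1, and "requisitions", the word linked to the most others, ranks highest. The phrase-level steps listed above (collecting noun chunks and finding minimum spans) are omitted here.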

----------------

## Terminology

### Topic Coherence

Scores a single topic by measuring the degree of semantic similarity between the high-scoring words in that topic.

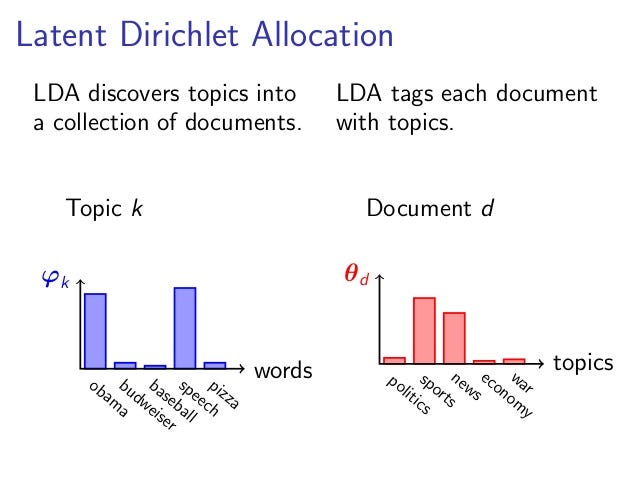
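To make the score concrete, here is a minimal sketch of one common coherence measure, UMass coherence, which uses document co-occurrence of a topic's top words as its proxy for semantic similarity. This is an assumption for illustration: the notebook may use a different measure (gensim's `CoherenceModel`, for instance, also offers `c_v`), and the toy corpus below is made up.

```python
import math


def umass_coherence(top_words, documents):
    """UMass coherence of one topic; higher means more coherent.

    top_words: the topic's top words, highest-scoring first.
    documents: the corpus as lists of preprocessed tokens.
    Assumes every top word occurs in at least one document.
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words):
        # Number of documents containing all of the given words.
        return sum(all(w in d for w in words) for d in doc_sets)

    score = 0.0
    for i in range(1, len(top_words)):
        for j in range(i):
            # Pair each word with every higher-ranked word; +1 avoids log(0).
            co = doc_freq(top_words[i], top_words[j])
            score += math.log((co + 1) / doc_freq(top_words[j]))
    return score


# Toy corpus (made up for illustration).
docs = [
    ["password", "reset", "login"],
    ["password", "login", "locked"],
    ["invoice", "payment", "refund"],
    ["invoice", "refund"],
]
coherent = umass_coherence(["password", "login", "reset"], docs)
mixed = umass_coherence(["password", "invoice", "refund"], docs)
print(round(coherent, 3), round(mixed, 3))
```

The password/login topic scores higher than the mixed password/invoice topic. To choose the number of topics as described under Tasks, one would fit a model for each candidate count, average the per-topic coherence, and keep the count with the best average.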

### Latent Dirichlet Allocation (LDA)

Given the number of documents, the number of words, and the number of topics, LDA outputs:

1. the distribution of words for each topic k

2. the distribution of topics for each document i
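The two outputs can be made concrete with collapsed Gibbs sampling, one standard way to fit LDA. This minimal sketch is an illustration, not how the project trained its model (libraries often use variational inference instead); the priors `alpha` and `beta`, the iteration count, and the toy documents are hypothetical choices. `phi` holds output 1 and `theta` holds output 2.

```python
import random


def lda_gibbs(documents, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA by collapsed Gibbs sampling.

    documents: list of token lists.
    Returns (phi, theta, vocab): phi[k][v] estimates P(word v | topic k) and
    theta[d][k] estimates P(topic k | document d).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in documents for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics

    # Count tables: topic-word, document-topic, and tokens per topic.
    n_kw = [[0] * V for _ in range(K)]
    n_dk = [[0] * K for _ in documents]
    n_k = [0] * K

    def update(d, wid, k, delta):
        n_kw[k][wid] += delta
        n_dk[d][k] += delta
        n_k[k] += delta

    # Start from a random topic for every token.
    z = [[rng.randrange(K) for _ in doc] for doc in documents]
    for d, doc in enumerate(documents):
        for w, k in zip(doc, z[d]):
            update(d, word_id[w], k, +1)

    for _ in range(iterations):
        for d, doc in enumerate(documents):
            for i, w in enumerate(doc):
                wid = word_id[w]
                update(d, wid, z[d][i], -1)  # take the token out of the counts
                # Unnormalized full conditional P(z_i = k | all other assignments).
                weights = [
                    (n_dk[d][k] + alpha) * (n_kw[k][wid] + beta) / (n_k[k] + V * beta)
                    for k in range(K)
                ]
                z[d][i] = rng.choices(range(K), weights=weights)[0]
                update(d, wid, z[d][i], +1)

    phi = [[(n_kw[k][v] + beta) / (n_k[k] + V * beta) for v in range(V)]
           for k in range(K)]
    theta = [[(n_dk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(documents)]
    return phi, theta, vocab


docs = [
    ["password", "reset", "login"],
    ["login", "password", "locked"],
    ["invoice", "refund", "payment"],
    ["refund", "invoice", "charge"],
]
phi, theta, vocab = lda_gibbs(docs, num_topics=2)
for k, row in enumerate(phi):
    top = sorted(zip(row, vocab), reverse=True)[:3]
    print("topic", k, [w for _, w in top])
```

Each row of `phi` is a distribution over the vocabulary and each row of `theta` is a distribution over the topics, so both sum to 1 by construction.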