https://github.com/vietanhdev/samexporter

Export Segment Anything Models to ONNX


- Host: GitHub

- URL: https://github.com/vietanhdev/samexporter

- Owner: vietanhdev

- License: mit

- Created: 2023-05-11T07:59:31.000Z (over 2 years ago)

- Default Branch: main

- Last Pushed: 2024-08-03T04:35:28.000Z (over 1 year ago)

- Last Synced: 2025-03-28T14:06:45.727Z (10 months ago)

- Topics: onnx, segment-anything, segment-anything-model

- Language: Python

- Homepage: https://pypi.org/project/samexporter/

- Size: 8.32 MB

- Stars: 326

- Watchers: 8

- Forks: 39

- Open Issues: 18


Metadata Files:

- Readme: README.md

- Funding: .github/FUNDING.yml

- License: LICENSE


# SAM Exporter - Now with Segment Anything 2!

Exporting [Segment Anything](https://github.com/facebookresearch/segment-anything), [MobileSAM](https://github.com/ChaoningZhang/MobileSAM), and [Segment Anything 2](https://github.com/facebookresearch/segment-anything-2) into ONNX format for easy deployment.


**Supported models:**

- Segment Anything 2 (Tiny, Small, Base+, Large) - **Note:** Experimental. Only image input is supported for now.

- Segment Anything (SAM ViT-B, SAM ViT-L, SAM ViT-H)

- MobileSAM

## Installation

Requirements:

- Python 3.10+

From PyPI:

```bash
pip install torch==2.4.0 torchvision --index-url https://download.pytorch.org/whl/cpu
pip install samexporter
```

From source:

```bash
pip install torch==2.4.0 torchvision --index-url https://download.pytorch.org/whl/cpu
git clone https://github.com/vietanhdev/samexporter
cd samexporter
pip install -e .
```
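Before exporting, a quick sanity check can confirm that the interpreter meets the Python 3.10+ requirement and that `torch` and `samexporter` are importable. This is only an illustrative sketch: `check_environment` is a hypothetical helper, not part of samexporter, and it checks that the modules can be found, not which versions are installed.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 10)):
    """Hypothetical helper: report whether the basics for samexporter look ready.

    Only checks that the modules can be found on the import path; it does not
    verify versions (e.g. the torch==2.4.0 pin used above).
    """
    return {
        "python_ok": sys.version_info[:2] >= tuple(min_python),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "samexporter_installed": importlib.util.find_spec("samexporter") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'yes' if ok else 'NO'}")
```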

## Convert Segment Anything and MobileSAM to ONNX

- Download the Segment Anything checkpoints from [https://github.com/facebookresearch/segment-anything](https://github.com/facebookresearch/segment-anything).

- Download the MobileSAM checkpoint from [https://github.com/ChaoningZhang/MobileSAM](https://github.com/ChaoningZhang/MobileSAM).

Place the checkpoints in the `original_models` folder:

```text
original_models
+ sam_vit_b_01ec64.pth
+ sam_vit_h_4b8939.pth
+ sam_vit_l_0b3195.pth
+ mobile_sam.pt
...
```
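A small check like the following can catch a missing or misnamed checkpoint before you run the export commands. `missing_checkpoints` is a hypothetical helper; the file names are copied from the folder layout above, and the default folder assumes you run it from the repository root.

```python
from pathlib import Path

# Checkpoint file names as listed in the folder layout above.
EXPECTED_CHECKPOINTS = (
    "sam_vit_b_01ec64.pth",
    "sam_vit_h_4b8939.pth",
    "sam_vit_l_0b3195.pth",
    "mobile_sam.pt",
)


def missing_checkpoints(folder="original_models", expected=EXPECTED_CHECKPOINTS):
    """Hypothetical helper: return the expected checkpoints not found in `folder`."""
    root = Path(folder)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    else:
        print("All checkpoints found.")
```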

- Convert the SAM-H encoder to ONNX format:

```bash
python -m samexporter.export_encoder --checkpoint original_models/sam_vit_h_4b8939.pth \
    --output output_models/sam_vit_h_4b8939.encoder.onnx \
    --model-type vit_h \
    --quantize-out output_models/sam_vit_h_4b8939.encoder.quant.onnx \
    --use-preprocess
```

- Convert the SAM-H decoder to ONNX format:

```bash
python -m samexporter.export_decoder --checkpoint original_models/sam_vit_h_4b8939.pth \
    --output output_models/sam_vit_h_4b8939.decoder.onnx \
    --model-type vit_h \
    --quantize-out output_models/sam_vit_h_4b8939.decoder.quant.onnx \
    --return-single-mask
```

Remove `--return-single-mask` if you want to return multiple masks.

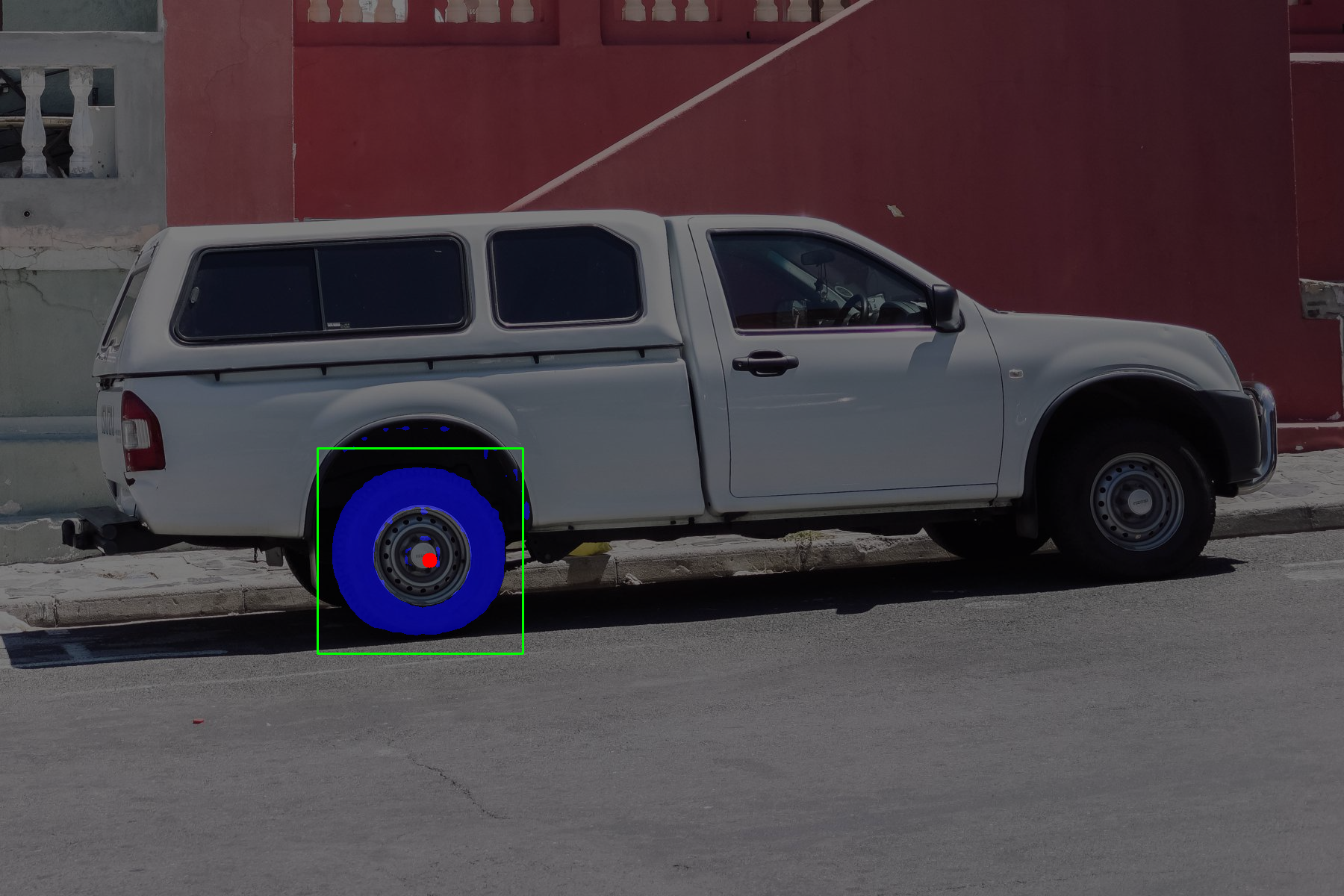
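When the decoder is exported without `--return-single-mask`, it produces several candidate masks, each with a predicted IoU (quality) score. One common way to post-process them, sketched below, is to keep the highest-scoring mask and binarize its logits at 0.0, the default mask threshold in the original Segment Anything code. `best_binary_mask` is a hypothetical helper that assumes the decoder outputs have already been converted to plain Python lists (for example with `.tolist()` on the NumPy arrays ONNX Runtime returns); samexporter's own inference code may select masks differently.

```python
def best_binary_mask(masks, scores, threshold=0.0):
    """Hypothetical helper: keep the best-scoring mask and binarize it.

    masks:  list of candidate masks, each a 2-D list of mask logits.
    scores: predicted IoU score per candidate, in the same order as masks.
    threshold: logits above this value count as foreground; 0.0 matches the
        default mask threshold in the original Segment Anything code.
    """
    if not masks or len(masks) != len(scores):
        raise ValueError("expected one score per mask and at least one mask")
    # Index of the candidate with the highest predicted IoU.
    best = max(range(len(scores)), key=lambda i: scores[i])
    binary = [[logit > threshold for logit in row] for row in masks[best]]
    return best, binary


# Example with two tiny 1x2 "masks" of logits.
index, mask = best_binary_mask([[[-1.0, 2.0]], [[3.0, -0.5]]], [0.4, 0.9])
print(index, mask)  # prints: 1 [[True, False]]
```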

- Run inference with the exported ONNX models:

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/truck.jpg \
    --prompt images/truck_prompt.json \
    --output output_images/truck.png \
    --show
```

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/plants.png \
    --prompt images/plants_prompt1.json \
    --output output_images/plants_01.png \
    --show
```

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/plants.png \
    --prompt images/plants_prompt2.json \
    --output output_images/plants_02.png \
    --show
```
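Prompts are given in original-image pixel coordinates, while the SAM and MobileSAM decoders work in the coordinate frame of the encoder input, whose longer side is resized to 1024 pixels. The sketch below mirrors the convention of Segment Anything's own ONNX example: points are rescaled, a box is encoded as two corner points with labels 2 and 3, and a padding point with label -1 is appended when there is no box. It is an illustration under assumptions, not samexporter's implementation: `prompt_to_decoder_inputs` is hypothetical, and the prompt dictionary shape (`type`, `data`, `label`) is assumed, so check the JSON files in `images/` for the format the CLI actually expects. In Segment Anything's ONNX export, the decoder additionally receives the original image size (`orig_im_size`) so it can upscale masks back to the input resolution.

```python
def preprocess_shape(old_h, old_w, long_side=1024):
    """New (h, w) after resizing so the longer side equals long_side (SAM v1)."""
    scale = long_side / max(old_h, old_w)
    return int(old_h * scale + 0.5), int(old_w * scale + 0.5)


def prompt_to_decoder_inputs(prompt, image_size, long_side=1024):
    """Hypothetical helper: convert prompts to SAM v1 decoder point inputs.

    prompt: list of dicts in an ASSUMED format, e.g.
        {"type": "point", "data": [x, y], "label": 1}   # 1 = foreground, 0 = background
        {"type": "rectangle", "data": [x1, y1, x2, y2]}
    image_size: (height, width) of the original image.
    Returns (point_coords, point_labels) with a leading batch dimension of 1.
    """
    old_h, old_w = image_size
    new_h, new_w = preprocess_shape(old_h, old_w, long_side)
    sx, sy = new_w / old_w, new_h / old_h

    coords, labels = [], []
    box_coords, box_labels = [], []
    for item in prompt:
        if item["type"] == "point":
            x, y = item["data"]
            coords.append([x * sx, y * sy])
            labels.append(item["label"])
        elif item["type"] == "rectangle":
            x1, y1, x2, y2 = item["data"]
            # A box becomes two corner points labelled 2 and 3; this sketch keeps the last one.
            box_coords = [[x1 * sx, y1 * sy], [x2 * sx, y2 * sy]]
            box_labels = [2, 3]

    if box_coords:
        coords += box_coords
        labels += box_labels
    else:
        # With no box, a padding point labelled -1 is appended.
        coords.append([0.0, 0.0])
        labels.append(-1)
    return [coords], [labels]
```

For a 2048 x 1024 image, the scale factor is 0.5 on both axes, so the point (100, 200) becomes (50.0, 100.0).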

**Shortcut scripts:**

- Convert all Segment Anything models to ONNX format:

```bash
bash convert_all_meta_sam.sh
```

- Convert MobileSAM to ONNX format:

```bash
bash convert_mobile_sam.sh
```
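For batch conversion, the encoder and decoder commands shown earlier can also be generated per checkpoint from Python. This is a hypothetical equivalent, not the contents of `convert_all_meta_sam.sh`, which may use different options. The `vit_b` and `vit_l` model types are assumed by analogy with `vit_h`, and `export_commands` and `run_all` are illustrative names. By default the script only prints the commands (a dry run).

```python
import shlex
import subprocess
import sys

# ASSUMED mapping of model type -> checkpoint stem (from the file names above).
META_SAM_MODELS = {
    "vit_b": "sam_vit_b_01ec64",
    "vit_l": "sam_vit_l_0b3195",
    "vit_h": "sam_vit_h_4b8939",
}


def export_commands(model_type, stem, src="original_models", dst="output_models",
                    single_mask=True):
    """Build the encoder and decoder export commands for one SAM checkpoint."""
    checkpoint = f"{src}/{stem}.pth"
    encoder = [
        "python", "-m", "samexporter.export_encoder",
        "--checkpoint", checkpoint,
        "--output", f"{dst}/{stem}.encoder.onnx",
        "--model-type", model_type,
        "--quantize-out", f"{dst}/{stem}.encoder.quant.onnx",
        "--use-preprocess",
    ]
    decoder = [
        "python", "-m", "samexporter.export_decoder",
        "--checkpoint", checkpoint,
        "--output", f"{dst}/{stem}.decoder.onnx",
        "--model-type", model_type,
        "--quantize-out", f"{dst}/{stem}.decoder.quant.onnx",
    ]
    if single_mask:
        decoder.append("--return-single-mask")
    return [encoder, decoder]


def run_all(dry_run=True):
    """Print (dry run) or execute the export commands for every checkpoint."""
    for model_type, stem in META_SAM_MODELS.items():
        for cmd in export_commands(model_type, stem):
            if dry_run:
                print(shlex.join(cmd))
            else:
                # Use the current interpreter instead of whatever "python" resolves to.
                subprocess.run([sys.executable] + cmd[1:], check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Calling `run_all(dry_run=False)` would execute the commands in sequence and stop at the first failure, because `check=True` raises on a non-zero exit code.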

## Convert Segment Anything 2 to ONNX

- Download the Segment Anything 2 checkpoints (see [https://github.com/facebookresearch/segment-anything-2.git](https://github.com/facebookresearch/segment-anything-2.git)). You can use the provided download script:

```bash
cd original_models
bash download_sam2.sh
```

The models will be downloaded to the `original_models` folder:

```text
original_models
+ sam2_hiera_tiny.pt
+ sam2_hiera_small.pt
+ sam2_hiera_base_plus.pt
+ sam2_hiera_large.pt
...
```

- Install dependencies:

```bash
pip install git+https://github.com/facebookresearch/segment-anything-2.git
```

- Convert all Segment Anything 2 models to ONNX format:

```bash
bash convert_all_meta_sam2.sh
```

- Run inference with the exported ONNX models (only image input is supported for now):

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam2_hiera_tiny.encoder.onnx \
    --decoder_model output_models/sam2_hiera_tiny.decoder.onnx \
    --image images/plants.png \
    --prompt images/truck_prompt_2.json \
    --output output_images/plants_prompt_2_sam2.png \
    --sam_variant sam2 \
    --show
```
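SAM 2 prepares prompts differently from the original SAM. In the official SAM 2 image transforms, the image is resized to a 1024 x 1024 square without preserving its aspect ratio, so point coordinates are normalized by the original width and height and then scaled to 1024 on each axis independently. The sketch below shows that mapping with a hypothetical helper, `sam2_scale_points`; samexporter's SAM 2 inference path may already do this internally, so treat it as an explanation rather than a required step.

```python
def sam2_scale_points(points, image_size, resolution=1024):
    """Hypothetical helper: map (x, y) pixel points to SAM 2's square input.

    The official SAM 2 image transforms resize the image to
    resolution x resolution, so each axis is scaled independently:
    x / width * resolution and y / height * resolution.
    image_size is (height, width) of the original image.
    """
    h, w = image_size
    return [[x / w * resolution, y / h * resolution] for x, y in points]


print(sam2_scale_points([[100, 200]], (1024, 2048)))  # [[50.0, 200.0]]
```

For the same 2048 x 1024 image, SAM's longest-side resize would map (100, 200) to (50.0, 100.0), whereas SAM 2's square resize maps it to (50.0, 200.0).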

## Tips

- Use the quantized models (`*.quant.onnx`, produced via `--quantize-out`) for a smaller model size and, often, faster inference. Their accuracy may be lower than that of the original (non-quantized) models.

- SAM-B (ViT-B) is the most lightweight Segment Anything model but also the least accurate. SAM-H (ViT-H) is the most accurate but has the largest model size. SAM-L (ViT-L) is a good trade-off between accuracy and model size.
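The accuracy cost of quantization comes from rounding. The sketch below shows generic 8-bit asymmetric (min/max) linear quantization of a few made-up weights: each float32 value is stored in one byte, roughly a 4x size reduction, and dequantizing introduces an error of up to about half a quantization step. It illustrates the general technique only and is not necessarily the exact scheme samexporter applies for `--quantize-out`.

```python
def quantize_uint8(values):
    """Asymmetric (min/max) linear quantization of floats to 8-bit integers.

    Returns (q, scale, zero_point); the range is widened to include 0.0 so
    that zero is represented exactly.
    """
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # avoid division by zero for all-zero input
    zero_point = round(-lo / scale)
    q = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_uint8(q, scale, zero_point):
    """Map 8-bit integers back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


weights = [-0.73, -0.12, 0.0, 0.05, 0.41, 0.98]  # illustrative values only
q, scale, zp = quantize_uint8(weights)
restored = dequantize_uint8(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.5f} zero_point={zp} max_error={max_error:.5f}")
```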

## AnyLabeling

This package was originally developed for the auto-labeling feature of the [AnyLabeling](https://github.com/vietanhdev/anylabeling) project, but you can use it for other purposes as well.

[Video on YouTube](https://youtu.be/5qVJiYNX5Kk)

## License

This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.

## References

- ONNX-SAM2-Segment-Anything: [https://github.com/ibaiGorordo/ONNX-SAM2-Segment-Anything](https://github.com/ibaiGorordo/ONNX-SAM2-Segment-Anything).