https://github.com/weiji14/foss4g2023oceania

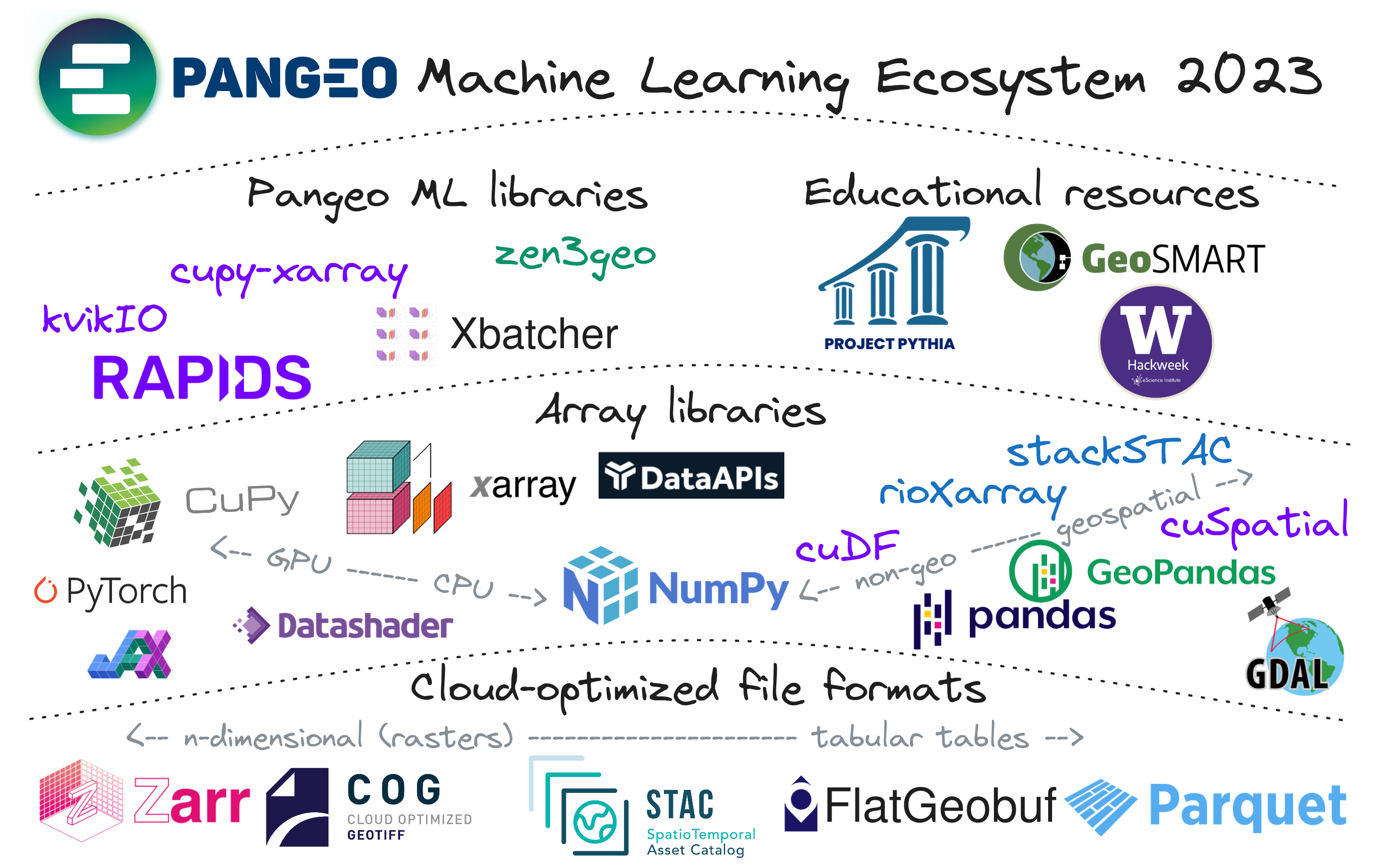

The ecosystem of geospatial machine learning tools in the Pangeo world.

- Host: GitHub

- URL: https://github.com/weiji14/foss4g2023oceania

- Owner: weiji14

- License: lgpl-3.0

- Created: 2023-10-01T00:15:59.000Z (about 2 years ago)

- Default Branch: main

- Last Pushed: 2025-03-17T21:19:34.000Z (7 months ago)

- Last Synced: 2025-05-07T16:02:38.195Z (5 months ago)

- Topics: datapipe, foss4g, gpu-direct-storage, kvikio, machine-learning, pangeo, xbatcher, zarr, zen3geo

- Language: Jupyter Notebook

- Homepage: https://hackmd.io/@weiji14/foss4g2023oceania

- Size: 1.37 MB

- Stars: 11

- Watchers: 2

- Forks: 1

- Open Issues: 3

Metadata Files:

- Readme: README.md

- License: LICENSE.md

README

# [FOSS4G SotM Oceania 2023 presentation](https://talks.osgeo.org/foss4g-sotm-oceania-2023/talk/YP3KPT)

The ecosystem of geospatial machine learning tools in the

[Pangeo](https://pangeo.io) world.

**Presenter**: [Wei Ji Leong](https://github.com/weiji14)

**When**: [Wednesday, 18 October 2023, 13:50–14:15 (NZDT)](https://2023.foss4g-oceania.org/#/program)

**Where**: [Te Iringa (Wave Room - WG308), Auckland University of Technology (AUT)](https://2023.foss4g-oceania.org/#/attend/our-conference-venue), Auckland, New Zealand

**Website**: https://talks.osgeo.org/foss4g-sotm-oceania-2023/talk/YP3KPT/

**Video**: https://www.youtube.com/watch?v=X2LBuUfSo5Q

**Presentation slides**: https://hackmd.io/@weiji14/foss4g2023oceania

**Blog post (part 1)**: https://weiji14.xyz/blog/the-pangeo-machine-learning-ecosystem-in-2023

**Blog post (part 2)**: https://weiji14.xyz/blog/when-cloud-native-geospatial-meets-gpu-native-machine-learning

## Abstract

Several open source tools are enabling the shift to cloud-native geospatial

Machine Learning workflows. Stream data from STAC APIs, generate Machine

Learning ready chips on-the-fly and train models for different downstream

tasks! Find out about advances in the Pangeo ML community towards scalable

GPU-native workflows.

### Long description

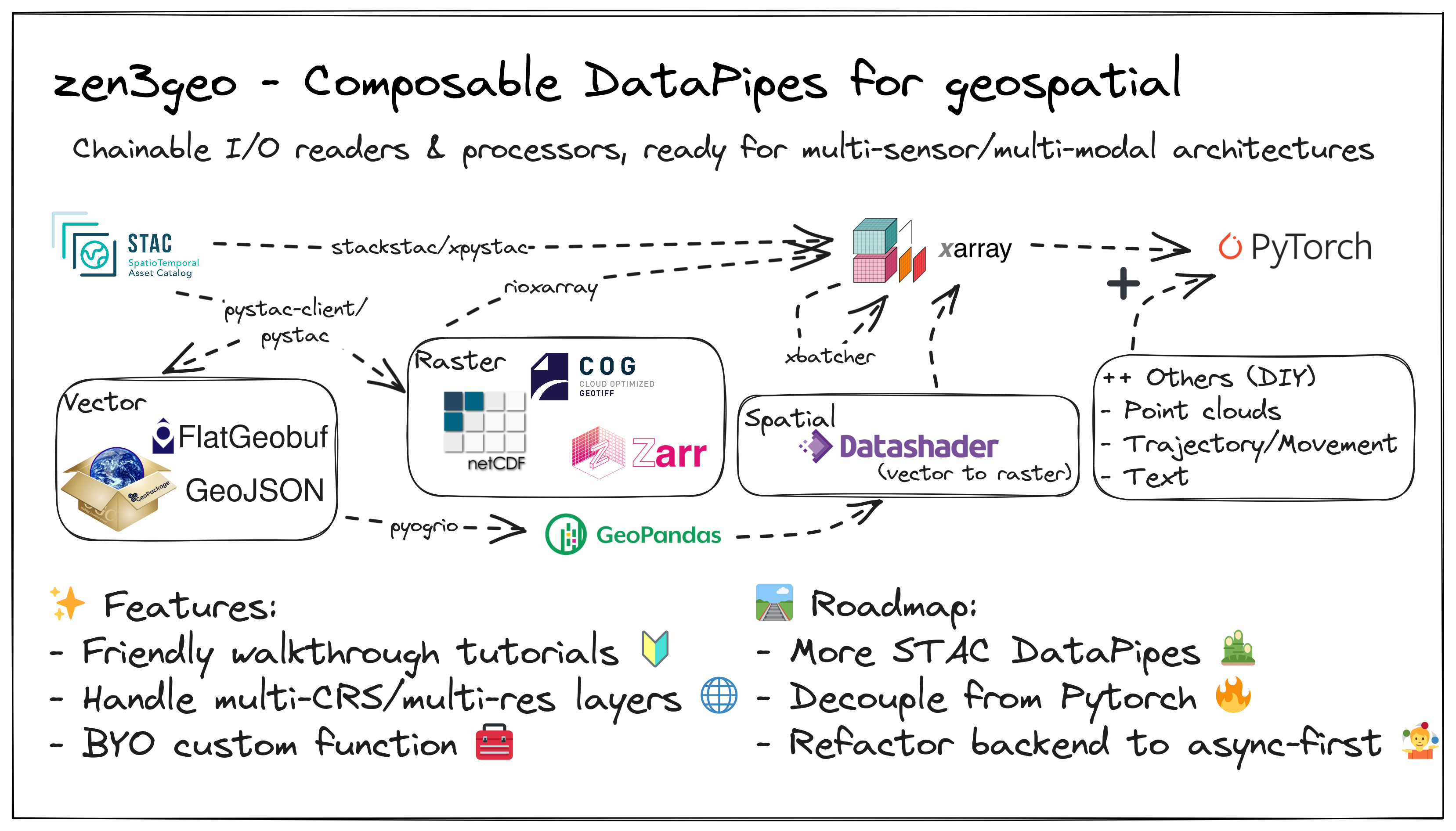

An overview of open source Python packages in the Pangeo (big data geoscience)

Machine Learning community will be presented. On read/write,

[kvikIO](https://github.com/rapidsai/kvikio) allows low-latency data transfers

from Zarr archives via NVIDIA GPU Direct Storage. With tensors loaded in xarray

data structures, [xbatcher](https://github.com/xarray-contrib/xbatcher) enables

efficient slicing of arrays in an iterative fashion. To connect the pieces,

[zen3geo](https://github.com/weiji14/zen3geo) acts as the glue between

geospatial libraries - from reading [STAC](https://stacspec.org) items and

rasterizing vector geometries to stacking multi-resolution datasets for custom

data pipelines. Learn more as the Pangeo community develops tutorials at

[Project Pythia](https://cookbooks.projectpythia.org), and join in to hear

about the challenges and ideas on scaling machine learning in the geosciences

with the [Pangeo ML Working Group](https://www.pangeo.io/meetings).

# Getting started

## Installation

### NVIDIA GPU Direct Storage

Follow instructions at

https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#install-gpudirect-storage

to install NVIDIA GPU Direct Storage (GDS).

> [!NOTE]
> Starting with CUDA toolkit 12.2.2, GDS kernel driver package nvidia-gds version
> 12.2.2-1 (provided by nvidia-fs-dkms 2.17.5-1) and above is only supported with the
> NVIDIA open kernel driver. Follow instructions in
> [NVIDIA Open GPU Kernel Modules](https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#nvidia-open-gpu-kernel-modules)
> to install NVIDIA open kernel driver packages.

Verify that NVIDIA GDS has been installed properly by following the steps at
https://docs.nvidia.com/gpudirect-storage/troubleshooting-guide/index.html#verify-suc-install

For example, if you are on Linux and have CUDA 12.2 installed, run:

    /usr/local/cuda-12.2/gds/tools/gdscheck.py -p

Alternatively, once you have set up the conda environment described below, follow
https://xarray.dev/blog/xarray-kvikio#appendix-ii--making-sure-gds-is-working
and run:

    mamba activate foss4g2023oceania
    curl -s https://raw.githubusercontent.com/rapidsai/kvikio/branch-23.08/python/benchmarks/single-node-io.py | python
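
The `cuda-12.2` directory in the example path depends on which CUDA version is installed. As a minimal sketch, assuming the toolkit lives under `/usr/local` in a versioned `cuda-*` directory as in the path above, the helper below lists candidate `gdscheck.py` scripts. The function name `find_gdscheck` is hypothetical and is not part of any NVIDIA tool.

```python
from pathlib import Path


def find_gdscheck(cuda_root="/usr/local"):
    """Return candidate gdscheck.py paths under versioned CUDA directories.

    Assumption: CUDA is installed as <cuda_root>/cuda-<version>/, with the
    GDS tools in gds/tools/ as in the example path in this README.
    """
    root = Path(cuda_root)
    # Matches e.g. /usr/local/cuda-12.2/gds/tools/gdscheck.py
    return sorted(root.glob("cuda-*/gds/tools/gdscheck.py"))


if __name__ == "__main__":
    for path in find_gdscheck():
        print(path)
```

Each printed path can then be run with the `-p` flag as shown above. An empty result only means the script is not in the assumed location, not that GDS is missing.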

### Basic

To help out with development, start by cloning this [repo-url](/../../):

    git clone <repo-url>

Then I recommend [using mamba](https://mamba.readthedocs.io/en/latest/installation/mamba-installation.html)

to install the dependencies.

A virtual environment will also be created with Python and

[JupyterLab](https://github.com/jupyterlab/jupyterlab) installed.

    cd foss4g2023oceania
    mamba env create --file environment.yml

Then activate the virtual environment.

    mamba activate foss4g2023oceania

Finally, double-check that the libraries have been installed.

    mamba list
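
As an optional extra check from inside the activated environment, the sketch below asks Python whether a few packages are importable. The package names are taken from the libraries mentioned in this README and are an assumption about what `environment.yml` installs; the helper `missing_packages` is hypothetical. Treat `environment.yml` as the authoritative list.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the top-level package names that cannot be found for import."""
    return [name for name in names if find_spec(name) is None]


# Assumption: these import names correspond to libraries named in this README.
EXPECTED = ["kvikio", "xbatcher", "zarr", "xarray"]

if __name__ == "__main__":
    missing = missing_packages(EXPECTED)
    print("Missing packages:", ", ".join(missing) if missing else "none")
```

`find_spec` only locates a package without importing it, so this check does not confirm that a GPU or GDS is usable.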

### Advanced

This is for those who want full reproducibility of the virtual environment.

Create a virtual environment with just Python and conda-lock installed first.

    mamba create --name foss4g2023oceania python=3.10 conda-lock=2.3.0
    mamba activate foss4g2023oceania

Generate a unified [`conda-lock.yml`](https://github.com/conda/conda-lock) file

based on the dependency specification in `environment.yml`. Use only when

creating a new `conda-lock.yml` file or refreshing an existing one.

    conda-lock lock --mamba --file environment.yml --platform linux-64 --with-cuda=11.8

To install or update a virtual environment from a lockfile, run the command
below. Use this to sync your dependencies to the exact versions in the
`conda-lock.yml` file.

    conda-lock install --mamba --name foss4g2023oceania conda-lock.yml

See also https://conda.github.io/conda-lock/output/#unified-lockfile for more

usage details.

## Running the scripts

To create a subset of the WeatherBench2 Zarr dataset, run:

    python 0_weatherbench2zarr.py

This saves a one-year subset of the WeatherBench2 ERA5 dataset at 6-hourly
resolution to your local disk (total size is about 18.2 GB). It includes data
at the 500 hPa pressure level only, with the variables `geopotential`,
`u_component_of_wind`, and `v_component_of_wind`.
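
The stated size can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below assumes a 0.25 degree global grid (721 x 1440 points), 4-byte float32 values, a non-leap year, and no compression. None of these are stated in this README, so treat the result as a rough estimate only.

```python
# Back-of-the-envelope size estimate for the one-year ERA5 subset.
HOURS_PER_STEP = 6         # 6-hourly resolution (from this README)
NUM_VARIABLES = 3          # geopotential, u and v wind components (from this README)
DAYS = 365                 # assumption: non-leap year
GRID_POINTS = 721 * 1440   # assumption: 0.25 degree latitude x longitude grid
BYTES_PER_VALUE = 4        # assumption: float32, uncompressed

time_steps = DAYS * 24 // HOURS_PER_STEP
total_bytes = time_steps * NUM_VARIABLES * GRID_POINTS * BYTES_PER_VALUE
size_gb = total_bytes / 1e9

print(f"{time_steps} time steps, about {size_gb:.1f} GB uncompressed")
```

Under these assumptions the estimate comes to roughly 18.2 GB, in line with the size quoted above.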

To run the benchmark experiment loading with the kvikIO engine, run:

    python 1_benchmark_kvikIOzarr.py

This prints a progress bar showing the ERA5 data being loaded in mini-batches
(simulating a neural network training loop). One 'epoch' should take under
15 seconds on an Ampere generation NVIDIA GPU (e.g. RTX A2000). A total of ten
epochs are run, and the total time taken is reported, along with the median,
mean, and standard deviation of the time taken per epoch.
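
For readers who want to see how such per-epoch statistics can be gathered, here is a minimal sketch using only the Python standard library. It is not the code in `1_benchmark_kvikIOzarr.py`. The names `time_epochs` and `run_epoch` are hypothetical, and using the sample standard deviation is an assumption.

```python
import statistics
import time


def time_epochs(run_epoch, num_epochs=10):
    """Time repeated calls to run_epoch and summarise the durations in seconds.

    run_epoch is any zero-argument callable that loads one epoch of data.
    num_epochs must be at least 2 so that a standard deviation is defined.
    """
    durations = []
    for _ in range(num_epochs):
        start = time.perf_counter()
        run_epoch()
        durations.append(time.perf_counter() - start)
    return {
        "total": sum(durations),
        "median": statistics.median(durations),
        "mean": statistics.mean(durations),
        "std": statistics.stdev(durations),  # sample standard deviation
    }


if __name__ == "__main__":
    # Stand-in workload; a real run would iterate over mini-batches instead.
    print(time_epochs(lambda: sum(range(100_000)), num_epochs=10))
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock suited to measuring short intervals.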

To compare the benchmark results between the `kvikio` and `zarr` engines, do

the following:

1. Run `jupyter lab` to launch a JupyterLab session

2. In your browser, open the `2_compare_results.ipynb` notebook in JupyterLab

3. Run all the cells in the notebook

The time to load the ERA5 subset data using the `kvikio` and `zarr` engines

will be printed out. There will also be a summary report of the relative

time difference between the CPU-based `zarr` and GPU-based `kvikio` engine, and

bar plots of the absolute time taken for each backend engine.
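
One common way to express a relative time difference is as a percentage of the baseline time. The sketch below shows that calculation. It is an assumption about the metric, not a copy of what `2_compare_results.ipynb` computes, and the numbers in the usage example are placeholders, not benchmark results.

```python
def relative_time_saving(baseline_seconds, candidate_seconds):
    """Return the time saved by the candidate as a percentage of the baseline.

    Positive values mean the candidate is faster than the baseline.
    """
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return (baseline_seconds - candidate_seconds) / baseline_seconds * 100.0


if __name__ == "__main__":
    # Placeholder timings for illustration only, not measured values.
    zarr_seconds, kvikio_seconds = 20.0, 15.0
    saving = relative_time_saving(zarr_seconds, kvikio_seconds)
    print(f"kvikio engine saves {saving:.1f}% relative to the zarr engine")
```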

# References

## Links

- https://xarray.dev/blog/xarray-kvikio

- https://developer.nvidia.com/blog/gpudirect-storage

- https://developer.nvidia.com/blog/machine-learning-frameworks-interoperability-part-2-data-loading-and-data-transfer-bottlenecks/

- https://developmentseed.org/blog/2023-09-20-see-you-at-foss4g-sotm-oceania-2023

- https://medium.com/rapids-ai/pytorch-rapids-rmm-maximize-the-memory-efficiency-of-your-workflows-f475107ba4d4

- https://developer.nvidia.com/blog/introduction-cuda-aware-mpi/

## License

All code in this repository is licensed under

GNU Lesser General Public License 3.0

[(LGPL-3.0)](https://www.gnu.org/licenses/lgpl-3.0.en.html).

All other non-code content is licensed under

Creative Commons Attribution-ShareAlike 4.0 International

[(CC BY-SA 4.0)](https://creativecommons.org/licenses/by-sa/4.0).