https://github.com/williamlwj/pyxab

PyXAB - A Python Library for X-Armed Bandit and Online Blackbox Optimization Algorithms


- Host: GitHub

- URL: https://github.com/williamlwj/pyxab

- Owner: WilliamLwj

- License: mit

- Created: 2022-03-16T19:21:23.000Z (almost 4 years ago)

- Default Branch: main

- Last Pushed: 2024-10-24T22:54:33.000Z (over 1 year ago)

- Last Synced: 2025-09-04T10:58:00.874Z (5 months ago)

- Topics: algorithm, automl, bandit-algorithms, blackbox-optimization, continuous-armed-bandit, data-science, hyperparameter-optimization, hyperparameter-tuning, lipschitz-bandit, machine-learning, machine-learning-algorithms, online-learning, optimization, optimization-algorithms, reinforcement-learning, x-armed-bandit

- Language: Python

- Homepage: https://pyxab.readthedocs.io/

- Size: 13.8 MB

- Stars: 126

- Watchers: 17

- Forks: 30

- Open Issues: 4


Metadata Files:

- Readme: README.md

- License: LICENSE

- Citation: CITATION.cff


README

# PyXAB - Python *X*-Armed Bandit

PyXAB is an open-source Python library for *X*-armed bandit algorithms, a family of optimizers for online black-box optimization and hyperparameter optimization.

PyXAB implements **10+** optimization algorithms, including classic ones such as [Zooming](https://arxiv.org/pdf/0809.4882.pdf), [StoSOO](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/StoSOO.py), and [HCT](https://proceedings.mlr.press/v32/azar14.html), and more recent ones such as [GPO](https://proceedings.mlr.press/v98/xuedong19a.html), [StroquOOL](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/StroquOOL.py), and [VHCT](https://arxiv.org/abs/2106.09215). PyXAB also provides the most commonly used synthetic objectives for evaluating algorithm performance, along with implementations of several hierarchical partitions.

**PyXAB features:**

- **User-friendly APIs, clear documentation, and detailed examples**
- **Comprehensive library** of optimization algorithms, partitions and synthetic objectives
- **High code quality and high test coverage**
- **Few dependencies**, so it combines flexibly with other packages such as PyTorch and scikit-learn

**Reminder**: The algorithms are maximization algorithms!
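Because the algorithms maximize, a quantity you want to minimize (a loss, an error rate) has to be turned into a reward first. The usual way is to negate it. The sketch below shows that idea in plain Python; `as_reward` and `squared_error` are illustrative names, not part of PyXAB.

```python
def as_reward(loss_fn):
    """Wrap a loss (lower is better) into a reward (higher is better)."""
    def reward_fn(point):
        return -loss_fn(point)
    return reward_fn


def squared_error(point):
    """Toy 1-D loss with its minimum at x = 0.5 (illustrative only)."""
    return (point[0] - 0.5) ** 2


reward = as_reward(squared_error)

# The minimizer of the loss is the maximizer of the reward.
print(reward([0.5]) > reward([0.0]))  # True
```

The value returned by `reward(point)` is what would be handed to the algorithm as the objective evaluation.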

## Quick Links

- [Quick Example](#quick-example)
- [Documentations](#documentations)
- [Installation](#installation)
- [Features](#features)
  * [*X*-armed bandit algorithms](#x-armed-bandit-algorithms)
  * [Hierarchical partition](#hierarchical-partition)
  * [Synthetic objectives](#synthetic-objectives)
- [Contributing](#contributing)
- [Citations](#citations)

## Quick Example

PyXAB follows a natural and straightforward API design that mirrors the online black-box optimization paradigm. The following is a simple 6-line usage example.

First, define the parameter domain and the algorithm to run. Then, at every round `t`, call `algo.pull(t)` to get a point and call `algo.receive_reward(t, reward)` to pass the objective evaluation (the reward) back to the algorithm.

```python3
from PyXAB.algos.HOO import T_HOO

domain = [[0, 1]]  # Parameter is 1-D and between 0 and 1
algo = T_HOO(rounds=1000, domain=domain)

for t in range(1000):
    point = algo.pull(t)  # Get the next point to evaluate
    reward = 1  # TODO: User-defined objective returns the reward
    algo.receive_reward(t, reward)  # Feed the reward back to the algorithm
```

More detailed examples can be found in the [example gallery](https://pyxab.readthedocs.io/en/latest/getting_started/auto_examples/index.html).
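To make the pull/receive loop concrete without requiring PyXAB to be installed, here is a minimal sketch that runs the same loop against a stand-in optimizer. `RandomSearch`, `objective`, and `run` are hypothetical names written for this illustration; `RandomSearch` is not a PyXAB class and simply ignores feedback. Only the two method names, `pull` and `receive_reward`, are taken from the example above.

```python
import random


class RandomSearch:
    """Hypothetical stand-in (NOT part of PyXAB) with the same two methods."""

    def __init__(self, domain, seed=0):
        self.domain = domain
        self.rng = random.Random(seed)

    def pull(self, t):
        # Sample one coordinate uniformly from each [low, high] interval.
        return [self.rng.uniform(low, high) for low, high in self.domain]

    def receive_reward(self, t, reward):
        # Plain random search does not use the feedback.
        pass


def objective(point):
    """Toy reward with a single peak of height 1 at x = 0.3."""
    return 1.0 - abs(point[0] - 0.3)


def run(algo, objective, rounds):
    """Run the online loop and remember the best point seen so far."""
    best_point, best_reward = None, float("-inf")
    for t in range(rounds):
        point = algo.pull(t)
        reward = objective(point)
        algo.receive_reward(t, reward)
        if reward > best_reward:
            best_point, best_reward = point, reward
    return best_point, best_reward


best_point, best_reward = run(RandomSearch([[0, 1]]), objective, rounds=1000)
print(best_point, best_reward)
```

Since `run` relies only on `pull` and `receive_reward`, an algorithm object such as the `T_HOO` instance from the example above should be usable in place of the stand-in, assuming PyXAB is installed.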

## Documentations

* The most up-to-date [documentation](https://pyxab.readthedocs.io/)

* The [roadmap](https://github.com/users/WilliamLwj/projects/1) for our project

* Our [manuscript](https://arxiv.org/abs/2303.04030) for the library

## Installation

To install via pip, run the following lines of code:

```bash
pip install PyXAB # normal install
pip install --upgrade PyXAB # or update if needed
```

To install via git, run the following lines of code:

```bash
git clone https://github.com/WilliamLwj/PyXAB.git
cd PyXAB
pip install .
```
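After either install route, one quick way to confirm that the package is importable is to ask Python's import system whether it can locate it. The sketch below uses only the standard library; the helper name `is_installed` is made up for illustration, and the import name `PyXAB` is the one used in the Quick Example.

```python
import importlib.util


def is_installed(package_name):
    """Return True if Python can locate a top-level package with this name."""
    return importlib.util.find_spec(package_name) is not None


if is_installed("PyXAB"):
    print("PyXAB is importable")
else:
    print("PyXAB was not found; try `pip install PyXAB`")
```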

## Features:

### *X*-armed bandit algorithms

* Algorithms marked with a star (*) are meta-algorithms (wrappers)

| Algorithm | Research Paper | Year |
|---|---|---|
| [Zooming](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/Zooming.py) | [Multi-Armed Bandits in Metric Spaces](https://arxiv.org/pdf/0809.4882.pdf) | 2008 |
| [T-HOO](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/HOO.py) | [*X*-Armed Bandits](https://jmlr.org/papers/v12/bubeck11a.html) | 2011 |
| [DOO](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/DOO.py) | [Optimistic Optimization of a Deterministic Function without the Knowledge of its Smoothness](https://proceedings.neurips.cc/paper/2011/file/7e889fb76e0e07c11733550f2a6c7a5a-Paper.pdf) | 2011 |
| [SOO](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/SOO.py) | [Optimistic Optimization of a Deterministic Function without the Knowledge of its Smoothness](https://proceedings.neurips.cc/paper/2011/file/7e889fb76e0e07c11733550f2a6c7a5a-Paper.pdf) | 2011 |
| [StoSOO](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/StoSOO.py) | [Stochastic Simultaneous Optimistic Optimization](http://proceedings.mlr.press/v28/valko13.pdf) | 2013 |
| [HCT](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/HCT.py) | [Online Stochastic Optimization Under Correlated Bandit Feedback](https://proceedings.mlr.press/v32/azar14.html) | 2014 |
| [POO*](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/POO.py) | [Black-box optimization of noisy functions with unknown smoothness](https://papers.nips.cc/paper/2015/hash/ab817c9349cf9c4f6877e1894a1faa00-Abstract.html) | 2015 |
| [GPO*](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/GPO.py) | [General Parallel Optimization Without A Metric](https://proceedings.mlr.press/v98/xuedong19a.html) | 2019 |
| [PCT](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/PCT.py) | [General Parallel Optimization Without A Metric](https://proceedings.mlr.press/v98/xuedong19a.html) | 2019 |
| [SequOOL](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/SequOOL.py) | [A Simple Parameter-free And Adaptive Approach to Optimization Under A Minimal Local Smoothness Assumption](https://arxiv.org/pdf/1810.00997.pdf) | 2019 |
| [StroquOOL](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/StroquOOL.py) | [A Simple Parameter-free And Adaptive Approach to Optimization Under A Minimal Local Smoothness Assumption](https://arxiv.org/pdf/1810.00997.pdf) | 2019 |
| [VROOM](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/VROOM.py) | [Derivative-Free & Order-Robust Optimisation](https://arxiv.org/pdf/1910.04034.pdf) | 2020 |
| [VHCT](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/VHCT.py) | [Optimum-statistical Collaboration Towards General and Efficient Black-box Optimization](https://openreview.net/forum?id=ClIcmwdlxn) | 2023 |
| [VPCT](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/VPCT.py) | N.A. ([GPO](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/GPO.py) + [VHCT](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/algos/VHCT.py)) | N.A. |

### Hierarchical partition

| Partition | Description |
|---|---|
| [BinaryPartition](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/partition/BinaryPartition.py) | Equal-size binary partition of the parameter space; the split dimension is chosen uniformly at random |
| [RandomBinaryPartition](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/partition/RandomBinaryPartition.py) | Random-size binary partition of the parameter space; the split dimension is chosen uniformly at random |
| [DimensionBinaryPartition](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/partition/DimensionBinaryPartition.py) | Equal-size partition of the space with a binary split on each dimension; each node has 2^d children |
| [KaryPartition](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/partition/KaryPartition.py) | Equal-size K-ary partition of the parameter space; the split dimension is chosen uniformly at random |
| [RandomKaryPartition](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/partition/RandomKaryPartition.py) | Random-size K-ary partition of the parameter space; the split dimension is chosen uniformly at random |
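To illustrate what these descriptions mean, the sketch below splits a single cell of the parameter space in two of the ways listed in the table: an equal-size binary split along one randomly chosen dimension, and a simultaneous binary split of every dimension. It is a plain-Python illustration and does not reproduce PyXAB's partition classes; the function names are hypothetical, and a cell is assumed to be a list of `[low, high]` intervals, like `domain` in the Quick Example.

```python
import itertools
import random


def binary_split(cell, rng):
    """Halve `cell` along one dimension chosen uniformly at random."""
    dim = rng.randrange(len(cell))
    low, high = cell[dim]
    mid = (low + high) / 2.0
    left = [list(interval) for interval in cell]
    right = [list(interval) for interval in cell]
    left[dim] = [low, mid]
    right[dim] = [mid, high]
    return [left, right]


def dimension_binary_split(cell):
    """Halve every dimension at once, which yields 2^d children."""
    halves = []
    for low, high in cell:
        mid = (low + high) / 2.0
        halves.append([[low, mid], [mid, high]])
    return [list(combo) for combo in itertools.product(*halves)]


rng = random.Random(0)
domain = [[0.0, 1.0], [-1.0, 1.0]]  # a 2-D cell

children = binary_split(domain, rng)
grid = dimension_binary_split(domain)
print(len(children), len(grid))  # 2 4
```

Applying either function recursively to the children produces deeper levels of the hierarchy, with cells shrinking at each level.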

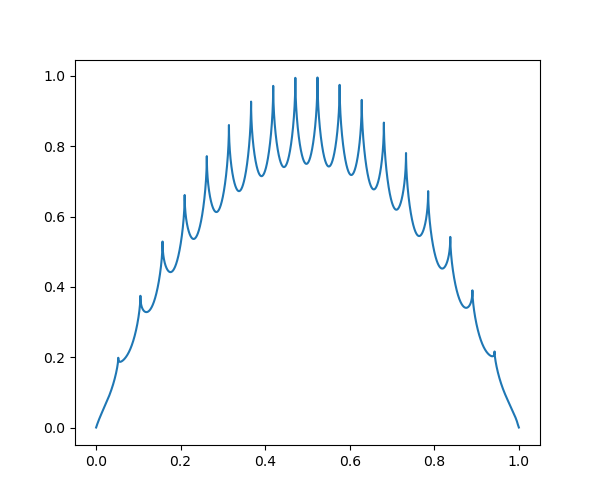

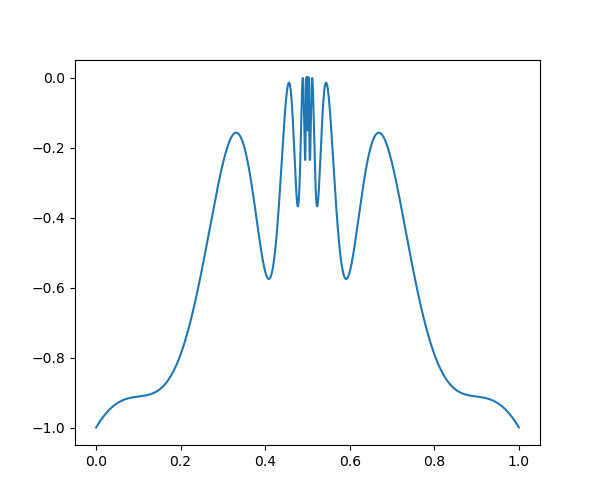

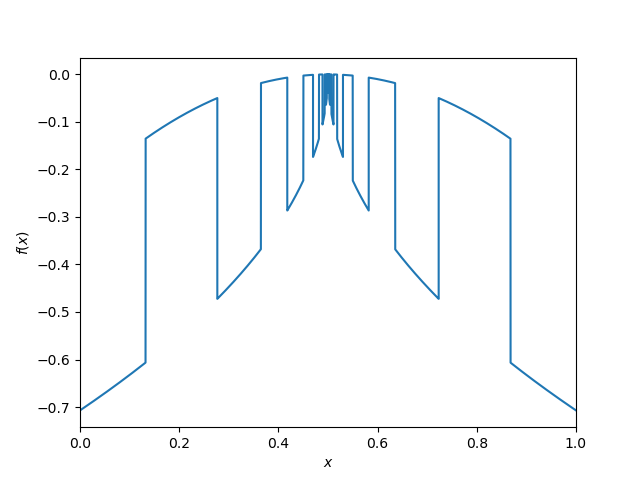

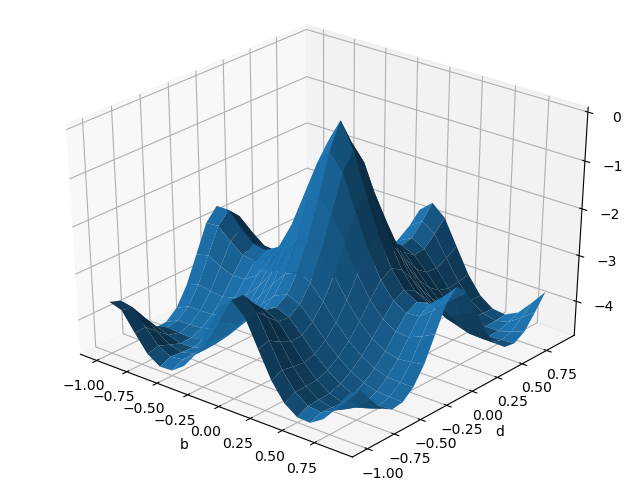

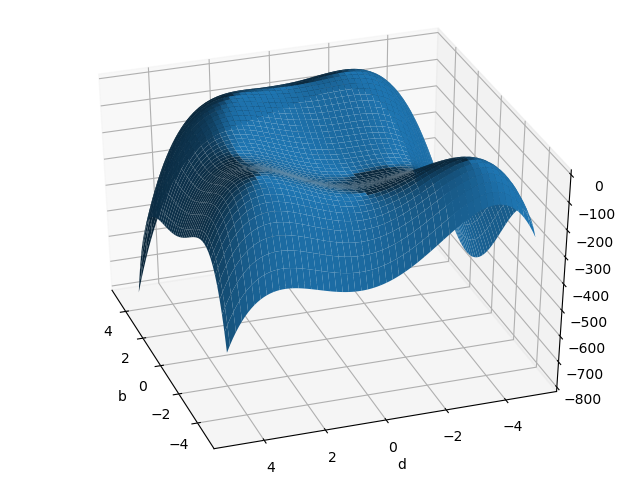

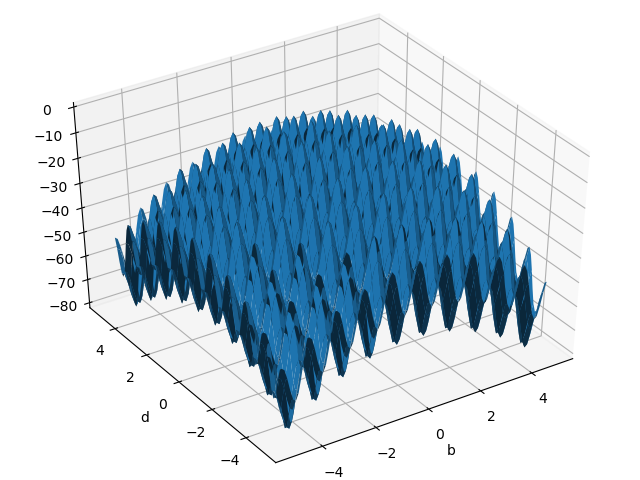

### Synthetic objectives

* Some of these objectives can be found [on Wikipedia](https://en.wikipedia.org/wiki/Test_functions_for_optimization)

| Objectives ![]() | Image |

| Image |

| --- |--- |

| [Garland](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/synthetic_obj/Garland.py) |  |

|

| [DoubleSine](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/synthetic_obj/DoubleSine.py) |  |

|

| [DifficultFunc](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/synthetic_obj/DifficultFunc.py) |  |

|

| [Ackley](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/synthetic_obj/Ackley.py) |  |

|

| [Himmelblau](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/synthetic_obj/Himmelblau.py) |  |

|

| [Rastrigin](https://github.com/WilliamLwj/PyXAB/blob/main/PyXAB/synthetic_obj/Rastrigin.py) |  |

|
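Several of these test functions are conventionally stated as minimization problems, so using them with maximization algorithms means flipping the sign. As a rough sketch, here are the textbook Himmelblau and Rastrigin functions written as rewards. These follow the standard formulas and are not copied from PyXAB's own classes, which may scale or shift the functions differently; the function names are illustrative.

```python
import math


def himmelblau_reward(point):
    """Negated Himmelblau function; the maximum reward 0 is reached at (3, 2)."""
    x, y = point
    return -((x ** 2 + y - 11) ** 2 + (x + y ** 2 - 7) ** 2)


def rastrigin_reward(point, a=10.0):
    """Negated Rastrigin function; the maximum reward 0 is reached at the origin."""
    total = a * len(point)
    for x in point:
        total += x ** 2 - a * math.cos(2 * math.pi * x)
    return -total


print(himmelblau_reward([3.0, 2.0]) > himmelblau_reward([0.0, 0.0]))  # True
print(rastrigin_reward([0.0, 0.0]) > rastrigin_reward([0.5, 0.5]))    # True
```

Either function can serve as the user-defined objective in the pull/receive loop, with the domain set to the usual search box for that function.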

## Contributing

We appreciate all forms of help and contributions, including but not limited to:

* Star and watch our project
* Open an issue for any bugs you find or features you want to add to our library
* Fork our project and submit a pull request with your code

Please read the [contributing instructions](https://pyxab.readthedocs.io/en/latest/info/contributing.html) before submitting a pull request.

## Citations

If you use our package in your research or projects, we kindly ask you to cite our work:

```text
@article{Li2023PyXAB,
  doi = {10.21105/joss.06507},
  url = {https://joss.theoj.org/papers/10.21105/joss.06507},
  author = {Li, Wenjie and Li, Haoze and Song, Qifan and Honorio, Jean},
  title = {PyXAB -- A Python Library for $\mathcal{X}$-Armed Bandit and Online Blackbox Optimization Algorithms},
  journal={Journal of Open Source Software},
  year = {2024},
  issn={2475-9066},
}
```

We would also appreciate it if you could cite our related works.

```text
@article{li2023optimumstatistical,
  title={Optimum-statistical Collaboration Towards General and Efficient Black-box Optimization},
  author={Wenjie Li and Chi-Hua Wang and Guang Cheng and Qifan Song},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2023},
  url={https://openreview.net/forum?id=ClIcmwdlxn},
  note={}
}
```

```text
@article{Li2022Federated,
  title={Federated $\chi$-armed Bandit},
  volume={38},
  url={https://ojs.aaai.org/index.php/AAAI/article/view/29267},
  DOI={10.1609/aaai.v38i12.29267},
  number={12},
  journal={Proceedings of the AAAI Conference on Artificial Intelligence},
  author={Li, Wenjie and Song, Qifan and Honorio, Jean and Lin, Guang},
  year={2024},
  month={Mar.},
  pages={13628-13636}
}
```

```text
@InProceedings{Li2024Personalized,
  title = {Personalized Federated $\chi$-armed Bandit},
  author = {Li, Wenjie and Song, Qifan and Honorio, Jean},
  booktitle = {Proceedings of The 27th International Conference on Artificial Intelligence and Statistics},
  pages = {37--45},
  year = {2024},
  editor = {Dasgupta, Sanjoy and Mandt, Stephan and Li, Yingzhen},
  volume = {238},
  series = {Proceedings of Machine Learning Research},
  month = {02--04 May},
  publisher = {PMLR},
  pdf = {https://proceedings.mlr.press/v238/li24a/li24a.pdf},
  url = {https://proceedings.mlr.press/v238/li24a.html},
}
```