https://github.com/willyfh/graph-transformer

An unofficial implementation of Graph Transformer (Masked Label Prediction: Unified Message Passing Model for Semi-Supervised Classification) - IJCAI 2021

- Host: GitHub

- URL: https://github.com/willyfh/graph-transformer

- Owner: willyfh

- License: mit

- Created: 2023-01-17T10:13:47.000Z (over 2 years ago)

- Default Branch: main

- Last Pushed: 2024-04-20T11:07:38.000Z (about 1 year ago)

- Last Synced: 2025-06-03T02:10:12.491Z (about 1 month ago)

- Topics: deep-learning, gnn, graph-deep-learning, graph-neural-networks, graph-transformer, neural-networks, pytorch

- Language: Python

- Homepage: https://www.ijcai.org/proceedings/2021/0214.pdf

- Size: 483 KB

- Stars: 33

- Watchers: 1

- Forks: 6

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE.txt

# Graph Transformer (IJCAI 2021)

An unofficial implementation of the Graph Transformer from:

> [Masked Label Prediction: Unified Message Passing Model for Semi-Supervised Classification](https://www.ijcai.org/proceedings/2021/0214.pdf) (IJCAI 2021)

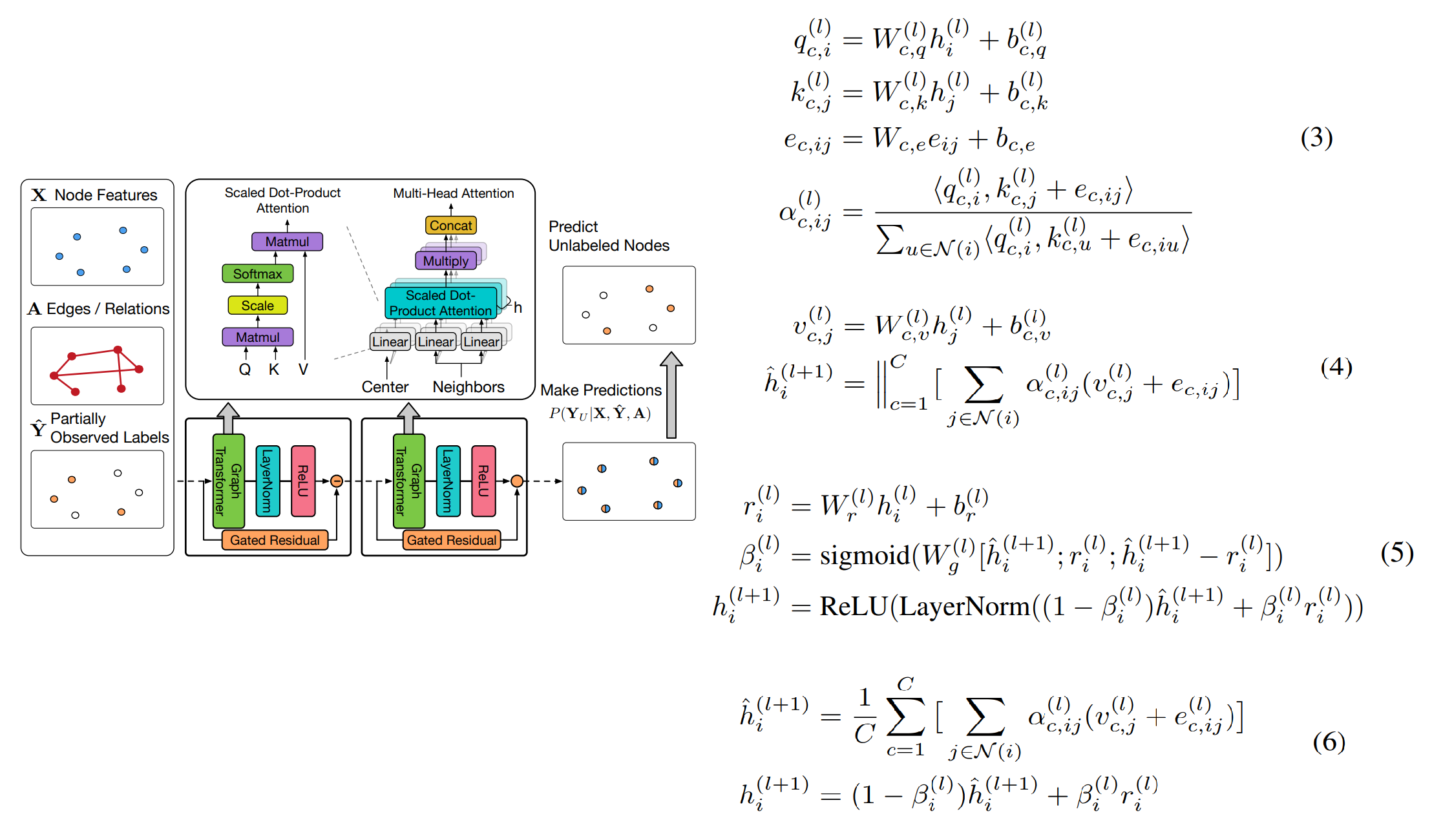

The architecture follows Section 3.1 (Graph Transformer) of the paper.
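As a reading aid for Section 3.1, here is a minimal PyTorch sketch of a single graph transformer layer, written from a reading of the paper's equations rather than taken from this package. It shows per-head attention in which projected edge features are added to both the keys and the values, followed by a gated residual connection. The class name `GraphTransformerLayerSketch`, its arguments (`head_dim`, `average_heads`), and the dense 0/1 `adjacency` mask convention are illustrative assumptions; the package's own internals may differ.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class GraphTransformerLayerSketch(nn.Module):
    """Hypothetical single layer sketching Section 3.1 (not this package's code)."""

    def __init__(self, node_dim, head_dim, num_heads, edge_dim=None, average_heads=False):
        super().__init__()
        self.num_heads, self.head_dim = num_heads, head_dim
        self.average_heads = average_heads
        inner = num_heads * head_dim
        self.to_q = nn.Linear(node_dim, inner)  # q_i = W_q h_i + b_q (all heads at once)
        self.to_k = nn.Linear(node_dim, inner)  # k_j = W_k h_j + b_k
        self.to_v = nn.Linear(node_dim, inner)  # v_j = W_v h_j + b_v
        # e_ij = W_e e_ij + b_e, added to keys and values; skipped without edge features
        self.to_e = nn.Linear(edge_dim, inner) if edge_dim is not None else None
        out_dim = head_dim if average_heads else inner
        self.to_r = nn.Linear(node_dim, out_dim)  # residual r_i = W_r h_i + b_r
        self.gate = nn.Linear(3 * out_dim, 1, bias=False)  # one scalar gate beta_i per node
        self.norm = nn.LayerNorm(out_dim)

    def forward(self, nodes, adjacency, edges=None):
        # nodes: (B, N, node_dim); adjacency: (B, N, N), nonzero [i, j] = node i attends to j
        # edges: (B, N, N, edge_dim) or None; every node needs >= 1 neighbour (e.g. a self-loop)
        b, n, _ = nodes.shape
        h, d = self.num_heads, self.head_dim
        q = self.to_q(nodes).view(b, n, h, d).transpose(1, 2)  # (B, H, N, d)
        k = self.to_k(nodes).view(b, n, h, d).transpose(1, 2).unsqueeze(2)  # (B, H, 1, N, d)
        v = self.to_v(nodes).view(b, n, h, d).transpose(1, 2).unsqueeze(2)  # (B, H, 1, N, d)
        if self.to_e is not None and edges is not None:
            e = self.to_e(edges).view(b, n, n, h, d).permute(0, 3, 1, 2, 4)  # (B, H, N, N, d)
            k, v = k + e, v + e  # k_j + e_ij and v_j + e_ij
        # score[..., i, j] = q_i . (k_j + e_ij) / sqrt(d), then softmax over neighbours j
        scores = (q.unsqueeze(3) * k).sum(-1) / math.sqrt(d)  # (B, H, N, N)
        scores = scores.masked_fill(adjacency.unsqueeze(1) <= 0, float("-inf"))
        attn = scores.softmax(dim=-1)
        heads = (attn.unsqueeze(-1) * v).sum(dim=3)  # (B, H, N, d): sum_j alpha_ij (v_j + e_ij)
        if self.average_heads:
            h_hat = heads.mean(dim=1)  # average the heads (output layer)
        else:
            h_hat = heads.transpose(1, 2).reshape(b, n, h * d)  # concatenate the heads
        r = self.to_r(nodes)
        beta = torch.sigmoid(self.gate(torch.cat([h_hat, r, h_hat - r], dim=-1)))
        mixed = (1 - beta) * h_hat + beta * r  # gated residual
        if self.average_heads:
            return mixed  # assumption from the paper: the averaging output layer drops LayerNorm/ReLU
        return F.relu(self.norm(mixed))
```

With `average_heads=False` each node gets `num_heads * head_dim` output features; with `average_heads=True` it gets `head_dim`. This appears to correspond to the concatenate-versus-average choice exposed by the `last_average` argument of `GraphTransformerModel`.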

I based the code on [this repository](https://github.com/lucidrains/graph-transformer-pytorch), with some modifications to match the original paper published at IJCAI 2021.

## Installation

```bash
pip install graph-transformer
```

## Usage

```python
import torch
from graph_transformer import GraphTransformerModel

model = GraphTransformerModel(
    node_dim=512,
    edge_dim=512,
    num_blocks=3,       # number of graph transformer blocks
    num_heads=8,
    last_average=True,  # whether to average (True) or concatenate (False) the heads in the last block
    model_dim=None,     # if None, node_dim is used as the dimension of the graph transformer blocks
)

nodes = torch.randn(1, 128, 512)          # (batch, num_nodes, node_dim)
edges = torch.randn(1, 128, 128, 512)     # (batch, num_nodes, num_nodes, edge_dim)
adjacency = torch.ones(1, 128, 128)       # (batch, num_nodes, num_nodes); all ones = fully connected

nodes = model(nodes, edges, adjacency)
```

**Note**: If your graph has no edge features, set `edge_dim` to `None` when constructing the model and pass `None` for `edges` in the forward pass.
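The usage example passes an all-ones `adjacency`, so every node attends to every other node. For a sparse graph stored as an edge list, a dense mask can be built first. This is a minimal sketch that assumes, from the shapes in the usage example, that `adjacency` is a `(batch, num_nodes, num_nodes)` tensor whose nonzero entries mark edges. `edge_list_to_adjacency` is a hypothetical helper, not part of `graph_transformer`; it treats the graph as undirected and adds self-loops, so check the package source before relying on a particular convention.

```python
import torch


def edge_list_to_adjacency(edge_index, num_nodes, add_self_loops=True):
    """Hypothetical helper: dense (1, N, N) 0/1 mask from a (2, E) undirected edge list."""
    adjacency = torch.zeros(1, num_nodes, num_nodes)
    src, dst = edge_index  # two 1-D tensors of node indices
    adjacency[0, src, dst] = 1.0
    adjacency[0, dst, src] = 1.0  # undirected: mark both directions
    if add_self_loops:
        idx = torch.arange(num_nodes)
        adjacency[0, idx, idx] = 1.0  # each node also attends to itself
    return adjacency


# A 4-node path graph: 0 - 1 - 2 - 3
edge_index = torch.tensor([[0, 1, 2], [1, 2, 3]])
adjacency = edge_list_to_adjacency(edge_index, num_nodes=4)
print(adjacency[0].tolist())
# [[1.0, 1.0, 0.0, 0.0], [1.0, 1.0, 1.0, 0.0], [0.0, 1.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
```

The resulting mask would then be passed as `adjacency` together with `nodes` of shape `(1, 4, node_dim)`.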