https://github.com/woctezuma/finetune-detr

Fine-tune Facebook's DETR (DEtection TRansformer) on Colaboratory.

- Host: GitHub

- URL: https://github.com/woctezuma/finetune-detr

- Owner: woctezuma

- License: mit

- Created: 2020-08-03T17:17:35.000Z (over 5 years ago)

- Default Branch: master

- Last Pushed: 2023-05-09T18:36:05.000Z (over 2 years ago)

- Last Synced: 2023-05-09T19:42:32.974Z (over 2 years ago)

- Topics: balloon, balloons, colab, colab-notebook, colaboratory, detr, facebook, finetune, finetunes, finetuning, google-colab, google-colab-notebook, google-colaboratory, instance, instance-segmentation, instances, segementation, segment

- Language: Jupyter Notebook

- Size: 79.5 MB

- Stars: 90

- Watchers: 2

- Forks: 16

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

README

# Finetune DETR

The goal of this [Google Colab](https://colab.research.google.com/) notebook is to fine-tune Facebook's DETR (DEtection TRansformer).

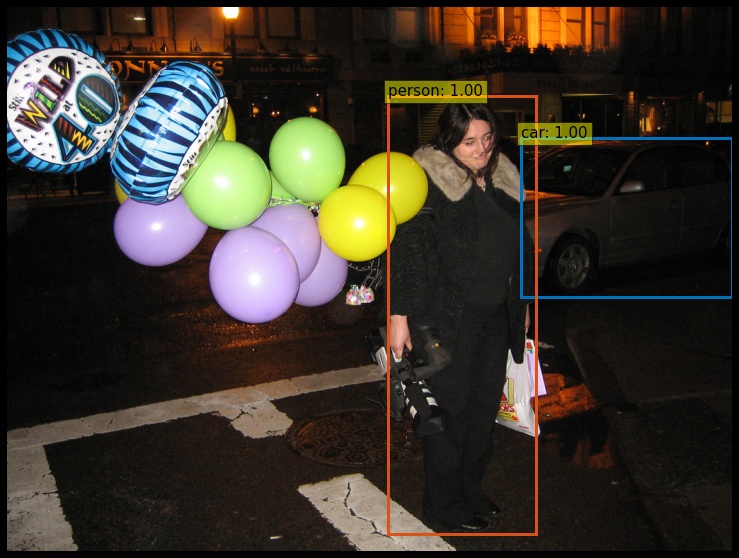

From left to right: results obtained with pre-trained DETR, and after fine-tuning on the `balloon` dataset.

## Usage

- Acquire a dataset, e.g. the `balloon` dataset,

- Convert the dataset to the COCO format,

- Run [`finetune_detr.ipynb`][finetune_detr-notebook] to fine-tune DETR on this dataset.

[![Open In Colab][colab-badge]][finetune_detr-notebook]

- Alternatively, run [`finetune_detectron2.ipynb`][finetune_detectron2-notebook] to rely on the detectron2 wrapper.

[![Open In Colab][colab-badge]][finetune_detectron2-notebook]

NB: Fine-tuning is recommended if your dataset has [fewer than 10k images](https://github.com/facebookresearch/detr/issues/9#issuecomment-635357693).

Otherwise, training from scratch would be an option.
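
As a rough illustration of the conversion step, the sketch below turns one polygon (lists of x and y vertex coordinates, as stored by polygon annotation tools such as VIA) into a COCO-style annotation dictionary. The function name `polygon_to_coco_annotation` is hypothetical, and the default `category_id=0` is an assumption for a single-class dataset; check the identifiers against whatever your converter and training script expect.

```python
def polygon_to_coco_annotation(xs, ys, annotation_id, image_id, category_id=0):
    """Build one COCO-style annotation dict from polygon vertices.

    Hypothetical helper for illustration only. `category_id=0` is an
    assumption for a single-class dataset.
    """
    x_min, y_min = min(xs), min(ys)
    width, height = max(xs) - x_min, max(ys) - y_min

    # Polygon area via the shoelace formula.
    n = len(xs)
    twice_area = sum(
        xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n)
    )
    area = abs(twice_area) / 2

    # COCO stores a polygon as a flat list [x1, y1, x2, y2, ...].
    flat_polygon = [coord for point in zip(xs, ys) for coord in point]

    return {
        "id": annotation_id,
        "image_id": image_id,
        "category_id": category_id,
        "bbox": [x_min, y_min, width, height],  # COCO boxes are [x, y, w, h]
        "area": area,
        "iscrowd": 0,
        "segmentation": [flat_polygon],
    }
```

Note that COCO boxes use absolute pixel coordinates in `[x, y, width, height]` order, which differs from the normalized `(center_x, center_y, w, h)` format that the DETR losses operate on.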

## Data

DETR will be fine-tuned on a tiny dataset: the [`balloon` dataset](https://github.com/matterport/Mask_RCNN/tree/master/samples/balloon).

We refer to it as the `custom` dataset.

There are 61 images in the training set, and 13 images in the validation set.

We expect the directory structure to be the following:

```
path/to/coco/
├ annotations/  # JSON annotations
│  ├ annotations/custom_train.json
│  └ annotations/custom_val.json
├ train2017/    # training images
└ val2017/      # validation images
```
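
Before launching a run, it can help to check that the layout above is in place. The following is a minimal sketch using only the standard library; `EXPECTED_ENTRIES` and `missing_entries` are hypothetical names introduced here, not part of DETR or of the notebooks.

```python
from pathlib import Path

# Entries taken from the directory structure described above.
EXPECTED_ENTRIES = (
    "annotations/custom_train.json",
    "annotations/custom_val.json",
    "train2017",
    "val2017",
)


def missing_entries(root):
    """Return the expected files or folders that do not exist under `root`."""
    root = Path(root)
    return [entry for entry in EXPECTED_ENTRIES if not (root / entry).exists()]


# Example usage (the path is a placeholder):
# print(missing_entries("path/to/coco"))
```

An empty list means that every expected entry was found.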

NB: if you are confused about the number of classes, check [this GitHub issue](https://github.com/facebookresearch/detr/issues/108#issuecomment-650269223).

## Metrics

Typical metrics to monitor, partially shown in [this notebook][metrics-notebook], include:

- the Average Precision (AP), which is [the primary challenge metric](https://cocodataset.org/#detection-eval) for the COCO dataset,

- losses (total loss, classification loss, L1 bounding-box distance loss, GIoU loss),

- errors (cardinality error, class error).

As mentioned in [the paper](https://arxiv.org/abs/2005.12872), there are 3 components to the matching cost and to the total loss:

- classification loss,

```python
def loss_labels(self, outputs, targets, indices, num_boxes, log=True):
    """Classification loss (NLL)
    targets dicts must contain the key "labels" containing a tensor of dim [nb_target_boxes]
    """
    [...]
    loss_ce = F.cross_entropy(src_logits.transpose(1, 2), target_classes, self.empty_weight)
    losses = {'loss_ce': loss_ce}
```

- L1 bounding box distance loss,

```python
def loss_boxes(self, outputs, targets, indices, num_boxes):
    """Compute the losses related to the bounding boxes, the L1 regression loss and the GIoU loss
    targets dicts must contain the key "boxes" containing a tensor of dim [nb_target_boxes, 4]
    The target boxes are expected in format (center_x, center_y, w, h), normalized by the image size.
    """
    [...]
    loss_bbox = F.l1_loss(src_boxes, target_boxes, reduction='none')
    losses['loss_bbox'] = loss_bbox.sum() / num_boxes
```

- [Generalized Intersection over Union (GIoU)](https://giou.stanford.edu/) loss, which is scale-invariant.

```python
loss_giou = 1 - torch.diag(box_ops.generalized_box_iou(
    box_ops.box_cxcywh_to_xyxy(src_boxes),
    box_ops.box_cxcywh_to_xyxy(target_boxes)))
losses['loss_giou'] = loss_giou.sum() / num_boxes
```
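
To make the two box losses more concrete, here is a plain-Python sketch that mirrors the excerpts above for a handful of already-matched box pairs. It is not DETR's implementation: the function names are chosen for illustration, boxes are plain tuples rather than tensors, and `num_boxes` is simply the number of pairs passed in.

```python
def cxcywh_to_xyxy(box):
    """Convert (center_x, center_y, w, h) to (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def l1_distance(box_a, box_b):
    """Sum of absolute coordinate differences between two boxes."""
    return sum(abs(a - b) for a, b in zip(box_a, box_b))


def generalized_iou(box_a, box_b):
    """GIoU of two (x0, y0, x1, y1) boxes, both assumed to have positive area."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    area_a = (ax1 - ax0) * (ay1 - ay0)
    area_b = (bx1 - bx0) * (by1 - by0)

    # Intersection is empty when the boxes do not overlap.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = area_a + area_b - inter

    # Smallest axis-aligned box enclosing both inputs.
    hull = (max(ax1, bx1) - min(ax0, bx0)) * (max(ay1, by1) - min(ay0, by0))
    return inter / union - (hull - union) / hull


def box_losses(src_boxes, target_boxes):
    """L1 and GIoU losses for matched (cx, cy, w, h) box pairs."""
    num_boxes = len(target_boxes)
    pairs = list(zip(src_boxes, target_boxes))
    loss_bbox = sum(l1_distance(s, t) for s, t in pairs) / num_boxes
    loss_giou = sum(
        1 - generalized_iou(cxcywh_to_xyxy(s), cxcywh_to_xyxy(t)) for s, t in pairs
    ) / num_boxes
    return {"loss_bbox": loss_bbox, "loss_giou": loss_giou}
```

Multiplying every coordinate of both boxes by the same factor scales the L1 term by that factor but leaves the GIoU term unchanged, which is what "scale-invariant" refers to.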

Moreover, there are two errors:

- cardinality error,

```python
def loss_cardinality(self, outputs, targets, indices, num_boxes):
    """ Compute the cardinality error, ie the absolute error in the number of predicted non-empty
    boxes. This is not really a loss, it is intended for logging purposes only. It doesn't
    propagate gradients
    """
    [...]
    # Count the number of predictions that are NOT "no-object" (which is the last class)
    card_pred = (pred_logits.argmax(-1) != pred_logits.shape[-1] - 1).sum(1)
    card_err = F.l1_loss(card_pred.float(), tgt_lengths.float())
    losses = {'cardinality_error': card_err}
```

- [class error](https://github.com/facebookresearch/detr/blob/5e66b4cd15b2b182da347103dd16578d28b49d69/models/detr.py#L126),

```python
# TODO this should probably be a separate loss, not hacked in this one here
losses['class_error'] = 100 - accuracy(src_logits[idx], target_classes_o)[0]
```

where [`accuracy`](https://github.com/facebookresearch/detr/blob/5e66b4cd15b2b182da347103dd16578d28b49d69/util/misc.py#L432) is:

```python
def accuracy(output, target, topk=(1,)):
    """Computes the precision@k for the specified values of k"""
```
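
The two errors can be sketched in plain Python as follows. This is an illustration of what the excerpts above compute, not the DETR code itself: logits are nested lists instead of tensors, the helper names are hypothetical, and `class_error` assumes at least one matched prediction.

```python
def argmax(scores):
    """Index of the highest score."""
    return max(range(len(scores)), key=scores.__getitem__)


def cardinality_error(pred_logits, num_targets):
    """Mean absolute difference between predicted and true object counts.

    pred_logits: one list per image, holding one list of class scores per
                 query; the last class is "no-object".
    num_targets: number of ground-truth boxes for each image.
    """
    no_object = len(pred_logits[0][0]) - 1
    errors = []
    for queries, n_target in zip(pred_logits, num_targets):
        n_pred = sum(1 for scores in queries if argmax(scores) != no_object)
        errors.append(abs(n_pred - n_target))
    return sum(errors) / len(errors)


def class_error(matched_logits, target_classes):
    """100 minus the top-1 accuracy (in percent) over matched predictions."""
    correct = sum(
        1 for scores, target in zip(matched_logits, target_classes)
        if argmax(scores) == target
    )
    return 100 - 100 * correct / len(target_classes)
```

Both quantities are diagnostics: the cardinality error only counts predictions, and the class error only looks at predictions that were matched to a ground-truth box.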

## Results

You should obtain acceptable results with 10 epochs, which require a few minutes of fine-tuning.

Out of curiosity, I have over-finetuned the model for 300 epochs (close to 3 hours).

Here are:

- the last [checkpoint][checkpoint-300-epochs] (~ 500 MB),

- the [log file][log-300-epochs].

All of the validation results are shown in [`view_balloon_validation.ipynb`][view-validation-notebook].

[![Open In Colab][colab-badge]][view-validation-notebook]
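
To follow a metric across epochs, the log file can be parsed with a few lines of Python. The sketch below assumes that the log holds one JSON object per line and that each object has an `epoch` field next to metric fields such as `train_loss`; these key names are assumptions, so adjust them to whatever your log actually contains. `read_metric` is a hypothetical helper.

```python
import json


def read_metric(log_lines, key):
    """Return {epoch: value} for one metric from JSON-lines log records.

    Assumes every record that contains `key` also contains "epoch".
    """
    history = {}
    for line in log_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if key in record:
            history[record["epoch"]] = record[key]
    return history


# Example usage (file name and key are placeholders):
# with open("log.txt") as log_file:
#     train_loss = read_metric(log_file, "train_loss")
```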

## References

- Official repositories:
  - Facebook's [DETR](https://github.com/facebookresearch/detr) (and [the paper](https://arxiv.org/abs/2005.12872))
  - Facebook's [detectron2 wrapper for DETR](https://github.com/facebookresearch/detr/tree/master/d2); caveat: this wrapper only supports box detection
  - [DETR checkpoints](https://github.com/facebookresearch/detr#model-zoo): remove the classification head, then fine-tune
- My forks:
  - My [fork](https://github.com/woctezuma/detr/tree/finetune) of DETR to fine-tune on a dataset with a single class
  - My [fork](https://github.com/woctezuma/VIA2COCO/tree/fixes) of VIA2COCO to convert annotations from VIA format to COCO format
- Official notebooks:
  - An [official notebook][detr_attention-notebook] showcasing DETR
  - An [official notebook][pycocoDemo-notebook] showcasing the COCO API
  - An [official notebook][detectron2-notebook] showcasing the detectron2 wrapper for DETR
- Tutorials:
  - A [GitHub issue](https://github.com/facebookresearch/detr/issues/9) discussing the fine-tuning of DETR
  - A [GitHub Gist](https://gist.github.com/woctezuma/e9f8f9fe1737987351582e9441c46b5d) explaining how to fine-tune DETR
  - A [GitHub issue](https://github.com/facebookresearch/detr/issues/9#issuecomment-636391562) explaining how to load a fine-tuned DETR
- Datasets:
  - A [blog post](https://engineering.matterport.com/splash-of-color-instance-segmentation-with-mask-r-cnn-and-tensorflow-7c761e238b46) about another approach (Mask R-CNN) and the [`balloon`](https://github.com/matterport/Mask_RCNN/tree/master/samples/balloon) dataset
  - A [notebook][nucleus-notebook] about the [`nucleus`](https://github.com/matterport/Mask_RCNN/tree/master/samples/nucleus) dataset


[finetune_detr-notebook]:

[finetune_detectron2-notebook]:

[view-validation-notebook]:

[checkpoint-300-epochs]:

[log-300-epochs]:

[metrics-notebook]:

[colab-badge]:

[detr_attention-notebook]:

[pycocoDemo-notebook]:

[detectron2-notebook]:

[nucleus-notebook]: