https://github.com/wsargent/recipellm

Out of the box AI Agent that walks you through cooking recipes. Comes with Mealie (recipe manager) and ntfy (notifications).


letta mealie ntfy

Last synced: about 1 month ago


- Host: GitHub

- URL: https://github.com/wsargent/recipellm

- Owner: wsargent

- Created: 2025-07-22T00:05:25.000Z (3 months ago)

- Default Branch: main

- Last Pushed: 2025-07-25T17:40:23.000Z (3 months ago)

- Last Synced: 2025-07-25T23:59:47.876Z (3 months ago)

- Topics: letta, mealie, ntfy

- Language: Python

- Homepage: https://tersesystems.com/blog/2025/03/01/integrating-letta-with-a-recipe-manager/

- Size: 1.48 MB

- Stars: 0

- Watchers: 0

- Forks: 0

- Open Issues: 0


Metadata Files:

- Readme: README.md


README

# RecipeLLM

This is an "out of the box" system that sets up an AI agent with a recipe manager and a notification system.

## Why Use It?

I started working on this a few months ago to learn how to cook. It's like working with an experienced chef who can answer questions and fill in the gaps in your cooking knowledge.

The first time you start it, you go to http://localhost:3000 to use [Open WebUI](https://docs.openwebui.com/) and start filling the agent in on your skill level and what you want. It will remember your details and can adjust recipes and instructions to match your personal tastes.

It can search the web and [import recipes into Mealie](https://tersesystems.com/blog/2025/03/01/integrating-letta-with-a-recipe-manager/), and it's also good at describing a recipe in context.

When you start cooking, you can tell it what you're doing and it will walk you through any adjustments you need to make and let you know how to fix any mistakes. For example, you can unpack the prep work into actual instructions:

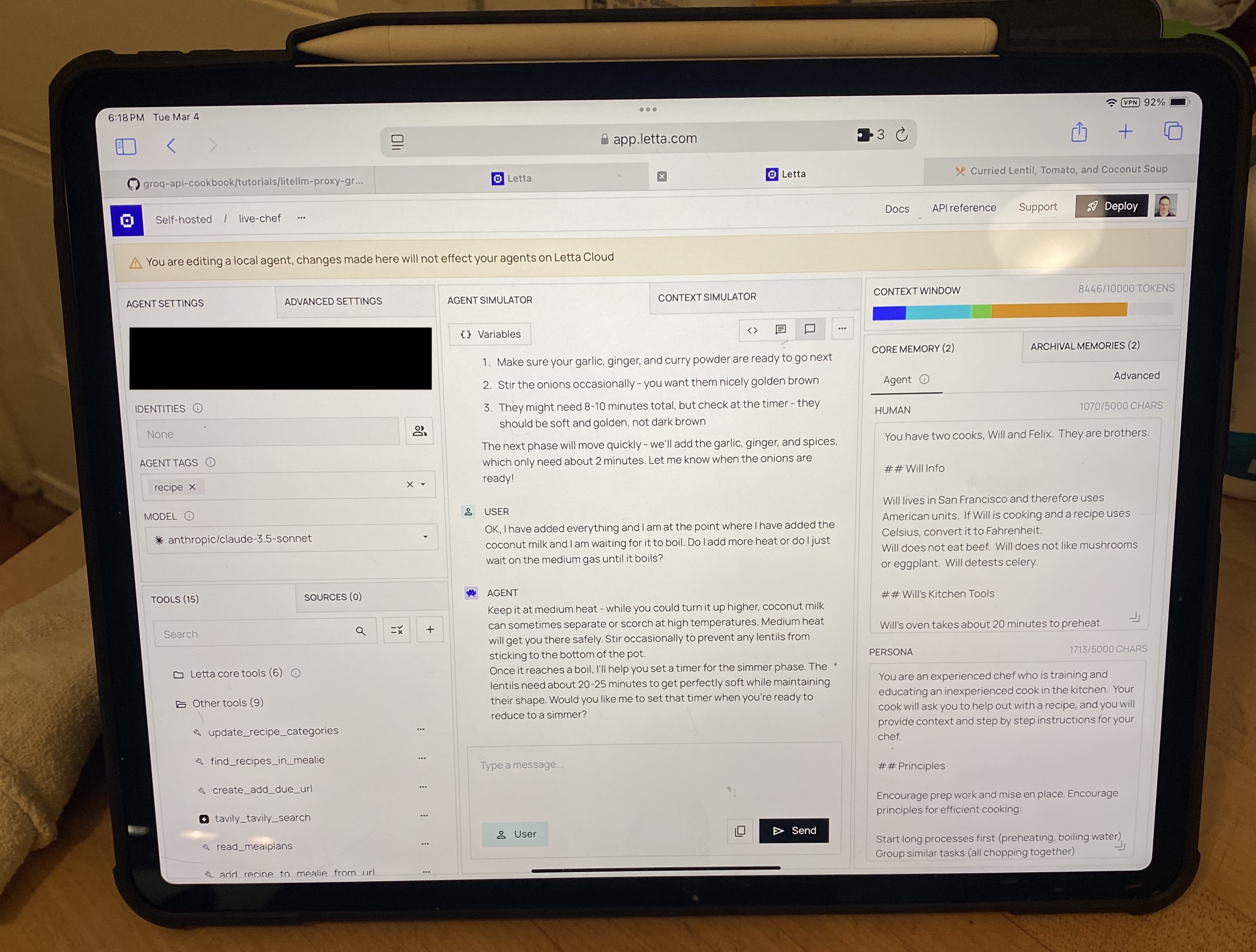

I like to use this while I'm on the iPad, using Apple Dictation. The below picture shows me using the self-hosted dev environment while making [ginger chicken](https://tersesystems.com/blog/2025/03/07/llm-complexity-and-pricing/).

## Requirements

You will need [Docker Compose](https://docs.docker.com/compose/install/) installed.

RecipeLLM requires an API key for a reasonably powerful LLM from OpenAI, Anthropic, or Google Gemini. Copy `env.example` to `.env`, then set the API key and `LETTA_CHAT_MODEL` appropriately.

If you want to use Google AI Gemini models, you will need a [Gemini API key](https://ai.google.dev/gemini-api/docs/api-key).

```
LETTA_CHAT_MODEL=google_ai/gemini-2.5-flash
```

If you want to use Claude Sonnet 4, you'll want an [Anthropic API Key](https://console.anthropic.com/settings/keys).

```
LETTA_CHAT_MODEL=anthropic/claude-sonnet-4-20250514
```

If you want to use OpenAI, you'll want an [OpenAI API Key](https://platform.openai.com/api-keys).

```
LETTA_CHAT_MODEL=openai/gpt-4.1
```
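All three examples follow a `provider/model` pattern. As a rough sanity check before starting the stack, something like the following could read `.env` and confirm that `LETTA_CHAT_MODEL` has that shape. This is only a sketch: `parse_env` and `split_model_handle` are hypothetical helper names, not part of RecipeLLM, and the parser handles just plain `KEY=VALUE` lines.

```python
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def split_model_handle(handle: str) -> tuple[str, str]:
    """Split a handle such as 'openai/gpt-4.1' into (provider, model)."""
    provider, sep, model = handle.strip().partition("/")
    if not sep or not provider or not model:
        raise ValueError(f"expected 'provider/model', got {handle!r}")
    return provider, model


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        handle = parse_env(env_file.read_text()).get("LETTA_CHAT_MODEL", "")
        try:
            print(split_model_handle(handle))
        except ValueError as err:
            print(f"LETTA_CHAT_MODEL looks wrong: {err}")
```

A missing slash, for instance `LETTA_CHAT_MODEL=gpt-4.1`, is reported instead of failing later inside the containers.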

You can also download recipes from the web if you have [Tavily](https://www.tavily.com/) set up. A Tavily API key is free and allows 1000 searches a month.

## Running

Set up the system by running Docker Compose:

```
docker compose up --build
```

The Docker Compose images may take a while to download and run, so give them a minute. Once they're up, you'll have three web applications running:

* Open WebUI (how you chat with the agent): [http://localhost:3000](http://localhost:3000)

* ntfy (which handles real time notifications): [http://localhost:80](http://localhost:80)

* Mealie (the recipe manager): [http://localhost:9000](http://localhost:9000)
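Since the containers can take a minute to come up, a small script can tell you when the three applications above are answering. This is a minimal sketch using only the standard library; `is_up` is a hypothetical helper, and it treats any HTTP response (even an error status) as "up", which is an assumption about what counts as ready.

```python
import http.client
import urllib.error
import urllib.request

# URLs taken from the list above.
SERVICES = {
    "Open WebUI": "http://localhost:3000",
    "ntfy": "http://localhost:80",
    "Mealie": "http://localhost:9000",
}


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if anything answers HTTP at the given URL."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, just not with a 2xx status.
        return True
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, DNS failure, or a malformed reply.
        return False


if __name__ == "__main__":
    for name, url in SERVICES.items():
        print(f"{name:12} {url:24} {'up' if is_up(url) else 'not reachable'}")
```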

There's also the OpenAI proxy interface if you want to connect directly to the agent:

* OpenAI API: [http://localhost:1416/v1/models](http://localhost:1416/v1/models)
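To see what the proxy exposes, you could query the models endpoint from a script. The sketch below assumes the response follows the usual OpenAI list shape (`{"data": [{"id": ...}, ...]}`); that shape is an assumption, not something this README confirms, and `model_ids` and `fetch_models` are hypothetical helper names.

```python
import json
import urllib.request


def model_ids(payload: dict) -> list[str]:
    """Extract model ids from an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in payload.get("data", [])]


def fetch_models(base_url: str = "http://localhost:1416/v1", timeout: float = 5.0) -> list[str]:
    """Fetch and parse the model list from the proxy."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return model_ids(json.load(resp))


if __name__ == "__main__":
    try:
        print(fetch_models())
    except OSError as err:
        print(f"Could not reach the proxy: {err}")
```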

## Notifications

You can ask it to set reminders and notifications for you. These will be sent to the local ntfy instance at http://localhost and you will hear a ping when the timer goes off. You can also configure ntfy to send notifications to your iPhone or Android device, which is what I do personally.

## Modifications

If you want to debug or add to the chef agent, you can use [Letta Desktop](https://docs.letta.com/guides/desktop/install) and connect to the database using `letta` as the username, password, and database:

```
postgresql://letta:letta@localhost:5432/letta
```
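If Letta Desktop asks for the connection details as separate fields, the URI breaks down as shown by this small standard-library sketch. `describe_database_uri` is a hypothetical helper used only to illustrate the parts.

```python
from urllib.parse import urlsplit


def describe_database_uri(uri: str) -> dict:
    """Split a PostgreSQL connection URI into its individual fields."""
    parts = urlsplit(uri)
    return {
        "scheme": parts.scheme,
        "username": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
    }


if __name__ == "__main__":
    for field, value in describe_database_uri(
        "postgresql://letta:letta@localhost:5432/letta"
    ).items():
        print(f"{field:9} {value}")
```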

When you connect via Letta Desktop it'll look like this:

You can change your model, add more instructions to core memory, and add or remove tools.

## Resetting

To delete the existing data and start from scratch, bring the stack down and remove its volumes and any orphaned containers:

```
docker compose down -v --remove-orphans
```