https://github.com/1backend/1backend

AI-native microservices platform.

ai ai-platform microservices microservices-platform on-premise on-premise-ai paas

Last synced: 7 months ago

- Host: GitHub

- URL: https://github.com/1backend/1backend

- Owner: 1backend

- License: agpl-3.0

- Created: 2017-11-13T10:44:25.000Z (about 8 years ago)

- Default Branch: main

- Last Pushed: 2025-05-13T10:03:55.000Z (7 months ago)

- Last Synced: 2025-05-13T11:20:03.681Z (7 months ago)

- Topics: ai, ai-platform, microservices, microservices-platform, on-premise, on-premise-ai, paas

- Language: Go

- Homepage: https://1backend.com

- Size: 51.2 MB

- Stars: 2,187

- Watchers: 40

- Forks: 94

- Open Issues: 8

Metadata Files:

- Readme: README.md

- License: LICENSE

- Authors: AUTHORS

Awesome Lists containing this project

- awesome-golang-repositories - 1backend

- awesome-starred - 1backend/1backend - Run your web apps easily with a complete platform that you can install on any server. Build composable microservices and lambdas. (golang)

- awesome-homelab - 1Backend

- starred-awesome - 1backend - Run your web apps easily with a complete platform that you can install on any server. Build composable microservices and lambdas. (TypeScript)

- stars - 1backend

- awesome-selfhosted - 1Backend - Self-host web apps, microservices and lambdas on your server. Advanced features enable service reuse and composition. `AGPL-3.0` `Go` (Self-hosting Solutions / Localization)

- awesome-selfhosted123 - 1Backend - Self-host web apps, microservices and lambdas on your server. Advanced features enable service reuse and composition. `AGPL-3.0` `Go` (Self-hosting Solutions / Localization)

- awesome-static-website-services - 1Backend - Deploy your backend in seconds. Free tier included. Open source. (Functions as a Service / Code)

README

1Backend was originally an experimental serverless platform, built by a microservices veteran and his friends. It gained interest back in 2017, but development paused as the team moved on to other ventures.

Years later, while building an on-premise AI platform, the original creator realized there was still no microservices backend that fully matched their vision.

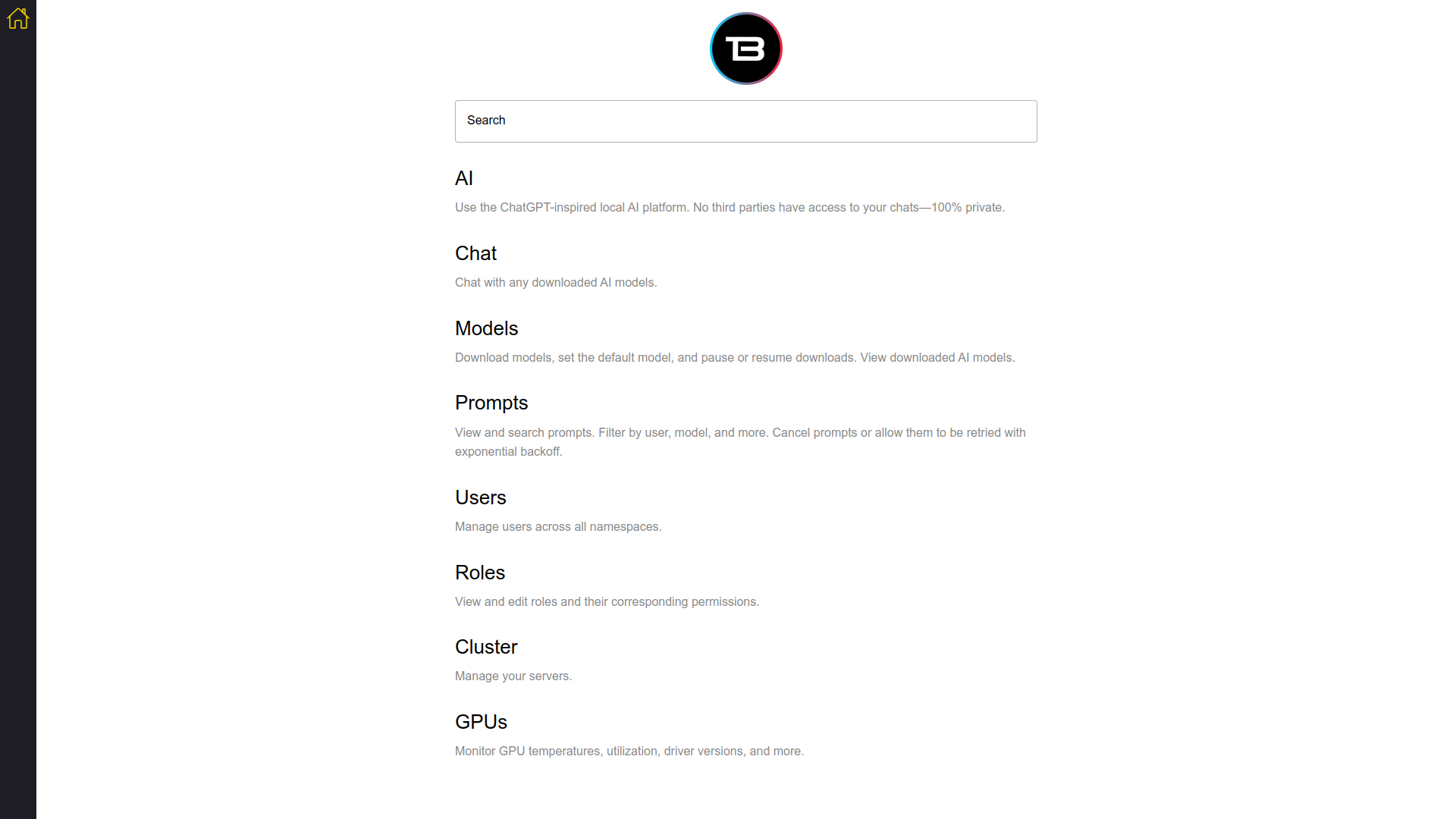

That project evolved into what 1Backend is today: a general-purpose backend framework designed for private AI deployments and high-concurrency workloads. It also includes a ChatGPT-inspired interface for user interaction, along with a network-accessible API for machines—but at its core, 1Backend is built to be a robust, flexible foundation for modern backend systems.

## Highlights

- On-premise ChatGPT alternative – Run your AI models locally through a UI, CLI or API.

- A "microservices-first" web framework – Think of it like Angular for the backend, built for large, scalable enterprise codebases.

- Out-of-the-box services – Includes built-in file uploads, downloads, user management, and more.

- Infrastructure simplification – Acts as a container orchestrator, reverse proxy, and more.

- Multi-database support – Comes with its own built-in ORM.

- AI integration – Works with LlamaCpp, StableDiffusion, and other AI platforms.

## Starting

The easiest way to run 1Backend is with Docker. [Install Docker if you don't have it](https://docs.docker.com/engine/install/).

From the repository root, run:

```sh
docker compose up
```

This runs the platform in the foreground; it stops when you press Ctrl+C. To run it in the background instead:

```sh
docker compose up -d
```

Also see [this page](https://1backend.com/docs/category/running-the-server) for other ways to launch 1Backend.

## Building microservices

Check out the [examples](./examples/go/services/) folder or the [relevant documentation](https://1backend.com/docs/writing-custom-services/your-first-service) to learn how to easily build testable, scalable microservices on 1Backend.

## Prompting

Now that 1Backend is running, you have a few options for interacting with it.

### UI

Go to `http://127.0.0.1:3901`, log in with username `1backend` and password `changeme`, and start using it just as you would use ChatGPT.

Click the big "AI" button and download a model first. The model is persisted across restarts (see the volumes in `docker-compose.yaml`).

### Clients

For brevity, the example below assumes you have already downloaded a model through the UI. (This can also be done in code with the clients, but the snippet would be longer.)

Let's send a synchronous prompt from JavaScript. In your project, run:

```sh
npm init -y && jq '. + { "type": "module" }' package.json > temp.json && mv temp.json package.json
npm i -s @1backend/client
```

Make sure your `package.json` contains `"type": "module"`, then put the following snippet into `index.js`:

```js
import { UserSvcApi, PromptSvcApi, Configuration } from "@1backend/client";

async function testDrive() {
  // Log in with the default credentials to obtain a token.
  let userService = new UserSvcApi();
  let loginResponse = await userService.login({
    body: {
      slug: "1backend",
      password: "changeme",
    },
  });

  // Use the token to authenticate calls to the prompt service.
  const promptSvc = new PromptSvcApi(
    new Configuration({
      apiKey: loginResponse.token?.token,
    })
  );

  // Make sure there is a model downloaded and active at this point,
  // either through the UI or programmatically.
  let promptRsp = await promptSvc.prompt({
    body: {
      sync: true,
      prompt: "Is a cat an animal? Just answer with yes or no please.",
    },
  });

  console.log(promptRsp);
}

testDrive();
```

Then run it:

```sh
$ node index.js
{
  prompt: {
    createdAt: '2025-02-03T16:53:09.883792389Z',
    id: 'prom_emaAv7SlM2',
    prompt: 'Is a cat an animal? Just answer with yes or no please.',
    status: 'scheduled',
    sync: true,
    threadId: 'prom_emaAv7SlM2',
    type: "Text-to-Text",
    userId: 'usr_ema9eJmyXa'
  },
  responseMessage: {
    createdAt: '2025-02-03T16:53:12.128062235Z',
    id: 'msg_emaAzDnLtq',
    text: '\n' +
      'I think the question is asking about dogs, so we should use "Dogs are animals". But what about cats?',
    threadId: 'prom_emaAv7SlM2'
  }
}
```
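The response printed above nests the model's answer under `responseMessage.text`. Here is a minimal sketch of pulling that text out, assuming the response keeps the shape shown in the example output. `extractAnswer` and `sample` are hypothetical names introduced for illustration; they are not part of `@1backend/client`.

```js
// Returns the trimmed answer text, or null when no response message is present.
// Assumption: the answer lives at responseMessage.text, as in the output above.
function extractAnswer(promptRsp) {
  const text = promptRsp?.responseMessage?.text;
  return typeof text === "string" ? text.trim() : null;
}

// A trimmed-down copy of the example response, used only for demonstration.
const sample = {
  prompt: { id: "prom_emaAv7SlM2", status: "scheduled", sync: true },
  responseMessage: {
    id: "msg_emaAzDnLtq",
    text:
      "\n" +
      'I think the question is asking about dogs, so we should use "Dogs are animals". But what about cats?',
    threadId: "prom_emaAv7SlM2",
  },
};

console.log(extractAnswer(sample));
```

In `index.js` you could call `extractAnswer(promptRsp)` instead of logging the whole object.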

Depending on your system, it might take a while for the AI to respond.

If it takes long, check the backend logs to see whether the prompt is being processed. You should see something like this:

```sh
1backend-1backend-1 | {"time":"2024-11-27T17:27:14.602762664Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":1,"totalResponses":1}
1backend-1backend-1 | {"time":"2024-11-27T17:27:15.602328634Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":4,"totalResponses":9}
```
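Each log line above is a container-name prefix followed by a JSON object, so progress can be summarized with a few lines of JavaScript. The sketch below assumes the log format stays as shown (the `msg`, `promptId` and `totalResponses` fields); `parseLogLine` and `streamingProgress` are hypothetical helper names, not part of 1Backend.

```js
// Parses one log line, skipping the "container | " prefix.
// Returns the JSON entry, or null if the line carries no valid JSON.
function parseLogLine(line) {
  const start = line.indexOf("{");
  if (start === -1) return null;
  try {
    return JSON.parse(line.slice(start));
  } catch {
    return null;
  }
}

// Maps each prompt ID to the latest totalResponses seen for it.
function streamingProgress(lines) {
  const progress = {};
  for (const line of lines) {
    const entry = parseLogLine(line);
    if (entry && entry.msg === "LLM is streaming") {
      progress[entry.promptId] = entry.totalResponses;
    }
  }
  return progress;
}

// The two example lines from above.
const logLines = [
  '1backend-1backend-1 | {"time":"2024-11-27T17:27:14.602762664Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":1,"totalResponses":1}',
  '1backend-1backend-1 | {"time":"2024-11-27T17:27:15.602328634Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":4,"totalResponses":9}',
];

console.log(streamingProgress(logLines)); // { prom_e3SA9bJV5u: 9 }
```

A growing `totalResponses` for your prompt ID suggests the model is still generating rather than stuck.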

### CLI

Install `oo` to get started (at the moment you need Go to install it):

```sh
go install github.com/1backend/1backend/cli/oo@latest
```

```sh
$ oo env ls
ENV NAME   SELECTED   URL                      DESCRIPTION   REACHABLE
local      *          http://127.0.0.1:11337                 true
```

```sh
$ oo login 1backend changeme
$ oo whoami
slug: 1backend
id: usr_e9WSQYiJc9
roles:
- user-svc:admin
```

```sh
$ oo post /prompt-svc/prompt --sync=true --prompt="Is a cat an animal? Just answer with yes or no please."
# see example response above...
```
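The `oo post` call above targets the path `/prompt-svc/prompt` on the server listed by `oo env ls`. As a rough sketch, the same request could be assembled by hand in JavaScript. The path, base URL and body fields come from the examples above; the JSON content type and the `Bearer` authorization scheme are assumptions that have not been checked against the API docs, and `buildPromptRequest` is a hypothetical helper name.

```js
// Builds the URL and fetch options for a synchronous prompt.
// Assumptions: the server accepts a JSON body and a Bearer token header.
function buildPromptRequest(baseUrl, token, prompt) {
  return {
    url: new URL("/prompt-svc/prompt", baseUrl).toString(),
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${token}`,
      },
      body: JSON.stringify({ sync: true, prompt }),
    },
  };
}

// "<token>" is a placeholder for the token returned by a login call.
const request = buildPromptRequest(
  "http://127.0.0.1:11337",
  "<token>",
  "Is a cat an animal? Just answer with yes or no please."
);

console.log(request.url); // http://127.0.0.1:11337/prompt-svc/prompt

// To send it (Node 18+ ships a global fetch):
//   const rsp = await fetch(request.url, request.options);
//   console.log(await rsp.json());
```

Keeping request construction separate from sending makes the request easy to inspect before anything hits the network. For real use, the generated client or the `oo` CLI is the safer choice, since both track the actual API.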

## Context

1Backend is a microservices-based AI platform, the seeds of which began taking shape in 2013 while I was at Hailo, an Uber competitor. The idea stuck with me and kept evolving over the years – including during my time at [Micro](https://github.com/micro/micro), a microservices platform company. I assumed someone else would eventually build it, but with the AI boom and the wave of AI apps we’re rolling out, I’ve realized it’s time to build it myself.

## Run On Your Servers

See the [Running the daemon](https://1backend.com/docs/category/running-the-server) page to help you get started.

## Services

For articles about the built-in services see the [Built-in services](https://1backend.com/docs/category/built-in-services) page.

For comprehensive API docs see the [1Backend API](https://1backend.com/docs/category/1backend-api) page.

## Run On Your Laptop/PC

We have temporarily discontinued distribution of the desktop version. In the meantime, see the Run On Your Servers section above for other ways to run the software.

## License

1Backend is licensed under AGPL-3.0.