https://github.com/GAP-LAB-CUHK-SZ/gaustudio

A Modular Framework for 3D Gaussian Splatting and Beyond

3d-reconstruction 3dgs gaussian-splatting multi-view-reconstruction nerf pytorch surface-reconstruction

Last synced: 5 months ago

- Host: GitHub

- URL: https://github.com/GAP-LAB-CUHK-SZ/gaustudio

- Owner: GAP-LAB-CUHK-SZ

- License: mit

- Created: 2023-12-16T16:28:54.000Z (almost 2 years ago)

- Default Branch: master

- Last Pushed: 2024-11-19T01:11:02.000Z (11 months ago)

- Last Synced: 2024-11-19T02:24:16.151Z (11 months ago)

- Topics: 3d-reconstruction, 3dgs, gaussian-splatting, multi-view-reconstruction, nerf, pytorch, surface-reconstruction

- Language: Jupyter Notebook

- Homepage:

- Size: 13.3 MB

- Stars: 1,097

- Watchers: 42

- Forks: 57

- Open Issues: 23

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome-3D-gaussian-splatting - GauStudio - Unified framework with different paper implementations (Open Source Implementations / Framework)

README

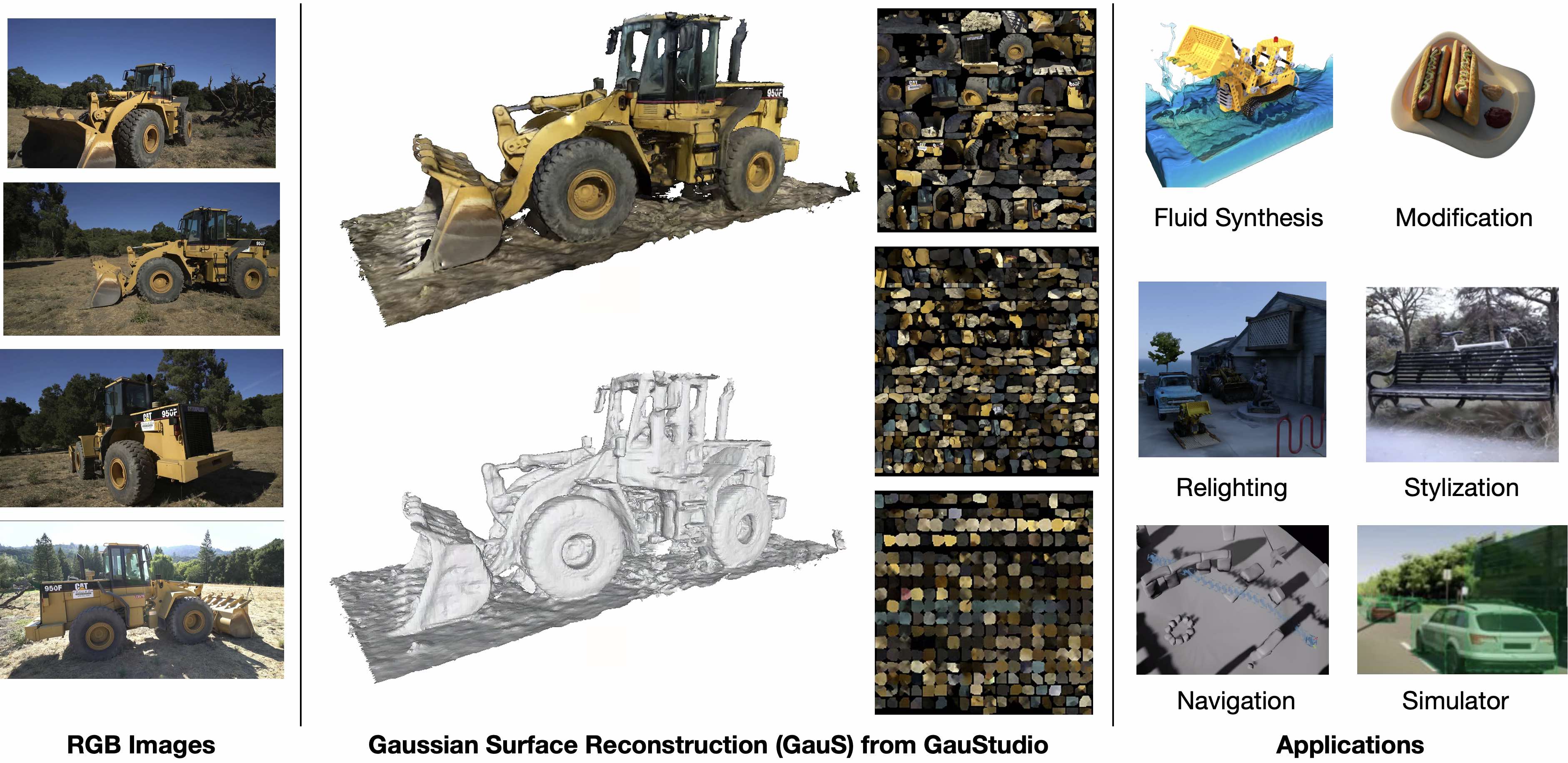

GauStudio is a modular framework that supports and accelerates research and development in the rapidly advancing field of 3D Gaussian Splatting (3DGS) and its diverse applications.

### [Paper](https://drive.google.com/file/d/1mizzZSXn-YToww7kW3OV0lUbfME9Mobg/view?usp=sharing) | [Document (Coming Soon)]()

# Dataset

## [Download from Baidu Netdisk](https://pan.baidu.com/s/157mqM6C5Wy30DY3aip2NeA?pwd=ig3v#list/path=%2F) | [Download from Hugging Face (Coming Soon)]()

To comprehensively evaluate the robustness of 3DGS methods under diverse lighting conditions, materials, and geometric structures, we have curated the following datasets:

## 1. Collection of 5 Synthetic Datasets in COLMAP Format

We have integrated 5 synthetic datasets (*nerf_synthetic, refnerf_synthetic, nero_synthetic, nsvf_synthetic, and BlendedMVS*), totaling 143 complex real-world scenes. To ensure compatibility, we used COLMAP to run feature matching and triangulation from the original poses, converting all data to a uniform COLMAP format.
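The conversion scripts are not part of this README, so the following is only a rough sketch of how triangulation from known poses is commonly done with the COLMAP command line. The function name, the directory arguments, and the choice of exhaustive matching are assumptions made for illustration; only the three COLMAP subcommands and their flags come from COLMAP itself.

```python
from pathlib import Path


def colmap_known_pose_commands(image_dir, posed_model_dir, work_dir):
    """Return the three COLMAP calls for triangulating with fixed, known poses.

    posed_model_dir must already hold cameras/images/points3D files in COLMAP
    format, written from the dataset's original poses (not shown here).
    """
    work_dir = Path(work_dir)
    database = str(work_dir / "database.db")
    return [
        ["colmap", "feature_extractor",
         "--database_path", database, "--image_path", str(image_dir)],
        # Exhaustive matching is an assumption; other matchers would also work.
        ["colmap", "exhaustive_matcher", "--database_path", database],
        ["colmap", "point_triangulator",
         "--database_path", database, "--image_path", str(image_dir),
         "--input_path", str(posed_model_dir),
         "--output_path", str(work_dir / "sparse" / "0")],
    ]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` in order. The output directory has to exist before the last step, and the image IDs in the posed model have to match those in the database.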

## 2. Real-world Scenes with High-quality Normal Annotations and LoFTR Initialization

* **COLMAP-format [MuSHRoom](https://github.com/TUTvision/MuSHRoom/tree/main)**: To address the difficulty of acquiring indoor scene data such as ScanNet++, we have processed and generated COLMAP-compatible data based on the publicly available MuSHRoom dataset. Please remember to use this data under the original license.

* **More Complete Tanks and Temples**: To address the lack of ground truth poses in the Tanks and Temples test set, we have converted the pose information provided by MVSNet to generate COLMAP-format data. This supports algorithm evaluation on a wider range of indoor and outdoor scenes. The leaderboard submission script will be released in a subsequent version.

* **Normal Annotations and LoFTR Initialization**: To tackle modeling challenges such as sparse viewpoints and specular highlight regions, we have annotated high-quality, temporally consistent normal data based on our private model, providing new avenues for indoor and unbounded scene 3DGS reconstruction. The annotation code will be released soon. Additionally, we provide LoFTR-based initial point clouds to support better initialization.

# Installation

The following steps have been tested on Ubuntu 20.04. If you encounter any issues installing on Windows, please let us know and we will try to resolve them.

## Prerequisites

* NVIDIA graphics card with at least 6GB VRAM

* CUDA installed

* Python >= 3.8

## Optional Step: Create a Conda Environment

It is recommended to create a conda environment before proceeding with the installation. You can create a conda environment using the following commands:

```sh
# Create a new conda environment
conda create -n gaustudio python=3.8
# Activate the conda environment
conda activate gaustudio
```

## Step 1: Install PyTorch

You will need to install PyTorch. The software has been tested with torch 1.12.1+cu113 and torch 2.0.1+cu118, but other versions should also work fine. You can install PyTorch with conda or pip, for example:

```sh
# Example command to install PyTorch 1.12.1+cu113
conda install pytorch=1.12.1 torchvision=0.13.1 cudatoolkit=11.3 -c pytorch
# Example command to install PyTorch 2.0.1+cu118
pip install torch==2.0.1 torchvision==0.15.2 --index-url https://download.pytorch.org/whl/cu118
```
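Before continuing, it can help to confirm that the environment matches the prerequisites listed above. The snippet below is a minimal sketch, not part of GauStudio: the function names are hypothetical, and "6GB" is interpreted as 6 × 10⁹ bytes so that cards reporting slightly less than 6 GiB still pass.

```python
import sys

MIN_PYTHON = (3, 8)            # from the Prerequisites list
MIN_VRAM_BYTES = 6 * 1000**3   # "at least 6GB VRAM", read as decimal GB (assumption)


def check_prerequisites(python_version, cuda_available, vram_bytes):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append("Python >= 3.8 is required")
    if not cuda_available:
        problems.append("PyTorch cannot see a CUDA device")
    elif vram_bytes < MIN_VRAM_BYTES:
        problems.append("GPU has less than 6 GB of VRAM")
    return problems


def probe_environment():
    """Collect the three inputs above from the interpreter and PyTorch, if installed."""
    try:
        import torch
    except ImportError:
        return sys.version_info, False, 0
    if not torch.cuda.is_available():
        return sys.version_info, False, 0
    # Only the first GPU is inspected.
    vram = torch.cuda.get_device_properties(0).total_memory
    return sys.version_info, True, vram
```

Calling `check_prerequisites(*probe_environment())` returns an empty list when everything looks fine. Note that a missing PyTorch installation is reported the same way as a missing CUDA device.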

## Step 2: Install Dependencies

Install the necessary dependencies by running the following command:

```sh
pip install -r requirements.txt
```

## Step 3: Install Custom Rasterizer and GauStudio

```sh
cd submodules/gaustudio-diff-gaussian-rasterization
python setup.py install
cd ../../
python setup.py develop
```

## Optional Step: Install PyTorch3D

If you require mesh rendering and further mesh refinement, install PyTorch3D by following the [official installation instructions](https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md).

# QuickStart

## Mesh Extraction for 3DGS

### Prepare the input data

We currently support the output directories generated by most Gaussian Splatting methods, such as [3DGS](https://github.com/graphdeco-inria/gaussian-splatting), [mip-splatting](https://github.com/autonomousvision/mip-splatting), and [GaussianPro](https://github.com/kcheng1021/GaussianPro), provided they have the following minimal structure:

```
- output_dir
    - cameras.json (necessary)
    - point_cloud
        - iteration_xxxx
            - point_cloud.ply (necessary)
```
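To catch a malformed input directory early, the layout above can be checked with a few lines of standard-library Python. This is an illustrative sketch rather than GauStudio code: the function name is hypothetical, and it assumes that `xxxx` is an integer iteration count and that the highest iteration is the one of interest.

```python
import re
from pathlib import Path


def find_latest_point_cloud(output_dir):
    """Return the point_cloud.ply of the highest-numbered iteration.

    Raises FileNotFoundError if a file marked "necessary" above is missing.
    """
    root = Path(output_dir)
    if not (root / "cameras.json").is_file():
        raise FileNotFoundError(f"missing {root / 'cameras.json'}")

    candidates = []
    for ply in root.glob("point_cloud/iteration_*/point_cloud.ply"):
        match = re.fullmatch(r"iteration_(\d+)", ply.parent.name)
        if match:
            candidates.append((int(match.group(1)), ply))
    if not candidates:
        raise FileNotFoundError(
            f"no point_cloud/iteration_*/point_cloud.ply under {root}"
        )
    # Pick by iteration number only, never by comparing paths.
    return max(candidates, key=lambda item: item[0])[1]
```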

We are preparing some [demo data (coming soon)]() for quick-start testing.

### Running the Mesh Extraction

To extract a mesh from the input data, run the following command:

```sh
gs-extract-mesh -m ./data/1750250955326095360_data/result -o ./output/1750250955326095360_data
```

Replace `./data/1750250955326095360_data/result` with the path to your input directory (the `output_dir` described above), and replace `./output/1750250955326095360_data` with the desired path for the output mesh.
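When several scenes need to be processed, the same command can be driven from a short script. The sketch below assumes a hypothetical layout of `<data_root>/<scene>/result`, mirroring the example paths; it uses only the `-m` and `-o` options shown above, and the function names are illustrative.

```python
import subprocess
from pathlib import Path


def build_extract_command(model_dir, mesh_dir):
    """Build the gs-extract-mesh call shown above for one scene."""
    return ["gs-extract-mesh", "-m", str(model_dir), "-o", str(mesh_dir)]


def extract_all(data_root, output_root, dry_run=False):
    """Run mesh extraction for every scene directory under data_root.

    Assumes each scene lives at <data_root>/<scene>/result (hypothetical layout).
    Returns the list of commands that were (or would be) executed.
    """
    commands = []
    for scene in sorted(Path(data_root).iterdir()):
        result_dir = scene / "result"
        if not result_dir.is_dir():
            continue  # skip anything that does not look like a scene
        cmd = build_extract_command(result_dir, Path(output_root) / scene.name)
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)
    return commands
```

Passing `dry_run=True` returns the commands without running them, which is a cheap way to check the paths first.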

### Binding Texture to the Mesh

The output data is organized in the same format as [mvs-texturing](https://github.com/nmoehrle/mvs-texturing/tree/master). Follow these steps to add texture to the mesh:

* Compile the mvs-texturing repository on your system.

* Add the `build/bin` directory to your PATH environment variable.

* Navigate to the output directory containing the mesh.

* Run the following command:

```sh
texrecon ./images ./fused_mesh.ply ./textured_mesh --outlier_removal=gauss_clamping --data_term=area --no_intermediate_results
```
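The steps above can also be wrapped in a small helper that fails early when `texrecon` is not on the PATH or when the expected inputs are missing. This is a sketch with hypothetical function names; the arguments are exactly those of the command above, resolved against the mesh output directory instead of the current working directory.

```python
import shutil
import subprocess
from pathlib import Path


def build_texrecon_command(mesh_dir, executable="texrecon"):
    """Build the texrecon call shown above, with paths resolved against mesh_dir."""
    mesh_dir = Path(mesh_dir)
    return [
        executable,
        str(mesh_dir / "images"),
        str(mesh_dir / "fused_mesh.ply"),
        str(mesh_dir / "textured_mesh"),
        "--outlier_removal=gauss_clamping",
        "--data_term=area",
        "--no_intermediate_results",
    ]


def texture_mesh(mesh_dir):
    """Check PATH and the inputs first, then run texrecon."""
    mesh_dir = Path(mesh_dir)
    if shutil.which("texrecon") is None:
        raise RuntimeError("texrecon not found; add mvs-texturing's build/bin to PATH")
    for required in (mesh_dir / "images", mesh_dir / "fused_mesh.ply"):
        if not required.exists():
            raise FileNotFoundError(required)
    subprocess.run(build_texrecon_command(mesh_dir), check=True)
```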

# Plan of Release

GauStudio will support more 3DGS-based methods in the near future. If you are interested in GauStudio and want to improve it, you are welcome to submit a PR!

- [x] Release mesh extraction and rendering toolkit

- [x] Release common NeRF and NeuS dataset loader and preprocessing code

- [ ] Release Semi-Dense, MVSplat-based, and DepthAnything-based Gaussians Initialization

- [ ] Release full pipelines for training

- [ ] Release Gaussian Sky Modeling and Sky Mask Generation Scripts

- [ ] Release VastGaussian Reimplementation

- [ ] Release Mip-Splatting, Scaffold-GS, and Triplane-GS training

- [ ] Release 'gs-viewer' for online visualization and 'gs-compress' for 3DGS postprocessing

- [ ] Release SparseGS and FSGS training

- [ ] Release SuGaR and GaussianPro training

# BibTeX

If you found this library useful for your research, please consider citing:

```
@article{ye2024gaustudio,
  title={GauStudio: A Modular Framework for 3D Gaussian Splatting and Beyond},
  author={Ye, Chongjie and Nie, Yinyu and Chang, Jiahao and Chen, Yuantao and Zhi, Yihao and Han, Xiaoguang},
  journal={arXiv preprint arXiv:2403.19632},
  year={2024}
}
```

# License

The code is released under the MIT License, except for the rasterizer. We also welcome commercial cooperation to advance the applications of 3DGS and address unresolved issues. If you are interested, please contact Chongjie at chongjieye@link.cuhk.edu.cn.