https://github.com/VainF/Awesome-Anything

General AI methods for Anything: AnyObject, AnyGeneration, AnyModel, AnyTask, AnyX

List: Awesome-Anything

- Host: GitHub

- URL: https://github.com/VainF/Awesome-Anything

- Owner: VainF

- Created: 2023-04-10T09:53:23.000Z (about 2 years ago)

- Default Branch: main

- Last Pushed: 2023-11-15T08:34:36.000Z (over 1 year ago)

- Last Synced: 2025-04-10T05:05:18.415Z (2 months ago)

- Topics: anything, anything-ai, awesome, awesome-segment-anything, general-ai, segment-anything

- Size: 204 KB

- Stars: 1,772

- Watchers: 52

- Forks: 98

- Open Issues: 3

Metadata Files:

- Readme: README.md

Awesome Lists containing this project

- Awesome-Segment-Anything - Awesome Anything (Project & Toolbox)

- Awesome-Segment-Anything - VainF/Awesome-Anything

- ultimate-awesome - Awesome-Anything - General AI methods for Anything: AnyObject, AnyGeneration, AnyModel, AnyTask, AnyX. (Other Lists / Julia Lists)

# Awesome-Anything

A curated list of **general AI methods for Anything**: AnyObject, AnyGeneration, AnyModel, AnyTask, etc.

[Contributions](https://github.com/VainF/Awesome-Anything/pulls) are welcome!

- [Awesome-Anything](#awesome-anything)
  - [AnyObject](#anyobject) - Segmentation, Detection, Classification, Medical Image, OCR, Pose, etc.
  - [AnyGeneration](#anygeneration) - Text-to-Image Generation, Editing, Inpainting, Style Transfer, Video Frame Interpolation, etc.
  - [Any3D](#any3d) - 3D Generation, Segmentation, etc.
  - [AnyModel](#anymodel) - Any Pruning, Any Quantization, Model Reuse.
  - [AnyTask](#anytask) - LLM Controller + ModelZoo, General Decoding, Multi-Task Learning.
  - [AnyX](#anyx) - Other Topics: Captioning, etc.
- [Paper List](#paper-list-for-anything-ai)

## AnyObject

| Title & Authors | Intro | Useful Links |

|:----| :----: | :---:|

| [](https://github.com/facebookresearch/segment-anything)

[**Segment Anything**](https://arxiv.org/abs/2304.02643)

*Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick*

> Meta Research

> Preprint'23

[[**Segment Anything (Project)**](https://github.com/facebookresearch/segment-anything)] |  | [[Github](https://github.com/facebookresearch/segment-anything)]

[[Page](https://segment-anything.com/)]

[[Demo](https://segment-anything.com/demo)] |

| [](https://github.com/facebookresearch/ov-seg)

[**OVSeg: Open-Vocabulary Semantic Segmentation with Mask-adapted CLIP**](https://arxiv.org/abs/2210.04150)

*Feng Liang, Bichen Wu, Xiaoliang Dai, Kunpeng Li, Yinan Zhao, Hang Zhang, Peizhao Zhang, Peter Vajda, Diana Marculescu*

> Meta Research

> Preprint'23

[[**OVSeg (Project)**](https://github.com/facebookresearch/ov-seg)] |  | [[Github](https://github.com/facebookresearch/ov-seg)]

[[Page](https://jeff-liangf.github.io/projects/ovseg/)] |

| [](https://github.com/ronghanghu/seg_every_thing)

[**Learning to Segment Every Thing**](https://openaccess.thecvf.com/content_cvpr_2018/papers/Hu_Learning_to_Segment_CVPR_2018_paper.pdf)

*Ronghang Hu, Piotr Dollar, Kaiming He, Trevor Darrell, Ross Girshick*

> UC Berkeley, FAIR

> CVPR'18

[[**seg_every_thing (Project)**](https://github.com/ronghanghu/seg_every_thing)] |  | [[Github](https://github.com/ronghanghu/seg_every_thing)]

[[Page](https://github.com/ronghanghu/seg_every_thing)] |

| [](https://github.com/IDEA-Research/Grounded-Segment-Anything)

[**Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection**](https://arxiv.org/abs/2303.05499)

*Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang*

> IDEA-Research

> Preprint'23

[[**Grounded-SAM**](https://github.com/IDEA-Research/Grounded-Segment-Anything), [**GroundingDINO (Project)**](https://github.com/IDEA-Research/GroundingDINO)] |  | [[Github](https://github.com/IDEA-Research/Grounded-Segment-Anything)]

[[Demo](https://colab.research.google.com/github/roboflow-ai/notebooks/blob/main/notebooks/zero-shot-object-detection-with-grounding-dino.ipynb)] |

| [](https://github.com/baaivision/Painter)

[**SegGPT: Segmenting Everything In Context**](https://arxiv.org/abs/2304.03284)

*Xinlong Wang, Xiaosong Zhang, Yue Cao, Wen Wang, Chunhua Shen, Tiejun Huang*

> BAAI-Vision

> Preprint'23

[[**SegGPT (Project)**](https://github.com/baaivision/Painter)] |  | [[Github](https://github.com/baaivision/Painter)] |

| [**V3Det: Vast Vocabulary Visual Detection Dataset**](https://arxiv.org/abs/2304.03752)

*Jiaqi Wang, Pan Zhang, Tao Chu, Yuhang Cao, Yujie Zhou, Tong Wu, Bin Wang, Conghui He, Dahua Lin*

> Shanghai AI Laboratory, CUHK

> Preprint'23 |  | -- |

| [](https://github.com/kadirnar/segment-anything-video)

[**segment-anything-video (Project)**](https://github.com/kadirnar/segment-anything-video)

Kadir Nar |  | [[Github](https://github.com/kadirnar/segment-anything-video)] |

| [](https://github.com/achalddave/segment-any-moving)

[**Towards Segmenting Anything That Moves**](https://arxiv.org/abs/1902.03715)

*Achal Dave, Pavel Tokmakov, Deva Ramanan*

> ICCV'19 Workshop

[[**segment-any-moving (Project)**](https://github.com/achalddave/segment-any-moving)] | [[Video](http://www.achaldave.com/projects/anything-that-moves/videos/ZXN6A-tracked-with-objectness-trimmed.mp4)] [[Video](http://www.achaldave.com/projects/anything-that-moves/videos/c95cd17749.mp4)] | [[Github](https://github.com/achalddave/segment-any-moving)] |

| [](https://github.com/fudan-zvg/Semantic-Segment-Anything)

[**Semantic Segment Anything**](https://github.com/fudan-zvg/Semantic-Segment-Anything)

*Jiaqi Chen, Zeyu Yang, Li Zhang*

[[**Semantic-Segment-Anything (Project)**](https://github.com/fudan-zvg/Semantic-Segment-Anything)] |  | [[Github](https://github.com/fudan-zvg/Semantic-Segment-Anything)] |

| [](https://github.com/Cheems-Seminar/segment-anything-and-name-it)

[Grounded Segment Anything: From Objects to **Parts** (Project)](https://github.com/Cheems-Seminar/segment-anything-and-name-it)

*Peize Sun* and *Shoufa Chen* |  | [[Github](https://github.com/Cheems-Seminar/segment-anything-and-name-it)] |

| [](https://github.com/caoyunkang/GroundedSAM-zero-shot-anomaly-detection)

[**GroundedSAM-zero-shot-anomaly-detection (Project)**](https://github.com/caoyunkang/GroundedSAM-zero-shot-anomaly-detection)

*Yunkang Cao* |  | [[Github](https://github.com/caoyunkang/GroundedSAM-zero-shot-anomaly-detection)] |

| [](https://github.com/anuragxel/salt)

[**Segment Anything Labelling Tool (SALT) (Project)**](https://github.com/anuragxel/salt)

*Anurag Ghosh* |  | [[Github](https://github.com/anuragxel/salt)] |

| [](https://github.com/RockeyCoss/Prompt-Segment-Anything)

[**Prompt-Segment-Anything (Project)**](https://github.com/RockeyCoss/Prompt-Segment-Anything)

*Rockey* |  | [[Github](https://github.com/RockeyCoss/Prompt-Segment-Anything)]|

| [](https://github.com/Li-Qingyun/sam-mmrotate)

[**SAM-RBox (Project)**](https://github.com/Li-Qingyun/sam-mmrotate)

*Qingyun Li* |  | [[Github](https://github.com/Li-Qingyun/sam-mmrotate)] |

| [](https://github.com/BingfengYan/VISAM)

[**VISAM (Project)**](https://github.com/BingfengYan/VISAM)

*Feng Yan, Weixin Luo, Yujie Zhong, Yiyang Gan, Lin Ma* |  | [[Github](https://github.com/BingfengYan/VISAM)] |

| [](https://github.com/aliaksandr960/segment-anything-eo)

[**Segment Anything EO tools: Earth observation tools for Meta AI Segment Anything (Project)**](https://github.com/aliaksandr960/segment-anything-eo)

*Aliaksandr Hancharenka, Alexander Chichigin* |  | [[Github](https://github.com/aliaksandr960/segment-anything-eo)] |

| [](https://github.com/JoOkuma/napari-segment-anything)

[**napari-segment-anything: Segment Anything Model (SAM) native Qt UI (Project)**](https://github.com/JoOkuma/napari-segment-anything)

*Jordão Bragantini, Kyle I S Harrington, Ajinkya Kulkarni* |  | [[Github](https://github.com/JoOkuma/napari-segment-anything)] |

| [](https://github.com/amine0110/SAM-Medical-Imaging)

[**SAM-Medical-Imaging (Project)**](https://github.com/amine0110/SAM-Medical-Imaging)

*amine0110* |  | [[Github](https://github.com/amine0110/SAM-Medical-Imaging)] |

| [](https://github.com/yeungchenwa/OCR-SAM)

[**OCR-SAM: Combining MMOCR with Segment Anything & Stable Diffusion. (Project)**](https://github.com/yeungchenwa/OCR-SAM)

*Zhenhua Yang, Qing Jiang* |  | [[Github](https://github.com/yeungchenwa/OCR-SAM)] |

| [](https://github.com/MaybeShewill-CV/segment-anything-u-specify)

[**segment-anything-u-specify: using sam+clip to segment any objs u specify with text prompts. (Project)**](https://github.com/MaybeShewill-CV/segment-anything-u-specify)

*MaybeShewill-CV* |  | [[Github](https://github.com/MaybeShewill-CV/segment-anything-u-specify)] |

| [](https://github.com/UX-Decoder/Segment-Everything-Everywhere-All-At-Once)

[**Segment Everything Everywhere All at Once**](https://arxiv.org/abs/2304.06718)

*Xueyan Zou, Jianwei Yang, Hao Zhang, Feng Li, Linjie Li, Jianfeng Gao, Yong Jae Lee*

[[SEEM (Project)](https://github.com/UX-Decoder/Segment-Everything-Everywhere-All-At-Once)] |  | [[Github](https://github.com/UX-Decoder/Segment-Everything-Everywhere-All-At-Once)] |

| [](https://github.com/lujiazho/SegDrawer)

[**SegDrawer: Simple static web-based mask drawer (Project)**](https://github.com/lujiazho/SegDrawer)

*Harry* |  | [[Github](https://github.com/lujiazho/SegDrawer)] |

| [](https://github.com/kevmo314/magic-copy)

[**Magic Copy: a Chrome extension (Project)**](https://github.com/kevmo314/magic-copy)

*kevmo314* |  | [[Github](https://github.com/kevmo314/magic-copy)] |

| [](https://github.com/gaomingqi/Track-Anything)

[**Track Anything: Segment Anything Meets Videos**](https://arxiv.org/abs/2304.11968)

*Jinyu Yang, Mingqi Gao, Zhe Li, Shang Gao, Fangjing Wang, Feng Zheng*

[[Track-Anything (Project)](https://github.com/gaomingqi/Track-Anything)] |  | [[Github](https://github.com/gaomingqi/Track-Anything)]

[[Demo](https://huggingface.co/spaces/watchtowerss/Track-Anything)]|

| [](https://github.com/ylqi/Count-Anything)

[**Count Anything (Project)**](https://github.com/ylqi/Count-Anything)

*Liqi Yan* |  | [[Github](https://github.com/ylqi/Count-Anything)]|

| [](https://github.com/z-x-yang/Segment-and-Track-Anything)

[**Segment-and-Track-Anything (Project)**](https://github.com/z-x-yang/Segment-and-Track-Anything)

*Zongxin Yang* |  | [[Github](https://github.com/z-x-yang/Segment-and-Track-Anything)]|

| [](https://github.com/luminxu/Pose-for-Everything)

[**Pose for Everything: Towards Category-Agnostic Pose Estimation**](https://arxiv.org/abs/2207.10387)

*Lumin Xu\*, Sheng Jin\*, Wang Zeng, Wentao Liu, Chen Qian, Wanli Ouyang, Ping Luo, Xiaogang Wang*

> CUHK, SenseTime

> ECCV'22 Oral

[[**Pose-for-Everything (Project)**](https://github.com/luminxu/Pose-for-Everything)] |  | [[Github](https://github.com/luminxu/Pose-for-Everything)]|

| [](https://github.com/Luodian/RelateAnything)

[**Relate Anything Model (Project)**](https://github.com/Luodian/RelateAnything)

*Zujin Guo\*, Bo Li\*, Jingkang Yang\*, Zijian Zhou\*, Ziwei Liu*

> MMLab@NTU

> VisCom Lab, KCL/TongJi |  | [Github](https://github.com/Luodian/RelateAnything) |

| [](https://github.com/Jun-CEN/SegmentAnyRGBD)

[**SegmentAnyRGBD (Project)**](https://github.com/Jun-CEN/SegmentAnyRGBD)

Jun Cen, Yizheng Wu, Xingyi Li, Jingkang Yang, Yixuan Pei, Lingdong Kong

> Visual Intelligence Lab@HKUST,

> HUST,

> MMLab@NTU,

> Smiles Lab@XJTU,

> NUS |  | [Github](https://github.com/Jun-CEN/SegmentAnyRGBD) |

|

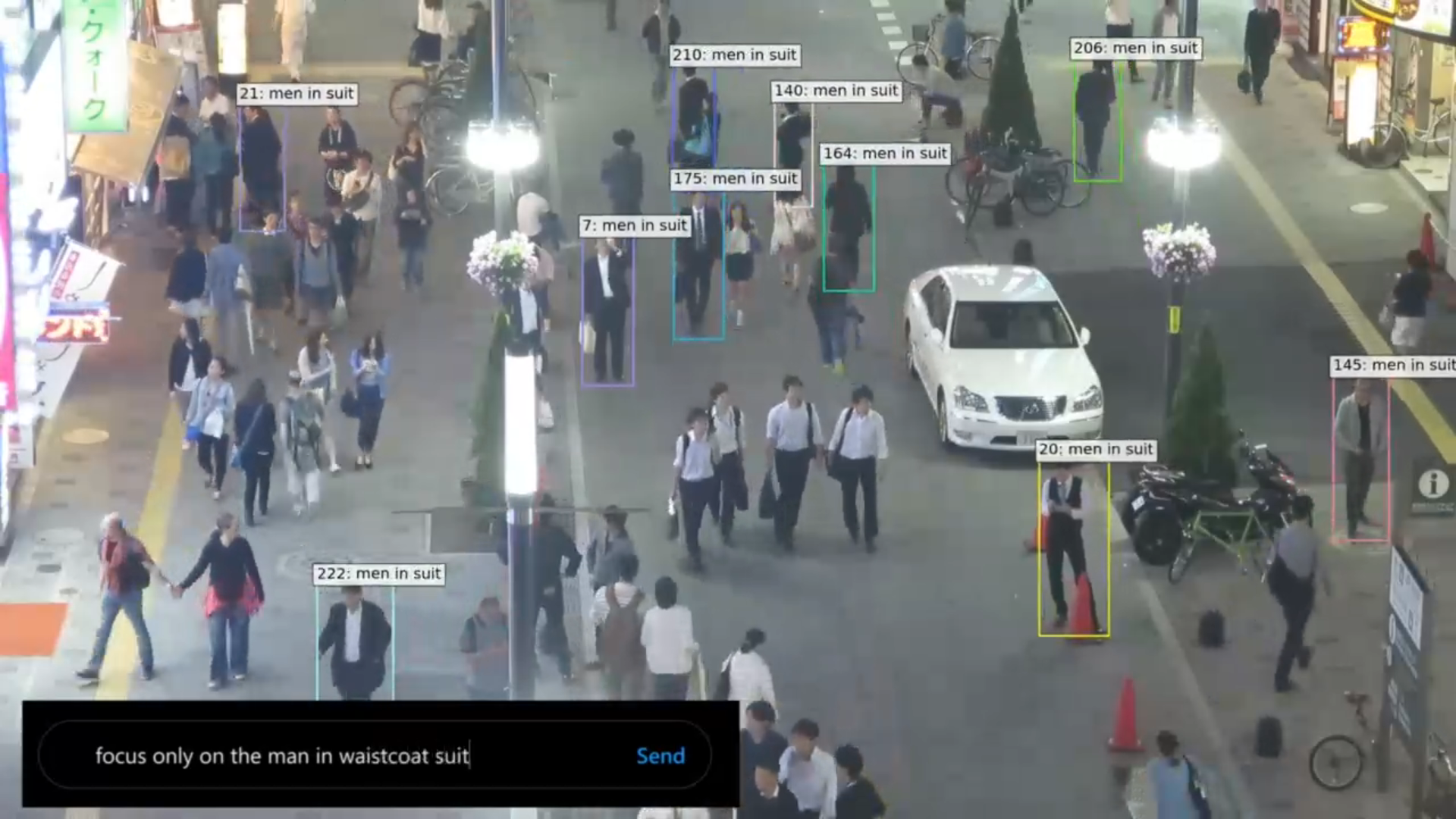

[**Retrieve Any Object via Prompt-based Tracking**](https://arxiv.org/abs/2305.13495)

Pha Nguyen, Kha Gia Quach, Kris Kitani, Khoa Luu

> CVIU@UArk,

> pdActive Inc.,

> RI@CMU |  | [[ArXiv](https://arxiv.org/abs/2305.13495)]

[[Page](https://uark-cviu.github.io/Type-to-Track/)] |

| [](https://github.com/jamesjg/FoodSAM)

[**FoodSAM (Project)**](https://github.com/jamesjg/FoodSAM)

Xing Lan, Jiayi Lyu, Hanyu Jiang, Kun Dong, Zehai Niu, Yi Zhang, Jian Xue

> UCAS |  | [[Github](https://github.com/jamesjg/FoodSAM)]

[[Page](https://starhiking.github.io/FoodSAM_Page/)]

[[ArXiv](https://arxiv.org/abs/2308.05938)]|

## AnyGeneration

| Title & Authors | Intro | Useful Links |

|:----| :----: | :---:|

| [](https://github.com/CompVis/stable-diffusion)

[**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)

*Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, Björn Ommer*

> LMU München, Runway ML

> CVPR'22

[[**Stable-Diffusion (Project)**](https://github.com/CompVis/stable-diffusion)] |  | [[Github](https://github.com/CompVis/stable-diffusion)]

[[Page](https://stablediffusionweb.com/)]

[[Demo](https://stablediffusionweb.com/#demo)] |

| [](https://github.com/lllyasviel/ControlNet)

[**Adding Conditional Control to Text-to-Image Diffusion Models**](https://arxiv.org/abs/2302.05543)

*Lvmin Zhang, Maneesh Agrawala*

> Stanford University

> Preprint'23

[[**ControlNet (Project)**](https://github.com/lllyasviel/ControlNet)] |  | [[Github](https://github.com/lllyasviel/ControlNet)]

[[Demo](https://huggingface.co/spaces/hysts/ControlNet)] |

| [**GigaGAN: Large-scale GAN for Text-to-Image Synthesis**](https://arxiv.org/abs/2303.05511)

*Minguk Kang, Jun-Yan Zhu, Richard Zhang, Jaesik Park, Eli Shechtman, Sylvain Paris, Taesung Park*

> POSTECH, Carnegie Mellon University, Adobe Research

> CVPR'23 |  | [[Page](https://mingukkang.github.io/GigaGAN/)] |

| [](https://github.com/geekyutao/Inpaint-Anything)

[**Inpaint-Anything: Segment Anything Meets Image Inpainting (Project)**](https://github.com/geekyutao/Inpaint-Anything)

*Tao Yu* |  | [[Github](https://github.com/geekyutao/Inpaint-Anything)] |

| [](https://github.com/feizc/IEA)

[**IEA: Image Editing Anything (Project)**](https://github.com/feizc/IEA)

*Zhengcong Fei* |  | [[Github](https://github.com/feizc/IEA)] |

| [](https://github.com/sail-sg/EditAnything)

[**EditAnything (Project)**](https://github.com/sail-sg/EditAnything)

*Shanghua Gao, Pan Zhou* |  | [[Github](https://github.com/sail-sg/EditAnything)] |

| [](https://github.com/continue-revolution/sd-webui-segment-anything)

[**Segment Anything for Stable Diffusion Webui (Project)**](https://github.com/continue-revolution/sd-webui-segment-anything)

*Chengsong Zhang* |  | [[Github](https://github.com/continue-revolution/sd-webui-segment-anything)] |

| [](https://github.com/Curt-Park/segment-anything-with-clip)

[**Segment Anything with Clip (Project)**](https://github.com/Curt-Park/segment-anything-with-clip)

*Jinwoo Park* |  | [[Github](https://github.com/Curt-Park/segment-anything-with-clip)] |

| [](https://github.com/showlab/ShowAnything)

[**ShowAnything: Edit and Generate Anything In Image and Video (Project)**](https://github.com/showlab/ShowAnything)

*Showlab, NUS* |  | [Github](https://github.com/showlab/ShowAnything) |

| [](https://github.com/Huage001/Transfer-Any-Style)

[**Transfer-Any-Style: An interactive demo based on Segment-Anything for style transfer (Project)**](https://github.com/Huage001/Transfer-Any-Style)

*LV-Lab, NUS* |  | [Github](https://github.com/Huage001/Transfer-Any-Style) |

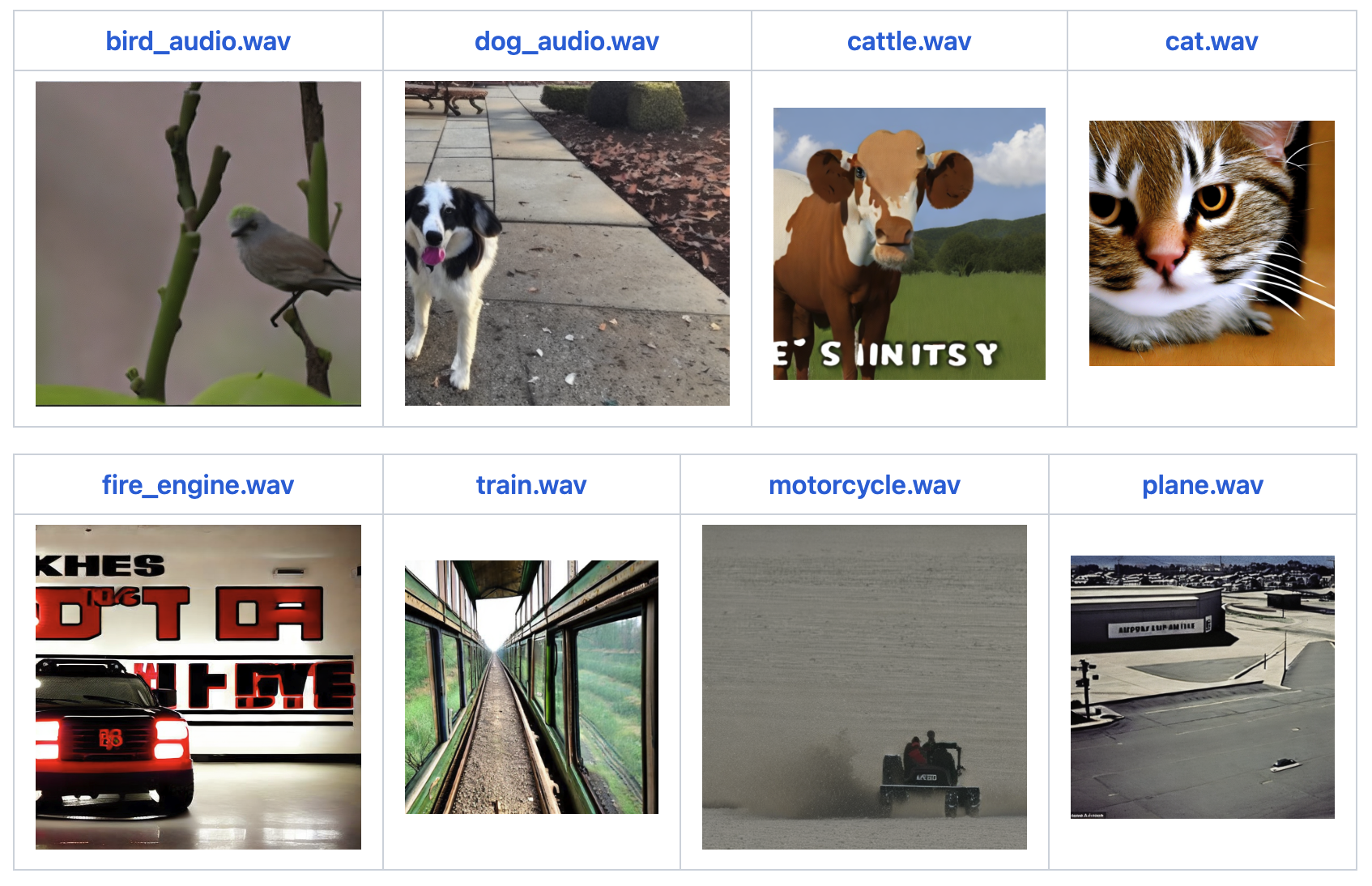

| [](https://github.com/Zeqiang-Lai/Anything2Image)

[**Anything To Image: Generate image from anything with ImageBind and Stable Diffusion (Project)**](https://github.com/Zeqiang-Lai/Anything2Image)

*Zeqiang-Lai* |  | [Github](https://github.com/Zeqiang-Lai/Anything2Image) |

| [](https://github.com/zzh-tech/InterpAny-Clearer)

[**Clearer Frames, Anytime: Resolving Velocity Ambiguity in Video Frame Interpolation**](https://zzh-tech.github.io/InterpAny-Clearer/)

*Zhihang Zhong, Gurunandan Krishnan, Xiao Sun, Yu Qiao, Sizhuo Ma, Jian Wang*

> Shanghai AI Laboratory, Snap Inc.

> Preprint'23 |  | [[Github](https://github.com/zzh-tech/InterpAny-Clearer)]

[[Page](https://zzh-tech.github.io/InterpAny-Clearer/)]

[[ArXiv](https://arxiv.org/abs/2311.08007)] |

## Any3D

| Title & Authors | Intro | Useful Links |

|:----| :----: | :---:|

| [](https://github.com/Pointcept/OpenIns3D)

[**OpenIns3D: Snap and Lookup for 3D Open-vocabulary Instance Segmentation**](https://arxiv.org/abs/2309.00616)

*Zhening Huang, Xiaoyang Wu, Xi Chen, Hengshuang Zhao, Lei Zhu, Joan Lasenby*

> Cambridge, HKU, HKUST

[[**OpenIns3D**](https://github.com/Pointcept/OpenIns3D)] |  | [[Github](https://github.com/Pointcept/OpenIns3D)]

[[Page](https://zheninghuang.github.io/OpenIns3D/)]|

| [](https://github.com/Anything-of-anything/Anything-3D)

[**Anything-3D: Segment-Anything + 3D, Let's lift the anything to 3D (Project)**](https://github.com/Anything-of-anything/Anything-3D)

*LV-Lab, NUS* |  | [Github](https://github.com/Anything-of-anything/Anything-3D) |

| [](https://github.com/nexuslrf/SAM-3D-Selector)

[**SAM 3D Selector: Utilizing segment-anything to help the region selection of 3D point cloud or mesh. (Project)**](https://github.com/nexuslrf/SAM-3D-Selector)

*Nexuslrf* |  | [Github](https://github.com/nexuslrf/SAM-3D-Selector) |

| [](https://github.com/dvlab-research/3D-Box-Segment-Anything)

[**3D-Box via Segment Anything. (Project)**](https://github.com/dvlab-research/3D-Box-Segment-Anything)

*dvlab-research* |  | [[Github](https://github.com/dvlab-research/3D-Box-Segment-Anything)] |

| [](https://github.com/Pointcept/SegmentAnything3D)

[**SAM3D: Segment Anything in 3D Scenes**](https://arxiv.org/abs/2306.03908)

*Yunhan Yang, Xiaoyang Wu, Tong He, Hengshuang Zhao, Xihui Liu*

> Shanghai AI Laboratory, HKU

[[**SAM3D: Segment Anything in 3D Scenes (Project)**](https://github.com/Pointcept/SegmentAnything3D)] |  | [[Github](https://github.com/Pointcept/SegmentAnything3D)] |

## AnyModel

| Title & Authors | Intro | Useful Links |

|:----| :----: | :---:|

| [](https://github.com/VainF/Torch-Pruning)

[**DepGraph: Towards Any Structural Pruning**](https://arxiv.org/abs/2301.12900)

*Gongfan Fang, Xinyin Ma, Mingli Song, Michael Bi Mi, Xinchao Wang*

> Learning and Vision Lab @ NUS

> CVPR'23

[[**Torch-Pruning (Project)**](https://github.com/VainF/Torch-Pruning)] |  | [[Github](https://github.com/VainF/Torch-Pruning)]

[[Demo](https://colab.research.google.com/drive/1TRvELQDNj9PwM-EERWbF3IQOyxZeDepp?usp=sharing)] |

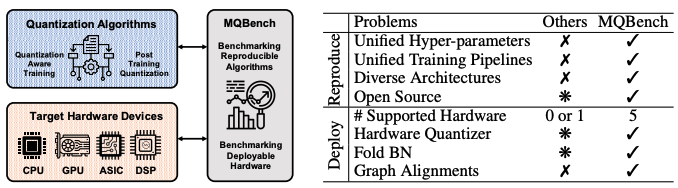

| [](https://github.com/ModelTC/MQBench)

[**MQBench: Towards Reproducible and Deployable Model Quantization Benchmark**](https://arxiv.org/abs/2111.03759)

*Yuhang Li, Mingzhu Shen, Jian Ma, Yan Ren, Mingxin Zhao, Qi Zhang, Ruihao Gong, Fengwei Yu, Junjie Yan*

> SenseTime Research

> NeurIPS'21

[[**MQBench (Project)**](https://github.com/ModelTC/MQBench)] |  | [[Github](https://github.com/ModelTC/MQBench)]

[[Page](http://mqbench.tech/)] |

| [](https://github.com/tianyic/only_train_once)

[**OTOv2: Automatic, Generic, User-Friendly**](https://openreview.net/pdf?id=7ynoX1ojPMt)

*Tianyi Chen, Luming Liang, Tianyu Ding, Ilya Zharkov*

> Microsoft

> ICLR'23

[[**Only Train Once (Project)**](https://github.com/tianyic/only_train_once)] |  | [[Github](https://github.com/tianyic/only_train_once)] |

| [](https://github.com/Adamdad/DeRy)

[**Deep Model Reassembly**](https://arxiv.org/abs/2210.17409)

*Xingyi Yang, Daquan Zhou, Songhua Liu, Jingwen Ye, Xinchao Wang*

> LV Lab, NUS

> NeurIPS'22

[[**Deep Model Reassembly (Project)**](https://github.com/Adamdad/DeRy)] |  | [[Github](https://github.com/Adamdad/DeRy)]

[[Page](https://adamdad.github.io/dery/)] |

## AnyTask

| Title & Authors | Intro | Useful Links |

|:----| :----: | :---:|

| [](https://github.com/microsoft/JARVIS)

[**HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace**](https://arxiv.org/abs/2303.17580)

*Yongliang Shen, Kaitao Song, Xu Tan, Dongsheng Li, Weiming Lu, Yueting Zhuang*

> Zhejiang University, MSRA

> Preprint'23

[[**Jarvis (Project)**](https://github.com/microsoft/JARVIS)] |  | [[Github](https://github.com/microsoft/JARVIS)]

[[Demo](https://huggingface.co/spaces/microsoft/HuggingGPT)] |

| [**TaskMatrix.AI: Completing Tasks by Connecting Foundation Models with Millions of APIs**](https://arxiv.org/abs/2303.16434)

*Yaobo Liang, Chenfei Wu, Ting Song, Wenshan Wu, Yan Xia, Yu Liu, Yang Ou, Shuai Lu, Lei Ji, Shaoguang Mao, Yun Wang, Linjun Shou, Ming Gong, Nan Duan*

> Microsoft

> Preprint'23 |  | [[Github](https://github.com/microsoft/visual-chatgpt/tree/main/TaskMatrix.AI)] |

| [](https://github.com/microsoft/X-Decoder)

[**Generalized Decoding for Pixel, Image and Language**](https://arxiv.org/abs/2212.11270)

*Xueyan Zou, Zi-Yi Dou, Jianwei Yang, Zhe Gan, Linjie Li, Chunyuan Li, Xiyang Dai, Harkirat Behl, Jianfeng Wang, Lu Yuan, Nanyun Peng, Lijuan Wang, Yong Jae Lee, Jianfeng Gao*

> Microsoft

> CVPR'23

[[**X-Decoder (Project)**](https://github.com/microsoft/X-Decoder/)] |  | [[Github](https://github.com/microsoft/X-Decoder/)]

[[Page](https://x-decoder-vl.github.io)]

[[Demo](https://huggingface.co/spaces/xdecoder/Demo)] |

| [](https://github.com/huawei-noah/Pretrained-IPT)

[**Pre-Trained Image Processing Transformer**](https://arxiv.org/abs/2012.00364)

*Hanting Chen, Yunhe Wang, Tianyu Guo, Chang Xu, Yiping Deng, Zhenhua Liu, Siwei Ma, Chunjing Xu, Chao Xu, Wen Gao*

> Huawei-Noah

> CVPR'21

[[**Pretrained-IPT (Project)**](https://github.com/huawei-noah/Pretrained-IPT)] |  | [[Github](https://github.com/huawei-noah/Pretrained-IPT)] |

| [](https://github.com/agiresearch/OpenAGI)

[**OpenAGI: When LLM Meets Domain Experts**](https://arxiv.org/pdf/2304.04370.pdf)

*Yingqiang Ge, Wenyue Hua, Jianchao Ji, Juntao Tan, Shuyuan Xu, Yongfeng Zhang*

> Rutgers University

> Preprint'23

[[**OpenAGI (Project)**](https://github.com/agiresearch/OpenAGI)] |  | [Github](https://github.com/agiresearch/OpenAGI) |

## AnyX

| Title & Authors | Intro | Useful Links |

|:----| :----: | :---:|

| [](https://github.com/ttengwang/Caption-Anything)

[**Caption Anything: Interactive Image Description with Diverse Multimodal Controls**](https://arxiv.org/abs/2305.02677)

*Teng Wang, Jinrui Zhang, Junjie Fei, Hao Zheng, Yunlong Tang, Zhe Li, Mingqi Gao, Shanshan Zhao*

> SUSTech VIP Lab

> Preprint'23

[[**Caption Anything (Project)**](https://github.com/ttengwang/Caption-Anything)] |  | [[Github](https://github.com/ttengwang/Caption-Anything)]

[[Demo](https://huggingface.co/spaces/TencentARC/Caption-Anything)] |

| [](https://github.com/showlab/Image2Paragraph)

[**Image2Paragraph: Transform Image into Unique Paragraph (Project)**](https://github.com/showlab/Image2Paragraph)

*Jinpeng Wang* |  | [Github](https://github.com/showlab/Image2Paragraph) |

...

# Paper List for Anything AI

A paper list for Anything AI

## AnyObject

| Paper | First Author | Venue | Topic |
| :--- | :---: | :--: | :--: |
| [Segment Anything](https://arxiv.org/abs/2304.02643) | Alexander Kirillov | Preprint'23 | Segmentation |
| [Learning to Segment Every Thing](https://openaccess.thecvf.com/content_cvpr_2018/papers/Hu_Learning_to_Segment_CVPR_2018_paper.pdf) | Ronghang Hu | CVPR'18 | Segmentation |
| [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) | Shilong Liu | Preprint'23 | Grounding+Detection |
| [SegGPT: Segmenting Everything In Context](https://arxiv.org/abs/2304.03284) | Xinlong Wang | Preprint'23 | Segmentation |
| [V3Det: Vast Vocabulary Visual Detection Dataset](https://arxiv.org/abs/2304.03752) | Jiaqi Wang | Preprint'23 | Dataset |
| [Pose for Everything: Towards Category-Agnostic Pose Estimation](https://arxiv.org/abs/2207.10387) | Lumin Xu | ECCV'22 Oral | Pose |
| [Type-to-Track: Retrieve Any Object via Prompt-based Tracking](https://arxiv.org/abs/2305.13495) | Pha Nguyen | NeurIPS'23 | Grounding+Tracking |

## AnyGeneration

| Paper | First Author | Venue | Topic |
| :--- | :---: | :--: | :--: |
| [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752) | Robin Rombach | CVPR'22 | Text-to-Image Generation |
| [Adding Conditional Control to Text-to-Image Diffusion Models](https://arxiv.org/abs/2302.05543) | Lvmin Zhang | Preprint'23 | Controllable Generation |
| [GigaGAN: Large-scale GAN for Text-to-Image Synthesis](https://arxiv.org/abs/2303.05511) | Minguk Kang | CVPR'23 | Large-scale GAN |
| [Inpaint Anything: Segment Anything Meets Image Inpainting](https://arxiv.org/abs/2304.06790) | Tao Yu | Preprint'23 | Inpainting |

## AnyModel

| Paper | First Author | Venue | Topic |
| :--- | :---: | :--: | :--: |
| [DepGraph: Towards Any Structural Pruning](https://arxiv.org/abs/2301.12900) | Gongfan Fang | CVPR'23 | Network Pruning |
| [MQBench: Towards Reproducible and Deployable Model Quantization Benchmark](https://arxiv.org/abs/2111.03759) | Yuhang Li | NeurIPS'21 | Network Quantization |
| [OTOv2: Automatic, Generic, User-Friendly](https://openreview.net/pdf?id=7ynoX1ojPMt) | Tianyi Chen | ICLR'23 | Network Pruning |
| [Deep Model Reassembly](https://arxiv.org/abs/2210.17409) | Xingyi Yang | NeurIPS'22 | Model Reuse |

## AnyTask

| Paper | First Author | Venue | Topic |
| :--- | :---: | :--: | :--: |
| [HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace](https://arxiv.org/abs/2303.17580) | Yongliang Shen | Preprint'23 | Modelzoo + LLM |
| [TaskMatrix.AI: Completing Tasks by Connecting Foundation Models with Millions of APIs](https://arxiv.org/abs/2303.16434) | Yaobo Liang | Preprint'23 | Modelzoo + LLM |
| [Generalized Decoding for Pixel, Image and Language](https://arxiv.org/abs/2212.11270) | Xueyan Zou | CVPR'23 | Multi Tasking |
| [Pre-Trained Image Processing Transformer](https://arxiv.org/abs/2012.00364) | Hanting Chen | CVPR'21 | Low-level Vision |