https://github.com/Westlake-AI/openmixup

CAIRI Supervised, Semi- and Self-Supervised Visual Representation Learning Toolbox and Benchmark

automix awesome-list awesome-mim awesome-mixup benchmark contrastive-learning data-augmentation data-generation deep-learning image-classifcation imagenet machine-learning masked-image-modeling mixup pytorch self-supervised-learning semi-supervised-learning vision-transformer


- Host: GitHub

- URL: https://github.com/Westlake-AI/openmixup

- Owner: Westlake-AI

- License: apache-2.0

- Created: 2021-12-30T09:42:15.000Z (about 4 years ago)

- Default Branch: main

- Last Pushed: 2023-10-19T22:27:45.000Z (about 2 years ago)

- Last Synced: 2023-10-20T07:13:38.623Z (about 2 years ago)

- Topics: automix, awesome-list, awesome-mim, awesome-mixup, benchmark, contrastive-learning, data-augmentation, data-generation, deep-learning, image-classifcation, imagenet, machine-learning, masked-image-modeling, mixup, pytorch, self-supervised-learning, semi-supervised-learning, vision-transformer

- Language: Python

- Homepage: https://openmixup.readthedocs.io

- Size: 3.28 MB

- Stars: 499

- Watchers: 17

- Forks: 53

- Open Issues: 5

Metadata Files:

- Readme: README.md

- Contributing: .github/CONTRIBUTING.md

- License: LICENSE

Awesome Lists containing this project

- ultimate-awesome - openmixup - CAIRI Supervised, Semi- and Self-Supervised Visual Representation Learning Toolbox and Benchmark. (Other Lists / PowerShell Lists)

- Awesome-Mixup

- Awesome-Mix - OpenMixup

- Awesome-MIM (Fundamental MIM Methods / MIM for Transformers)

README

# OpenMixup

[Releases](https://github.com/Westlake-AI/openmixup/releases) |

[PyPI](https://pypi.org/project/openmixup) |

[arXiv](https://arxiv.org/abs/2209.04851) |

[Docs](https://openmixup.readthedocs.io/en/latest/) |

[License](https://github.com/Westlake-AI/openmixup/blob/main/LICENSE) |

[Issues](https://github.com/Westlake-AI/openmixup/issues)

[📘Documentation](https://openmixup.readthedocs.io/en/latest/) |

[🛠️Installation](https://openmixup.readthedocs.io/en/latest/install.html) |

[🚀Model Zoo](https://github.com/Westlake-AI/openmixup/tree/main/docs/en/model_zoos) |

[👀Awesome Mixup](https://openmixup.readthedocs.io/en/latest/awesome_mixups/Mixup_SL.html) |

[🔍Awesome MIM](https://openmixup.readthedocs.io/en/latest/awesome_selfsup/MIM.html) |

[🆕News](https://openmixup.readthedocs.io/en/latest/changelog.html)

## Introduction

The main branch works with **PyTorch 1.8** (required by some self-supervised methods) or higher (we recommend **PyTorch 1.12**). You can still use **PyTorch 1.6** for supervised classification methods.

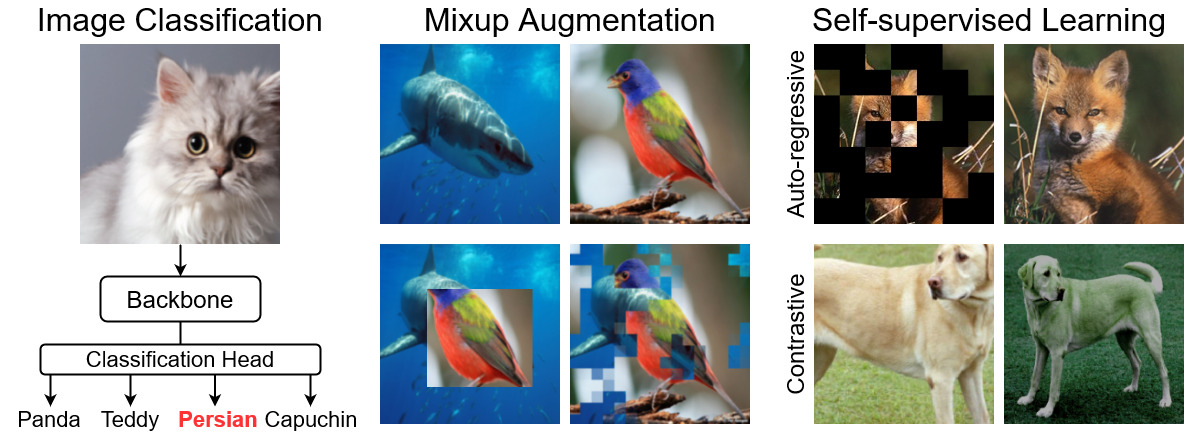

`OpenMixup` is an open-source toolbox for supervised, self-, and semi-supervised visual representation learning based on PyTorch, with a focus on mixup-related methods. *`OpenMixup` is currently being updated to adopt the new features and code structures of OpenMMLab 2.0 ([#42](https://github.com/Westlake-AI/openmixup/issues/42)).*
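For readers new to the technique, the basic mixup operation (Mixup, ICLR'2018) can be sketched in a few lines. This is a minimal pure-Python illustration under simple assumptions (flat feature lists, one-hot labels), not OpenMixup's implementation; the function name `mixup` and its arguments are hypothetical.

```python
import random


def mixup(x_a, x_b, y_a, y_b, alpha=1.0, rng=random):
    """Blend two samples and their one-hot labels with a Beta(alpha, alpha) weight.

    Hypothetical helper for illustration only (not part of OpenMixup).
    """
    lam = rng.betavariate(alpha, alpha)  # mixing ratio in (0, 1)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y, lam


# Two toy "images" with two pixels each, belonging to class 0 and class 1.
mixed_x, mixed_y, lam = mixup([0.0, 1.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0])
```

The mixed label stays a valid distribution because both inputs and labels are interpolated with the same weight.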

Major Features

- **Modular Design.**

OpenMixup follows a code architecture similar to that of OpenMMLab projects, decomposing the framework into components so that users can easily build a customized model by combining different modules. OpenMixup can also be ported to OpenMMLab projects (e.g., [MMPreTrain](https://github.com/open-mmlab/mmpretrain)).

- **All in One.**

OpenMixup provides popular backbones, mixup methods, semi-supervised, and self-supervised algorithms. Users can perform image classification (CNN & Transformer) and self-supervised pre-training (contrastive and autoregressive) under the same framework.

- **Standard Benchmarks.**

OpenMixup supports standard benchmarks for image classification, mixup classification, and self-supervised evaluation, and provides smooth evaluation on downstream tasks with open-source projects (e.g., object detection and segmentation on [Detectron2](https://github.com/facebookresearch/detectron2) and [MMSegmentation](https://github.com/open-mmlab/mmsegmentation)).

- **State-of-the-art Methods.**

OpenMixup provides awesome lists of popular mixup and self-supervised methods, and is being updated to support more state-of-the-art image classification and self-supervised methods.
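The modular design described above can be illustrated with a toy registry that builds components from dictionary configs, in the spirit of OpenMMLab-style projects. This is only a sketch of the pattern: the `Registry` class, `TinyBackbone`, `LinearHead`, and the config keys below are hypothetical and are not OpenMixup's actual classes or API.

```python
class Registry:
    """Toy name-to-class registry (illustrative, not the OpenMMLab Registry)."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register(self, cls):
        # Used as a decorator: store the class under its own name.
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg):
        # Copy so the caller's config dict is not modified.
        cfg = dict(cfg)
        cls = self._modules[cfg.pop("type")]
        return cls(**cfg)


BACKBONES = Registry("backbone")
HEADS = Registry("head")


@BACKBONES.register
class TinyBackbone:  # hypothetical component
    def __init__(self, depth):
        self.depth = depth


@HEADS.register
class LinearHead:  # hypothetical component
    def __init__(self, num_classes):
        self.num_classes = num_classes


# A model is described by data; swapping a module means editing one entry.
model_cfg = dict(
    backbone=dict(type="TinyBackbone", depth=18),
    head=dict(type="LinearHead", num_classes=10),
)
backbone = BACKBONES.build(model_cfg["backbone"])
head = HEADS.build(model_cfg["head"])
```

Keeping the model description in plain dictionaries is what lets a config file select a different backbone or head without touching the training code.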

Table of Contents

- Introduction

- News and Updates

- Installation

- Getting Started

- Overview of Model Zoo

- Change Log

- License

- Acknowledgement

- Citation

- Contributors and Contact

## News and Updates

[2023-12-23] `OpenMixup` v0.2.9 is released, updating more features in mixup augmentations, self-supervised learning, and optimizers.

## Installation

OpenMixup is compatible with **Python 3.6/3.7/3.8/3.9** and **PyTorch >= 1.6**. Here are quick installation steps for development:

```shell
conda create -n openmixup python=3.8 pytorch=1.12 cudatoolkit=11.3 torchvision -c pytorch -y
conda activate openmixup
pip install openmim
mim install mmcv-full
git clone https://github.com/Westlake-AI/openmixup.git
cd openmixup
python setup.py develop
```

Please refer to [install.md](docs/en/install.md) for more detailed installation and dataset preparation.
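As a quick sanity check of the version constraints stated in this README (Python 3.6 to 3.9, PyTorch >= 1.6 for supervised classification, PyTorch >= 1.8 for self-supervised methods), the following sketch compares version strings. The helper names `parse_version` and `supported_tasks` are hypothetical, and the check only encodes what the README states; it does not probe an actual installation.

```python
import itertools


def parse_version(text):
    """Turn a string such as '1.12.1+cu113' into a tuple such as (1, 12, 1)."""
    parts = []
    for piece in text.split("+")[0].split(".")[:3]:
        digits = "".join(itertools.takewhile(str.isdigit, piece))
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def supported_tasks(python_version, torch_version):
    """Return the task families the README lists as supported for these versions."""
    py = parse_version(python_version)[:2]
    torch = parse_version(torch_version)[:2]
    tasks = []
    if (3, 6) <= py <= (3, 9) and torch >= (1, 6):
        tasks.append("supervised")
        if torch >= (1, 8):
            tasks.append("self-supervised")
    return tasks


print(supported_tasks("3.8.10", "1.12.1+cu113"))
```

In a real environment the two strings could be taken from `platform.python_version()` and `torch.__version__`.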

## Getting Started

OpenMixup supports Linux and macOS. It enables easy implementation and extensions of mixup data augmentation methods in existing supervised, self-, and semi-supervised visual recognition models. Please see [get_started.md](docs/en/get_started.md) for the basic usage of OpenMixup.

### Training and Evaluation Scripts

Here, we provide scripts for starting a quick end-to-end training with multiple `GPUs` and the specified `CONFIG_FILE`.

```shell
bash tools/dist_train.sh ${CONFIG_FILE} ${GPUS} [optional arguments]
```

For example, you can run the script below to train a ResNet-50 classifier on ImageNet with 4 GPUs:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 PORT=29500 bash tools/dist_train.sh configs/classification/imagenet/resnet/resnet50_4xb64_cos_ep100.py 4
```
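The config file name in the command above appears to follow the OpenMMLab-style naming convention, in which `4xb64` would mean 4 GPUs with 64 samples per GPU and `ep100` would mean 100 epochs. Under that assumption (it is not stated in this README), a small hypothetical helper can recover the total batch size implied by a config name:

```python
import re


def parse_config_name(name):
    """Extract GPU count, per-GPU batch size, and epochs from a config name.

    Assumes the '<gpus>xb<samples>' and '_ep<epochs>' tokens of the
    OpenMMLab-style naming convention; hypothetical helper for illustration.
    """
    batch = re.search(r"(\d+)xb(\d+)", name)
    if batch is None:
        raise ValueError(f"no '<gpus>xb<samples>' token in {name!r}")
    gpus, per_gpu = int(batch.group(1)), int(batch.group(2))
    epochs = re.search(r"_ep(\d+)", name)
    return {
        "gpus": gpus,
        "samples_per_gpu": per_gpu,
        "total_batch_size": gpus * per_gpu,
        "epochs": int(epochs.group(1)) if epochs else None,
    }


info = parse_config_name("resnet50_4xb64_cos_ep100")
print(info)
# {'gpus': 4, 'samples_per_gpu': 64, 'total_batch_size': 256, 'epochs': 100}
```

If this reading is right, the `GPUS` argument passed to `tools/dist_train.sh` should match the GPU count in the name so that the total batch size stays at the value the config was tuned for.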

After training, you can test the trained models with the corresponding evaluation script:

```shell
bash tools/dist_test.sh ${CONFIG_FILE} ${GPUS} ${PATH_TO_MODEL} [optional arguments]
```

### Development

Please see [Tutorials](docs/en/tutorials) for more development examples and technical details:

- [config files](docs/en/tutorials/0_config.md)

- [add new dataset](docs/en/tutorials/1_new_dataset.md)

- [data pipeline](docs/en/tutorials/2_data_pipeline.md)

- [add new modules](docs/en/tutorials/3_new_module.md)

- [customize schedules](docs/en/tutorials/4_schedule.md)

- [customize runtime](docs/en/tutorials/5_runtime.md)

Downstream Tasks for Self-supervised Learning

- [Classification](docs/en/tutorials/ssl_classification.md)

- [Detection](docs/en/tutorials/ssl_detection.md)

- [Segmentation](docs/en/tutorials/ssl_segmentation.md)

Useful Tools

- [Analysis](docs/en/tutorials/analysis.md)

- [Visualization](docs/en/tutorials/visualization.md)

- [pytorch2onnx](docs/en/tutorials/pytorch2onnx.md)

- [pytorch2torchscript](docs/en/tutorials/pytorch2torchscript.md)

## Overview of Model Zoo

Please run experiments or find results on each config page. Refer to [Mixup Benchmarks](docs/en/mixup_benchmarks) for benchmarking results of mixup methods. View [Model Zoos Sup](docs/en/model_zoos/Model_Zoo_sup.md) and [Model Zoos SSL](docs/en/model_zoos/Model_Zoo_selfsup.md) for a comprehensive collection of mainstream backbones and self-supervised algorithms. We also provide the paper lists of [Awesome Mixups](docs/en/awesome_mixups) and [Awesome MIM](docs/en/awesome_selfsup/MIM.md) for your reference. Please view config files and links to models at the following config pages. Checkpoints and training logs are being updated!

Supported Backbone Architectures

- AlexNet (NeurIPS'2012) [config]

- VGG (ICLR'2015) [config]

- InceptionV3 (CVPR'2016) [config]

- ResNet (CVPR'2016) [config]

- ResNeXt (CVPR'2017) [config]

- SE-ResNet (CVPR'2018) [config]

- SE-ResNeXt (CVPR'2018) [config]

- ShuffleNetV1 (CVPR'2018) [config]

- ShuffleNetV2 (ECCV'2018) [config]

- MobileNetV2 (CVPR'2018) [config]

- MobileNetV3 (ICCV'2019) [config]

- EfficientNet (ICML'2019) [config]

- EfficientNetV2 (ICML'2021) [config]

- HRNet (TPAMI'2019) [config]

- Res2Net (ArXiv'2019) [config]

- CSPNet (CVPRW'2020) [config]

- RegNet (CVPR'2020) [config]

- Vision-Transformer (ICLR'2021) [config]

- Swin-Transformer (ICCV'2021) [config]

- PVT (ICCV'2021) [config]

- T2T-ViT (ICCV'2021) [config]

- LeViT (ICCV'2021) [config]

- RepVGG (CVPR'2021) [config]

- DeiT (ICML'2021) [config]

- MLP-Mixer (NeurIPS'2021) [config]

- Twins (NeurIPS'2021) [config]

- ConvMixer (TMLR'2023) [config]

- BEiT (ICLR'2022) [config]

- UniFormer (ICLR'2022) [config]

- MobileViT (ICLR'2022) [config]

- PoolFormer (CVPR'2022) [config]

- ConvNeXt (CVPR'2022) [config]

- MViTV2 (CVPR'2022) [config]

- RepMLP (CVPR'2022) [config]

- VAN (CVMJ'2023) [config]

- DeiT-3 (ECCV'2022) [config]

- LITv2 (NeurIPS'2022) [config]

- HorNet (NeurIPS'2022) [config]

- DaViT (ECCV'2022) [config]

- EdgeNeXt (ECCVW'2022) [config]

- EfficientFormer (NeurIPS'2022) [config]

- MogaNet (ICLR'2024) [config]

- MetaFormer (TPAMI'2024) [config]

- ConvNeXtV2 (CVPR'2023) [config]

- CoC (ICLR'2023) [config]

- MobileOne (CVPR'2023) [config]

- VanillaNet (NeurIPS'2023) [config]

- RWKV (ArXiv'2023) [config]

- UniRepLKNet (CVPR'2024) [config]

- TransNeXt (CVPR'2024) [config]

- StarNet (CVPR'2024) [config]

Mixup Data Augmentations

- Mixup (ICLR'2018) [config]

- CutMix (ICCV'2019) [config]

- ManifoldMix (ICML'2019) [config]

- FMix (ArXiv'2020) [config]

- AttentiveMix (ICASSP'2020) [config]

- SmoothMix (CVPRW'2020) [config]

- SaliencyMix (ICLR'2021) [config]

- PuzzleMix (ICML'2020) [config]

- SnapMix (AAAI'2021) [config]

- GridMix (Pattern Recognition'2021) [config]

- ResizeMix (CVMJ'2023) [config]

- AlignMix (CVPR'2022) [config]

- TransMix (CVPR'2022) [config]

- AutoMix (ECCV'2022) [config]

- SAMix (ArXiv'2021) [config]

- DecoupleMix (NeurIPS'2023) [config]

- SMMix (ICCV'2023) [config]

- AdAutoMix (ICLR'2024) [config]

- SUMix (ECCV'2024)
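Many of the mixup variants listed above replace global interpolation with a region-based mix. As one example, the CutMix idea (paste a random box from one image into another and weight the labels by the area that survives) can be sketched in pure Python. This is a simplified illustration on 2D lists, not OpenMixup's implementation; the function name `cutmix` and its arguments are hypothetical.

```python
import math
import random


def cutmix(img_a, img_b, lam, rng=random):
    """Paste a random box from img_b into a copy of img_a.

    Returns the mixed image and the adjusted label weight of img_a, which is
    the fraction of pixels still coming from img_a after clipping the box.
    """
    h, w = len(img_a), len(img_a[0])
    # Box size chosen so that its area is roughly (1 - lam) of the image.
    cut_h = int(h * math.sqrt(1.0 - lam))
    cut_w = int(w * math.sqrt(1.0 - lam))
    cy, cx = rng.randrange(h), rng.randrange(w)  # random box center
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)

    mixed = [row[:] for row in img_a]  # leave the inputs untouched
    for r in range(top, bottom):
        mixed[r][left:right] = img_b[r][left:right]

    lam_adj = 1.0 - (bottom - top) * (right - left) / (h * w)
    return mixed, lam_adj


zeros = [[0.0] * 8 for _ in range(8)]
ones = [[1.0] * 8 for _ in range(8)]
mixed, lam_adj = cutmix(zeros, ones, lam=0.5)
```

The label for the mixed image would then be `lam_adj * y_a + (1 - lam_adj) * y_b`, the same interpolation that plain mixup applies to labels.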

Self-supervised Learning Algorithms

- Relative Location (ICCV'2015) [config]

- Rotation Prediction (ICLR'2018) [config]

- DeepCluster (ECCV'2018) [config]

- NPID (CVPR'2018) [config]

- ODC (CVPR'2020) [config]

- MoCov1 (CVPR'2020) [config]

- SimCLR (ICML'2020) [config]

- MoCoV2 (ArXiv'2020) [config]

- BYOL (NeurIPS'2020) [config]

- SwAV (NeurIPS'2020) [config]

- DenseCL (CVPR'2021) [config]

- SimSiam (CVPR'2021) [config]

- Barlow Twins (ICML'2021) [config]

- MoCoV3 (ICCV'2021) [config]

- DINO (ICCV'2021) [config]

- BEiT (ICLR'2022) [config]

- MAE (CVPR'2022) [config]

- SimMIM (CVPR'2022) [config]

- MaskFeat (CVPR'2022) [config]

- CAE (IJCV'2024) [config]

- A2MIM (ICML'2023) [config]

Supported Datasets

- ImageNet [download (1K)] [download (21K)] [config]

- CIFAR-10 [download] [config]

- CIFAR-100 [download] [config]

- Tiny-ImageNet [download] [config]

- FashionMNIST [download]

- STL-10 [download] [config]

- CUB-200-2011 [download] [config]

- FGVC-Aircraft [download] [config]

- Stanford-Cars [download] [config]

- Places205 [download] [config]

- iNaturalist-2017 [download] [config]

- iNaturalist-2018 [download] [config]

- AgeDB [download] [download (baidu)] [config]

- IMDB-WIKI [download (imdb)] [download (wiki)] [config]

- RCFMNIST [download] [config]
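Several of the self-supervised algorithms listed above are contrastive; methods such as MoCo optimize an InfoNCE-style objective that pulls a query toward its positive view and pushes it away from negatives. The sketch below computes that loss for a single query in pure Python. It is an illustration only: the function name `info_nce`, the use of cosine similarity, and the temperature value are assumptions, not OpenMixup's code or defaults.

```python
import math


def info_nce(query, positive, negatives, temperature=0.2):
    """InfoNCE loss for one query: cross-entropy with the positive as target."""

    def cosine(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        norm_u = math.sqrt(sum(a * a for a in u))
        norm_v = math.sqrt(sum(b * b for b in v))
        return dot / (norm_u * norm_v)

    # Index 0 holds the positive pair; the rest are negatives.
    logits = [cosine(query, positive) / temperature]
    logits += [cosine(query, neg) / temperature for neg in negatives]

    # Numerically stable log-sum-exp.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[0]


aligned = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0], [-1.0, 0.0]])
```

The loss is small when the positive is the most similar key (`aligned`) and large when a negative is more similar than the positive (`misaligned`).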

## Change Log

Please refer to [changelog.md](docs/en/changelog.md) for more details and release history.

## License

This project is released under the [Apache 2.0 license](LICENSE). See `LICENSE` for more information.

## Acknowledgement

- OpenMixup is an open-source project for mixup methods and visual representation learning created by researchers in **CAIRI AI Lab**. We encourage researchers interested in backbone architectures, mixup augmentations, and self-supervised learning methods to contribute to OpenMixup!

- This project borrows the architecture design and part of the code from [MMPreTrain](https://github.com/open-mmlab/mmpretrain) and the official implementations of supported algorithms.

## Citation

If you find this project useful in your research, please consider starring `OpenMixup` or citing our [tech report](https://arxiv.org/abs/2209.04851):

```BibTeX
@article{li2022openmixup,
  title = {OpenMixup: A Comprehensive Mixup Benchmark for Visual Classification},
  author = {Siyuan Li and Zedong Wang and Zicheng Liu and Di Wu and Cheng Tan and Stan Z. Li},
  journal = {ArXiv},
  year = {2022},
  volume = {abs/2209.04851}
}
```

## Contributors and Contact

For help, new features, or bug reports associated with OpenMixup, please open a [GitHub issue](https://github.com/Westlake-AI/openmixup/issues) or [pull request](https://github.com/Westlake-AI/openmixup/pulls) with the tag "help wanted" or "enhancement". For now, the direct contributors include: Siyuan Li ([@Lupin1998](https://github.com/Lupin1998)), Zedong Wang ([@Jacky1128](https://github.com/Jacky1128)), and Zicheng Liu ([@pone7](https://github.com/pone7)). We thank all public contributors and contributors from MMPreTrain (MMSelfSup and MMClassification)!

This repo is currently maintained by:

- Siyuan Li (lisiyuan@westlake.edu.cn), Westlake University

- Zedong Wang (wangzedong@westlake.edu.cn), Westlake University

- Zicheng Liu (liuzicheng@westlake.edu.cn), Westlake University