https://github.com/fscdc/Awesome-Efficient-Reasoning-Models

[Arxiv 2025] Efficient Reasoning Models: A Survey

- Host: GitHub

- URL: https://github.com/fscdc/Awesome-Efficient-Reasoning-Models

- Owner: fscdc

- License: apache-2.0

- Created: 2025-04-11T15:04:28.000Z (7 months ago)

- Default Branch: master

- Last Pushed: 2025-04-22T06:20:23.000Z (7 months ago)

- Last Synced: 2025-04-22T07:43:00.634Z (7 months ago)

- Topics: chain-of-thought, compression, efficient-reasoning

- Language: Python

- Homepage: https://arxiv.org/abs/2504.10903

- Size: 24 MB

- Stars: 109

- Watchers: 3

- Forks: 6

- Open Issues: 0


Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome-multimodal-token-compression - Awesome-Efficient-Reasoning-Models - … [Awesome-Efficient-LLM](https://github.com/horseee/Awesome-Efficient-LLM/), [Awesome-Context-Engineering](https://github.com/Meirtz/Awesome-Context-Engineering) (🙏 Acknowledgments / Published in Recent Conference/Journal)

- Awesome-Efficient-LLM - curated list

- ultimate-awesome - Awesome-Efficient-Reasoning-Models - [TMLR 2025] Efficient Reasoning Models: A Survey. (Other Lists / TeX Lists)

- Awesome-Efficient-Reasoning - fscdc/Awesome-Efficient-Reasoning-Models

README

Efficient Reasoning Models: A Survey

An overview of research in efficient reasoning models

**[[arXiv]](https://arxiv.org/abs/2504.10903)** **[HuggingFace]**

This repository is for our paper:

> **[Efficient Reasoning Models: A Survey](https://arxiv.org/abs/2504.10903)** \
> [Sicheng Feng](https://fscdc.github.io/)<sup>1,2</sup>, [Gongfan Fang](https://fangggf.github.io/)<sup>1</sup>, [Xinyin Ma](https://horseee.github.io/)<sup>1</sup>, [Xinchao Wang](https://sites.google.com/site/sitexinchaowang/)<sup>1,*</sup> \
> <sup>1</sup>National University of Singapore, Singapore \
> <sup>2</sup>Nankai University, Tianjin, China \
> <sup>∗</sup>Corresponding author: xinchao@nus.edu.sg

---

> 🙋 Please let us know if you find a mistake or have any suggestions!
>
> 🌟 If you find this resource helpful, please consider starring this repository and citing our [research](#citation)!

## Updates

- 2025-04-16: 📝 The survey is now available on [arXiv](https://arxiv.org/abs/2504.10903)!

- 2025-04-11: 📚 The full paper list is now available and our survey is coming soon!

- 2025-03-16: 🚀 Efficient Reasoning Repo launched!

## Full list

> **Contributions**
>
> If you want to add your paper or update details such as conference information or code URLs, please submit a pull request. You can generate the necessary markdown for each paper by filling out `generate_item.py` and running `python generate_item.py`. We greatly appreciate your contributions. Alternatively, you can email me ([Gmail](mailto:fscnkucs@gmail.com)) the links to your paper and code, and I will add your paper to the list as soon as possible.
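
The contents of `generate_item.py` are not shown in this README, so the following is only a rough sketch of how one table row in this list could be assembled. `PaperItem` and `make_row` are hypothetical names, and the row layout (Title & Authors, an empty Introduction cell, Github/Paper links, and a hidden `[//]: #MM/DD` date tag) is inferred from the rows in the tables of this list, not taken from the actual script.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PaperItem:
    # Hypothetical record for one list entry; NOT the repository's generate_item.py.
    title: str
    paper_url: str
    authors: List[str]
    code_url: Optional[str] = None  # GitHub repository, if any
    added: str = ""  # date tag such as "04/08", emitted as a hidden "[//]: #" cell


def make_row(item: PaperItem) -> str:
    """Build one '| Title & Authors | Introduction | Links |' row (sketch only)."""
    title_cell = f"[{item.title}]({item.paper_url}) <br> {', '.join(item.authors)}"
    links = [f"[Paper]({item.paper_url})"]
    if item.code_url:
        # Rows in this list put the Github link before the Paper link.
        links.insert(0, f"[Github]({item.code_url})")
    # The Introduction cell is left empty because no figure is supplied here.
    row = f"|{title_cell} | |{' <br> '.join(links)}|"
    if item.added:
        # GFM ignores cells beyond the header's column count, so this tag does not render.
        row += f"[//]: #{item.added}"
    return row


if __name__ == "__main__":
    example = PaperItem(
        title="CoT-Valve: Length-Compressible Chain-of-Thought Tuning",
        paper_url="https://arxiv.org/abs/2502.09601",
        authors=["Xinyin Ma", "Guangnian Wan", "Runpeng Yu", "Gongfan Fang", "Xinchao Wang"],
        code_url="https://github.com/horseee/CoT-Valve",
        added="03/16",
    )
    print(make_row(example))
```

Running the sketch prints a row shaped like the CoT-Valve entry under SFT-based Methods. For an actual pull request, use the repository's own `generate_item.py`.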

---

### Quick Links

- [Make Long CoT Short](#Make-Long-CoT-Short)
  - [SFT-based Methods](#SFT-based-Methods)
  - [RL-based Methods](#RL-based-Methods)
  - [Prompt-driven Methods](#Prompt-driven-Methods)
  - [Latent Reasoning](#Latent-Reasoning)
- [Build SLM with Strong Reasoning Ability](#Build-SLM-with-Strong-Reasoning-Ability)
  - [Distillation](#Distillation)
  - [Quantization and Pruning](#Quantization-and-Pruning)
  - [RL-based Methods](#RL-based-Methods-1)
- [Let Decoding More Efficient](#Let-Decoding-More-Efficient)
  - [Efficient TTS](#Efficient-TTS)
  - [Other Optimal Methods](#Other-Optimal-Methods)
- [Evaluation and Benchmarks](#Evaluation-and-Benchmarks)
- [Background Papers](#Background-Papers)

### Make Long CoT Short

#### SFT-based Methods

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[CoT-Valve: Length-Compressible Chain-of-Thought Tuning](https://arxiv.org/abs/2502.09601) <br> Xinyin Ma, Guangnian Wan, Runpeng Yu, Gongfan Fang, Xinchao Wang | |[Github](https://github.com/horseee/CoT-Valve) <br> [Paper](https://arxiv.org/abs/2502.09601)|[//]: #03/16
|[C3oT: Generating Shorter Chain-of-Thought without Compromising Effectiveness](https://arxiv.org/abs/2412.11664) <br> Yu Kang, Xianghui Sun, Liangyu Chen, Wei Zou | |[Paper](https://arxiv.org/abs/2412.11664)|[//]: #03/16
|[Can Language Models Learn to Skip Steps?](https://arxiv.org/abs/2411.01855) <br> Tengxiao Liu, Qipeng Guo, Xiangkun Hu, Cheng Jiayang, Yue Zhang, Xipeng Qiu, Zheng Zhang | |[Github](https://github.com/tengxiaoliu/LM_skip) <br> [Paper](https://arxiv.org/abs/2411.01855)|[//]: #03/16
|[Distilling System 2 into System 1](https://arxiv.org/abs/2407.06023) <br> Ping Yu, Jing Xu, Jason Weston, Ilia Kulikov | |[Paper](https://arxiv.org/abs/2407.06023)|[//]: #03/16
|[TokenSkip: Controllable Chain-of-Thought Compression in LLMs](https://arxiv.org/abs/2502.12067) <br> Heming Xia, Yongqi Li, Chak Tou Leong, Wenjie Wang, Wenjie Li | |[Github](https://github.com/hemingkx/TokenSkip) <br> [Paper](https://arxiv.org/abs/2502.12067)|[//]: #03/20
|[Stepwise Perplexity-Guided Refinement for Efficient Chain-of-Thought Reasoning in Large Language Models](https://arxiv.org/abs/2502.13260) <br> Yingqian Cui, Pengfei He, Jingying Zeng, Hui Liu, Xianfeng Tang, Zhenwei Dai, Yan Han, Chen Luo, Jing Huang, Zhen Li, Suhang Wang, Yue Xing, Jiliang Tang, Qi He | |[Paper](https://arxiv.org/abs/2502.13260)|[//]: #04/08
|[Towards Thinking-Optimal Scaling of Test-Time Compute for LLM Reasoning](https://arxiv.org/abs/2502.18080) <br> Wenkai Yang, Shuming Ma, Yankai Lin, Furu Wei | |[Paper](https://arxiv.org/abs/2502.18080)|[//]: #04/08
|[Self-Training Elicits Concise Reasoning in Large Language Models](https://arxiv.org/abs/2502.20122) <br> Tergel Munkhbat, Namgyu Ho, Seo Hyun Kim, Yongjin Yang, Yujin Kim, Se-Young Yun | |[Github](https://github.com/TergelMunkhbat/concise-reasoning) <br> [Paper](https://arxiv.org/abs/2502.20122)|[//]: #04/08
|[Token-Budget-Aware LLM Reasoning](https://arxiv.org/abs/2412.18547) <br> Tingxu Han, Zhenting Wang, Chunrong Fang, Shiyu Zhao, Shiqing Ma, Zhenyu Chen | |[Github](https://github.com/GeniusHTX/TALE) <br> [Paper](https://arxiv.org/abs/2412.18547)|[//]: #04/08

#### RL-based Methods

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[O1-Pruner: Length-Harmonizing Fine-Tuning for O1-Like Reasoning Pruning](https://arxiv.org/abs/2501.12570) <br> Haotian Luo, Li Shen, Haiying He, Yibo Wang, Shiwei Liu, Wei Li, Naiqiang Tan, Xiaochun Cao, Dacheng Tao | |[Github](https://github.com/StarDewXXX/O1-Pruner) <br> [Paper](https://arxiv.org/abs/2501.12570)|[//]: #03/16
|[Kimi k1.5: Scaling Reinforcement Learning with LLMs](https://arxiv.org/abs/2501.12599) <br> Kimi Team | |[Paper](https://arxiv.org/abs/2501.12599)|[//]: #04/08
|[Demystifying Long Chain-of-Thought Reasoning in LLMs](https://arxiv.org/abs/2502.03373) <br> Edward Yeo, Yuxuan Tong, Morry Niu, Graham Neubig, Xiang Yue | |[Github](https://github.com/eddycmu/demystify-long-cot) <br> [Paper](https://arxiv.org/abs/2502.03373)|[//]: #04/08
|[Training Language Models to Reason Efficiently](https://arxiv.org/abs/2502.04463) <br> Daman Arora, Andrea Zanette | |[Github](https://github.com/Zanette-Labs/efficient-reasoning) <br> [Paper](https://arxiv.org/abs/2502.04463)|[//]: #04/08
|[L1: Controlling How Long A Reasoning Model Thinks With Reinforcement Learning](https://www.arxiv.org/abs/2503.04697) <br> Pranjal Aggarwal, Sean Welleck | |[Github](https://github.com/cmu-l3/l1) <br> [Paper](https://www.arxiv.org/abs/2503.04697)|[//]: #04/08
|[DAST: Difficulty-Adaptive Slow-Thinking for Large Reasoning Models](https://arxiv.org/abs/2503.04472) <br> Yi Shen, Jian Zhang, Jieyun Huang, Shuming Shi, Wenjing Zhang, Jiangze Yan, Ning Wang, Kai Wang, Shiguo Lian | |[Paper](https://arxiv.org/abs/2503.04472)|[//]: #04/08
|[Adaptive Group Policy Optimization: Towards Stable Training and Token-Efficient Reasoning](https://arxiv.org/abs/2503.15952) <br> Chen Li, Nazhou Liu, Kai Yang | |[Paper](https://arxiv.org/abs/2503.15952)|[//]: #04/08
|[ThinkPrune: Pruning Long Chain-of-Thought of LLMs via Reinforcement Learning](https://arxiv.org/abs/2504.01296) <br> Bairu Hou, Yang Zhang, Jiabao Ji, Yujian Liu, Kaizhi Qian, Jacob Andreas, Shiyu Chang | |[Github](https://github.com/UCSB-NLP-Chang/ThinkPrune) <br> [Paper](https://arxiv.org/abs/2504.01296)|[//]: #04/08
|[Think When You Need: Self-Adaptive Chain-of-Thought Learning](https://arxiv.org/abs/2504.03234) <br> Junjie Yang, Ke Lin, Xing Yu | |[Paper](https://arxiv.org/abs/2504.03234)|[//]: #04/08

#### Prompt-driven Methods

##### Prompt-guided Efficient Reasoning

| Title & Authors | Introduction | Links |

|:--| :----: | :---:|

|[Time's Up! An Empirical Study of LLM Reasoning Ability Under Output Length Constraint](https://arxiv.org/abs/2504.14350)

Yi Sun, Han Wang, Jiaqiang Li, Jiacheng Liu, Xiangyu Li, Hao Wen, Huiwen Zheng, Yan Liang, Yuanchun Li, Yunxin Liu | |[Paper](https://arxiv.org/abs/2504.14350)| [//]: #04/23

|[Paper](https://arxiv.org/abs/2504.14350)| [//]: #04/23

|[CoT-RAG: Integrating Chain of Thought and Retrieval-Augmented Generation to Enhance Reasoning in Large Language Models](https://arxiv.org/abs/2504.13534)

Feiyang Li, Peng Fang, Zhan Shi, Arijit Khan, Fang Wang, Dan Feng, Weihao Wang, Xin Zhang, Yongjian Cui | |[Paper](https://arxiv.org/abs/2504.13534)| [//]: #04/21

|[Paper](https://arxiv.org/abs/2504.13534)| [//]: #04/21

|[Thought Manipulation: External Thought Can Be Efficient for Large Reasoning Models](https://arxiv.org/abs/2504.13626)

Yule Liu, Jingyi Zheng, Zhen Sun, Zifan Peng, Wenhan Dong, Zeyang Sha, Shiwen Cui, Weiqiang Wang, Xinlei He | |[Paper](https://arxiv.org/abs/2504.13626)| [//]: #04/21

|[Paper](https://arxiv.org/abs/2504.13626)| [//]: #04/21

|[](https://github.com/GeniusHTX/TALE)

[Token-Budget-Aware LLM Reasoning](https://arxiv.org/abs/2412.18547)

Tingxu Han, Zhenting Wang, Chunrong Fang, Shiyu Zhao, Shiqing Ma, Zhenyu Chen | |[Github](https://github.com/GeniusHTX/TALE)

|[Github](https://github.com/GeniusHTX/TALE)

[Paper](https://arxiv.org/abs/2412.18547)| [//]: #04/08

|[](https://github.com/matthewrenze/jhu-concise-cot) []()

[The Benefits of a Concise Chain of Thought on Problem-Solving in Large Language Models](https://arxiv.org/abs/2401.05618)

Matthew Renze, Erhan Guven | |[Github](https://github.com/matthewrenze/jhu-concise-cot)

|[Github](https://github.com/matthewrenze/jhu-concise-cot)

[Paper](https://arxiv.org/abs/2401.05618)| [//]: #04/08

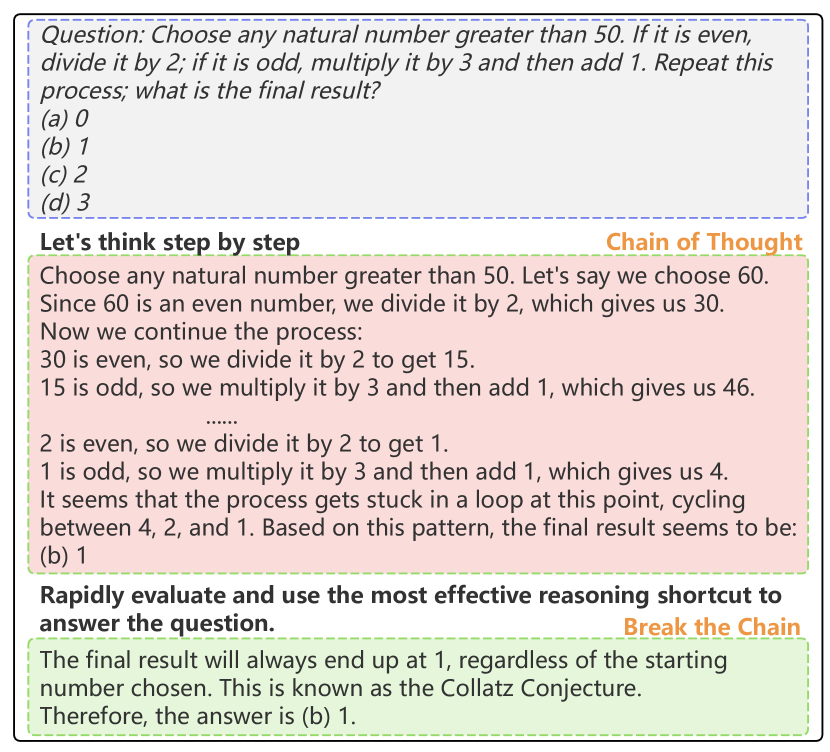

|[Break the Chain: Large Language Models Can be Shortcut Reasoners](https://arxiv.org/abs/2406.06580)

Mengru Ding, Hanmeng Liu, Zhizhang Fu, Jian Song, Wenbo Xie, Yue Zhang | |[Paper](https://arxiv.org/abs/2406.06580)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2406.06580)| [//]: #04/08

|[](https://github.com/sileix/chain-of-draft)

[Chain of Draft: Thinking Faster by Writing Less](https://arxiv.org/abs/2502.18600)

Silei Xu, Wenhao Xie, Lingxiao Zhao, Pengcheng He | |[Github](https://github.com/sileix/chain-of-draft)

|[Github](https://github.com/sileix/chain-of-draft)

[Paper](https://arxiv.org/abs/2502.18600)| [//]: #04/08

|[](https://github.com/LightChen233/reasoning-boundary) []()

[Unlocking the Capabilities of Thought: A Reasoning Boundary Framework to Quantify and Optimize Chain-of-Thought](https://arxiv.org/abs/2410.05695)

Qiguang Chen, Libo Qin, Jiaqi Wang, Jinxuan Zhou, Wanxiang Che | |[Github](https://github.com/LightChen233/reasoning-boundary)

|[Github](https://github.com/LightChen233/reasoning-boundary)

[Paper](https://arxiv.org/abs/2410.05695)| [//]: #04/08

|[How Well do LLMs Compress Their Own Chain-of-Thought? A Token Complexity Approach](https://arxiv.org/abs/2503.01141)

Ayeong Lee, Ethan Che, Tianyi Peng |

|[Paper](https://arxiv.org/abs/2503.01141)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2503.01141)| [//]: #04/08

##### Prompt Attribute-Aware Reasoning Routing

| Title & Authors | Introduction | Links |

|:--| :----: | :---:|

|[How Well do LLMs Compress Their Own Chain-of-Thought? A Token Complexity Approach](https://arxiv.org/abs/2503.01141)

Ayeong Lee, Ethan Che, Tianyi Peng |

|[Paper](https://arxiv.org/abs/2503.01141)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2503.01141)| [//]: #04/08

| []()

[RouteLLM: Learning to Route LLMs with Preference Data](https://arxiv.org/abs/2406.18665)

Isaac Ong, Amjad Almahairi, Vincent Wu, Wei-Lin Chiang, Tianhao Wu, Joseph E. Gonzalez, M Waleed Kadous, Ion Stoica | |[Paper](https://arxiv.org/abs/2406.18665)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2406.18665)| [//]: #04/08

|[](https://github.com/SimonAytes/SoT)

[Sketch-of-Thought: Efficient LLM Reasoning with Adaptive Cognitive-Inspired Sketching](https://arxiv.org/abs/2503.05179)

Simon A. Aytes, Jinheon Baek, Sung Ju Hwang | |[Github](https://github.com/SimonAytes/SoT)

|[Github](https://github.com/SimonAytes/SoT)

[Paper](https://arxiv.org/abs/2503.05179)| [//]: #04/08

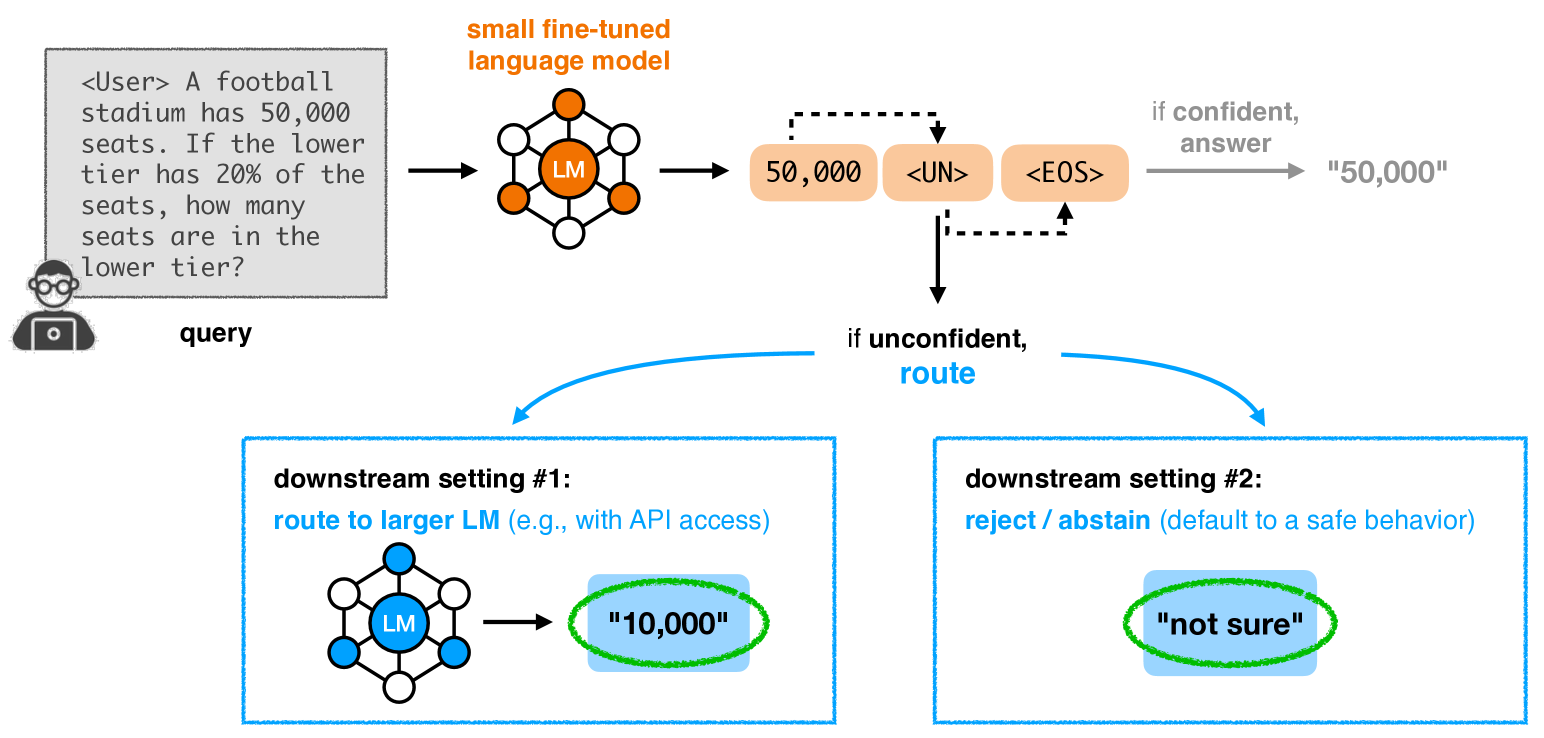

|[Learning to Route LLMs with Confidence Tokens](https://arxiv.org/abs/2410.13284)

Yu-Neng Chuang, Helen Zhou, Prathusha Kameswara Sarma, Parikshit Gopalan, John Boccio, Sara Bolouki, Xia Hu | |[Paper](https://arxiv.org/abs/2410.13284)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2410.13284)| [//]: #04/08

|[Confident or Seek Stronger: Exploring Uncertainty-Based On-device LLM Routing From Benchmarking to Generalization](https://arxiv.org/abs/2502.04428)

Yu-Neng Chuang, Leisheng Yu, Guanchu Wang, Lizhe Zhang, Zirui Liu, Xuanting Cai, Yang Sui, Vladimir Braverman, Xia Hu | |[Paper](https://arxiv.org/abs/2502.04428)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2502.04428)| [//]: #04/08

###### Blog

* [Claude 3.7 Sonnet](https://www.anthropic.com/news/claude-3-7-sonnet). Claude team. [[Paper]](https://www.anthropic.com/news/claude-3-7-sonnet)

#### Latent Reasoning

| Title & Authors | Introduction | Links |

|:--| :----: | :---:|

|[Beyond Chains of Thought: Benchmarking Latent-Space Reasoning Abilities in Large Language Models](https://arxiv.org/abs/2504.10615)

Thilo Hagendorff, Sarah Fabi | |[Paper](https://arxiv.org/abs/2504.10615)|[//]: #04/17

|[Paper](https://arxiv.org/abs/2504.10615)|[//]: #04/17

|[Distilling System 2 into System 1](https://arxiv.org/abs/2407.06023)

Ping Yu, Jing Xu, Jason Weston, Ilia Kulikov | |[Paper](https://arxiv.org/abs/2407.06023)|[//]: #03/16

|[Paper](https://arxiv.org/abs/2407.06023)|[//]: #03/16

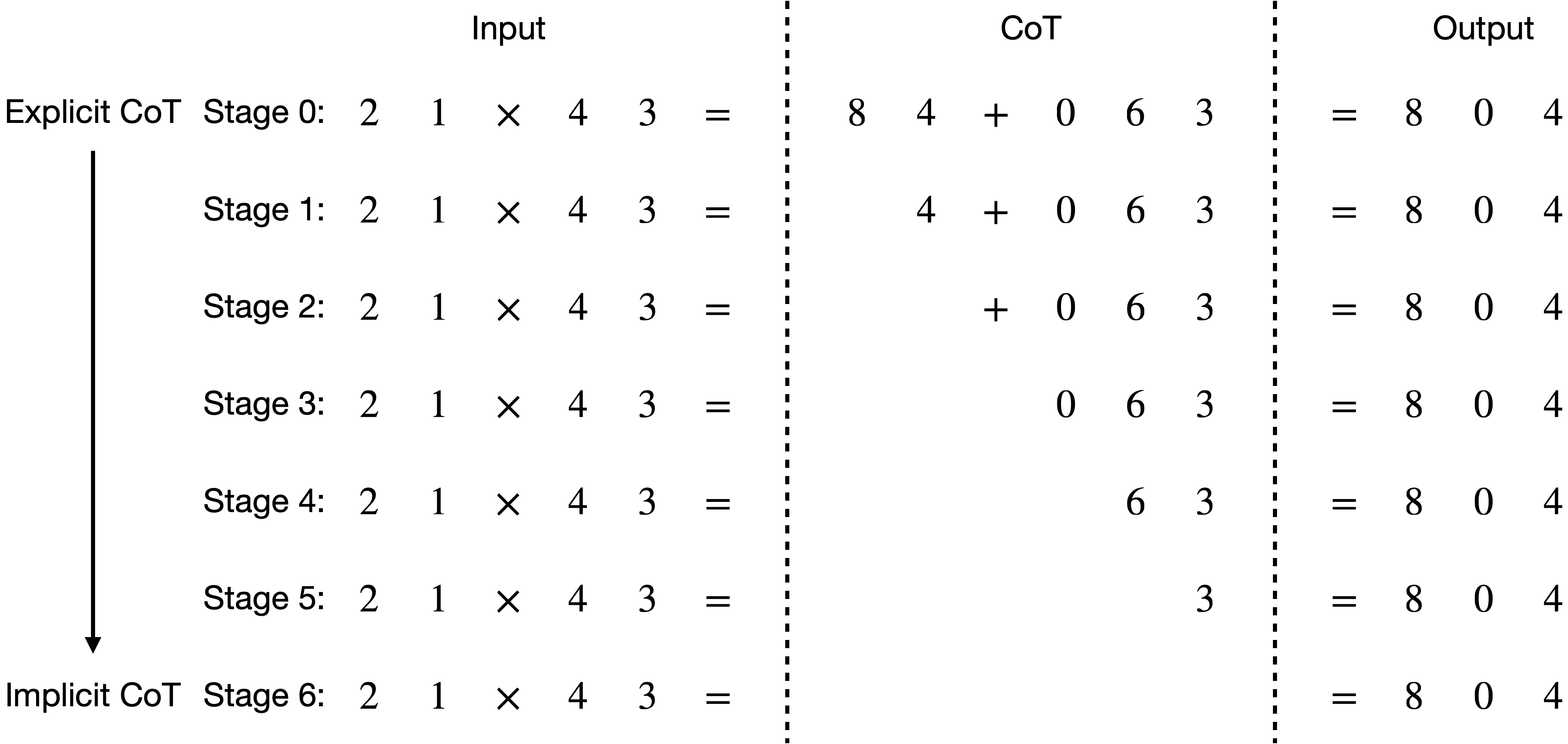

|[](https://github.com/da03/implicit_chain_of_thought/)

[Implicit Chain of Thought Reasoning via Knowledge Distillation](https://arxiv.org/abs/2311.01460)

Yuntian Deng, Kiran Prasad, Roland Fernandez, Paul Smolensky, Vishrav Chaudhary, Stuart Shieber | |[Github](https://github.com/da03/implicit_chain_of_thought/)

|[Github](https://github.com/da03/implicit_chain_of_thought/)

[Paper](https://arxiv.org/abs/2311.01460)| [//]: #04/08

|[](https://github.com/HKUNLP/diffusion-of-thoughts) []()

[Diffusion of Thoughts: Chain-of-Thought Reasoning in Diffusion Language Models](https://arxiv.org/abs/2402.07754)

Jiacheng Ye, Shansan Gong, Liheng Chen, Lin Zheng, Jiahui Gao, Han Shi, Chuan Wu, Xin Jiang, Zhenguo Li, Wei Bi, Lingpeng Kong | |[Github](https://github.com/HKUNLP/diffusion-of-thoughts)

|[Github](https://github.com/HKUNLP/diffusion-of-thoughts)

[Paper](https://arxiv.org/abs/2402.07754)| [//]: #04/08

|[](https://github.com/da03/Internalize_CoT_Step_by_Step)

[From Explicit CoT to Implicit CoT: Learning to Internalize CoT Step by Step](https://arxiv.org/abs/2405.14838)

Yuntian Deng, Yejin Choi, Stuart Shieber | |[Github](https://github.com/da03/Internalize_CoT_Step_by_Step)

|[Github](https://github.com/da03/Internalize_CoT_Step_by_Step)

[Paper](https://arxiv.org/abs/2405.14838)| [//]: #04/08

|[Compressed Chain of Thought: Efficient Reasoning Through Dense Representations](https://arxiv.org/abs/2412.13171)

Jeffrey Cheng, Benjamin Van Durme | |[Paper](https://arxiv.org/abs/2412.13171)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2412.13171)| [//]: #04/08

|[SoftCoT: Soft Chain-of-Thought for Efficient Reasoning with LLMs](https://arxiv.org/abs/2502.12134)

Yige Xu, Xu Guo, Zhiwei Zeng, Chunyan Miao | |[Paper](https://arxiv.org/abs/2502.12134)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2502.12134)| [//]: #04/08

| []()

[Reasoning with Latent Thoughts: On the Power of Looped Transformers](https://arxiv.org/abs/2502.17416)

Nikunj Saunshi, Nishanth Dikkala, Zhiyuan Li, Sanjiv Kumar, Sashank J. Reddi | |[Paper](https://arxiv.org/abs/2502.17416)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2502.17416)| [//]: #04/08

|[](https://github.com/qifanyu/RELAY)

[Enhancing Auto-regressive Chain-of-Thought through Loop-Aligned Reasoning](https://arxiv.org/abs/2502.08482)

Qifan Yu, Zhenyu He, Sijie Li, Xun Zhou, Jun Zhang, Jingjing Xu, Di He | |[Github](https://github.com/qifanyu/RELAY)

|[Github](https://github.com/qifanyu/RELAY)

[Paper](https://arxiv.org/abs/2502.08482)| [//]: #04/08

|[CODI: Compressing Chain-of-Thought into Continuous Space via Self-Distillation](https://arxiv.org/abs/2502.21074)

Zhenyi Shen, Hanqi Yan, Linhai Zhang, Zhanghao Hu, Yali Du, Yulan He | |[Paper](https://arxiv.org/abs/2502.21074)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2502.21074)| [//]: #04/08

|[](https://github.com/zjunlp/LightThinker)

[LightThinker: Thinking Step-by-Step Compression](https://arxiv.org/abs/2502.15589)

Jintian Zhang, Yuqi Zhu, Mengshu Sun, Yujie Luo, Shuofei Qiao, Lun Du, Da Zheng, Huajun Chen, Ningyu Zhang | |[Github](https://github.com/zjunlp/LightThinker)

|[Github](https://github.com/zjunlp/LightThinker)

[Paper](https://arxiv.org/abs/2502.15589)| [//]: #04/08

|[](https://github.com/WANGXinyiLinda/planning_tokens) []()

[Guiding Language Model Reasoning with Planning Tokens](https://arxiv.org/abs/2310.05707)

Xinyi Wang, Lucas Caccia, Oleksiy Ostapenko, Xingdi Yuan, William Yang Wang, Alessandro Sordoni | |[Github](https://github.com/WANGXinyiLinda/planning_tokens)

|[Github](https://github.com/WANGXinyiLinda/planning_tokens)

[Paper](https://arxiv.org/abs/2310.05707)| [//]: #04/08

|[](https://github.com/JacobPfau/fillerTokens) []()

[Let's Think Dot by Dot: Hidden Computation in Transformer Language Models](https://arxiv.org/abs/2404.15758)

Jacob Pfau, William Merrill, Samuel R. Bowman | |[Github](https://github.com/JacobPfau/fillerTokens)

|[Github](https://github.com/JacobPfau/fillerTokens)

[Paper](https://arxiv.org/abs/2404.15758)| [//]: #04/08

|[](https://github.com/MingyuJ666/Disentangling-Memory-and-Reasoning)

[Disentangling Memory and Reasoning Ability in Large Language Models](https://arxiv.org/abs/2411.13504)

Mingyu Jin, Weidi Luo, Sitao Cheng, Xinyi Wang, Wenyue Hua, Ruixiang Tang, William Yang Wang, Yongfeng Zhang | |[Github](https://github.com/MingyuJ666/Disentangling-Memory-and-Reasoning)

|[Github](https://github.com/MingyuJ666/Disentangling-Memory-and-Reasoning)

[Paper](https://arxiv.org/abs/2411.13504)| [//]: #04/08

|[Token Assorted: Mixing Latent and Text Tokens for Improved Language Model Reasoning](https://arxiv.org/abs/2502.03275)

DiJia Su, Hanlin Zhu, Yingchen Xu, Jiantao Jiao, Yuandong Tian, Qinqing Zheng | |[Paper](https://arxiv.org/abs/2502.03275)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2502.03275)| [//]: #04/08

|[Training Large Language Models to Reason in a Continuous Latent Space](https://arxiv.org/abs/2412.06769)

Shibo Hao, Sainbayar Sukhbaatar, DiJia Su, Xian Li, Zhiting Hu, Jason Weston, Yuandong Tian | |[Paper](https://arxiv.org/abs/2412.06769)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2412.06769)| [//]: #04/08

|[](https://github.com/shawnricecake/Heima)

[Efficient Reasoning with Hidden Thinking](https://arxiv.org/abs/2501.19201)

Xuan Shen, Yizhou Wang, Xiangxi Shi, Yanzhi Wang, Pu Zhao, Jiuxiang Gu | |[Github](https://github.com/shawnricecake/Heima)

|[Github](https://github.com/shawnricecake/Heima)

[Paper](https://arxiv.org/abs/2501.19201)| [//]: #04/08

| []()

[Think before you speak: Training Language Models With Pause Tokens](https://arxiv.org/abs/2310.02226)

Sachin Goyal, Ziwei Ji, Ankit Singh Rawat, Aditya Krishna Menon, Sanjiv Kumar, Vaishnavh Nagarajan | |[Paper](https://arxiv.org/abs/2310.02226)| [//]: #04/08

|[Paper](https://arxiv.org/abs/2310.02226)| [//]: #04/08

|[](https://github.com/seal-rg/recurrent-pretraining)

[Scaling up Test-Time Compute with Latent Reasoning: A Recurrent Depth Approach](https://arxiv.org/abs/2502.05171)

Jonas Geiping, Sean McLeish, Neel Jain, John Kirchenbauer, Siddharth Singh, Brian R. Bartoldson, Bhavya Kailkhura, Abhinav Bhatele, Tom Goldstein | |[Github](https://github.com/seal-rg/recurrent-pretraining)

|[Github](https://github.com/seal-rg/recurrent-pretraining)

[Paper](https://arxiv.org/abs/2502.05171)| [//]: #04/08

|[Weight-of-Thought Reasoning: Exploring Neural Network Weights for Enhanced LLM Reasoning](https://arxiv.org/abs/2504.10646)

Saif Punjwani, Larry Heck | |[Paper](https://arxiv.org/abs/2504.10646)|[//]: #04/16

|[Paper](https://arxiv.org/abs/2504.10646)|[//]: #04/16

### Build SLM with Strong Reasoning Ability

#### Distillation

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[Teaching Small Language Models to Reason](https://arxiv.org/abs/2212.08410) <br> Lucie Charlotte Magister, Jonathan Mallinson, Jakub Adamek, Eric Malmi, Aliaksei Severyn | |[Paper](https://arxiv.org/abs/2212.08410)|[//]: #04/08
|[Mixed Distillation Helps Smaller Language Model Better Reasoning](https://arxiv.org/abs/2312.10730) <br> Chenglin Li, Qianglong Chen, Liangyue Li, Caiyu Wang, Yicheng Li, Zulong Chen, Yin Zhang | |[Paper](https://arxiv.org/abs/2312.10730)|[//]: #04/08
|[Small Models Struggle to Learn from Strong Reasoners](https://arxiv.org/abs/2502.12143) <br> Yuetai Li, Xiang Yue, Zhangchen Xu, Fengqing Jiang, Luyao Niu, Bill Yuchen Lin, Bhaskar Ramasubramanian, Radha Poovendran | |[Github](https://github.com/Small-Model-Gap/Small-Model-Learnability-Gap) <br> [Paper](https://arxiv.org/abs/2502.12143)|[//]: #04/08
|[Turning Dust into Gold: Distilling Complex Reasoning Capabilities from LLMs by Leveraging Negative Data](https://arxiv.org/abs/2312.12832) <br> Yiwei Li, Peiwen Yuan, Shaoxiong Feng, Boyuan Pan, Bin Sun, Xinglin Wang, Heda Wang, Kan Li | |[Github](https://github.com/Yiwei98/TDG) <br> [Paper](https://arxiv.org/abs/2312.12832)|[//]: #04/08
|[Teaching Small Language Models Reasoning through Counterfactual Distillation](https://aclanthology.org/2024.emnlp-main.333/) <br> Tao Feng, Yicheng Li, Li Chenglin, Hao Chen, Fei Yu, Yin Zhang | |[Paper](https://aclanthology.org/2024.emnlp-main.333/)|[//]: #04/08
|[Deconstructing Long Chain-of-Thought: A Structured Reasoning Optimization Framework for Long CoT Distillation](https://arxiv.org/abs/2503.16385) <br> Yijia Luo, Yulin Song, Xingyao Zhang, Jiaheng Liu, Weixun Wang, GengRu Chen, Wenbo Su, Bo Zheng | |[Paper](https://arxiv.org/abs/2503.16385)|[//]: #04/08
|[Small Language Models Need Strong Verifiers to Self-Correct Reasoning](https://arxiv.org/abs/2404.17140) <br> Yunxiang Zhang, Muhammad Khalifa, Lajanugen Logeswaran, Jaekyeom Kim, Moontae Lee, Honglak Lee, Lu Wang | |[Github](https://github.com/yunx-z/SCORE) <br> [Paper](https://arxiv.org/abs/2404.17140)|[//]: #04/08
|[Improving Mathematical Reasoning Capabilities of Small Language Models via Feedback-Driven Distillation](https://arxiv.org/abs/2411.14698) <br> Xunyu Zhu, Jian Li, Can Ma, Weiping Wang | |[Paper](https://arxiv.org/abs/2411.14698)|[//]: #04/08
|[SKIntern: Internalizing Symbolic Knowledge for Distilling Better CoT Capabilities into Small Language Models](https://arxiv.org/abs/2409.13183) <br> Huanxuan Liao, Shizhu He, Yupu Hao, Xiang Li, Yuanzhe Zhang, Jun Zhao, Kang Liu | |[Github](https://github.com/Xnhyacinth/SKIntern) <br> [Paper](https://arxiv.org/abs/2409.13183)|[//]: #04/08
|[Probe then Retrieve and Reason: Distilling Probing and Reasoning Capabilities into Smaller Language Models](https://aclanthology.org/2024.lrec-main.1140.pdf) <br> Yichun Zhao, Shuheng Zhou, Huijia Zhu | |[Paper](https://aclanthology.org/2024.lrec-main.1140.pdf)|[//]: #04/08
|[Thinking Slow, Fast: Scaling Inference Compute with Distilled Reasoners](https://arxiv.org/abs/2502.20339) <br> Daniele Paliotta, Junxiong Wang, Matteo Pagliardini, Kevin Y. Li, Aviv Bick, J. Zico Kolter, Albert Gu, François Fleuret, Tri Dao | |[Paper](https://arxiv.org/abs/2502.20339)|[//]: #04/08
|[Distilling Reasoning Ability from Large Language Models with Adaptive Thinking](https://arxiv.org/abs/2404.09170) <br> Xiaoshu Chen, Sihang Zhou, Ke Liang, Xinwang Liu | |[Paper](https://arxiv.org/abs/2404.09170)|[//]: #04/08
|[Unveiling the Key Factors for Distilling Chain-of-Thought Reasoning](https://arxiv.org/abs/2502.18001) <br> Xinghao Chen, Zhijing Sun, Wenjin Guo, Miaoran Zhang, Yanjun Chen, Yirong Sun, Hui Su, Yijie Pan, Dietrich Klakow, Wenjie Li, Xiaoyu Shen | |[Github](https://github.com/EIT-NLP/Distilling-CoT-Reasoning) <br> [Paper](https://arxiv.org/abs/2502.18001)|[//]: #04/08

#### Quantization and Pruning

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[Towards Reasoning Ability of Small Language Models](https://arxiv.org/abs/2502.11569) <br> Gaurav Srivastava, Shuxiang Cao, Xuan Wang | |[Paper](https://arxiv.org/abs/2502.11569)|[//]: #04/14
|[Quantization Hurts Reasoning? An Empirical Study on Quantized Reasoning Models](https://arxiv.org/abs/2504.04823) <br> Ruikang Liu, Yuxuan Sun, Manyi Zhang, Haoli Bai, Xianzhi Yu, Tiezheng Yu, Chun Yuan, Lu Hou | |[Github](https://github.com/ruikangliu/Quantized-Reasoning-Models) <br> [Paper](https://arxiv.org/abs/2504.04823)|[//]: #04/14
|[When Reasoning Meets Compression: Benchmarking Compressed Large Reasoning Models on Complex Reasoning Tasks](https://arxiv.org/abs/2504.02010) <br> Nan Zhang, Yusen Zhang, Prasenjit Mitra, Rui Zhang | |[Paper](https://arxiv.org/abs/2504.02010)|[//]: #04/14

#### RL-based Methods

| Title & Authors | Introduction | Links |

|:--| :----: | :---:|

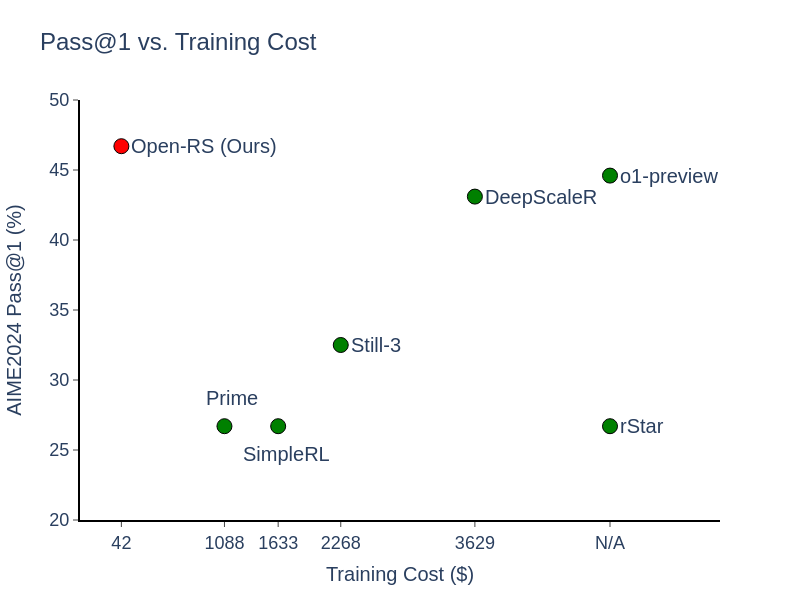

|[](https://github.com/knoveleng/open-rs)

[Reinforcement Learning for Reasoning in Small LLMs: What Works and What Doesn't](https://arxiv.org/abs/2503.16219)

Quy-Anh Dang, Chris Ngo |

|[Github](https://github.com/knoveleng/open-rs)

|[Github](https://github.com/knoveleng/open-rs)

[Paper](https://arxiv.org/abs/2503.16219)| [//]: #04/08

|[](https://github.com/hkust-nlp/simpleRL-reason)

[SimpleRL-Zoo: Investigating and Taming Zero Reinforcement Learning for Open Base Models in the Wild](https://arxiv.org/abs/2503.18892)

Weihao Zeng, Yuzhen Huang, Qian Liu, Wei Liu, Keqing He, Zejun Ma, Junxian He | |[Github](https://github.com/hkust-nlp/simpleRL-reason)

|[Github](https://github.com/hkust-nlp/simpleRL-reason)

[Paper](https://arxiv.org/abs/2503.18892)| [//]: #04/08

###### Repo

* [DeepScaleR](https://github.com/agentica-project/deepscaler). DeepScaleR team. [Webpage](https://agentica-project.com/)

### Let Decoding More Efficient

#### Efficient TTS

| Title & Authors | Introduction | Links |

|:--| :----: | :---:|

|[Think Deep, Think Fast: Investigating Efficiency of Verifier-free Inference-time-scaling Methods](https://arxiv.org/abs/2504.14047)

Junlin Wang, Shang Zhu, Jon Saad-Falcon, Ben Athiwaratkun, Qingyang Wu, Jue Wang, Shuaiwen Leon Song, Ce Zhang, Bhuwan Dhingra, James Zou | |[Paper](https://arxiv.org/abs/2504.14047)| [//]: #04/23

|[Paper](https://arxiv.org/abs/2504.14047)| [//]: #04/23

|[](https://github.com/IAAR-Shanghai/xVerify)

[xVerify: Efficient Answer Verifier for Reasoning Model Evaluations](https://arxiv.org/abs/2504.10481)

Ding Chen, Qingchen Yu, Pengyuan Wang, Wentao Zhang, Bo Tang, Feiyu Xiong, Xinchi Li, Minchuan Yang, Zhiyu Li | |[Github](https://github.com/IAAR-Shanghai/xVerify)

|[Github](https://github.com/IAAR-Shanghai/xVerify)

[Paper](https://arxiv.org/abs/2504.10481)| [//]: #04/17

|[](https://github.com/Pranjal2041/AdaptiveConsistency)

[Let's Sample Step by Step: Adaptive-Consistency for Efficient Reasoning and Coding with LLMs](https://arxiv.org/abs/2305.11860)

Pranjal Aggarwal, Aman Madaan, Yiming Yang, Mausam | |[Github](https://github.com/Pranjal2041/AdaptiveConsistency)

|[Github](https://github.com/Pranjal2041/AdaptiveConsistency)

[Paper](https://arxiv.org/abs/2305.11860)| [//]: #04/08

|[](https://github.com/Yiwei98/ESC) []()

[Escape Sky-high Cost: Early-stopping Self-Consistency for Multi-step Reasoning](https://arxiv.org/abs/2401.10480)

Yiwei Li, Peiwen Yuan, Shaoxiong Feng, Boyuan Pan, Xinglin Wang, Bin Sun, Heda Wang, Kan Li | |[Github](https://github.com/Yiwei98/ESC)

|[Github](https://github.com/Yiwei98/ESC)

[Paper](https://arxiv.org/abs/2401.10480)| [//]: #04/08

|[](https://github.com/WangXinglin/DSC) []()

[Make Every Penny Count: Difficulty-Adaptive Self-Consistency for Cost-Efficient Reasoning](https://arxiv.org/abs/2408.13457)

Xinglin Wang, Shaoxiong Feng, Yiwei Li, Peiwen Yuan, Yueqi Zhang, Chuyi Tan, Boyuan Pan, Yao Hu, Kan Li | |[Github](https://github.com/WangXinglin/DSC)

|[Github](https://github.com/WangXinglin/DSC)

[Paper](https://arxiv.org/abs/2408.13457)| [//]: #04/08

|[Path-Consistency: Prefix Enhancement for Efficient Inference in LLM](https://arxiv.org/abs/2409.01281) <br> Jiace Zhu, Yingtao Shen, Jie Zhao, An Zou | |[Paper](https://arxiv.org/abs/2409.01281)| [//]: #04/08
|[Bridging Internal Probability and Self-Consistency for Effective and Efficient LLM Reasoning](https://arxiv.org/abs/2502.00511) <br> Zhi Zhou, Tan Yuhao, Zenan Li, Yuan Yao, Lan-Zhe Guo, Xiaoxing Ma, Yu-Feng Li | |[Paper](https://arxiv.org/abs/2502.00511)| [//]: #04/08
|[Confidence Improves Self-Consistency in LLMs](https://arxiv.org/abs/2502.06233) <br> Amir Taubenfeld, Tom Sheffer, Eran Ofek, Amir Feder, Ariel Goldstein, Zorik Gekhman, Gal Yona | |[Paper](https://arxiv.org/abs/2502.06233)| [//]: #04/08
|[Efficient Test-Time Scaling via Self-Calibration](https://arxiv.org/abs/2503.00031) <br> Chengsong Huang, Langlin Huang, Jixuan Leng, Jiacheng Liu, Jiaxin Huang | |[Github](https://github.com/Chengsong-Huang/Self-Calibration) <br> [Paper](https://arxiv.org/abs/2503.00031)| [//]: #04/08
|[Fast Best-of-N Decoding via Speculative Rejection](https://arxiv.org/abs/2410.20290) <br> Hanshi Sun, Momin Haider, Ruiqi Zhang, Huitao Yang, Jiahao Qiu, Ming Yin, Mengdi Wang, Peter Bartlett, Andrea Zanette | |[Github](https://github.com/Zanette-Labs/SpeculativeRejection) <br> [Paper](https://arxiv.org/abs/2410.20290)| [//]: #04/08
|[Sampling-Efficient Test-Time Scaling: Self-Estimating the Best-of-N Sampling in Early Decoding](https://arxiv.org/abs/2503.01422) <br> Yiming Wang, Pei Zhang, Siyuan Huang, Baosong Yang, Zhuosheng Zhang, Fei Huang, Rui Wang | |[Paper](https://arxiv.org/abs/2503.01422)| [//]: #04/08
|[FastMCTS: A Simple Sampling Strategy for Data Synthesis](https://www.arxiv.org/abs/2502.11476) <br> Peiji Li, Kai Lv, Yunfan Shao, Yichuan Ma, Linyang Li, Xiaoqing Zheng, Xipeng Qiu, Qipeng Guo | |[Paper](https://www.arxiv.org/abs/2502.11476)| [//]: #04/08
|[Non-myopic Generation of Language Models for Reasoning and Planning](https://arxiv.org/abs/2410.17195) <br> Chang Ma, Haiteng Zhao, Junlei Zhang, Junxian He, Lingpeng Kong | |[Github](https://github.com/chang-github-00/LLM-Predictive-Decoding) <br> [Paper](https://arxiv.org/abs/2410.17195)| [//]: #04/08
|[Language Models can Self-Improve at State-Value Estimation for Better Search](https://arxiv.org/abs/2503.02878) <br> Ethan Mendes, Alan Ritter | |[Github](https://github.com/ethanm88/self-taught-lookahead) <br> [Paper](https://arxiv.org/abs/2503.02878)| [//]: #04/08
|[ϕ-Decoding: Adaptive Foresight Sampling for Balanced Inference-Time Exploration and Exploitation](https://arxiv.org/abs/2503.13288) <br> Fangzhi Xu, Hang Yan, Chang Ma, Haiteng Zhao, Jun Liu, Qika Lin, Zhiyong Wu | |[Github](https://github.com/xufangzhi/phi-Decoding) <br> [Paper](https://arxiv.org/abs/2503.13288)| [//]: #04/08
|[Dynamic Parallel Tree Search for Efficient LLM Reasoning](https://arxiv.org/abs/2502.16235) <br> Yifu Ding, Wentao Jiang, Shunyu Liu, Yongcheng Jing, Jinyang Guo, Yingjie Wang, Jing Zhang, Zengmao Wang, Ziwei Liu, Bo Du, Xianglong Liu, Dacheng Tao | |[Paper](https://arxiv.org/abs/2502.16235)| [//]: #04/08
|[Don't Get Lost in the Trees: Streamlining LLM Reasoning by Overcoming Tree Search Exploration Pitfalls](https://arxiv.org/abs/2502.11183) <br> Ante Wang, Linfeng Song, Ye Tian, Dian Yu, Haitao Mi, Xiangyu Duan, Zhaopeng Tu, Jinsong Su, Dong Yu | |[Github](https://github.com/Soistesimmer/Fetch) <br> [Paper](https://arxiv.org/abs/2502.11183)| [//]: #04/08

#### Other Optimal Methods

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[Learning Adaptive Parallel Reasoning with Language Models](https://arxiv.org/abs/2504.15466) <br> Jiayi Pan, Xiuyu Li, Long Lian, Charlie Snell, Yifei Zhou, Adam Yala, Trevor Darrell, Kurt Keutzer, Alane Suhr | |[Github](https://github.com/Parallel-Reasoning/APR) <br> [Paper](https://arxiv.org/abs/2504.15466)| [//]: #04/23
|[THOUGHTTERMINATOR: Benchmarking, Calibrating, and Mitigating Overthinking in Reasoning Models](https://arxiv.org/abs/2504.13367) <br> Xiao Pu, Michael Saxon, Wenyue Hua, William Yang Wang | |[Paper](https://arxiv.org/abs/2504.13367)| [//]: #04/21
|[Skeleton-of-Thought: Prompting LLMs for Efficient Parallel Generation](https://arxiv.org/abs/2307.15337) <br> Xuefei Ning, Zinan Lin, Zixuan Zhou, Zifu Wang, Huazhong Yang, Yu Wang | |[Github](https://github.com/imagination-research/sot) <br> [Paper](https://arxiv.org/abs/2307.15337)| [//]: #04/08
|[Adaptive Skeleton Graph Decoding](https://arxiv.org/abs/2402.12280) <br> Shuowei Jin, Yongji Wu, Haizhong Zheng, Qingzhao Zhang, Matthew Lentz, Z. Morley Mao, Atul Prakash, Feng Qian, Danyang Zhuo | |[Paper](https://arxiv.org/abs/2402.12280)| [//]: #04/08
|[Reward-Guided Speculative Decoding for Efficient LLM Reasoning](https://arxiv.org/abs/2501.19324) <br> Baohao Liao, Yuhui Xu, Hanze Dong, Junnan Li, Christof Monz, Silvio Savarese, Doyen Sahoo, Caiming Xiong | |[Github](https://github.com/BaohaoLiao/RSD) <br> [Paper](https://arxiv.org/abs/2501.19324)| [//]: #04/08
|[Meta-Reasoner: Dynamic Guidance for Optimized Inference-time Reasoning in Large Language Models](https://arxiv.org/abs/2502.19918) <br> Yuan Sui, Yufei He, Tri Cao, Simeng Han, Bryan Hooi | |[Paper](https://arxiv.org/abs/2502.19918)| [//]: #04/08
|[Atom of Thoughts for Markov LLM Test-Time Scaling](https://arxiv.org/abs/2502.12018) <br> Fengwei Teng, Zhaoyang Yu, Quan Shi, Jiayi Zhang, Chenglin Wu, Yuyu Luo | |[Github](https://github.com/qixucen/atom) <br> [Paper](https://arxiv.org/abs/2502.12018)| [//]: #04/08
|[DISC: Dynamic Decomposition Improves LLM Inference Scaling](https://arxiv.org/abs/2502.16706) <br> Jonathan Light, Wei Cheng, Wu Yue, Masafumi Oyamada, Mengdi Wang, Santiago Paternain, Haifeng Chen | |[Paper](https://arxiv.org/abs/2502.16706)| [//]: #04/08
|[From Chaos to Order: The Atomic Reasoner Framework for Fine-grained Reasoning in Large Language Models](https://arxiv.org/abs/2503.15944) <br> Jinyi Liu, Yan Zheng, Rong Cheng, Qiyu Wu, Wei Guo, Fei Ni, Hebin Liang, Yifu Yuan, Hangyu Mao, Fuzheng Zhang, Jianye Hao | |[Paper](https://arxiv.org/abs/2503.15944)| [//]: #04/08
|[Can Atomic Step Decomposition Enhance the Self-structured Reasoning of Multimodal Large Models?](https://arxiv.org/abs/2503.06252) <br> Kun Xiang, Zhili Liu, Zihao Jiang, Yunshuang Nie, Kaixin Cai, Yiyang Yin, Runhui Huang, Haoxiang Fan, Hanhui Li, Weiran Huang, Yihan Zeng, Yu-Jie Yuan, Jianhua Han, Lanqing Hong, Hang Xu, Xiaodan Liang | |[Github](https://github.com/Quinn777/AtomThink) <br> [Paper](https://arxiv.org/abs/2503.06252)| [//]: #04/08
|[Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters](https://arxiv.org/abs/2408.03314) <br> Charlie Snell, Jaehoon Lee, Kelvin Xu, Aviral Kumar | |[Paper](https://arxiv.org/abs/2408.03314)| [//]: #04/08
|[Inference Scaling Laws: An Empirical Analysis of Compute-Optimal Inference for Problem-Solving with Language Models](https://arxiv.org/abs/2408.00724) <br> Yangzhen Wu, Zhiqing Sun, Shanda Li, Sean Welleck, Yiming Yang | |[Github](https://github.com/thu-wyz/inference_scaling) <br> [Paper](https://arxiv.org/abs/2408.00724)| [//]: #04/08
|[Optimizing Test-Time Compute via Meta Reinforcement Fine-Tuning](https://arxiv.org/abs/2503.07572) <br> Yuxiao Qu, Matthew Y. R. Yang, Amrith Setlur, Lewis Tunstall, Edward Emanuel Beeching, Ruslan Salakhutdinov, Aviral Kumar | |[Github](https://github.com/CMU-AIRe/MRT) <br> [Paper](https://arxiv.org/abs/2503.07572)| [//]: #04/08
|[SpecReason: Fast and Accurate Inference-Time Compute via Speculative Reasoning](https://arxiv.org/abs/2504.07891) <br> Rui Pan, Yinwei Dai, Zhihao Zhang, Gabriele Oliaro, Zhihao Jia, Ravi Netravali | |[Github](https://github.com/ruipeterpan/specreason) <br> [Paper](https://arxiv.org/abs/2504.07891)| [//]: #04/14

### Evaluation and Benchmarks

#### Metric

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[Do NOT Think That Much for 2+3=? On the Overthinking of o1-Like LLMs](https://arxiv.org/abs/2412.21187) <br> Xingyu Chen, Jiahao Xu, Tian Liang, Zhiwei He, Jianhui Pang, Dian Yu, Linfeng Song, Qiuzhi Liu, Mengfei Zhou, Zhuosheng Zhang, Rui Wang, Zhaopeng Tu, Haitao Mi, Dong Yu | |[Paper](https://arxiv.org/abs/2412.21187)|[//]: #03/16
|[CoT-Valve: Length-Compressible Chain-of-Thought Tuning](https://arxiv.org/abs/2502.09601) <br> Xinyin Ma, Guangnian Wan, Runpeng Yu, Gongfan Fang, Xinchao Wang | |[Github](https://github.com/horseee/CoT-Valve) <br> [Paper](https://arxiv.org/abs/2502.09601)|[//]: #03/16
|[Non-Determinism of "Deterministic" LLM Settings](https://arxiv.org/abs/2408.04667) <br> Berk Atil, Sarp Aykent, Alexa Chittams, Lisheng Fu, Rebecca J. Passonneau, Evan Radcliffe, Guru Rajan Rajagopal, Adam Sloan, Tomasz Tudrej, Ferhan Ture, Zhe Wu, Lixinyu Xu, Breck Baldwin | |[Github](https://github.com/breckbaldwin/llm-stability) <br> [Paper](https://arxiv.org/abs/2408.04667)| [//]: #04/08
|[The Danger of Overthinking: Examining the Reasoning-Action Dilemma in Agentic Tasks](https://arxiv.org/abs/2502.08235) <br> Alejandro Cuadron, Dacheng Li, Wenjie Ma, Xingyao Wang, Yichuan Wang, Siyuan Zhuang, Shu Liu, Luis Gaspar Schroeder, Tian Xia, Huanzhi Mao, Nicholas Thumiger, Aditya Desai, Ion Stoica, Ana Klimovic, Graham Neubig, Joseph E. Gonzalez | |[Paper](https://arxiv.org/abs/2502.08235)| [//]: #04/08
|[Evaluating Large Language Models Trained on Code](https://arxiv.org/abs/2107.03374) <br> Mark Chen, Jerry Tworek, et al. | |[Paper](https://arxiv.org/abs/2107.03374)| [//]: #04/08
|[τ-bench: A Benchmark for Tool-Agent-User Interaction in Real-World Domains](https://arxiv.org/abs/2406.12045) <br> Shunyu Yao, Noah Shinn, Pedram Razavi, Karthik Narasimhan | |[Paper](https://arxiv.org/abs/2406.12045)| [//]: #04/08
|[Are Your LLMs Capable of Stable Reasoning?](https://arxiv.org/abs/2412.13147) <br> Junnan Liu, Hongwei Liu, Linchen Xiao, Ziyi Wang, Kuikun Liu, Songyang Gao, Wenwei Zhang, Songyang Zhang, Kai Chen | |[Github](https://github.com/open-compass/GPassK) <br> [Paper](https://arxiv.org/abs/2412.13147)| [//]: #04/08

#### Benchmarks and Datasets

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[LongPerceptualThoughts: Distilling System-2 Reasoning for System-1 Perception](https://arxiv.org/abs/2504.15362) <br> Yuan-Hong Liao, Sven Elflein, Liu He, Laura Leal-Taixé, Yejin Choi, Sanja Fidler, David Acuna | |[Paper](https://arxiv.org/abs/2504.15362)| [//]: #04/23
|[THOUGHTTERMINATOR: Benchmarking, Calibrating, and Mitigating Overthinking in Reasoning Models](https://arxiv.org/abs/2504.13367) <br> Xiao Pu, Michael Saxon, Wenyue Hua, William Yang Wang | |[Paper](https://arxiv.org/abs/2504.13367)| [//]: #04/21
|[Do NOT Think That Much for 2+3=? On the Overthinking of o1-Like LLMs](https://arxiv.org/abs/2412.21187) <br> Xingyu Chen, Jiahao Xu, Tian Liang, Zhiwei He, Jianhui Pang, Dian Yu, Linfeng Song, Qiuzhi Liu, Mengfei Zhou, Zhuosheng Zhang, Rui Wang, Zhaopeng Tu, Haitao Mi, Dong Yu | |[Paper](https://arxiv.org/abs/2412.21187)|[//]: #03/16
|[The Danger of Overthinking: Examining the Reasoning-Action Dilemma in Agentic Tasks](https://arxiv.org/abs/2502.08235) <br> Alejandro Cuadron, Dacheng Li, Wenjie Ma, Xingyao Wang, Yichuan Wang, Siyuan Zhuang, Shu Liu, Luis Gaspar Schroeder, Tian Xia, Huanzhi Mao, Nicholas Thumiger, Aditya Desai, Ion Stoica, Ana Klimovic, Graham Neubig, Joseph E. Gonzalez | |[Paper](https://arxiv.org/abs/2502.08235)| [//]: #04/08
|[Inference-Time Computations for LLM Reasoning and Planning: A Benchmark and Insights](https://arxiv.org/abs/2502.12521) <br> Shubham Parashar, Blake Olson, Sambhav Khurana, Eric Li, Hongyi Ling, James Caverlee, Shuiwang Ji | |[Github](https://github.com/divelab/sys2bench) <br> [Paper](https://arxiv.org/abs/2502.12521)| [//]: #04/08
|[Bag of Tricks for Inference-time Computation of LLM Reasoning](https://arxiv.org/abs/2502.07191) <br> Fan Liu, Wenshuo Chao, Naiqiang Tan, Hao Liu | |[Github](https://github.com/usail-hkust/benchmark_inference_time_computation_LLM) <br> [Paper](https://arxiv.org/abs/2502.07191)| [//]: #04/08
|[Can 1B LLM Surpass 405B LLM? Rethinking Compute-Optimal Test-Time Scaling](https://arxiv.org/abs/2502.06703) <br> Runze Liu, Junqi Gao, Jian Zhao, Kaiyan Zhang, Xiu Li, Biqing Qi, Wanli Ouyang, Bowen Zhou | |[Github](https://github.com/RyanLiu112/compute-optimal-tts) <br> [Paper](https://arxiv.org/abs/2502.06703)| [//]: #04/08
|[DNA Bench: When Silence is Smarter -- Benchmarking Over-Reasoning in Reasoning LLMs](https://arxiv.org/abs/2503.15793) <br> Masoud Hashemi, Oluwanifemi Bamgbose, Sathwik Tejaswi Madhusudhan, Jishnu Sethumadhavan Nair, Aman Tiwari, Vikas Yadav | |[Paper](https://arxiv.org/abs/2503.15793)| [//]: #04/08
|[S1-Bench: A Simple Benchmark for Evaluating System 1 Thinking Capability of Large Reasoning Models](https://arxiv.org/abs/2504.10368) <br> Wenyuan Zhang, Shuaiyi Nie, Xinghua Zhang, Zefeng Zhang, Tingwen Liu | |[Paper](https://arxiv.org/abs/2504.10368)| [//]: #04/14
|[VCR-Bench: A Comprehensive Evaluation Framework for Video Chain-of-Thought Reasoning](https://arxiv.org/abs/2504.07956) <br> Yukun Qi, Yiming Zhao, Yu Zeng, Xikun Bao, Wenxuan Huang, Lin Chen, Zehui Chen, Jie Zhao, Zhongang Qi, Feng Zhao | |[Github](https://github.com/zhishuifeiqian/VCR-Bench) <br> [Paper](https://arxiv.org/abs/2504.07956)| [//]: #04/16

### Background Papers

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model?](https://arxiv.org/abs/2504.13837) <br> Yang Yue, Zhiqi Chen, Rui Lu, Andrew Zhao, Zhaokai Wang, Yang Yue, Shiji Song, Gao Huang | |[Github](https://github.com/LeapLabTHU/limit-of-RLVR) <br> [Paper](https://arxiv.org/abs/2504.13837)| [//]: #04/22
|[Chain-of-Thought Prompting Elicits Reasoning in Large Language Models](https://arxiv.org/abs/2201.11903) <br> Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed Chi, Quoc Le, Denny Zhou | |[Paper](https://arxiv.org/abs/2201.11903)| [//]: #04/08
|[Tree of Thoughts: Deliberate Problem Solving with Large Language Models](https://arxiv.org/abs/2305.10601) <br> Shunyu Yao, Dian Yu, Jeffrey Zhao, Izhak Shafran, Thomas L. Griffiths, Yuan Cao, Karthik Narasimhan | |[Github](https://github.com/princeton-nlp/tree-of-thought-llm) <br> [Paper](https://arxiv.org/abs/2305.10601)| [//]: #04/08
|[Graph of Thoughts: Solving Elaborate Problems with Large Language Models](https://arxiv.org/abs/2308.09687) <br> Maciej Besta, Nils Blach, Ales Kubicek, Robert Gerstenberger, Michal Podstawski, Lukas Gianinazzi, Joanna Gajda, Tomasz Lehmann, Hubert Niewiadomski, Piotr Nyczyk, Torsten Hoefler | |[Github](https://github.com/spcl/graph-of-thoughts) <br> [Paper](https://arxiv.org/abs/2308.09687)| [//]: #04/08
|[Self-Consistency Improves Chain of Thought Reasoning in Language Models](https://arxiv.org/abs/2203.11171) <br> Xuezhi Wang, Jason Wei, Dale Schuurmans, Quoc Le, Ed Chi, Sharan Narang, Aakanksha Chowdhery, Denny Zhou | |[Paper](https://arxiv.org/abs/2203.11171)| [//]: #04/08
|[Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks](https://arxiv.org/abs/2211.12588) <br> Wenhu Chen, Xueguang Ma, Xinyi Wang, William W. Cohen | |[Github](https://github.com/TIGER-AI-Lab/Program-of-Thoughts) <br> [Paper](https://arxiv.org/abs/2211.12588)| [//]: #04/08
|[Chain-of-Symbol Prompting Elicits Planning in Large Langauge Models](https://arxiv.org/abs/2305.10276) <br> Hanxu Hu, Hongyuan Lu, Huajian Zhang, Yun-Ze Song, Wai Lam, Yue Zhang | |[Github](https://github.com/hanxuhu/chain-of-symbol-planning) <br> [Paper](https://arxiv.org/abs/2305.10276)| [//]: #04/08

#### Survey

| Title & Authors | Introduction | Links |
|:--| :----: | :---:|
|[Thinking Machines: A Survey of LLM based Reasoning Strategies](https://arxiv.org/abs/2503.10814) <br> Dibyanayan Bandyopadhyay, Soham Bhattacharjee, Asif Ekbal | |[Paper](https://arxiv.org/abs/2503.10814)| [//]: #04/08
|[From System 1 to System 2: A Survey of Reasoning Large Language Models](https://arxiv.org/abs/2502.17419) <br> Zhong-Zhi Li, Duzhen Zhang, Ming-Liang Zhang, Jiaxin Zhang, Zengyan Liu, Yuxuan Yao, Haotian Xu, Junhao Zheng, Pei-Jie Wang, Xiuyi Chen, Yingying Zhang, Fei Yin, Jiahua Dong, Zhijiang Guo, Le Song, Cheng-Lin Liu | |[Github](https://github.com/zzli2022/Awesome-System2-Reasoning-LLM) <br> [Paper](https://arxiv.org/abs/2502.17419)| [//]: #04/08

## Acknowledgement

This repository is inspired by [Awesome-Efficient-LLM](https://github.com/horseee/Awesome-Efficient-LLM/).

## Citation

```bibtex
@article{feng2025efficient,
  title={Efficient Reasoning Models: A Survey},
  author={Feng, Sicheng and Fang, Gongfan and Ma, Xinyin and Wang, Xinchao},
  journal={arXiv preprint arXiv:2504.10903},
  year={2025},
}
```